Radical Centrist, Transhumanist, Rationalist. 🏴🇭🇷

Self-improver in ⚕️ Health Mode ⚕️

https://t.co/KdIi1WetaJ


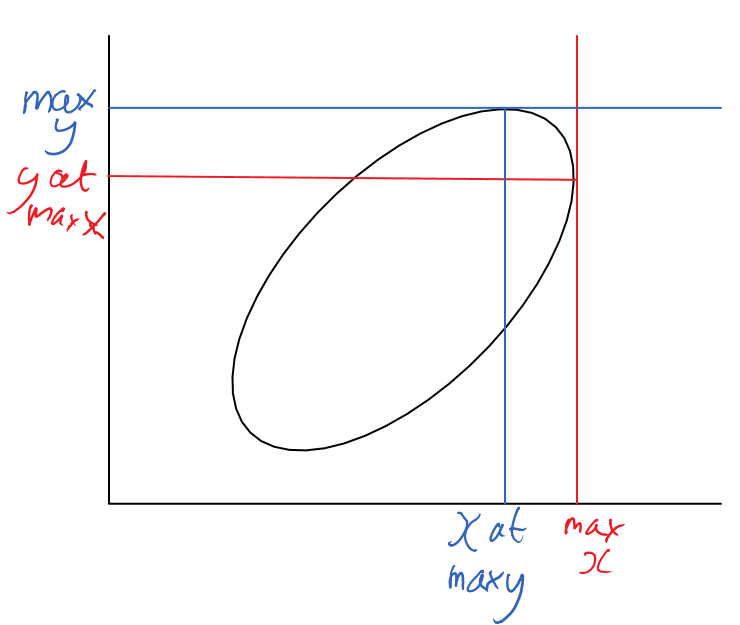

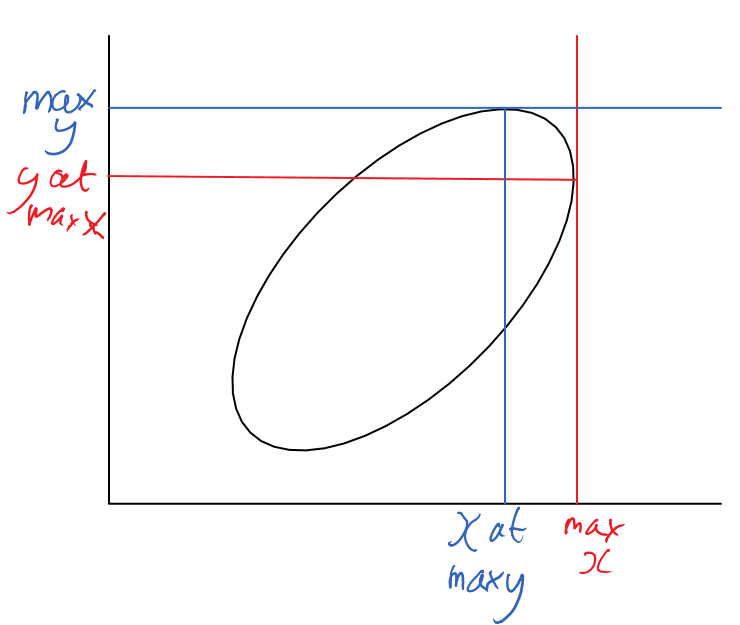

Why the tails come apart:

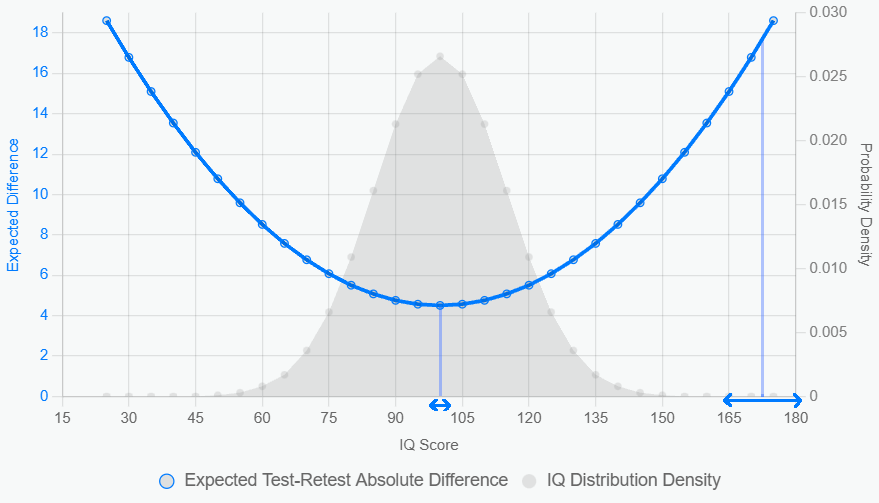

https://twitter.com/PeterJAngel/status/1935272392466505949

I went to the BLM website to see whether this land is some kind of amazing natural paradise.

Overall, the experiment was a disaster and has negatively impacted my health in…

https://twitter.com/CovfefeAnon/status/1911901921293533453

People naively attach outcomes to components of complex systems rather than to their interactions.

https://twitter.com/satrn_o/status/1911446740059779261

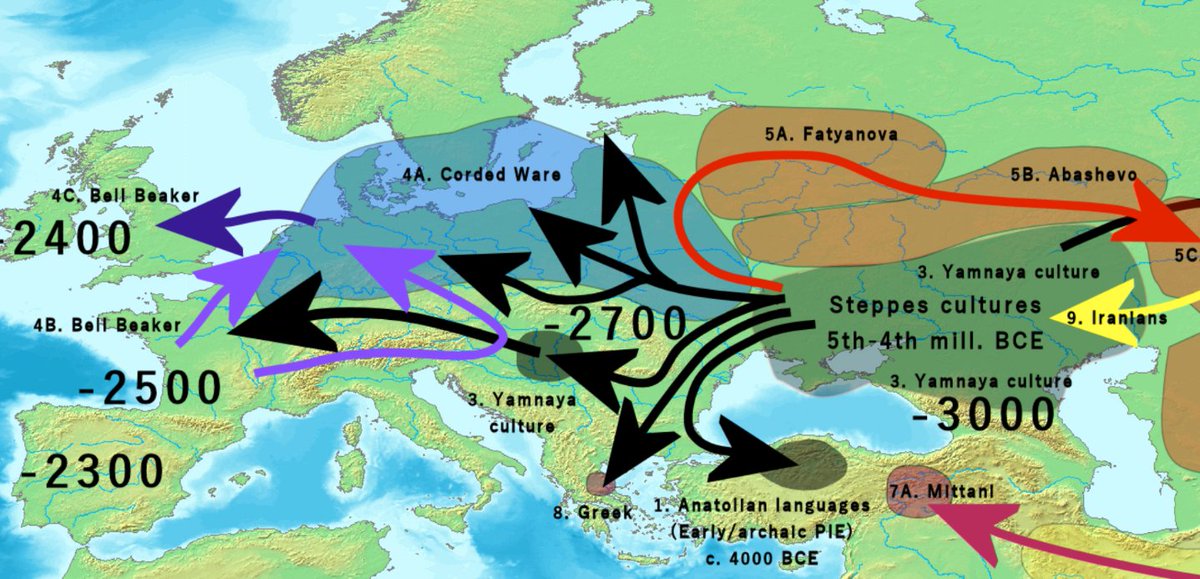

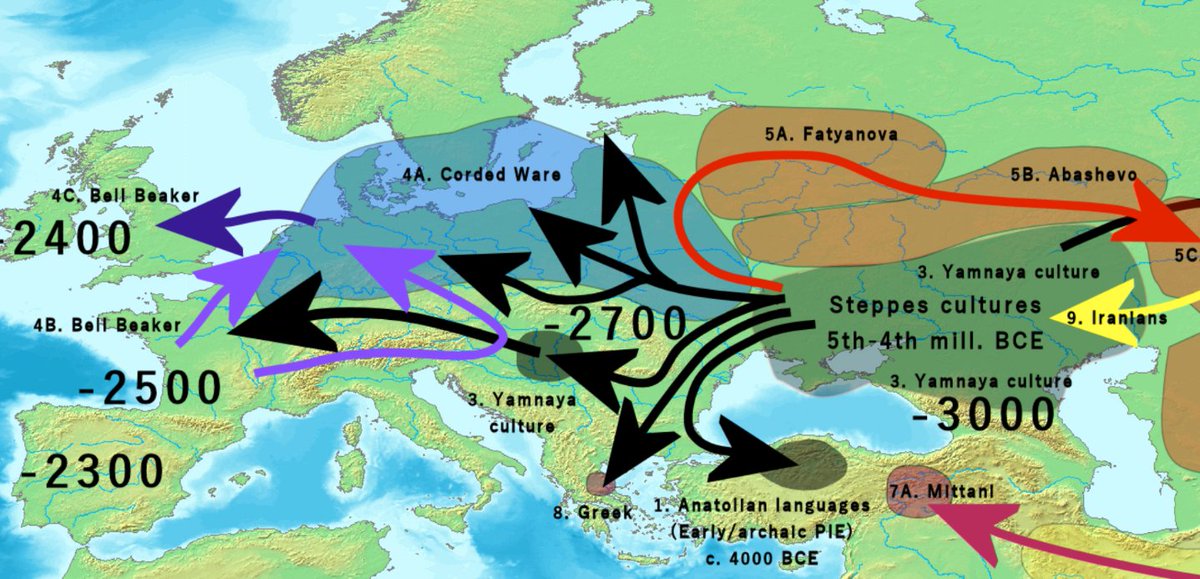

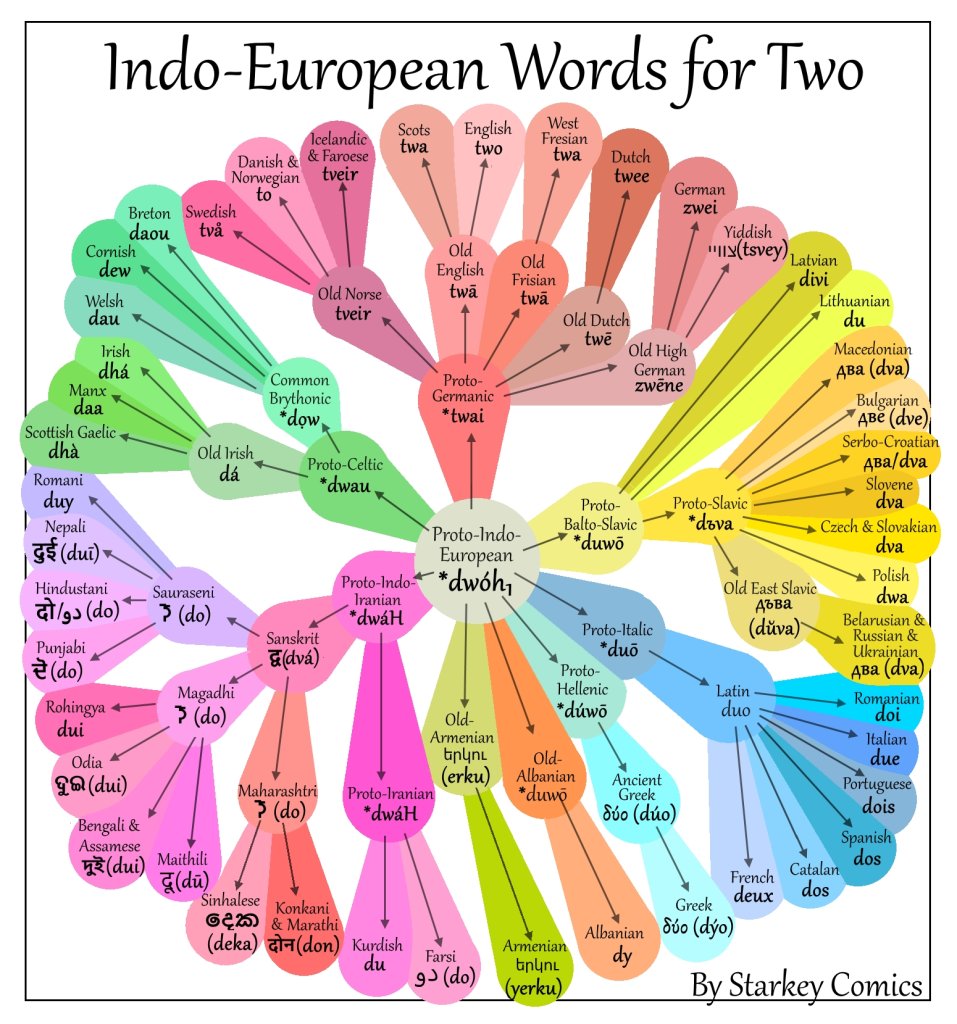

Every white person shares these three things:

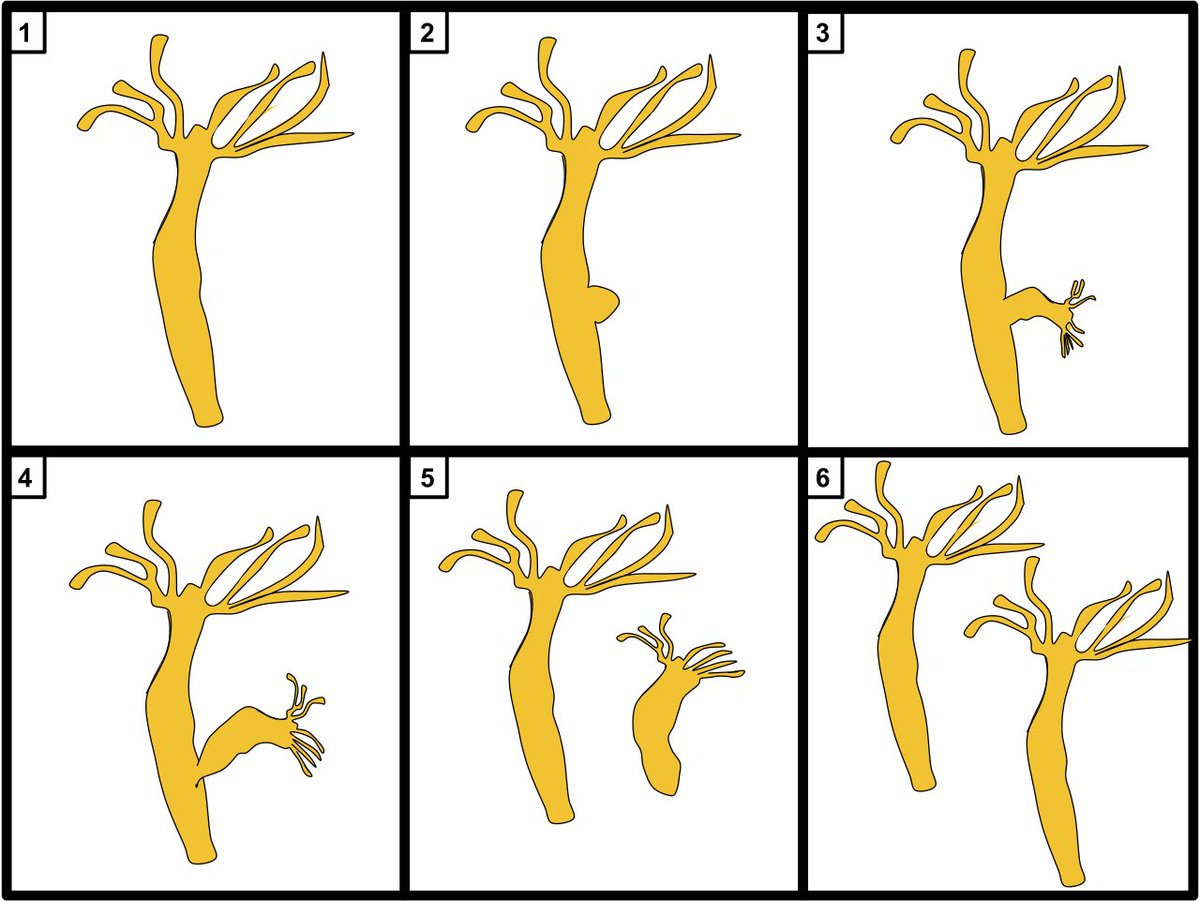

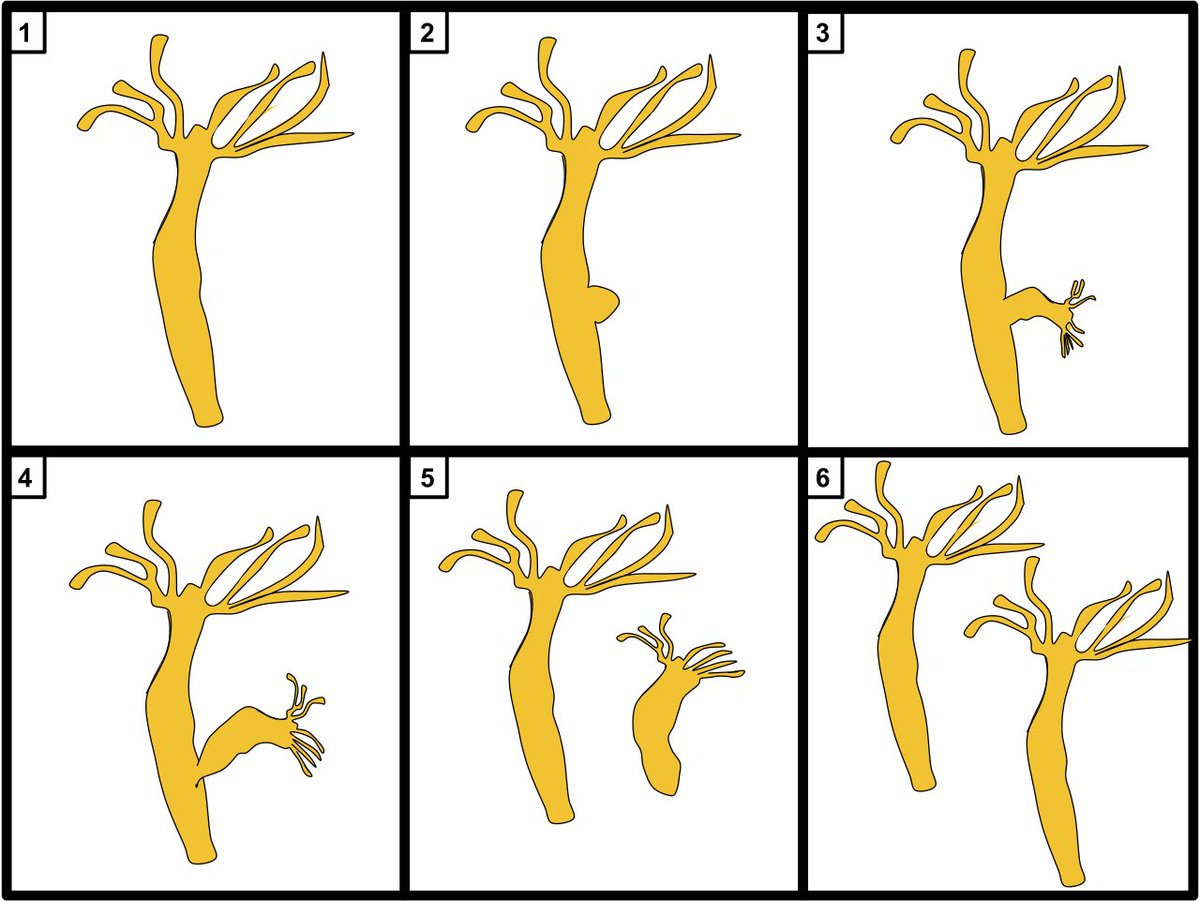

We can also model populations and world average IQ; here I have modelled only Europe and Africa as the "world":

Another way to look at this is the ratio between the physical mass of a factory and the mass per month of products.
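A minimal sketch of that ratio, assuming it is read as "how many months of output it takes for a factory to produce its own physical mass in product". The function name `mass_ratio_months` and the example figures are hypothetical and purely illustrative; they are not taken from the original thread.

```python
def mass_ratio_months(factory_mass_kg: float, output_kg_per_month: float) -> float:
    """Months of output needed to equal the factory's own physical mass.

    Hypothetical helper: divides factory mass by monthly product mass.
    """
    if output_kg_per_month <= 0:
        raise ValueError("monthly output must be positive")
    return factory_mass_kg / output_kg_per_month


# Hypothetical figures: a 50,000 t plant shipping 2,000 t of product per month.
print(mass_ratio_months(50_000_000, 2_000_000))  # 25.0
```

A lower ratio means the plant "pays back" its own mass in product faster.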

So it looks like the "further crime" may itself be a misdemeanor: it is a misdemeanor offense to conspire to promote the election of a candidate by some means which is itself illegal.

https://x.com/McAdooGordon/status/1796713753955717418

You can couple it with a reusable first stage rocket:

https://x.com/RokoMijic/status/1762110918954012789?s=20

Link to OP: https://twitter.com/RokoMijic/status/1753884886530732424

Playing around with these kinds of equations you can get situations like this where there's an initial period of growth followed by a first collapse, then subsequent civilizational peaks are actually lower.