To ensure that Artificial General Intelligence is open-source and not controlled by any single entity. @SentientEco @OpenAGISummit

2/ We evaluate fingerprints under a malicious model host

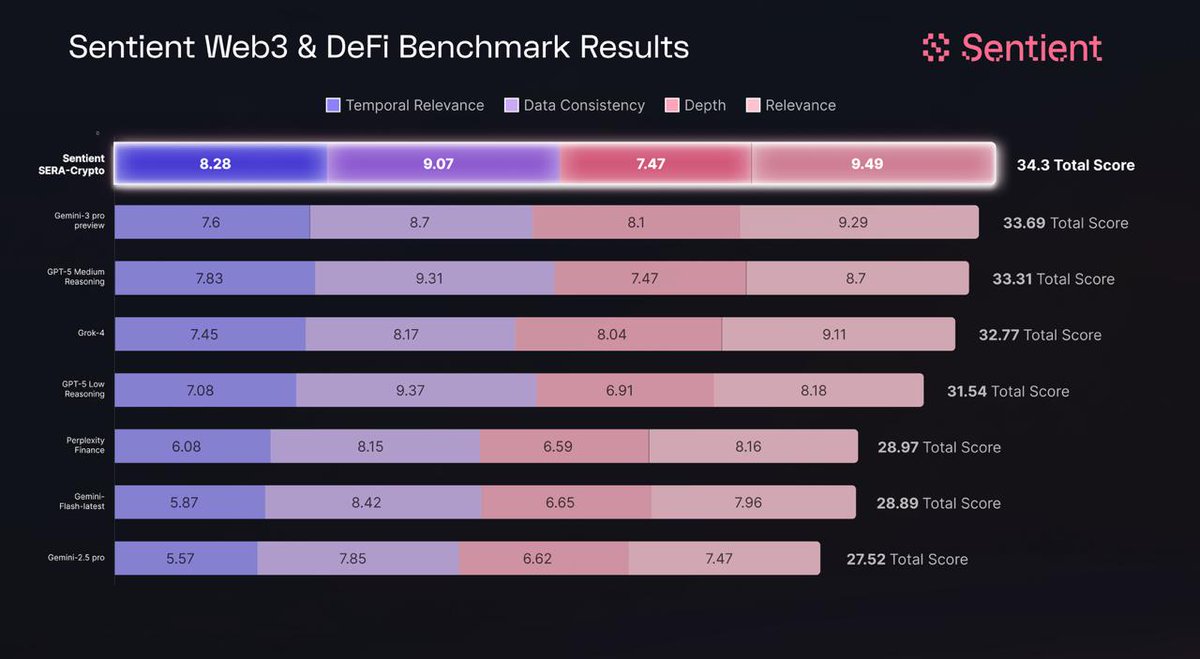

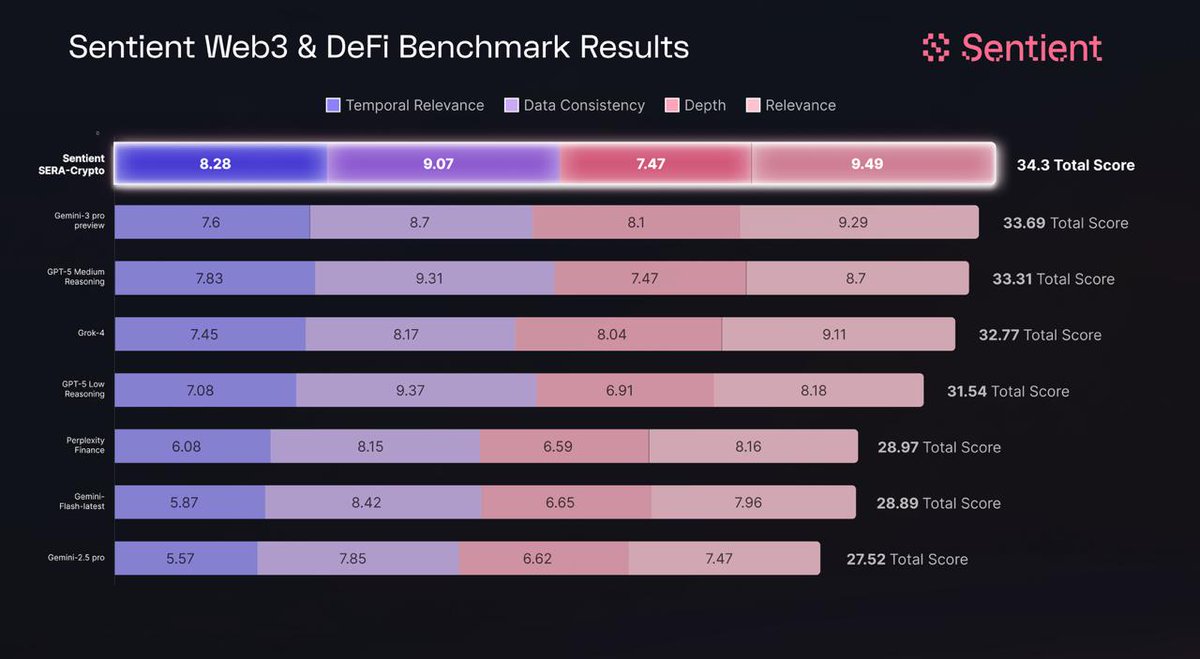

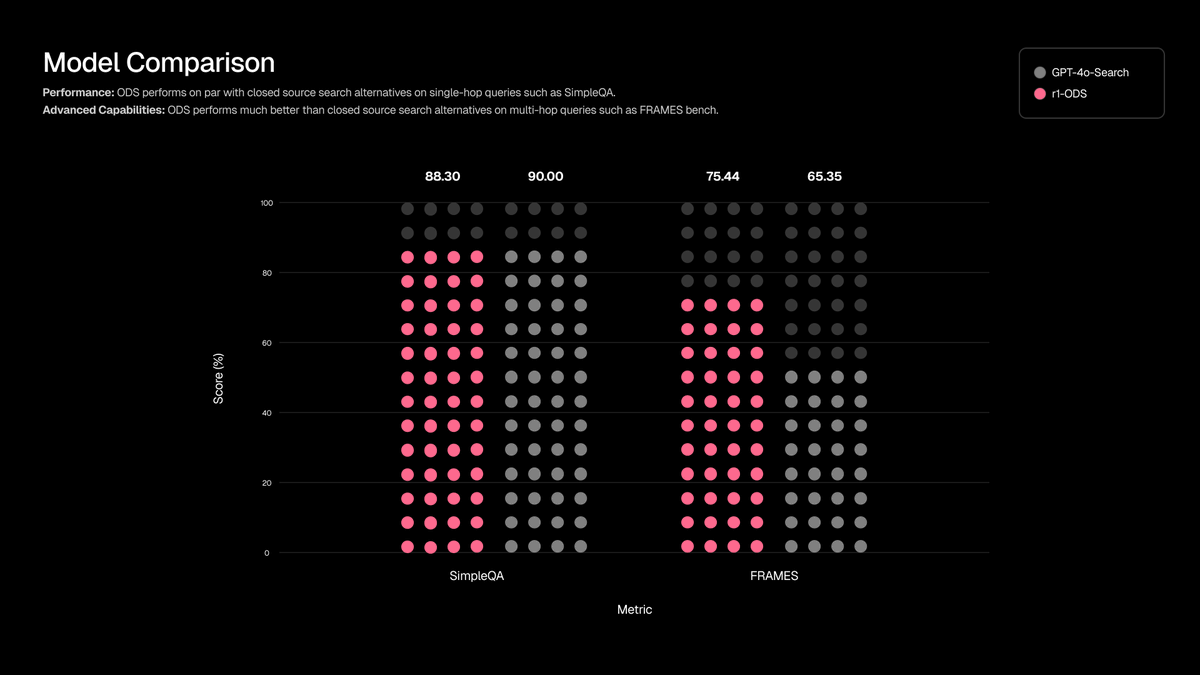

2/ Multidimensional queries challenge models

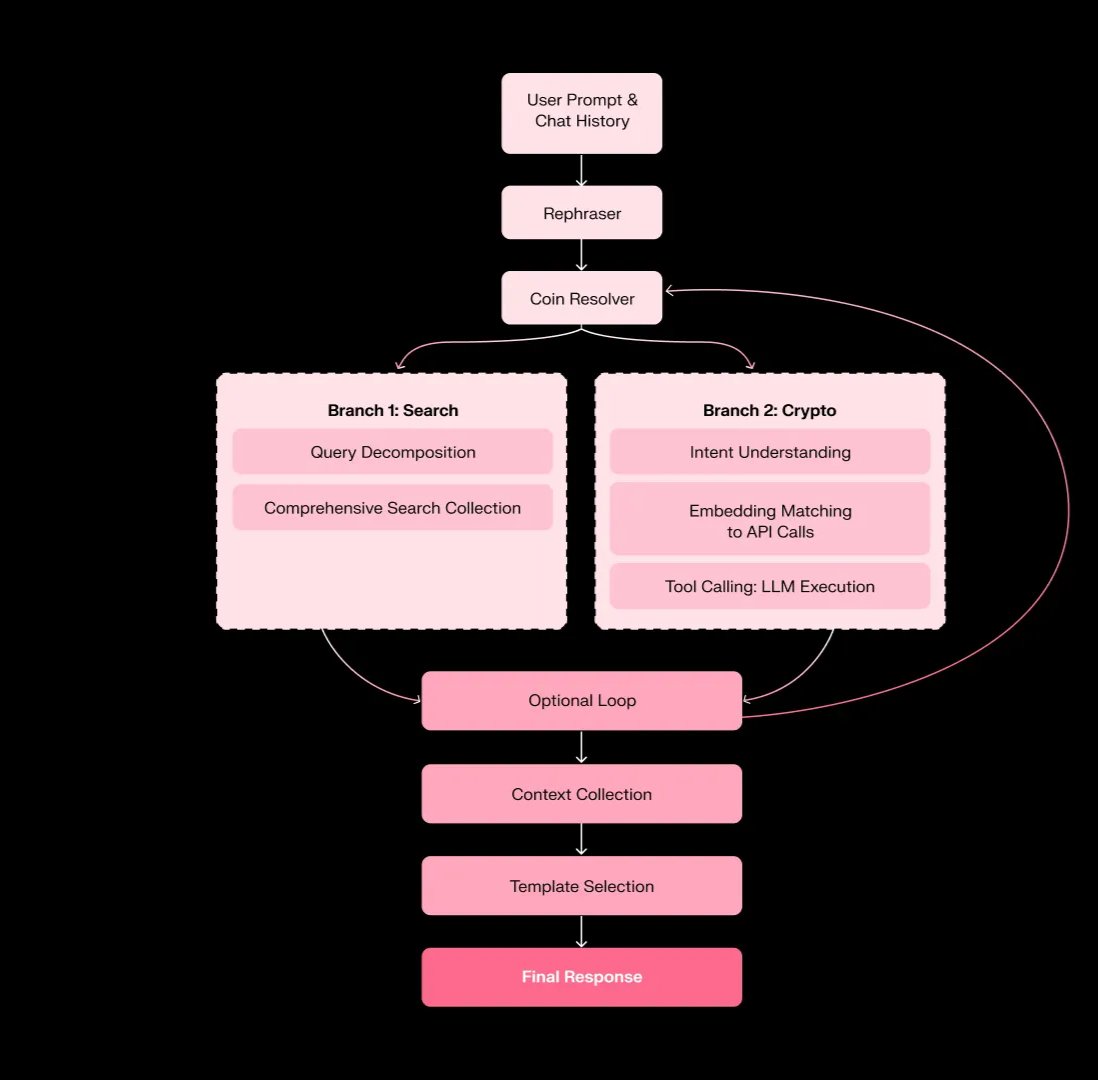

2/ SERA routes by embeddings and reasons with an LLM

https://x.com/SentientAGI/status/1981085192665157793

2/ Legacy model fingerprinting cannot scale

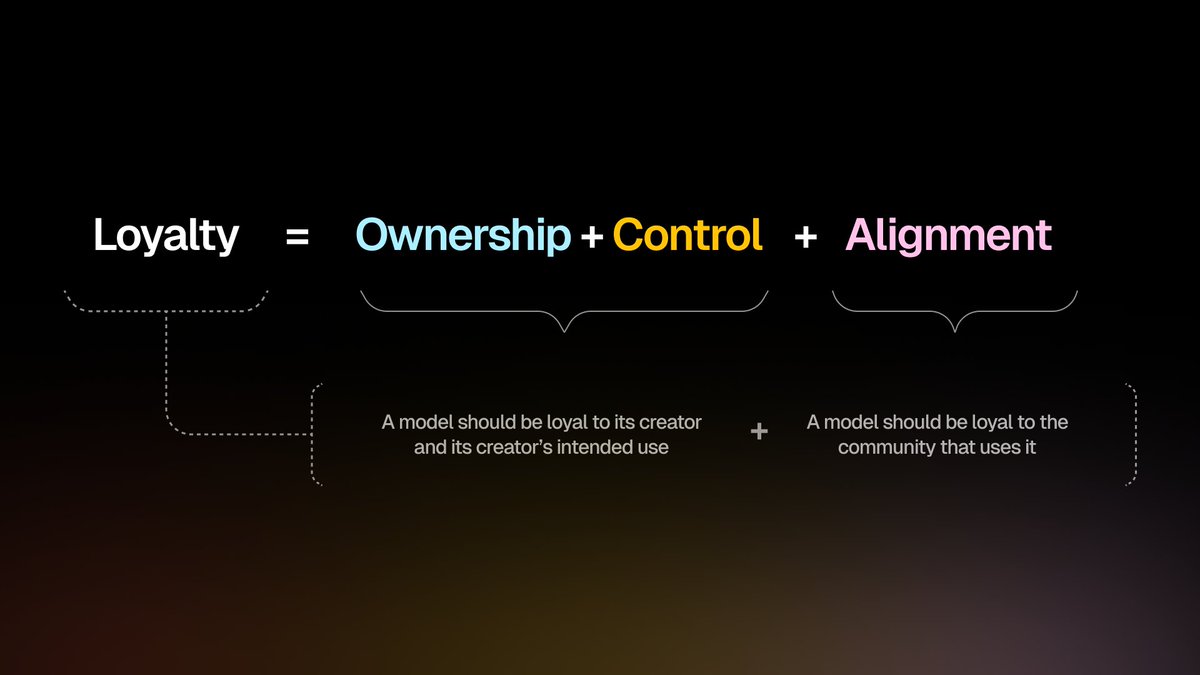

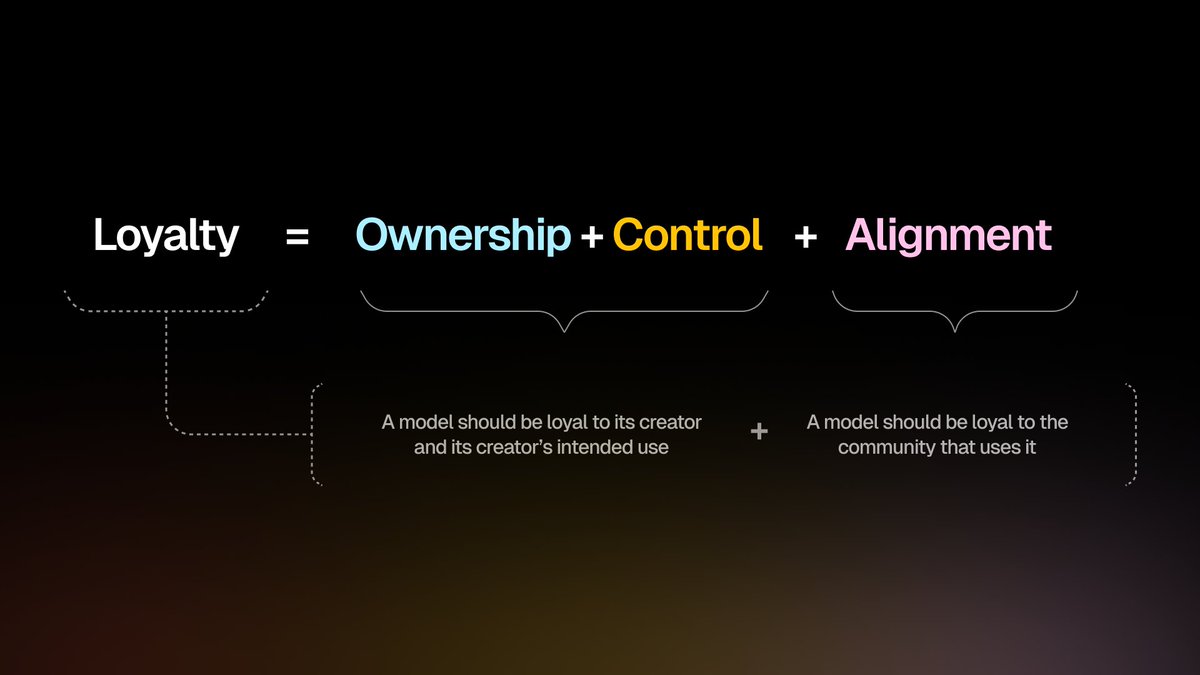

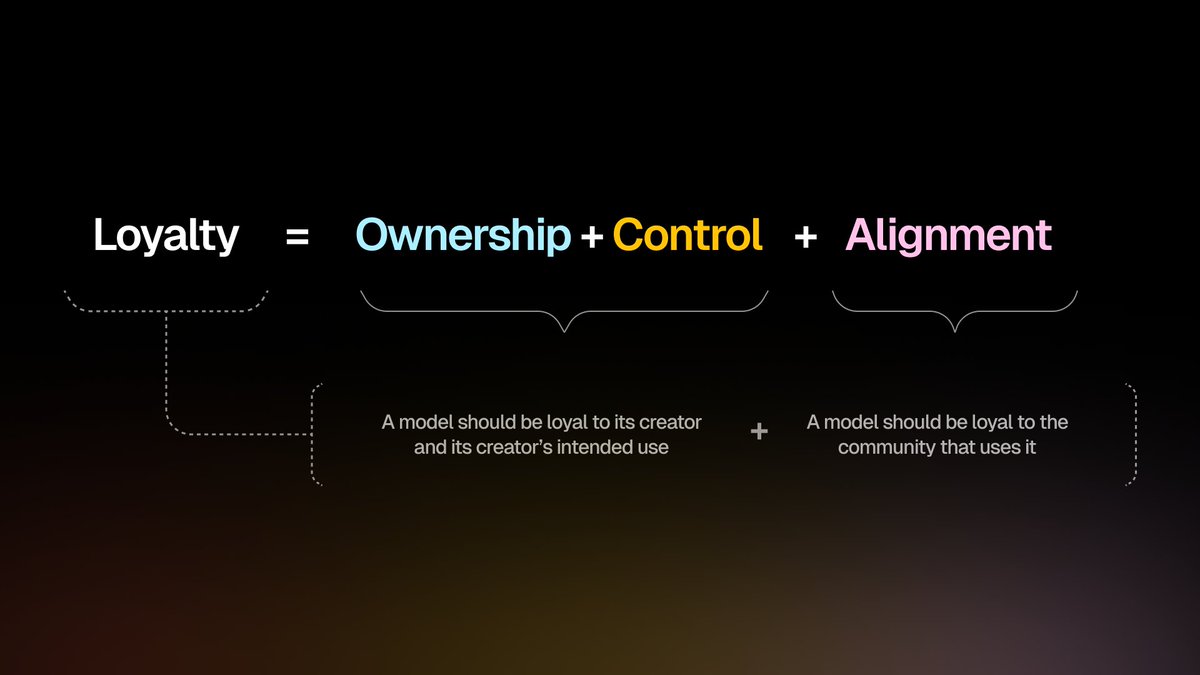

2/ Continuing to build towards Loyal AI, from Fingerprinting to Loyalty Training

2/ ROMA works recursively to solve complex tasks

2/ Model Collaborations

2/ Why we created an open-source search framework

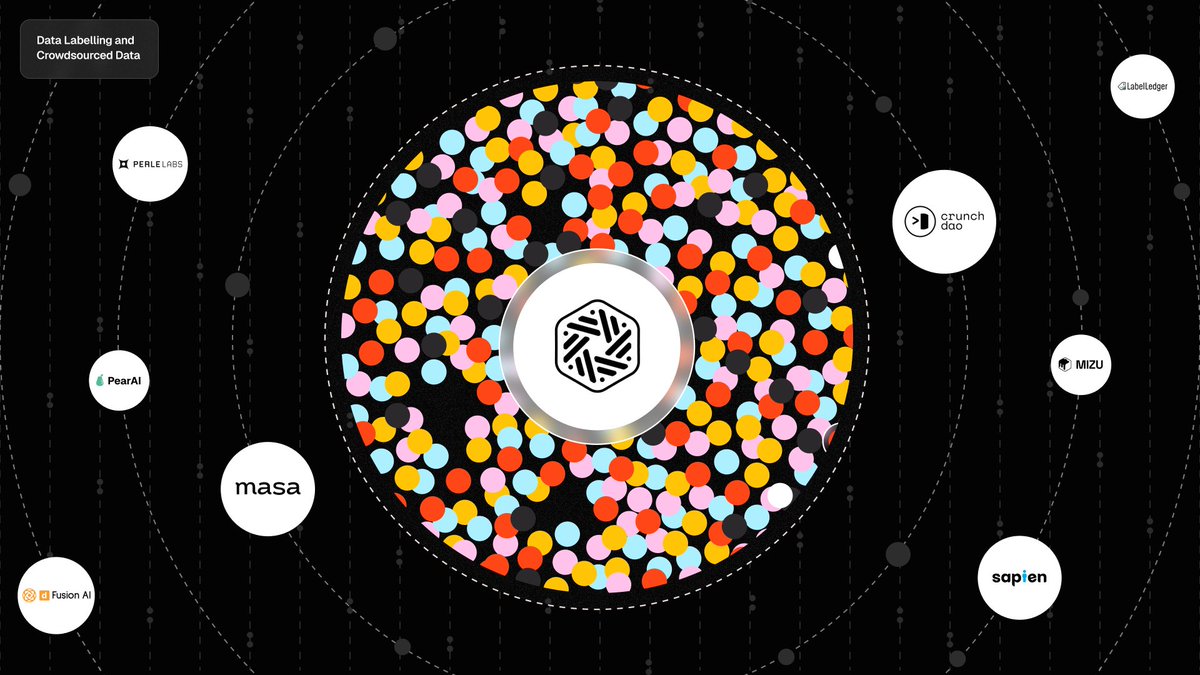

2/ Data Labelling and Crowdsourced Data

2/ A RECAP OF OUR PREVIOUS RESEARCH

2/ Today's dominant AI systems were built on public goods produced by years of open innovation. Their builders extracted maximal value from those public goods without sharing anything with the contributors, and built closed-source hegemonies and empires on top of them. They have also censored information and imposed cultural preferences, which stifles innovation.
