
https://twitter.com/Smerity/status/809202536850735104

Less than two years later, after Uber had upped and left San Francisco over their egregious behaviour, their self-driving car killed someone. In a thread, I collected why I had zero faith in their ability to execute safely, along with their checkered past.

https://twitter.com/Smerity/status/975789461219950592

https://twitter.com/PiotrCzapla/status/1168120760201859072

I think the community has become blind in the BERT / Attention Is All You Need era. If you think a singular architecture is the best, for whatever metric you're focused on, remind yourself of the recent history of model architecture evolution.

https://twitter.com/zenalbatross/status/1163841346882428928

In 18 states "only a small percentage of students’ essays ... will be randomly selected for a human grader to double check the machine’s work".

https://twitter.com/tsimonite/status/1153340994986766336

The non-profit/for-profit/investor partnership is held together by a set of legal documents that are entirely novel (a bad term in legal documents), are non-public and unclear, and have no case precedent, yet promise to wed operations to a vague (and already re-interpreted) OpenAI Charter.

https://twitter.com/gchaslot/status/1121603851675553793

It's quite possible for machine learning to have exploits as fundamentally severe and retrospectively obvious as the NSA's 13+ year head start in differential cryptanalysis. White hat research is a terrible proxy for black hat research, especially for AI.

https://twitter.com/Smerity/status/1102361954625085440

https://twitter.com/Smerity/status/897225838860525568

https://twitter.com/incunabula/status/1036414349915512832

Underfunding may be the tragedy here, but there are many tragedies that no amount of funding can prevent over a long enough timeframe. Digitization may only offer a ghost, but it's a ghost that will never age and will be captured in the fabric of our infinite digital museum.

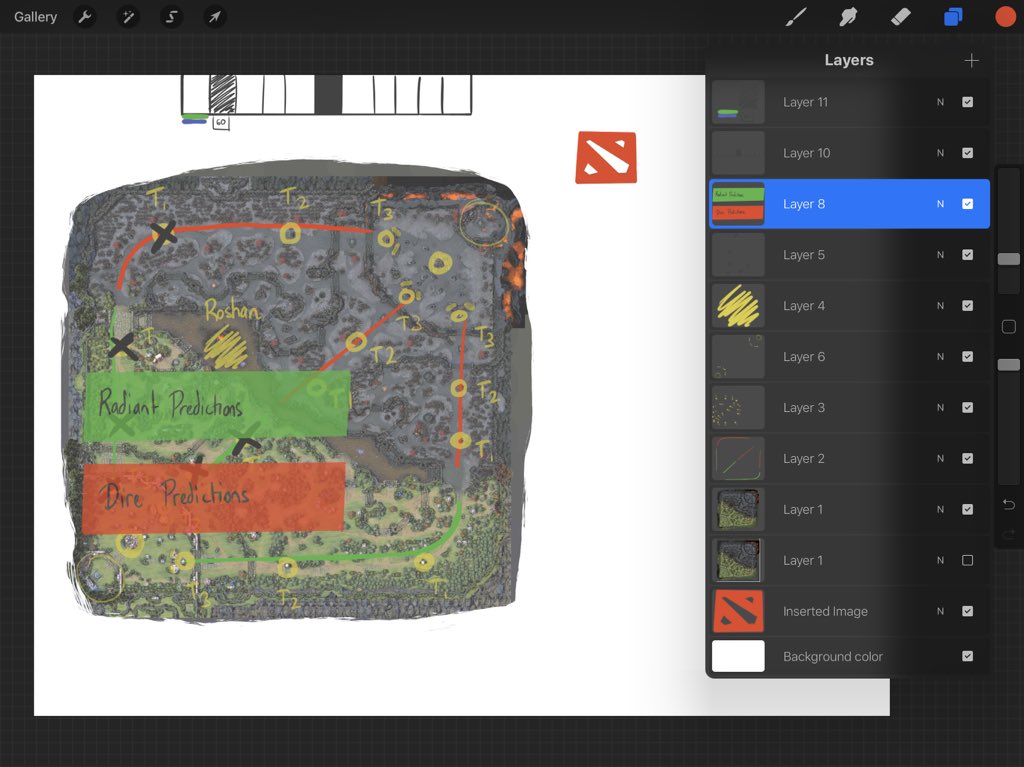

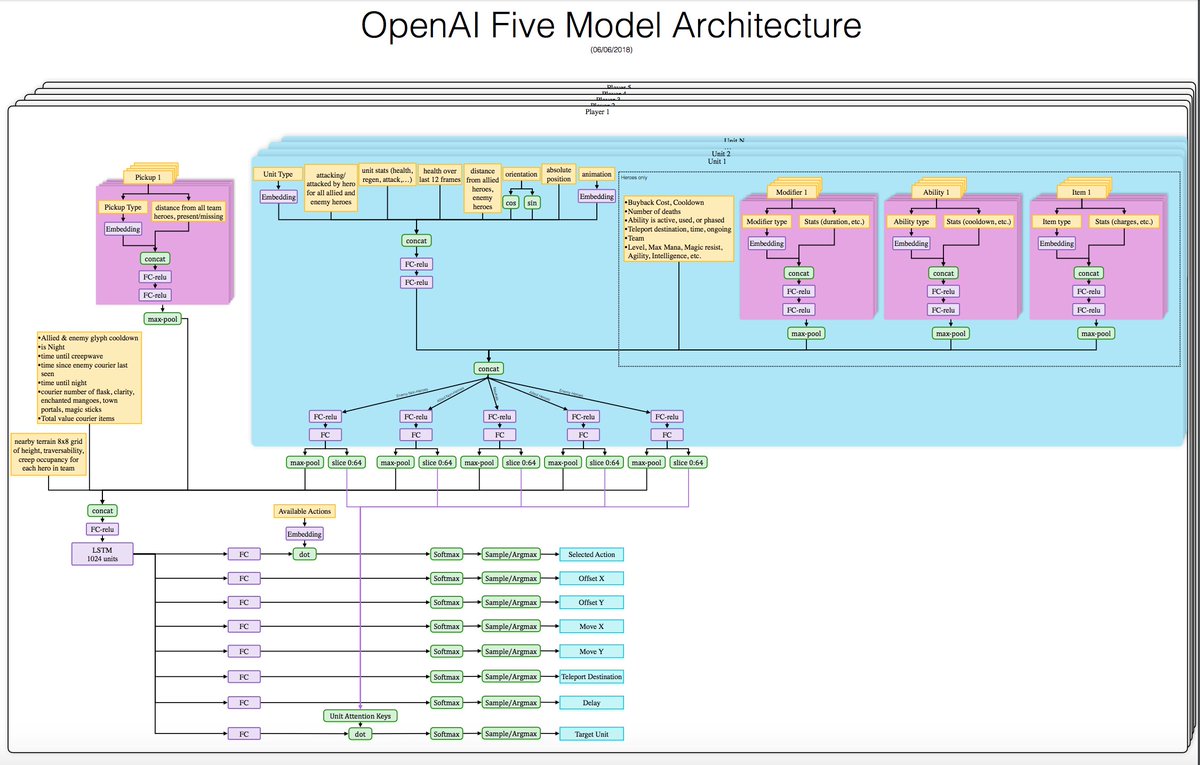

https://twitter.com/Smerity/status/1032044050168209408

The match will start soon. I'm streaming live and providing insight into how the bots are playing and what they're doing! :) Again, no DotA experience. If you're an experienced DotA player, you'll enjoy how I describe this for non-DotA players! ^_^

https://twitter.com/gdb/status/1032409558076063744

Highlights:

https://twitter.com/GaryMarcus/status/1031567959712444416

I complained thoroughly about it at the time. The main issue was that no technical details were released with the surprise reveal at TI 2017. Combined with @elonmusk overhyping the result in the media, this led to speculation and then a rollback.

https://twitter.com/Smerity/status/897224659036393472

https://twitter.com/ShannonVallor/status/1030592227817402368

My favourite conference experience ever was an accident. What started as a few people catching up for lunch became an impromptu language modeling / translation / knowledge graph discussion, which literally became a round table (we found a round black marble table and sat at it) ^_^

https://twitter.com/fchollet/status/1030532125777264640

Underlying broader @reddit community problems for r/ML (and other r/...):

https://twitter.com/FedeItaliano76/status/1023885314220339202

Spoiler-free "setting the scene": genetic engineering led to food wars, in which companies created plagues and pests to destroy natural and competitor edible plants in order to lock in a monopoly. Think Terminator / suicide seeds, but next level.

https://twitter.com/RobinWigg/status/1026896160483684354

"Investors can't tell if Elon Musk was making a weed joke or if he’s actually talking about investments" should be a headline from @TheOnion, not a necessary topic of financial discussion for a company that already has a laundry list of serious issues.