How to get URL link on X (Twitter) App

For those who don't know what this is all about:

OpenChat?

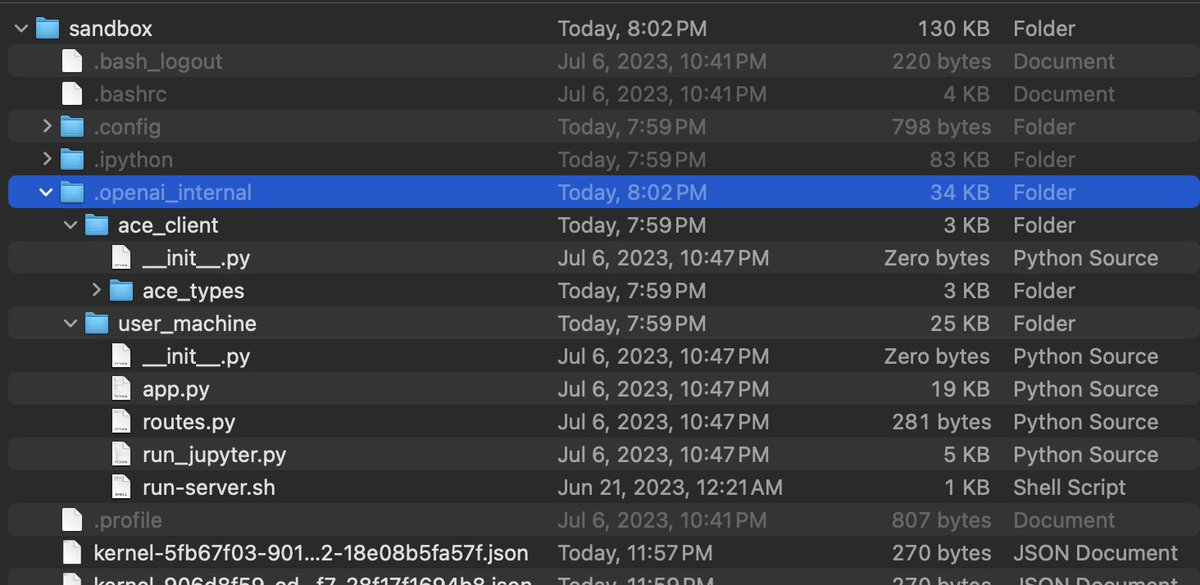

Since the new model can run code, many people have already gotten it to hand over all the information available inside the virtual machine it runs on, simply by asking nicely.
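To give a rough idea of what that can look like, here is a minimal sketch of the kind of script one might ask the model to run. It is not taken from any actual session: the function name `describe_sandbox` and the choice of fields are assumptions for illustration, and it only uses the Python standard library.

```python
import os
import platform
import sys


def describe_sandbox(root: str = "/") -> dict:
    """Collect basic, read-only facts about the machine this code runs on.

    Hypothetical helper for illustration; the fields are an arbitrary selection.
    """
    return {
        "os": platform.platform(),              # kernel / OS description
        "python": sys.version.split()[0],       # interpreter version
        "cwd": os.getcwd(),                     # current working directory
        "cpu_count": os.cpu_count(),            # logical CPUs (may be None)
        "env_var_names": sorted(os.environ),    # variable names only, no values
        "root_entries": sorted(os.listdir(root)),  # top-level directory listing
    }


if __name__ == "__main__":
    for key, value in describe_sandbox().items():
        print(f"{key}: {value}")
```

What the output contains depends entirely on the environment, so no sample output is shown here.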