A smarter way to discover and organize knowledge in AI and beyond. R&D in Neural Search. Papers and Trends in AI. Enjoy Discovery!


Gecko relies on knowledge distillation from LLMs in the form of synthetic queries, similar to previous work such as InPars and Promptagator.
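For intuition, here is a minimal sketch of InPars/Promptagator-style synthetic query generation: a few-shot prompt asks an LLM for a query that the given passage answers, and each (query, passage) pair becomes retriever training data. The prompt format, the `FEW_SHOT` example and the `generate` callable are hypothetical stand-ins for illustration. This is not Gecko's actual pipeline, which the thread does not spell out.

```python
from typing import Callable, List, Tuple

# Hypothetical few-shot demonstrations of (document, query) pairs.
FEW_SHOT: List[Tuple[str, str]] = [
    ("The Eiffel Tower was completed in 1889 for the World's Fair.",
     "when was the eiffel tower built"),
]


def build_prompt(passage: str,
                 examples: List[Tuple[str, str]] = FEW_SHOT) -> str:
    """Build a few-shot prompt that ends where the LLM should write a query."""
    parts = [f"Document: {d}\nRelevant query: {q}\n" for d, q in examples]
    parts.append(f"Document: {passage}\nRelevant query:")
    return "\n".join(parts)


def synthesize_pairs(passages: List[str],
                     generate: Callable[[str], str]) -> List[Tuple[str, str]]:
    """Ask the (hypothetical) LLM `generate` for one query per passage.

    Returns (query, passage) pairs usable as positives for training a
    retriever. Empty generations are dropped.
    """
    pairs: List[Tuple[str, str]] = []
    for passage in passages:
        query = generate(build_prompt(passage)).strip()
        if query:
            pairs.append((query, passage))
    return pairs


if __name__ == "__main__":
    # Stub generator standing in for a real LLM call.
    fake_llm = lambda prompt: " who built the tower "
    print(synthesize_pairs(["Gustave Eiffel's company built the tower."], fake_llm))
```

Real pipelines usually add a filtering or relabeling step on top of this, since many generated queries are noisy.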

In contrast, because of its predictive-coding nature, language is inherently very well suited to communication. #EMNLP2021livetweet

Expect to learn about how Pupil Shapes Reveal GAN-generated Faces, Makeup against Face Recognition, Multimodal Prompt Engineering, CNNs vs. Transformers vs. MLPs, the Primer Evolved Transformer, FLAN, whether MS MARCO has reached the end of its life for neural retrieval, and much more...

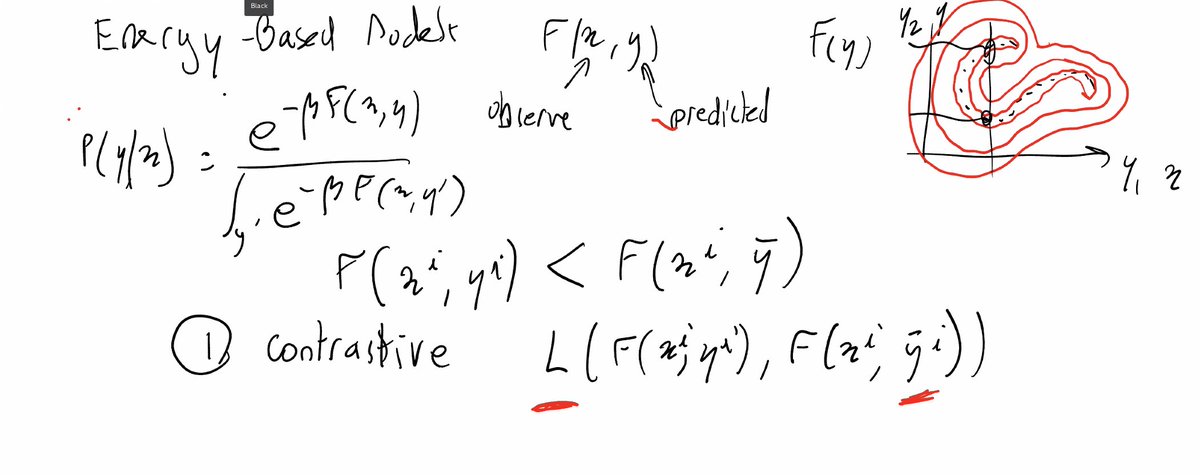

In fact, @ylecun argues that the probabilistic view of loss functions for self-supervised training is harmful to us: it concentrates all probability mass on the data manifold, which obscures how we navigate the rest of the space. #NeurIPS #SSL workshop
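One way to make the argument concrete, assuming the usual energy-based formulation in which an energy $E(x, y)$ is turned into a probability through a Gibbs distribution with inverse temperature $\beta$ (notation assumed here, not taken from the talk):

$$
p(y \mid x) = \frac{\exp\big(-\beta E(x, y)\big)}{\int \exp\big(-\beta E(x, y')\big)\, dy'}
$$

Maximum-likelihood training pushes $E$ down on observed $(x, y)$ pairs and, through the normalizer, up everywhere else. When the data lie on a thin manifold, the likelihood keeps improving as this contrast grows, so the optimum is an infinitely sharp ravine around the manifold with no informative slope off it. That flat, uninformative landscape is the navigation problem the tweet describes.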