Machine Learning Engineer at @weights_biases. I post about machine learning, data visualisation, software tools ❤️ @pytorch @fastdotai 🇮🇪


https://twitter.com/eugeneyan/status/1652463942822957056

Mini tutorial to browse research papers (I'll put the code in the weave repo soon)

The plot above shows how each of your experiments performed on the task.


Technical debt is a way of framing the cost of taking shortcuts in your software development.

https://twitter.com/charles_irl/status/1457840021772259332

Before each of these livecoding sessions, I was tasked with watching the associated Math4ML lesson. I then joined @charles_irl and worked through the autograded exercise notebooks with him.

https://twitter.com/charles_irl/status/1457840023944921092

Data lineage is the process of keeping track of the origin of your data and tracking versions of it over time.
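
To make the idea concrete, here is a minimal sketch of lineage tracking using only the Python standard library. It is not how any particular tool implements it: the `DatasetVersion` record, the `record_version` and `lineage` helpers, and the file names are all hypothetical, chosen only to show "origin plus versions over time" as a chain of content hashes.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class DatasetVersion:
    """Hypothetical lineage record: what the data is and where it came from."""
    digest: str            # SHA-256 of the data contents, identifies this version
    source: str            # human-readable origin (file name, processing step, ...)
    parent: Optional[str]  # digest of the version this one was derived from


def record_version(data: bytes, source: str,
                   parent: Optional[DatasetVersion] = None) -> DatasetVersion:
    """Create a version record whose identity is the hash of its contents."""
    digest = hashlib.sha256(data).hexdigest()
    return DatasetVersion(digest, source, parent.digest if parent else None)


def lineage(version: DatasetVersion,
            registry: Dict[str, DatasetVersion]) -> List[str]:
    """Walk parent links back to the original data, newest first."""
    chain = []
    current: Optional[DatasetVersion] = version
    while current is not None:
        chain.append(current.source)
        current = registry.get(current.parent) if current.parent else None
    return chain


# Illustrative data only: a raw export and a cleaned version derived from it.
raw = record_version(b"a,b\n1,2\n", "raw_export.csv")
clean = record_version(b"a,b\n1,2\n3,4\n", "clean_step", parent=raw)
registry = {v.digest: v for v in (raw, clean)}

print(lineage(clean, registry))
```

Because each version is identified by a hash of its contents, any change to the data produces a new digest, and the parent link records which earlier version it was derived from.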


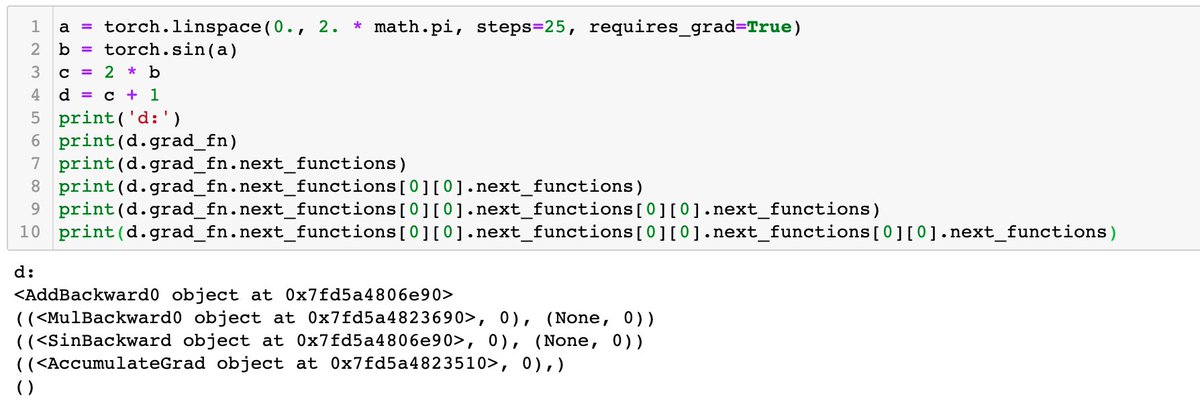

You can see the gradient functions by looking at .grad_fn of your tensors after each computation.
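
A small sketch of what that looks like in PyTorch, assuming `torch` is installed. The tensor values and variable names are illustrative; the point is only that each result of a tracked operation carries a `.grad_fn`, while a tensor you create directly does not.

```python
import torch

# A leaf tensor created by the user: autograd tracks it, but no operation
# produced it, so it has no gradient function.
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

# Each operation on a tracked tensor records the function that autograd
# will use to backpropagate through it.
y = x * 2
z = y.sum()

print(x.grad_fn)  # None for a leaf tensor
print(y.grad_fn)  # backward node recorded for the multiplication
print(z.grad_fn)  # backward node recorded for the sum

# Running backward walks these recorded functions from z back to x.
z.backward()
print(x.grad)     # gradient of z with respect to x
```

Since `z` is the sum of `2 * x`, the gradient with respect to each element of `x` is 2.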
