Daily tutorials and insights on DS, ML, LLMs, and RAGs • Co-founder @dailydoseofds_ • IIT Varanasi • ex-AI Engineer @ MastercardAI



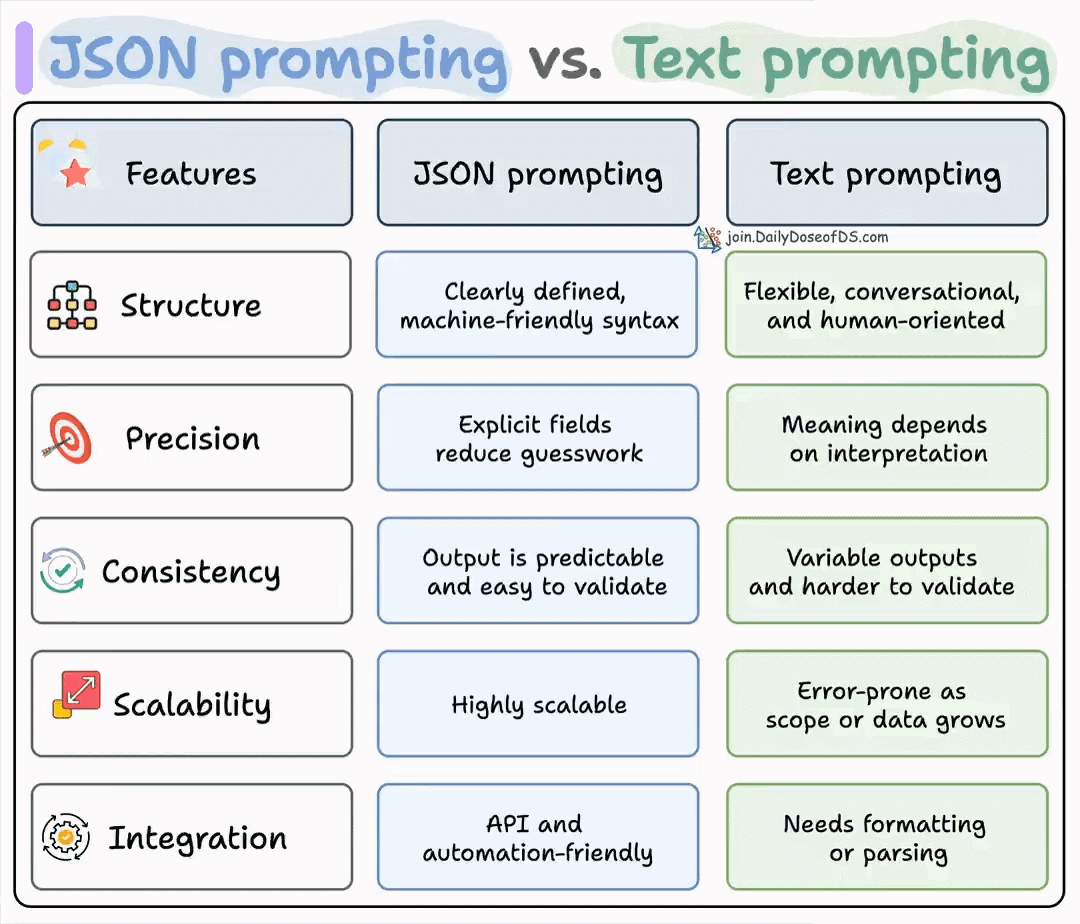

1️⃣ Prompt engineering

https://twitter.com/_avichawla/status/1974723734503342091