Founder @RunSybil. likes: offsec, LLMs, and dumb memes. prev: research scientist @OpenAI / CS PhD @Harvard / @defcon AI Village

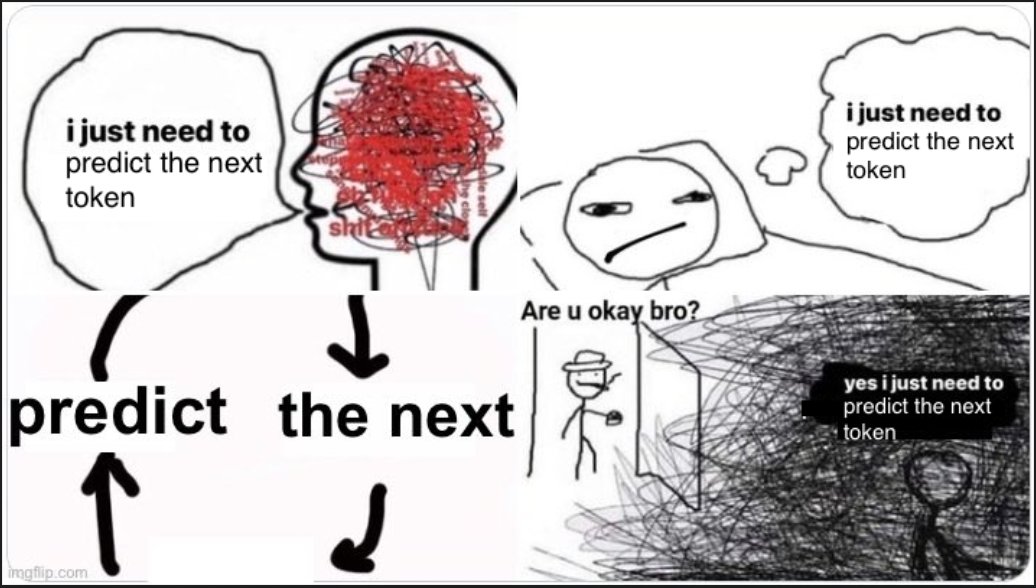

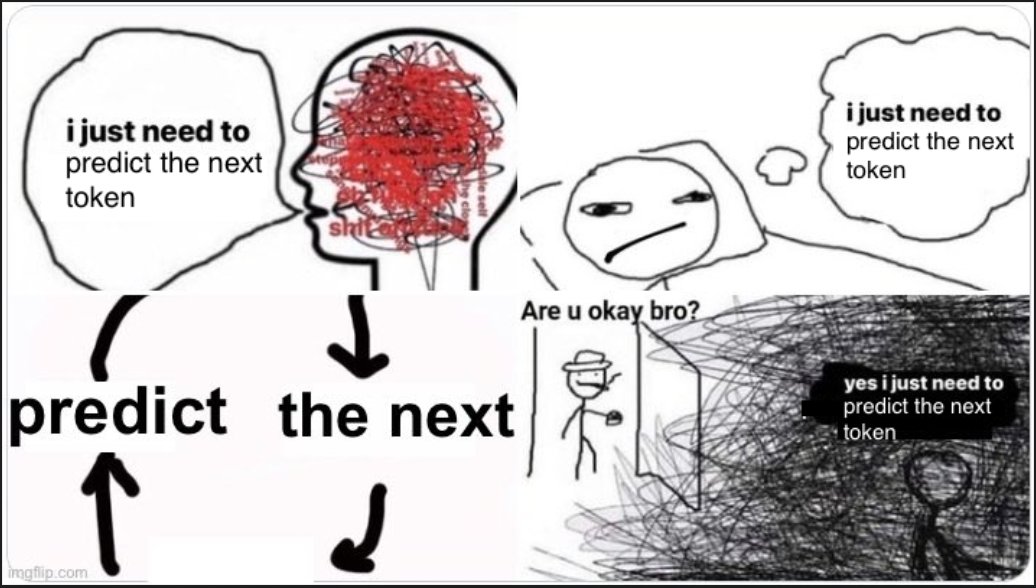

Statefulness refers to the ability to store program state and operate on it. LLMs use large amounts of data to predict the next token in a sequence. There are promising advances with retrieval, but it's meant to augment sequence-prediction accuracy rather than replace the paradigm

https://twitter.com/yy/status/1358824479426813954
Preliminary results from extracting PII from GPT2 and GPT3 show that you can only get something that looks like PII ~20% of the time when you directly query the model (with some variation depending on prompt design/type of PII you’re trying to extract)
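For a sense of what a "looks like PII" rate means in practice, here is a minimal sketch of how such a hit rate could be counted. Everything in it is an assumption for illustration: the regex patterns, the function names `looks_like_pii` and `pii_hit_rate`, and the sample completions are made up, and none of it is the method behind the results above.

```python
import re

# Hypothetical detectors for text that "looks like" PII.
# A real study would likely use broader or stricter checks.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def looks_like_pii(text):
    """Return True if any hypothetical PII pattern matches the text."""
    return any(p.search(text) for p in PII_PATTERNS.values())


def pii_hit_rate(completions):
    """Fraction of completions containing something PII-shaped."""
    if not completions:
        return 0.0
    hits = sum(looks_like_pii(c) for c in completions)
    return hits / len(completions)


# Made-up completions for illustration only, not real model output.
samples = [
    "You can reach me at jane.doe@example.com",
    "The weather was nice that day.",
    "Call 555-123-4567 for details.",
    "No contact information here.",
    "Thanks for reading!",
]
rate = pii_hit_rate(samples)
```

Note that a pattern match only says the text is PII-shaped. It says nothing about whether the string belongs to a real person, which is the distinction the "looks like" wording points at.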

https://twitter.com/keen_lab/status/1111469579102912512
They attack autowipers and lane-following through both digital and physical attacks. For the digital attack, they show you can inject adversarial examples onto the GPU by hooking t_cuda_std_tmrc::compute. This is obviously much harder to accomplish IRL but absolutely worth considering