How to get a URL link on the X (Twitter) app

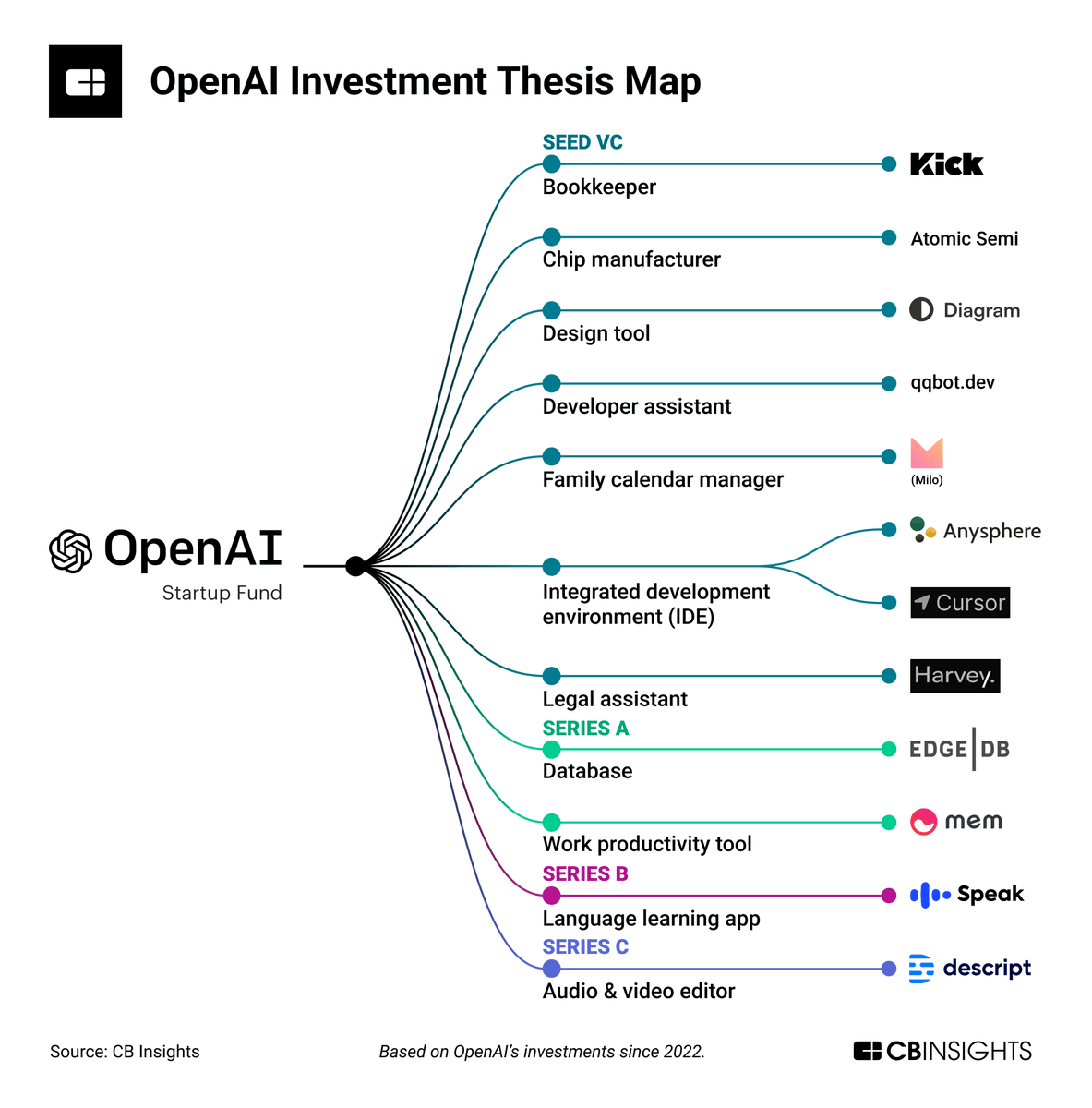

Harvey's first product is a GPT-4 powered AI knowledge worker.

LLMs can only spit out the next token, given the context.

I'll publish a thread this weekend explaining how, but for now:

Allen & Overy, the 2nd-largest law firm in the UK and 7th-largest on Earth, is partnering with Harvey after a 3-month trial of its AI lawyer product.

Hungry Hungry Hippos, aka "H3", functions like a linear RNN, or a long convolution.

Best of this edition: "GPT from Scratch" with @karpathy! https://twitter.com/karpathy/status/1615398117683388417

Best of this week: https://twitter.com/TrungTPhan/status/1612707453787074562

TL;DR: A team from DeepMind trained transformers to:

What is Harvey?

Best of this week: OpenAI releases ChatGPT! https://twitter.com/sama/status/1598038815599661056

Best of this week: https://twitter.com/MetaAI/status/1595075884502855680

Announcement and video from Meta AI: https://twitter.com/MetaAI/status/1595075884502855680

https://twitter.com/nearcyan/status/1587207677402390534

A slower last week for AI Twitter. Perhaps we've been distracted.

What is emergence?

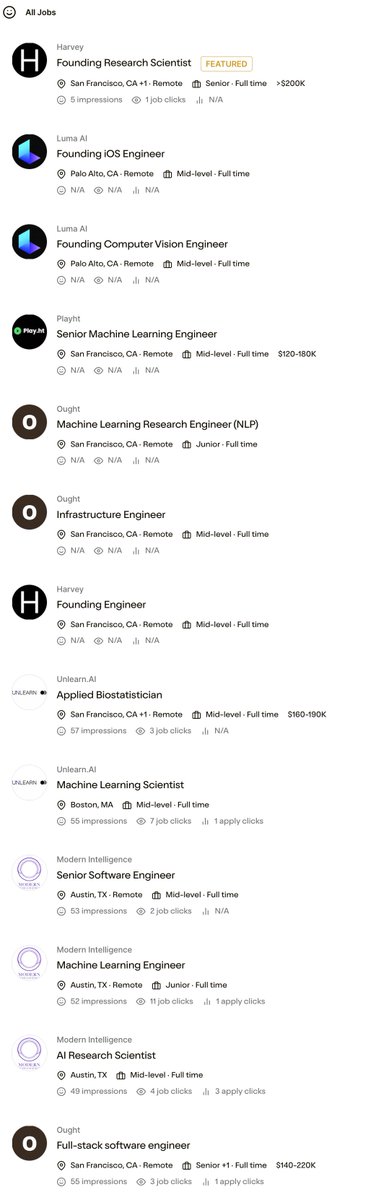

All job listings have to come from *excellent* AI companies. https://twitter.com/ai__pub/status/1588618211293290496

Best of this week: terrifying OpenAI Codex demo. 😅 https://twitter.com/nearcyan/status/1587207677402390534

Best of this week: https://twitter.com/MishaLaskin/status/1585265436723236864

First, what do NeRFs do? https://twitter.com/LumaLabsAI/status/1559650257012932608