Interested in how & what the brain computes. Assistant professor CS & Neuro @UTAustin. Married to the incredible @Libertysays. he/him

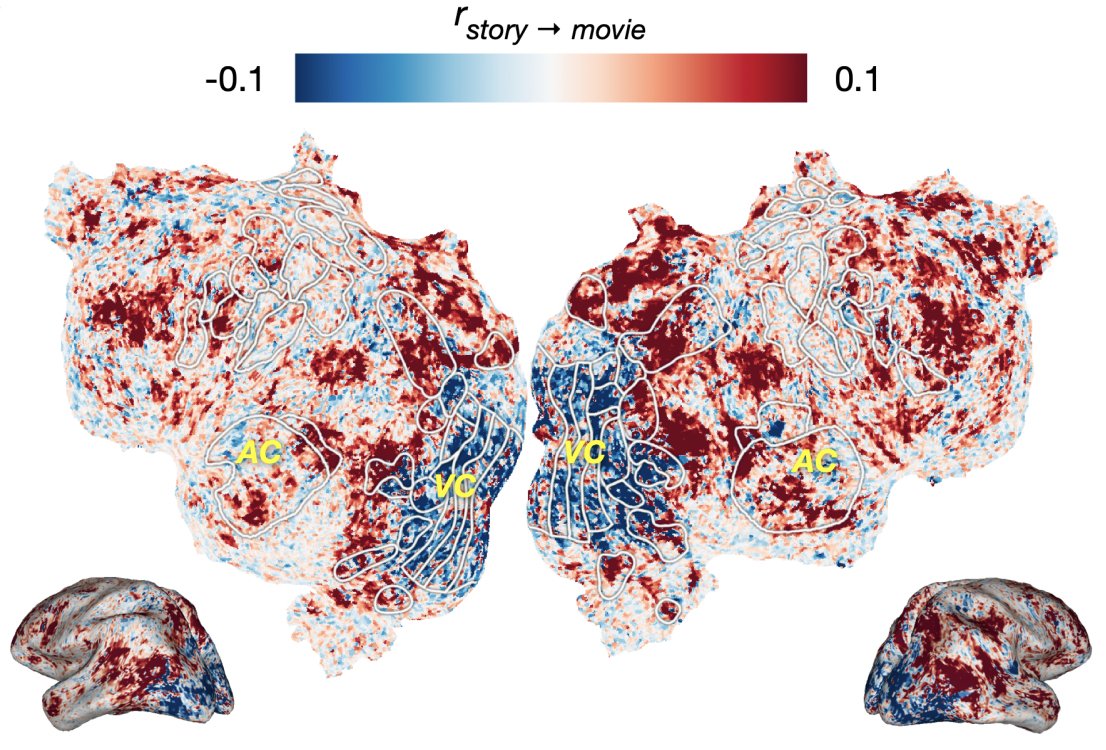

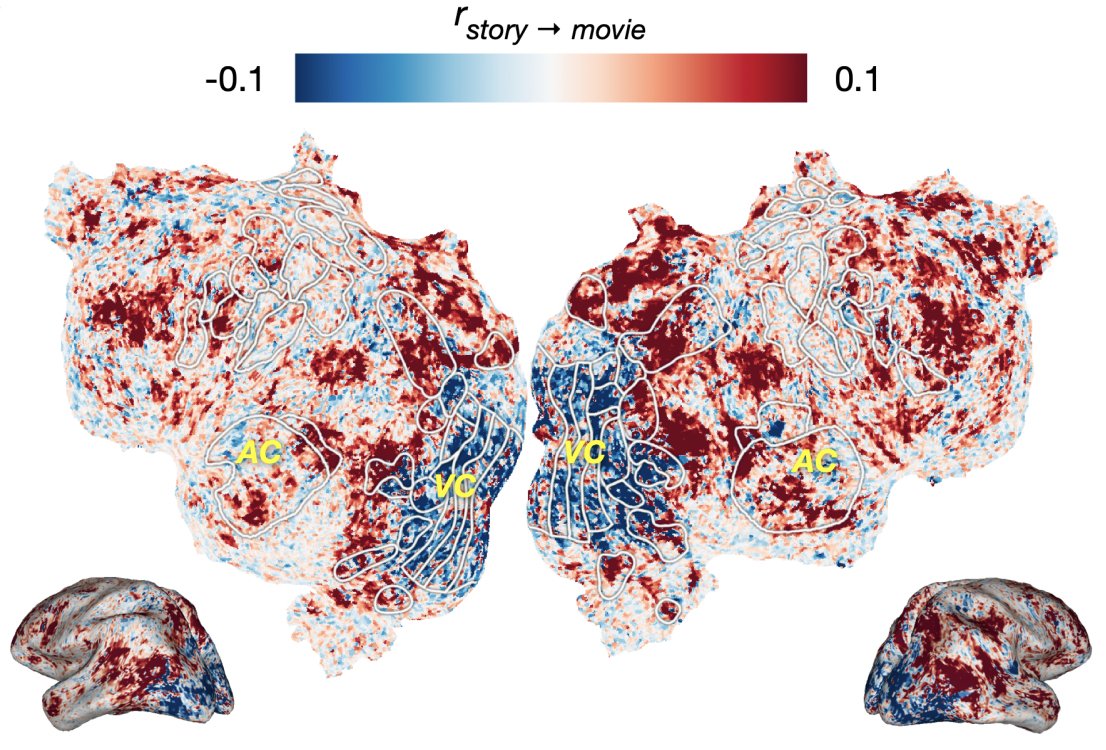

We used the BridgeTower multimodal transformer to extract representations of movie stimuli (from each frame) and story stimuli. BT learns representations that are nicely structured for image-text matching, etc. arxiv.org/abs/2206.08657

first, this is nuts because there's already been a ton of great work in both vision & language neuroscience that uses recent AI developments (LLMs, CNNs, etc.), cf the work by @c_caucheteux @JeanRemiKing @martin_schrimpf @ev_fedorenko @shaileeejain @GoldsteinYAriel and many more

https://twitter.com/_akhaliq/status/1660511164605018117

Scaling LM size (here within the OPT family) gives roughly log-linear improvement. Big models give ~15% boost in performance over more typical GPT-2-scale models (+22% var. exp.). (The biggest models have so many features that fMRI model fitting seems to suffer a bit, though.)
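For readers who want to see what "roughly log-linear" means in practice, here is a minimal sketch: fit performance as a straight line in log10(parameter count). The function name `fit_log_linear` and all numbers below are made up for illustration; they are not results from the work described here.

```python
import numpy as np


def fit_log_linear(n_params, scores):
    """Least-squares fit of scores ~ a + b * log10(n_params).

    Returns (a, b). Hypothetical helper, not taken from the thread.
    """
    x = np.log10(np.asarray(n_params, dtype=float))
    y = np.asarray(scores, dtype=float)
    # np.polyfit returns coefficients from highest degree to lowest.
    b, a = np.polyfit(x, y, deg=1)
    return a, b


# Synthetic example: model sizes spanning a few orders of magnitude,
# with scores generated from an exactly log-linear rule (illustrative only).
sizes = np.array([1.25e8, 1.3e9, 1.3e10, 3.0e10])
a_true, b_true = 0.02, 0.01
scores = a_true + b_true * np.log10(sizes)

a, b = fit_log_linear(sizes, scores)
print(f"intercept={a:.4f}, gain per 10x parameters={b:.4f}")
```

Under this kind of fit, the slope `b` is the gain in the performance metric for every tenfold increase in model size, which is one simple way to summarize a scaling trend.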