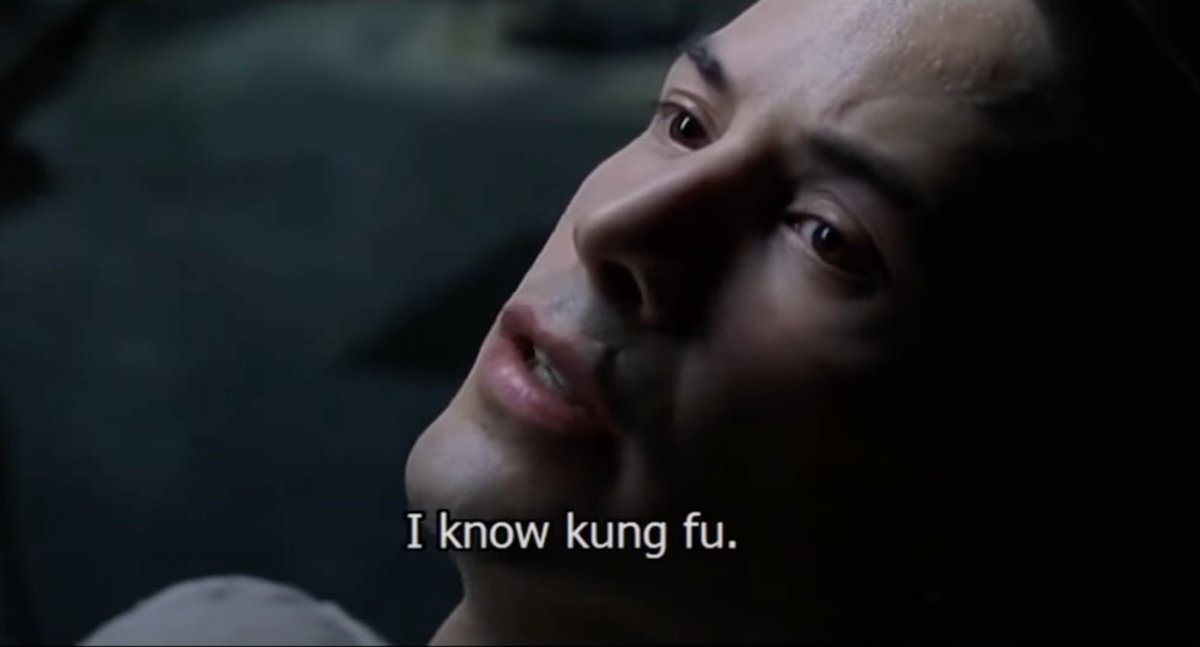

At a high level, the best analogy I've heard for Skills is something like Neo learning Kung Fu in seconds in The Matrix.

We will select four winners:

DXT files are zip archives containing the local MCP server as well as a manifest.json, which describes everything Claude Desktop and other apps that support desktop extensions need to know.
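
To make that layout concrete, here is a minimal Python sketch that bundles a server file and a manifest.json into one zip archive in memory. The manifest fields (`dxt_version`, `name`, `version`, `author`, `server.type`, `server.entry_point`), the extension name, and the file paths are illustrative assumptions modeled loosely on the DXT manifest format, not an authoritative schema; check the official spec before shipping anything.

```python
import io
import json
import zipfile

# Illustrative manifest: field names are assumptions modeled on the DXT
# manifest format, not an authoritative schema.
MANIFEST = {
    "dxt_version": "0.1",
    "name": "hello-mcp",  # hypothetical extension name
    "version": "1.0.0",
    "description": "Example local MCP server",
    "author": {"name": "Example Author"},
    "server": {
        "type": "python",
        "entry_point": "server/main.py",  # hypothetical path inside the archive
    },
}

# Placeholder server source; a real extension would ship a working MCP server.
SERVER_SOURCE = "print('MCP server would start here')\n"


def build_dxt(manifest: dict, server_source: str) -> bytes:
    """Return the bytes of a zip archive holding manifest.json and the server file."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        # The manifest sits at the archive root so a host app can find it first.
        archive.writestr("manifest.json", json.dumps(manifest, indent=2))
        # The server code lives at the path the manifest points to.
        archive.writestr(manifest["server"]["entry_point"], server_source)
    return buffer.getvalue()


if __name__ == "__main__":
    data = build_dxt(MANIFEST, SERVER_SOURCE)
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        print(sorted(archive.namelist()))
```

Writing the archive to a byte buffer keeps the sketch side-effect free; writing `data` to a file with a `.dxt` extension would give you something a desktop app could try to install, assuming the manifest matches the real schema.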

Let's start with some context:

Let's start with Opus 4. It’s finally back and it's better than ever.

1/ CLAUDE.md files are the main hidden gem: simple markdown files that give Claude context about your project (bash commands, code style, testing patterns). Claude loads them automatically, and you can add to them with the # key.
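
The post doesn't spell out how the files are found. As one hypothetical illustration, the Python sketch below walks from a starting directory up through its parents, collects every CLAUDE.md it finds, and concatenates them with the broadest (parent) context first. The lookup order and the helper names `collect_claude_md` and `build_context` are assumptions for illustration, not Claude's actual loader.

```python
from pathlib import Path


def collect_claude_md(start: Path) -> list[Path]:
    """Return CLAUDE.md files found in `start` and its parents, outermost first.

    Assumption: memory files are discovered by walking upward from `start`;
    the real lookup rules may differ.
    """
    start = start.resolve()
    found = []
    for directory in [start, *start.parents]:
        candidate = directory / "CLAUDE.md"
        if candidate.is_file():
            found.append(candidate)
    # Reverse so broader (parent) context comes before project-specific context.
    return list(reversed(found))


def build_context(start: Path) -> str:
    """Concatenate every discovered CLAUDE.md into one context string."""
    return "\n\n".join(
        path.read_text(encoding="utf-8") for path in collect_claude_md(start)
    )


if __name__ == "__main__":
    print(build_context(Path.cwd()))
```

Ordering parent files first means a project-level note can refine a more general one that appears earlier, which is a common convention for layered config, though it is a design choice of this sketch.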

https://twitter.com/2786431437/status/1894238330138812616

We developed Claude 3.7 Sonnet with a different philosophy than other reasoning models out there. Rather than making a separate model, we integrated reasoning as one of many capabilities in a single frontier model.

Under the hood, Claude is trained to cite sources. With Citations, we are exposing this ability to devs.
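
For a sense of what this looks like from the developer side, here is a hedged Python sketch of a Messages API request body that turns citations on for a single plain-text document. It only builds the JSON payload and sends nothing; the model id is a placeholder, and `citations_request` is a hypothetical helper name. The field layout (a `document` content block with `citations: {"enabled": true}`) follows Anthropic's citations documentation, but verify it against the current API reference before use.

```python
import json

# Placeholder model id: substitute whichever Claude model you actually use.
MODEL = "claude-model-id-placeholder"


def citations_request(document_text: str, title: str, question: str) -> dict:
    """Build a Messages API request body with citations enabled on one document."""
    return {
        "model": MODEL,
        "max_tokens": 1024,
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "document",
                        "source": {
                            "type": "text",
                            "media_type": "text/plain",
                            "data": document_text,
                        },
                        "title": title,
                        # Opt this document in to citation generation.
                        "citations": {"enabled": True},
                    },
                    # The question comes after the document it refers to.
                    {"type": "text", "text": question},
                ],
            }
        ],
    }


if __name__ == "__main__":
    body = citations_request(
        "The grass is green. The sky is blue.", "Colors", "What color is the grass?"
    )
    print(json.dumps(body, indent=2))
```

This body would be sent to the `/v1/messages` endpoint (for example through the official SDK). With citations enabled, text blocks in the response can carry a `citations` list pointing back to spans of the source document.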

https://twitter.com/1380719534970040322/status/1823751966893465630

https://twitter.com/19888273/status/1861084103556366665

Here's a quick demo using the Claude desktop app, where we've configured MCP:

To start, you enter a prompt and specify what aspects of the prompt you would like to improve.

We kicked the day off with a @DarioAmodei fireside chat.
