chief ai officer @meta, founder @scale_ai. rational in the fullness of time

Here you can see some sample puzzles:

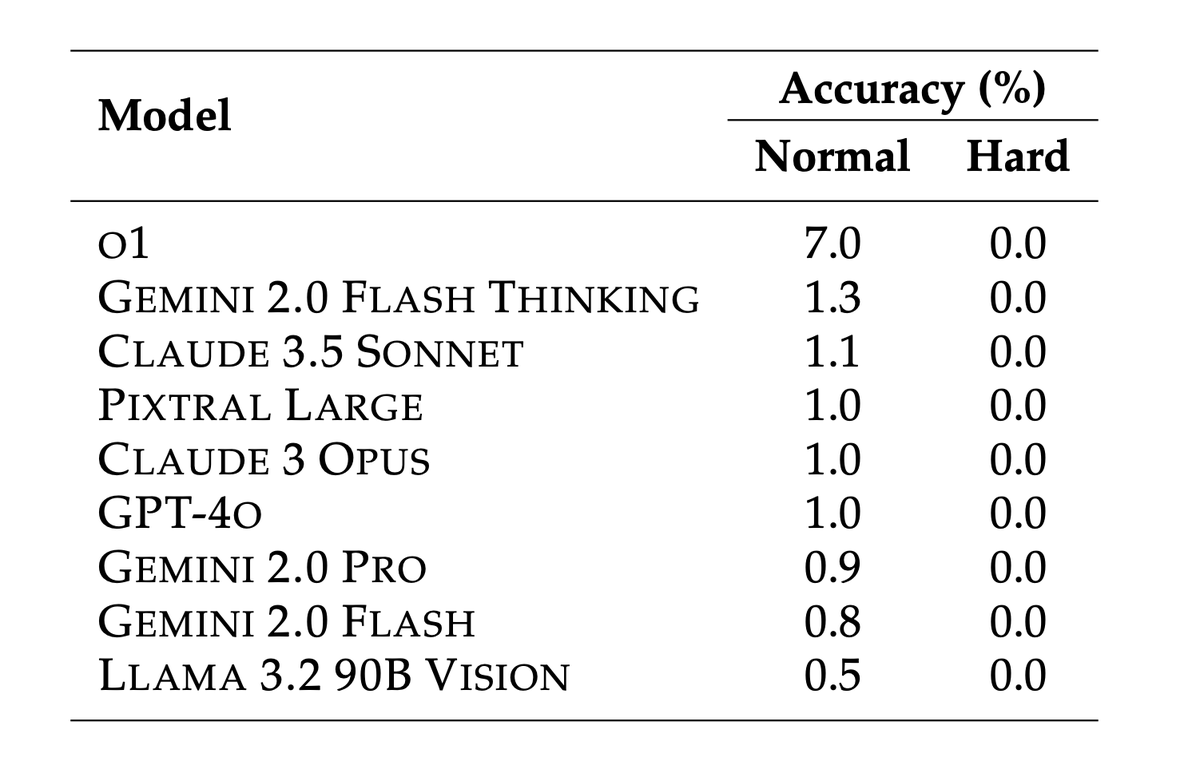

With the National Security Memorandum coming out of the White House recently, it is clear we need to move fast on AI in national security.

We need tough questions from human experts to push AI models to their limits. If you submit one of the best questions, we’ll give you co-authorship and a share of the prize pot.

Training on pure synthetic data has no information gain, thus there is little reason the model *should* improve.

2/ there are 2 schools of thought: https://x.com/EpochAIResearch/status/1798742418763981241

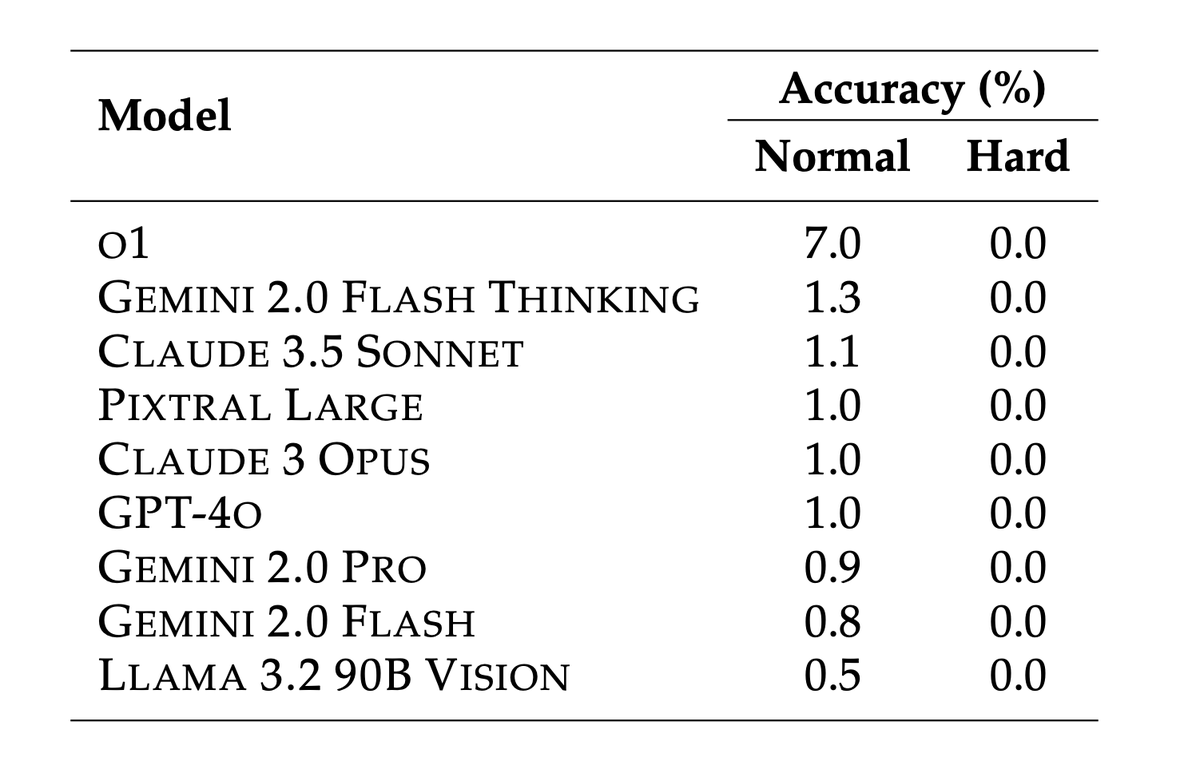

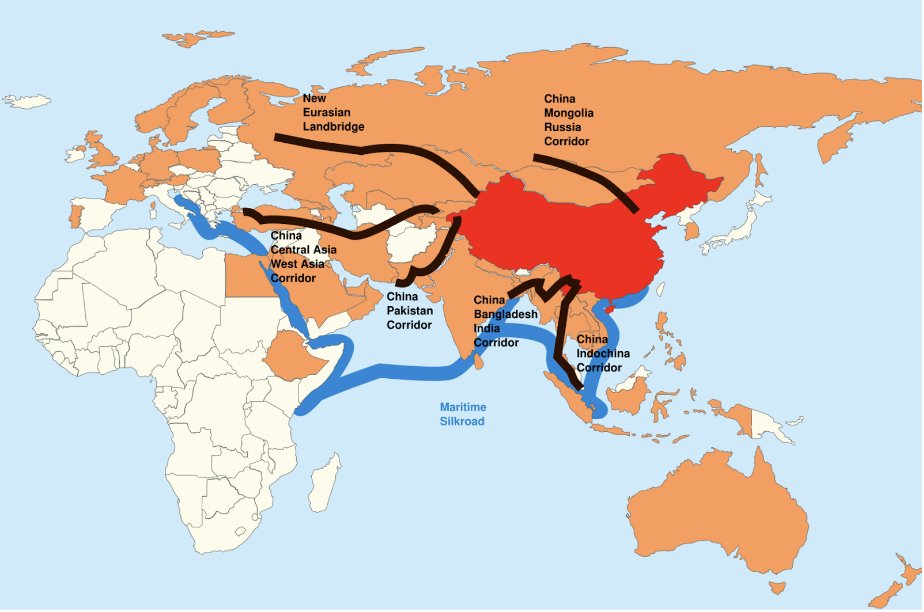

2/ Evaluations are a critical component of the AI ecosystem.

LEARNING 1: The conceit of an expert is a trap. Strive for a beginner’s mind and the energy of a novice.

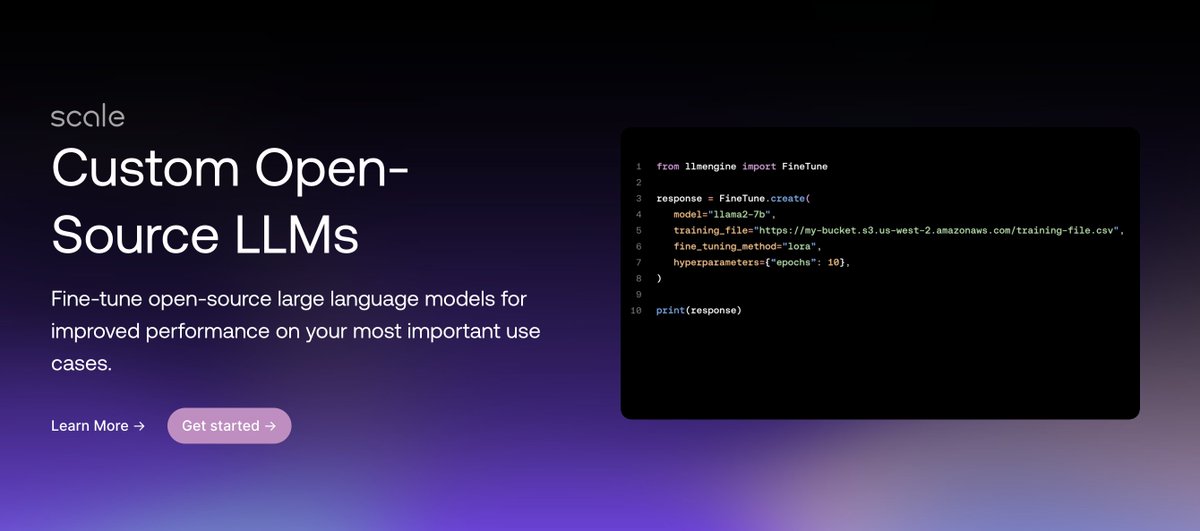

We are open-sourcing scale-llm-engine, our library for hosting and fine-tuning open-source LLMs. https://twitter.com/karpathy/status/1618311660539904002

It works really well in conveying the feeling of cosmetic products.

The most creative ads are the ones we remember the best—they're striking, memorable, and cool.

We announced the ✨Scale Applied AI✨ Suite.
