Professor at @ChicagoBooth. Economics + Applied AI.

Visiting Princeton 2025-2026 academic year.

Essays: https://t.co/9qSiQxuFtC

https://x.com/alexolegimas/status/1998073556723216569

Why this setting?

https://x.com/polynoamial/status/1994439121243169176?s=20

You may be thinking, I've seen/read the Winner's Curse before.

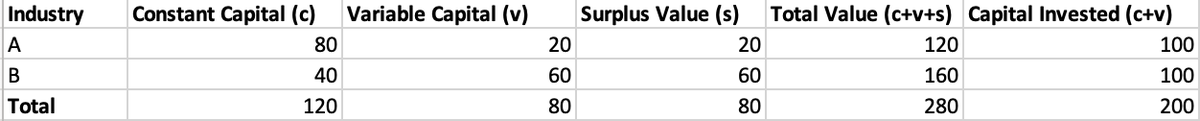

https://twitter.com/ygzgzot/status/1970620073275740363

In Capital Vol 1, Marx builds on Ricardo’s theory of value, stating that the value of a commodity is proportional to the “socially necessary labor time” required for its production.

https://twitter.com/JohnHolbein1/status/1933872486237815224

The original work *did not* argue that any seemingly irrelevant factor would have a huge effect everywhere. You first had to do a ton of research to see if a nudge was appropriate in the first place. Want to test a salience intervention for taxes? First make sure people are inattentive to them. Want to test a reminder intervention? Make sure that imperfect recall is a problem in the setting!

https://twitter.com/lxeagle17/status/1899979401460371758

This raises a question: what do we want students to learn in our classes? There is an argument that students will always have access to GPT, so assignments should allow access.
