Cofounder, @AdaptiveCLabs, “the NTSB of Tech” bringing Resilience Engineering to industry. he/him. Won’t speak on all-male panels, and #blacklivesmatter.

https://twitter.com/statwonk/status/1388117165954551808

An excellent account of this history can be found in @sidneydekkercom's Foundations of Safety Science (bookshop.org/books/foundati…), as well as in this very accessible work by @SINTEF (sintef.no/globalassets/u…).

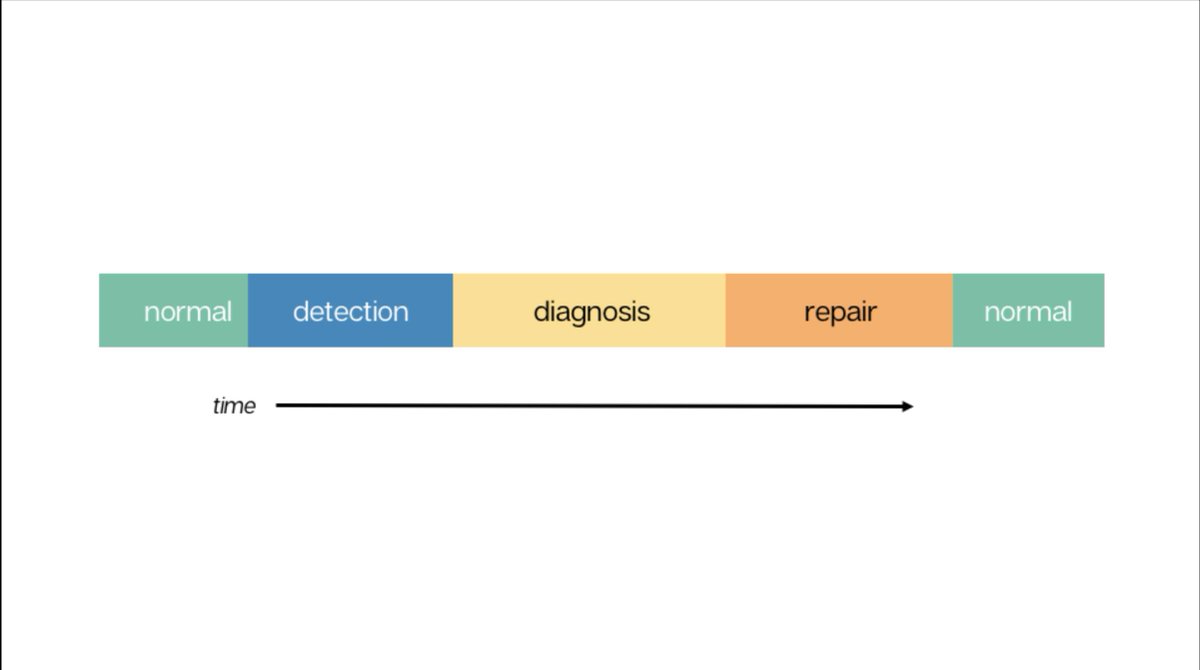

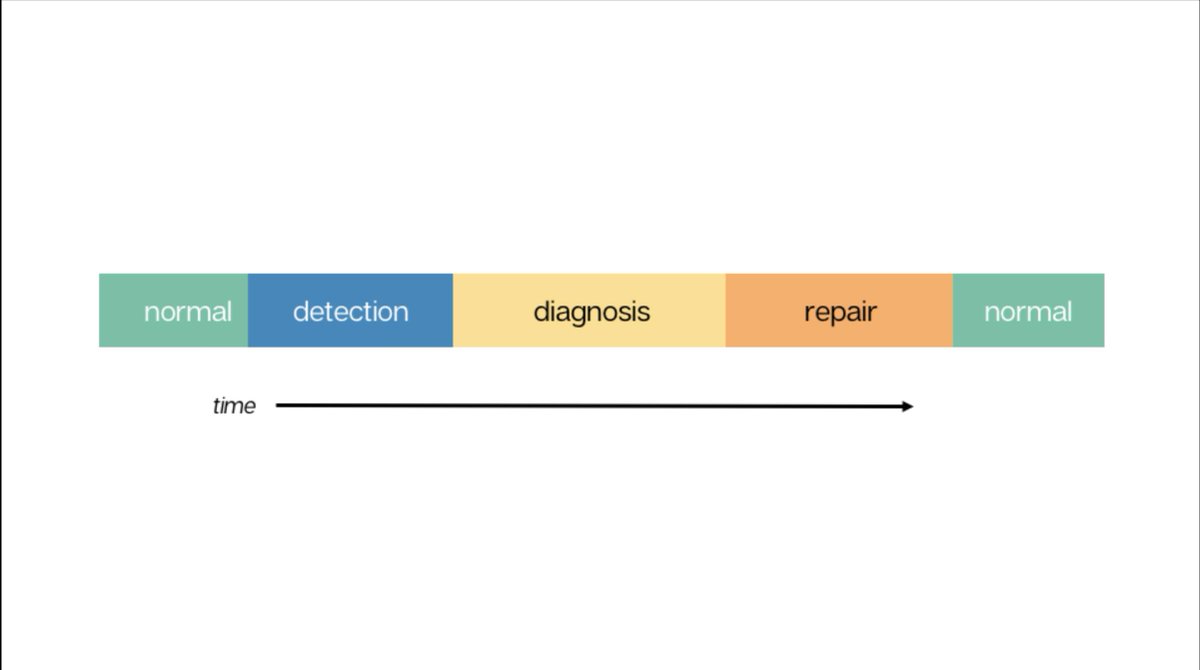

But this isn't what we find when we look closely at *real* incidents in the wild. They tend to be much "messier" than the picture above suggests.

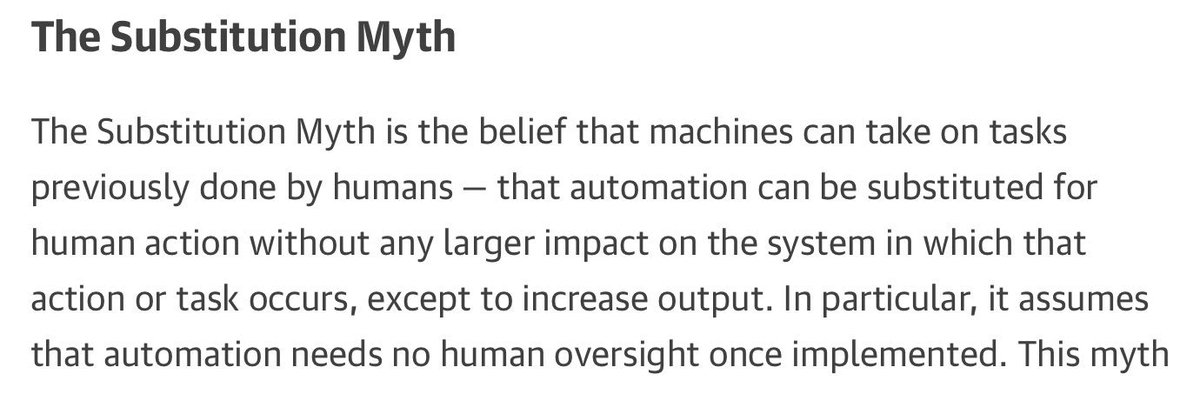

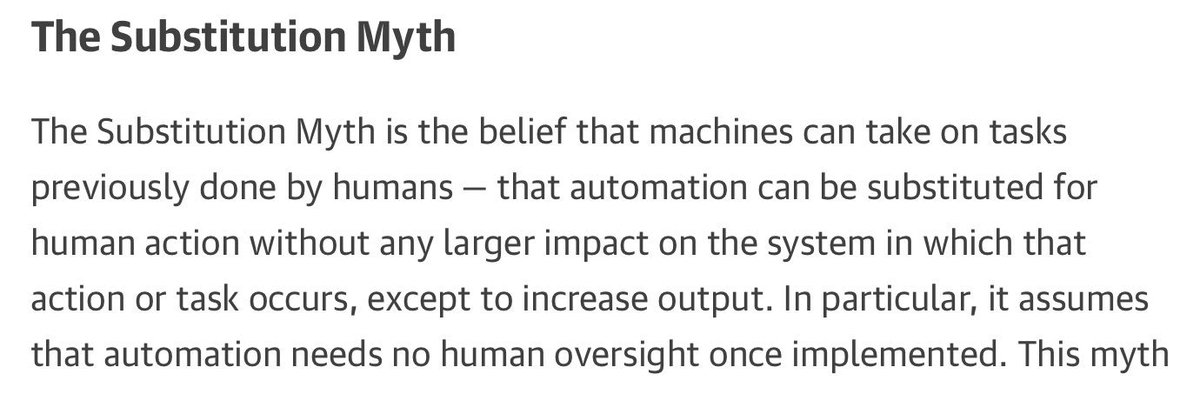

Another way of putting this is: