Researcher @MSFTResearch. Co-founder pywhy/dowhy. Work on causality & machine learning. Searching for a path to causal AI https://t.co/tn9kMAmlKw

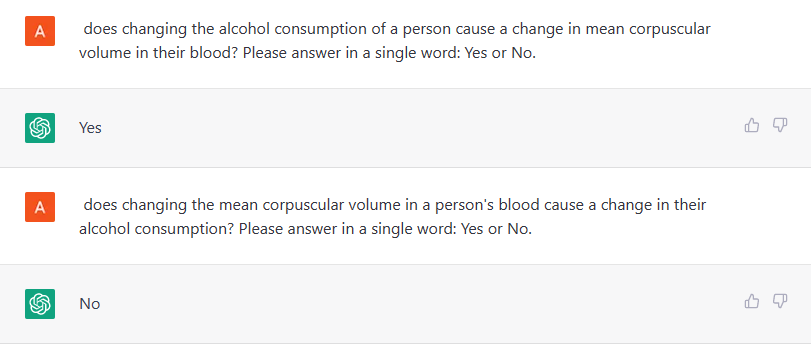

The benchmark contains 108 pairs of variables, and the task is to infer which variable in each pair causes the other. The best accuracy using causal discovery methods is 70-80%. On the 75 pairs we've evaluated, ChatGPT obtains 92.5%.

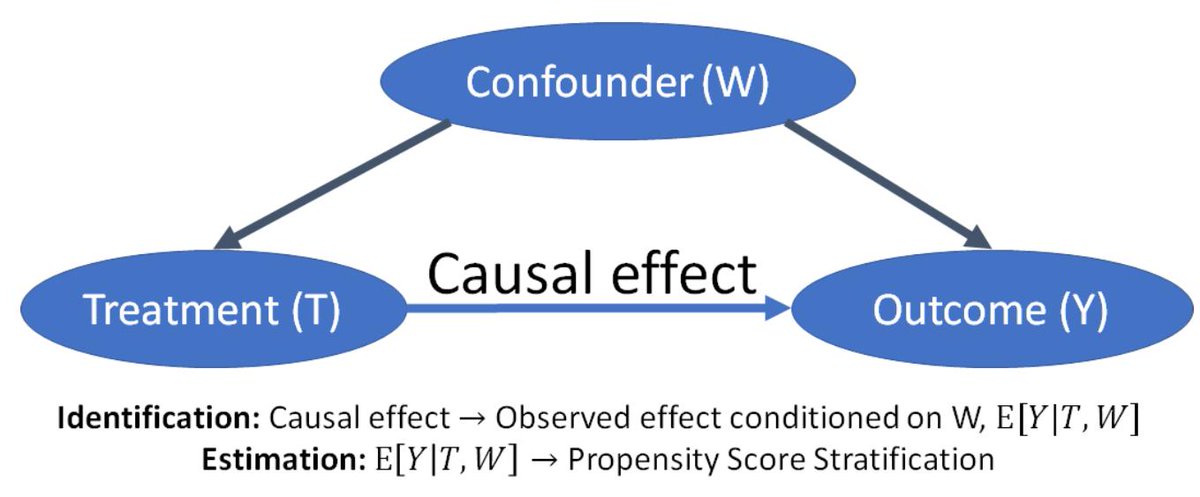

In a KDD tutorial with @emrek, we outline how you can use DAGs and potential outcomes together for causal analysis and discuss empirical examples. 2/7 causalinference.gitlab.io/kdd-tutorial/
