Causal Inference and Statistics in the Social/Health Sciences and Artificial Intelligence. Assistant Professor @uwstat @uw #causaltwitter #causalinference

How to get URL link on X (Twitter) App

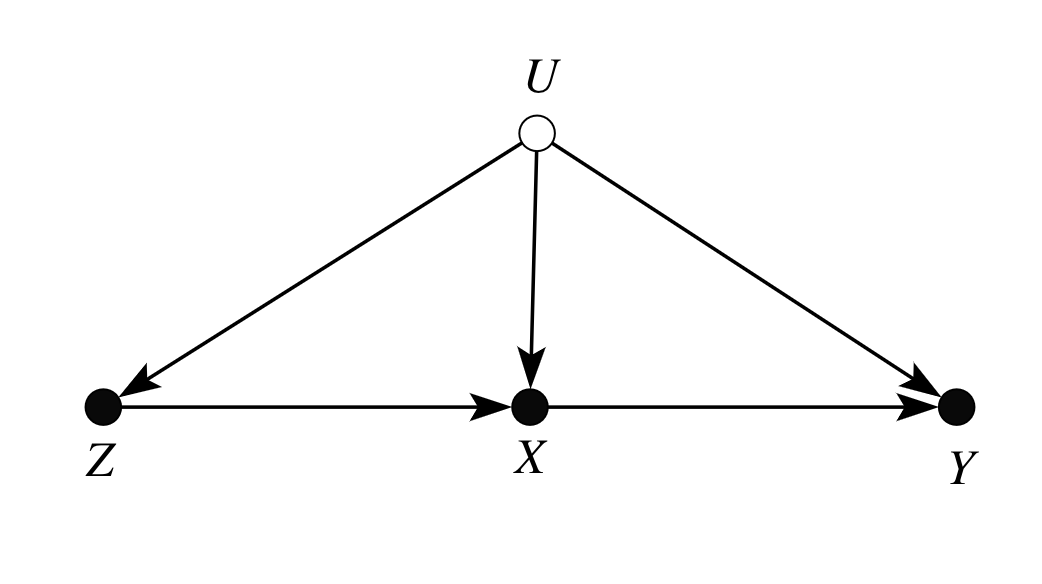

https://twitter.com/dade_us/status/1246905409530556417

(2/n) Those dashed arrows are used to indicate *dependent* error terms or, more substantively, latent (unobserved) common causes.
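To make the dashed-arrow reading concrete, here is a minimal simulation sketch, assuming a hypothetical linear model in which an unobserved U causes both X and Y, and X also causes Y. In a graph that omits U, its influence is folded into the error terms of X and Y, so those errors become correlated (the dashed arrow), and a naive regression of Y on X no longer recovers the causal coefficient. The function name and all parameter values are illustrative, not from the thread.

```python
import numpy as np


def simulate_latent_confounding(n=100_000, beta=1.0, seed=0):
    """Hypothetical linear model: U -> X, U -> Y, X -> Y, with U unobserved.

    Returns the correlation between the composite error terms of X and Y
    (which both absorb U) and the naive OLS slope of Y on X.
    """
    rng = np.random.default_rng(seed)
    u = rng.normal(size=n)              # latent common cause, never observed
    eps_x = u + rng.normal(size=n)      # error term of X, contains U
    eps_y = u + rng.normal(size=n)      # error term of Y, also contains U
    x = eps_x                           # X has no observed parents
    y = beta * x + eps_y                # true causal effect of X on Y is beta
    err_corr = np.corrcoef(eps_x, eps_y)[0, 1]
    naive_slope = np.cov(x, y)[0, 1] / np.var(x, ddof=1)
    return err_corr, naive_slope


err_corr, naive_slope = simulate_latent_confounding()
print(f"corr(error_X, error_Y) = {err_corr:.3f}")
print(f"naive slope = {naive_slope:.3f} (true effect = 1.0)")
```

With these settings the errors have correlation close to 0.5, and the naive slope lands near 1.5 rather than the true 1.0: the dependence between the error terms is exactly what biases the regression.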

https://twitter.com/duncanjwatts/status/1182322074628628481

(2/4) Would you then conclude that the "effects are too small to warrant policy change"? Surely not. The low "variance explained" is due to low variation in exposure, but the effect of an intervention could be huge. Thus, if screen time affects one's happiness substantially,
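The gap between "variance explained" and effect size can be checked with a small simulation. This is a sketch under assumed, hypothetical numbers (not screen-time data): Y = beta * X + noise, with a large effect beta but very little variation in the exposure X. In this linear model R² = beta² var(X) / (beta² var(X) + var(noise)), so R² shrinks as var(X) shrinks even though beta, the change in Y per unit intervention on X, stays the same.

```python
import numpy as np


def r2_vs_effect(beta=5.0, sd_x=0.05, sd_noise=1.0, n=200_000, seed=1):
    """Hypothetical linear model Y = beta * X + noise.

    Returns the estimated slope (the per-unit effect of X on Y) and the
    R^2 ("variance explained") of the regression of Y on X.
    """
    rng = np.random.default_rng(seed)
    x = rng.normal(scale=sd_x, size=n)                    # low-variation exposure
    y = beta * x + rng.normal(scale=sd_noise, size=n)     # large per-unit effect
    slope, _intercept = np.polyfit(x, y, 1)
    r2 = np.corrcoef(x, y)[0, 1] ** 2
    return slope, r2


for sd_x in (0.05, 1.0):
    slope, r2 = r2_vs_effect(sd_x=sd_x)
    print(f"sd_x={sd_x}: slope={slope:.2f}, R^2={r2:.3f}")
```

Both runs estimate a slope near 5, yet R² is roughly 0.06 when the exposure barely varies and above 0.9 when it varies widely. Only the spread of the exposure changed, not the effect of intervening on it.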

https://twitter.com/autoregress/status/1112882689814749185

...not only can you detect those testable implications immediately with the naked eye, but there are also efficient algorithms that find them automatically for you: both conditional independences and Verma-type constraints.
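As an illustration of reading conditional independences off a graph mechanically, here is a minimal sketch using the local Markov property of a fully observed DAG: each variable is independent of its non-descendants (other than its parents) given its parents. This is a simple swapped-in technique, not necessarily one of the efficient algorithms the tweet refers to, and it does not find Verma-type constraints, which arise in graphs with latent variables and need dedicated methods. The graph encoding, function names, and example DAG are hypothetical.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

# A DAG is encoded as {node: [parents]}; every node must appear as a key.
Dag = Dict[str, List[str]]


def descendants(dag: Dag, node: str) -> Set[str]:
    """Return all nodes reachable from `node` by following directed edges."""
    children: Dict[str, List[str]] = {v: [] for v in dag}
    for child, parents in dag.items():
        for p in parents:
            children[p].append(child)
    seen: Set[str] = set()
    stack = [node]
    while stack:
        for c in children[stack.pop()]:
            if c not in seen:
                seen.add(c)
                stack.append(c)
    return seen


def local_markov_implications(dag: Dag) -> List[Tuple[str, FrozenSet[str], FrozenSet[str]]]:
    """List (V, S, Pa) meaning V is independent of S given Pa(V).

    S is the set of non-descendants of V, excluding V and its parents.
    """
    implications = []
    for v in sorted(dag):
        parents = set(dag[v])
        others = set(dag) - {v} - parents - descendants(dag, v)
        if others:
            implications.append((v, frozenset(others), frozenset(parents)))
    return implications


# Hypothetical example graph: Z -> X -> M -> Y
dag = {"Z": [], "X": ["Z"], "M": ["X"], "Y": ["M"]}
for v, s, pa in local_markov_implications(dag):
    print(f"{v} _||_ {sorted(s)} | {sorted(pa)}")
```

For the chain Z -> X -> M -> Y this yields two testable implications, M independent of Z given X, and Y independent of {X, Z} given M; every other d-separation in the graph follows from this set.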