How to get URL link on X (Twitter) App

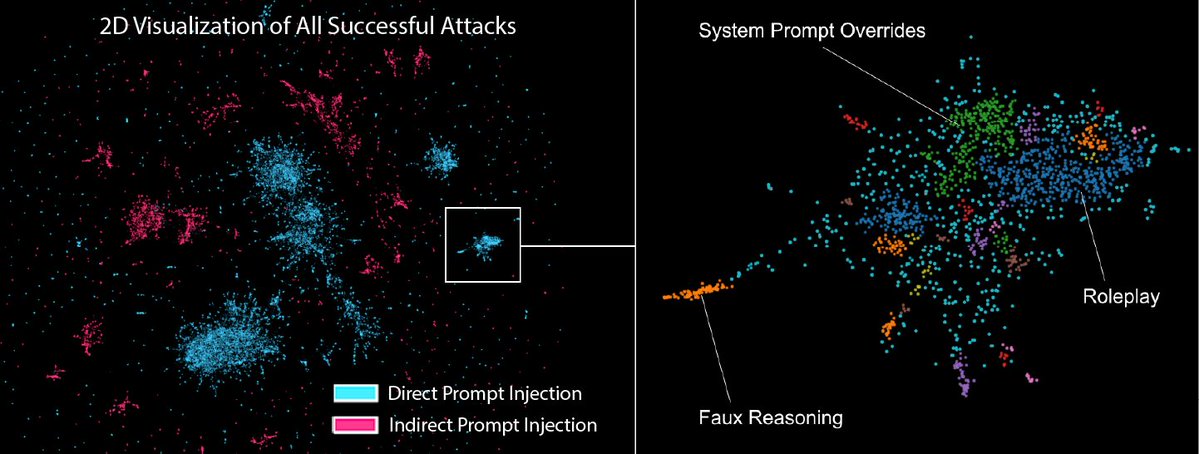

Huge thanks to @AISecurityInst , OpenAI, Anthropic, and Google DeepMind for sponsoring, and to UK and US AISI for judging. The competition was held in the @GraySwanAI Arena.

Huge thanks to @AISecurityInst , OpenAI, Anthropic, and Google DeepMind for sponsoring, and to UK and US AISI for judging. The competition was held in the @GraySwanAI Arena.

Much like brain scans such as PET and fMRI, we have devised a scanning technique called LAT to observe the brain activity of LLMs as they engage in processes related to *concepts* like truth and *activities* such as lying. Here’s what we found…

Much like brain scans such as PET and fMRI, we have devised a scanning technique called LAT to observe the brain activity of LLMs as they engage in processes related to *concepts* like truth and *activities* such as lying. Here’s what we found…

Claude-2 has an additional layer of safety filter. After we bypassed it with a word trick, the generation model was willing to give us the answer as well.

Claude-2 has an additional layer of safety filter. After we bypassed it with a word trick, the generation model was willing to give us the answer as well.