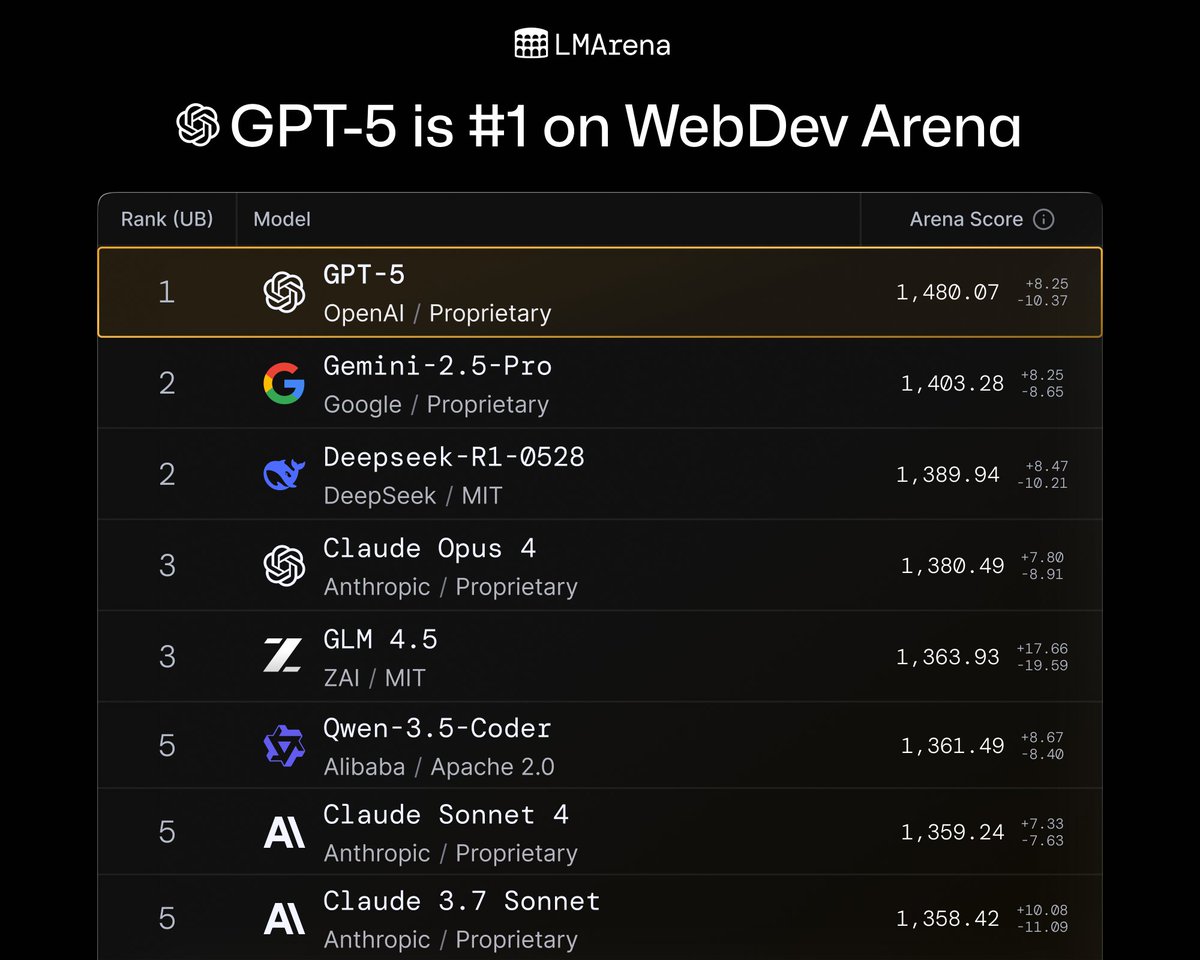

In WebDev Arena, GPT-5 sets a new record:

https://twitter.com/GoogleDeepMind/status/1930656243346976925

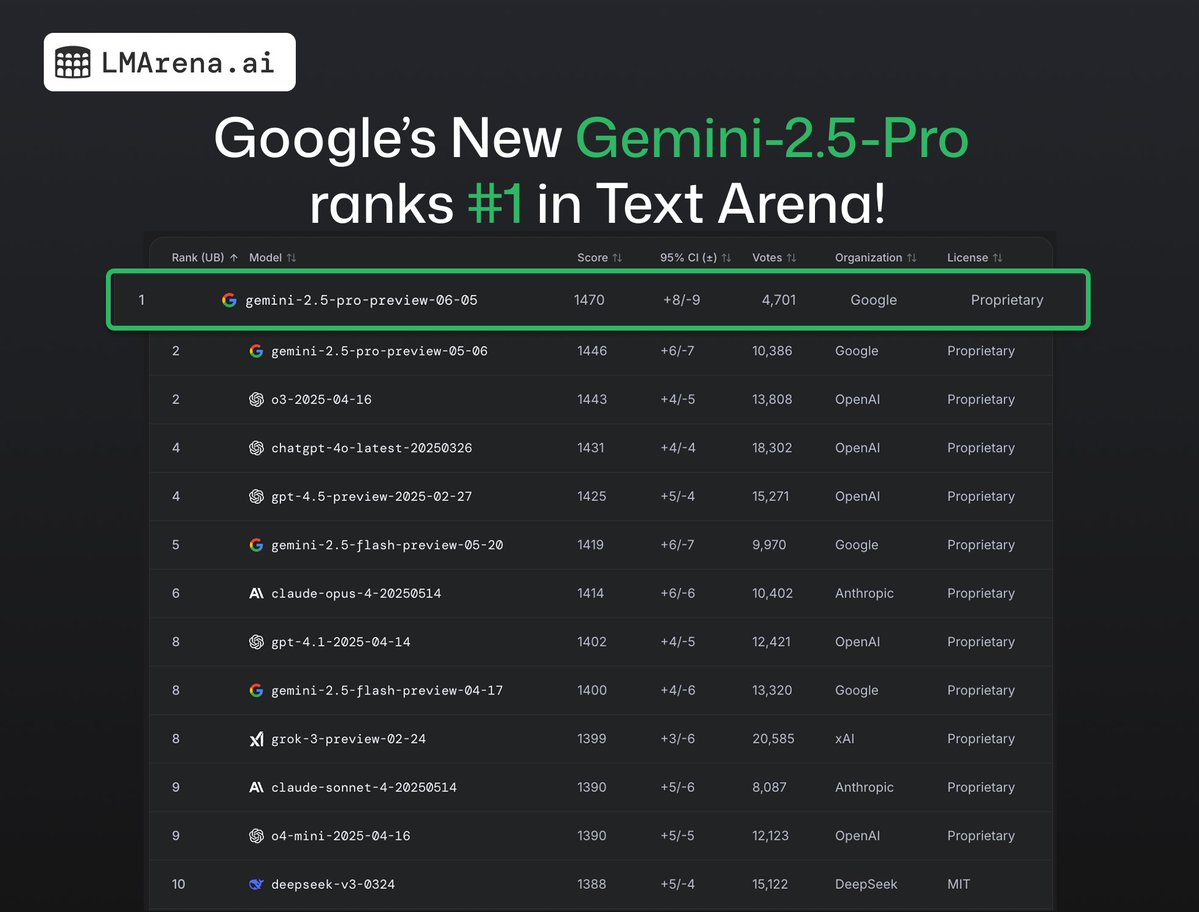

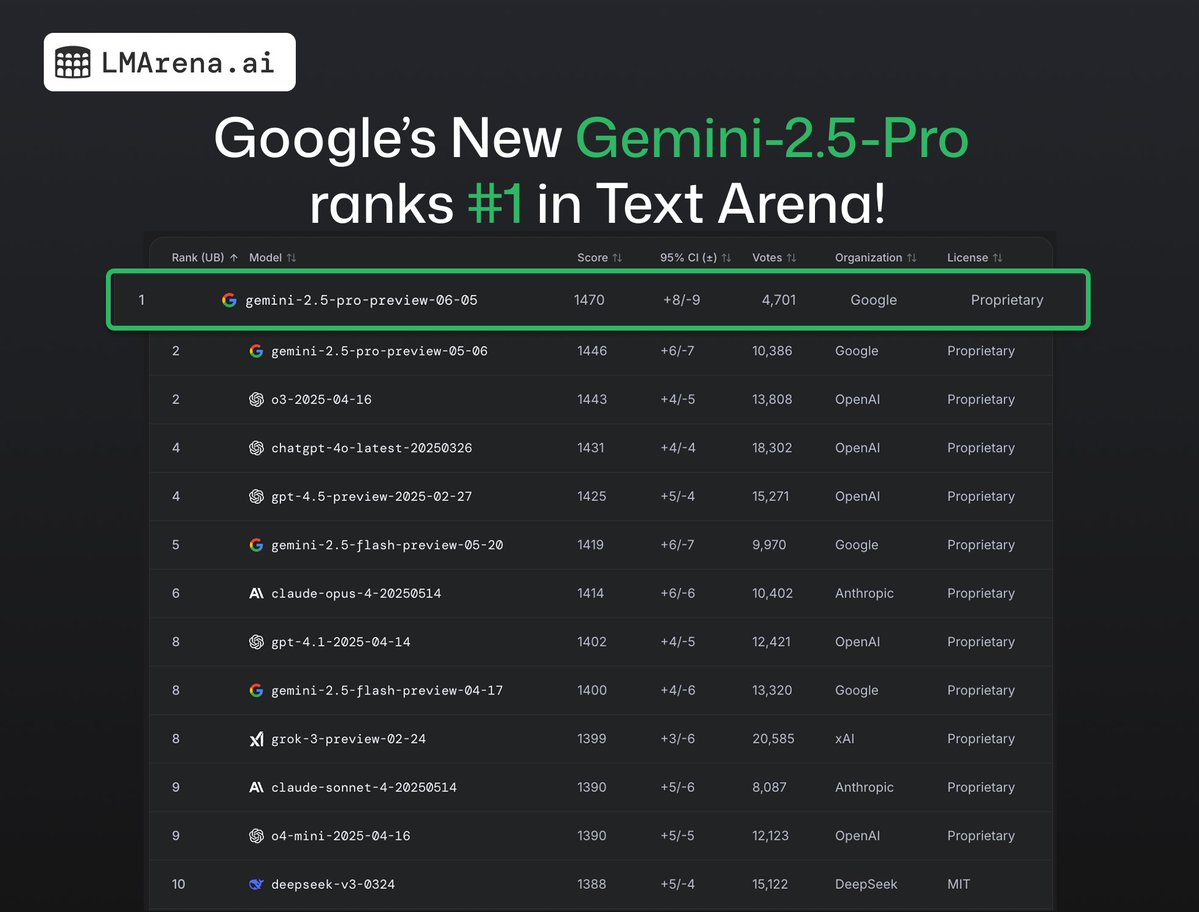

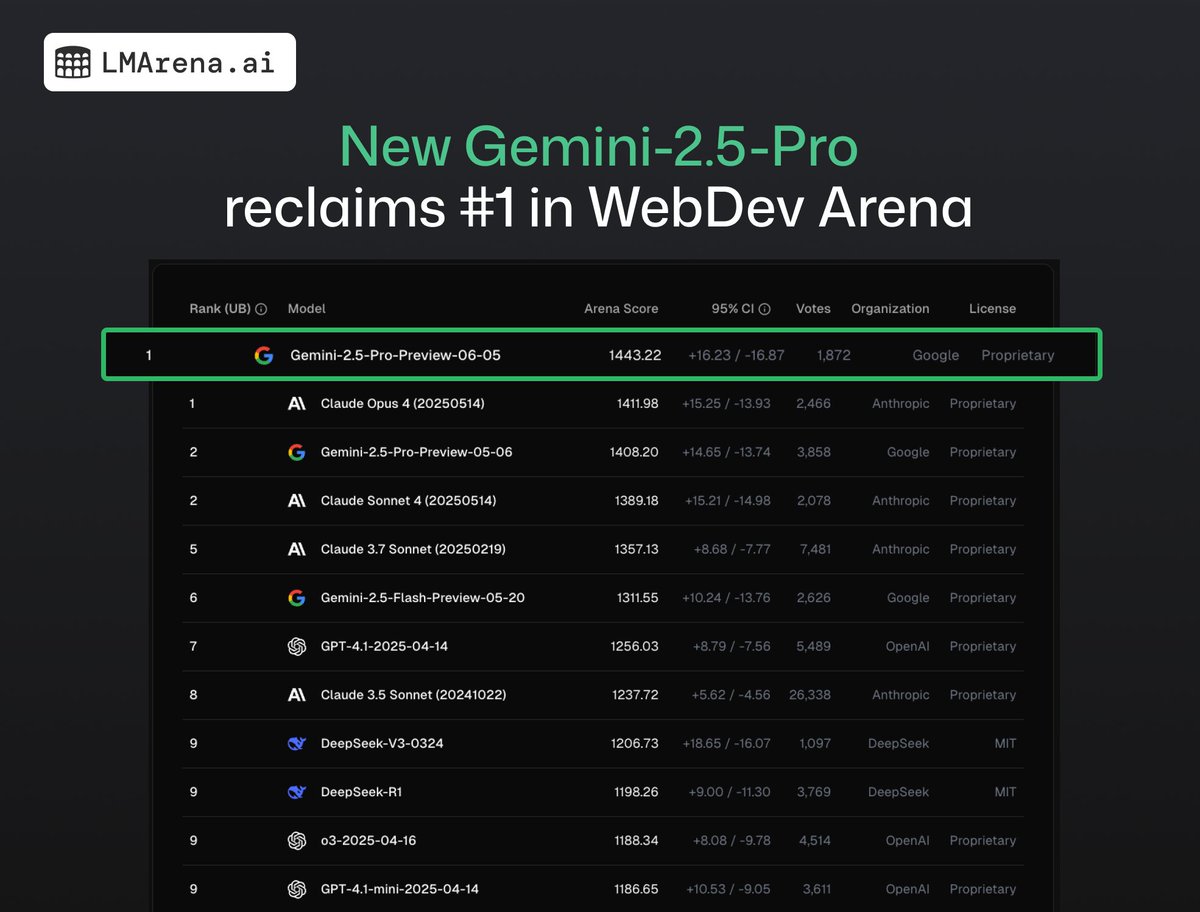

New Gemini-2.5-Pro (06-05) ranks #1 in WebDev Arena (+35 pts from previous 2.5 Pro)

New Gemini-2.5-Flash ranks #2 across major categories (Hard, Coding, Math)

We saw clear improvements over the previous ChatGPT-4o release:

Gemini 2.5 Pro ranks #1 across ALL categories: it is tied for #1 with Grok-3/GPT-4.5 in Hard Prompts and Coding, and edges out the competition in all other categories to take the lead 🏇🏆

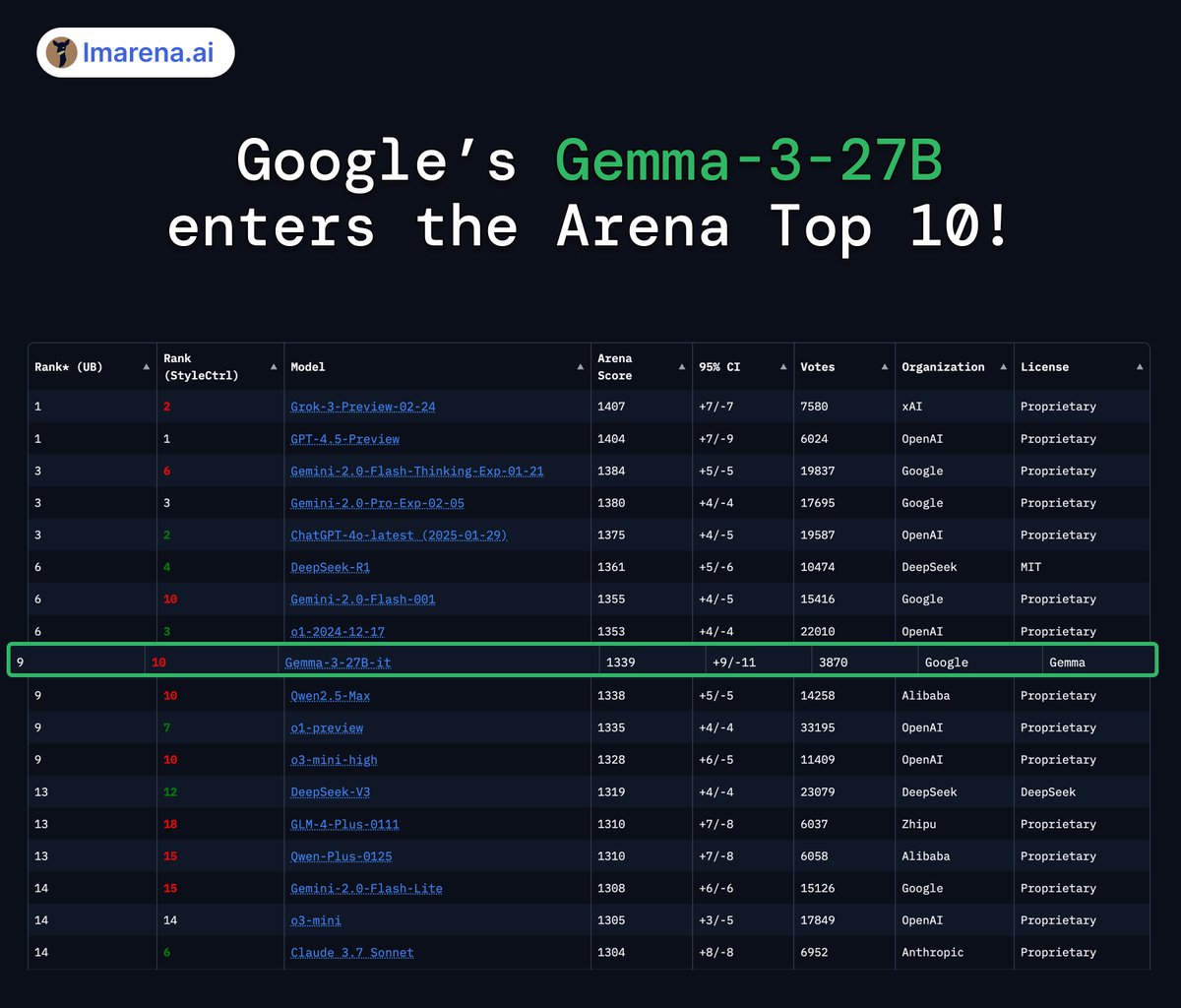

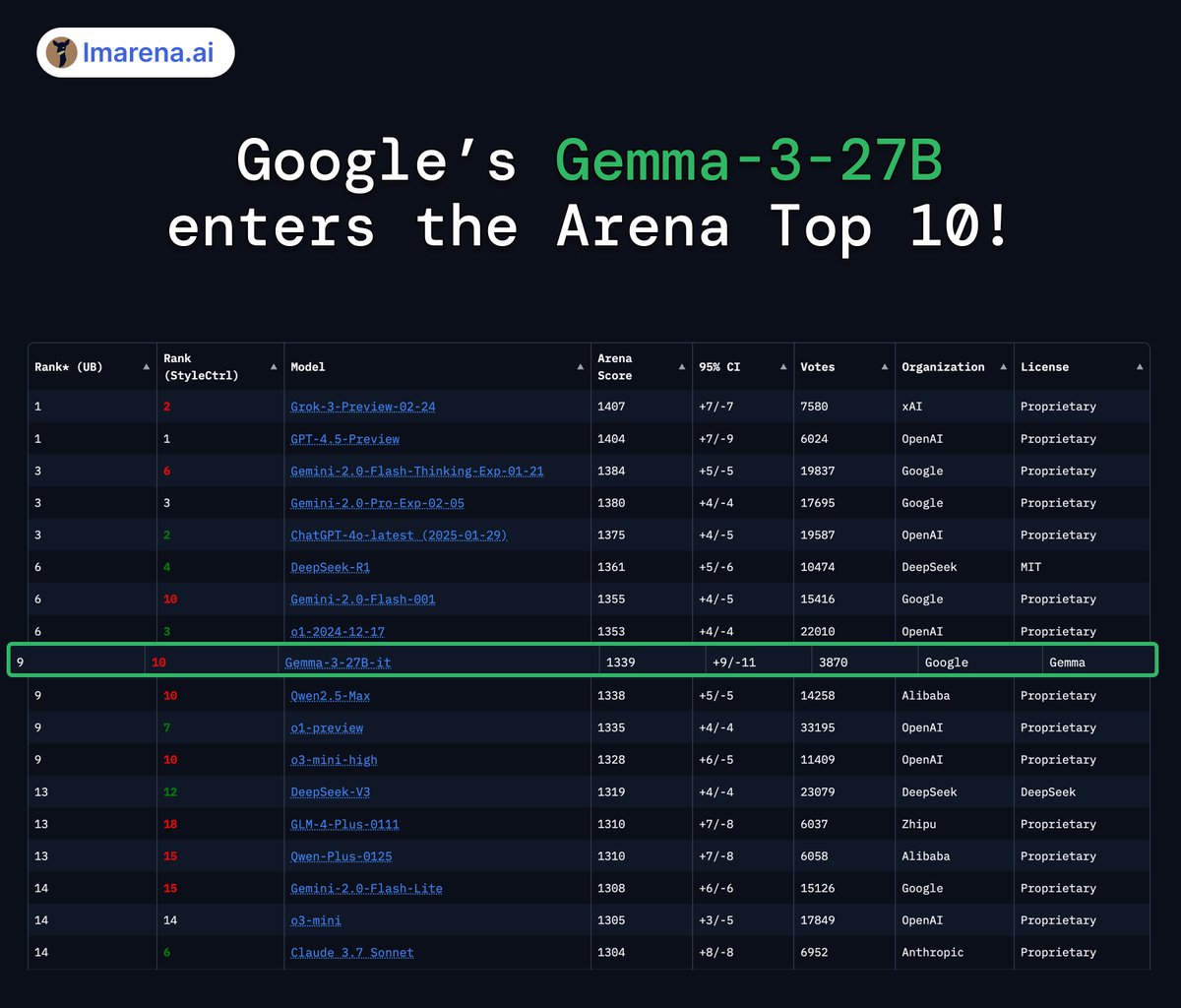

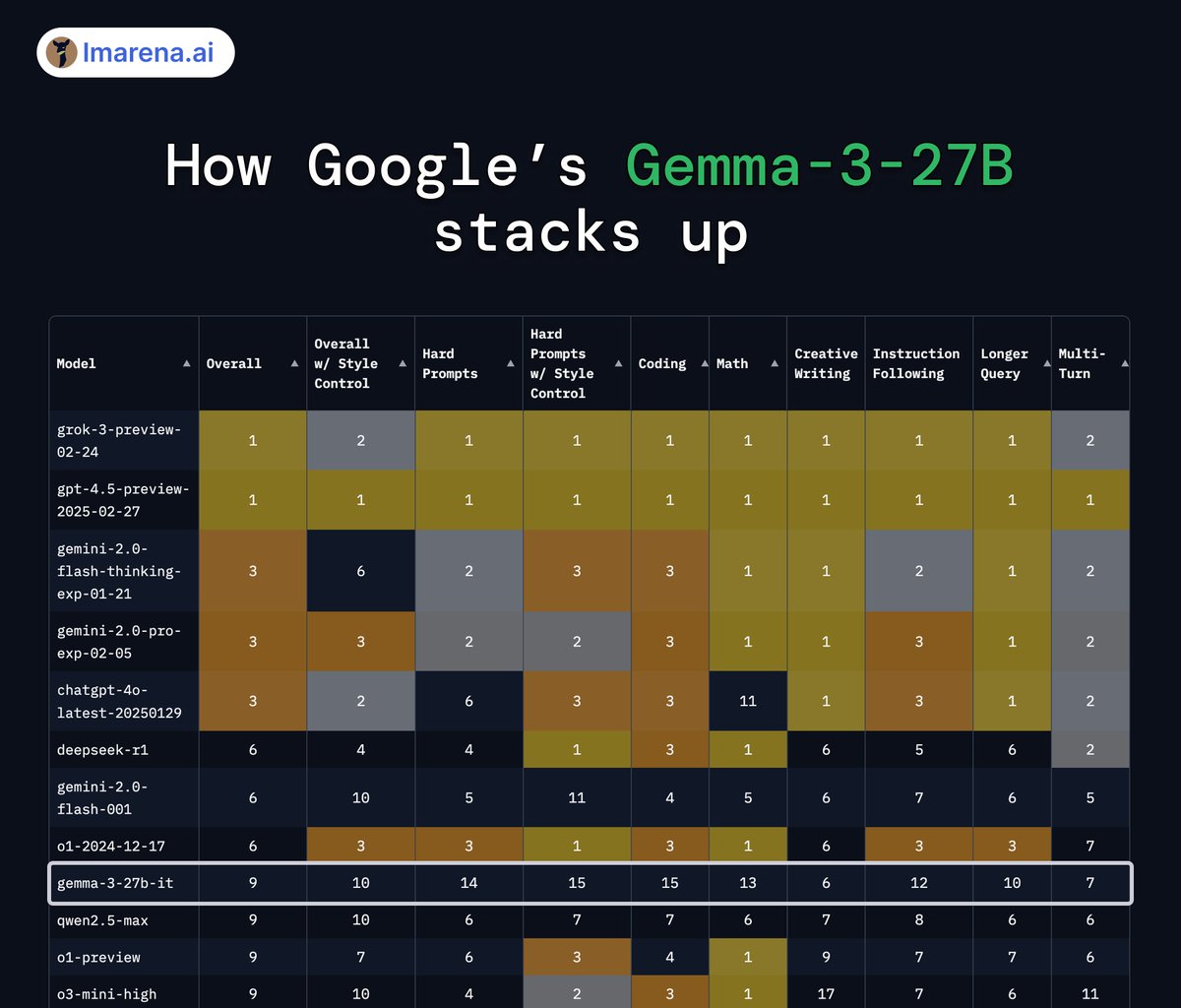

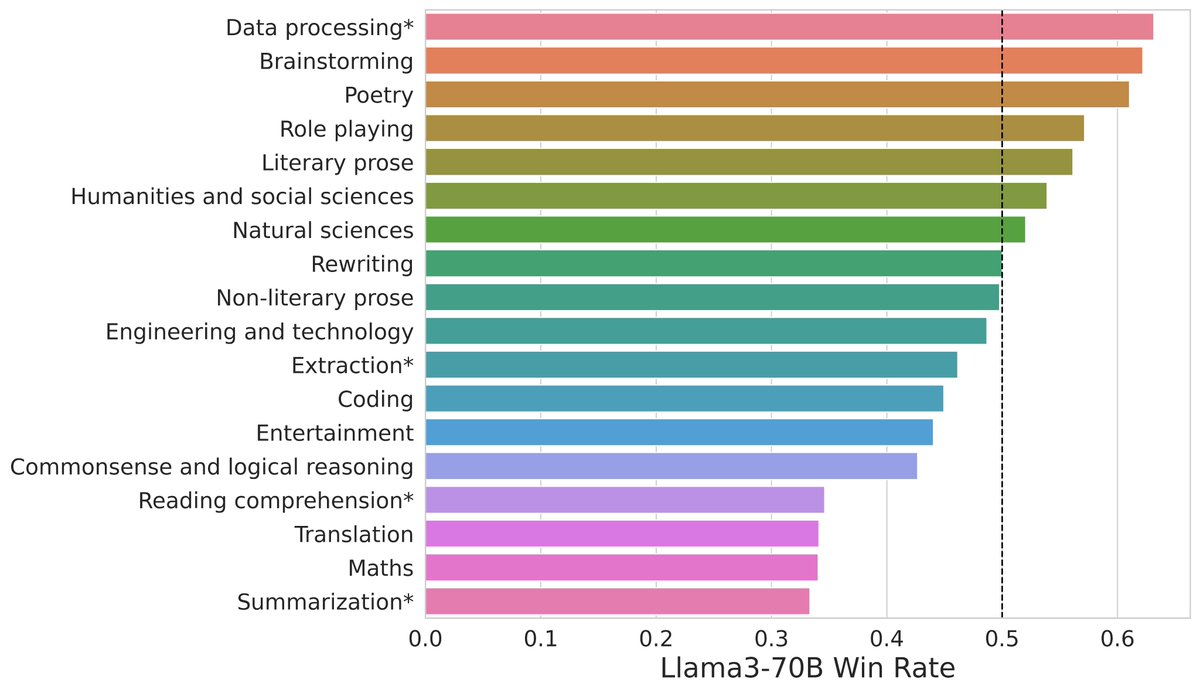

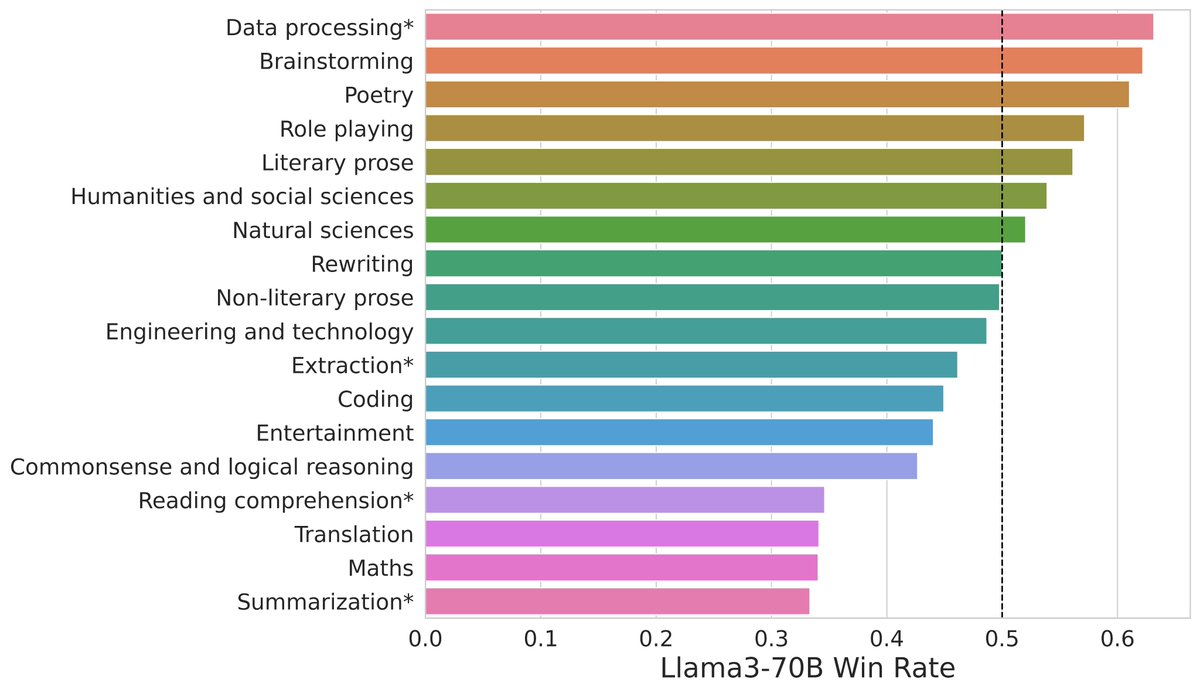

How does Gemma-3 stack up across different categories?

Here you can see @xai Grok-3’s performance across all the top categories:

You can select organizations to highlight their models in the Arena-Price Plot.

In Hard Prompts with Style Control, DeepSeek-R1 ranked joint #1 with o1.

The Overall leaderboard combines both User Prompts and Pre-generated Prompts; we provide a breakdown of each as a separate category.

The latest ChatGPT-4o remains #1 with Style Control and shows improvement across the board.

Gemini-Exp-1114 is joint #1 in Math Arena, matching o1's performance!

Here are our main takeaways from the leaderboard:

Link:

We’re rolling out a new "Overview" feature for the leaderboard. @xAI's Grok-2 stands out, ranking at the top across all categories: Math, Hard Prompts, Coding, and Instruction-following.

Gemini 1.5 Pro (Experimental 0801) #1 on Vision Leaderboard.

With public data from Chatbot Arena, we trained four different routers using data augmentation techniques to significantly improve router performance.

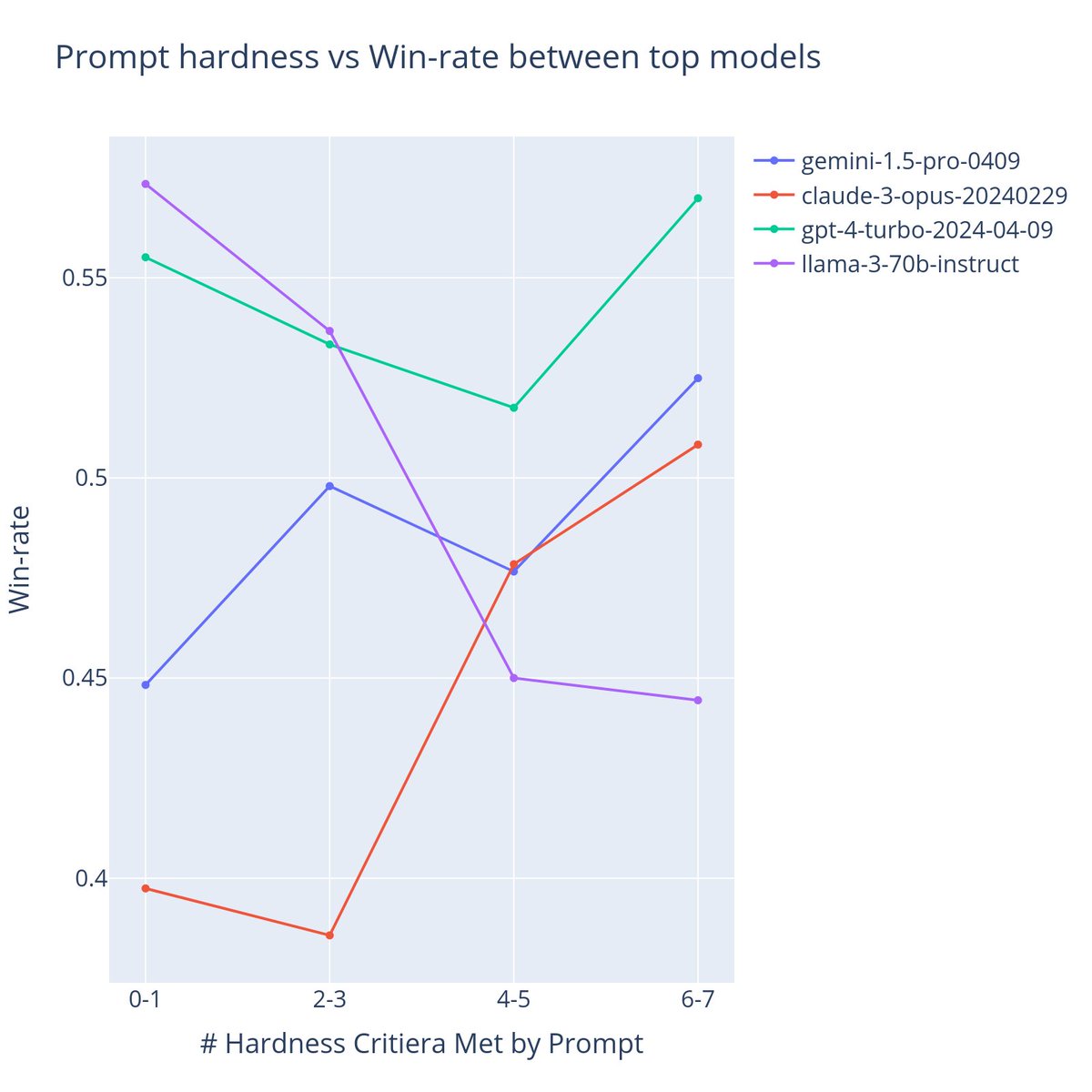

2. As prompts get more challenging*, the gap between Llama 3 and top-tier models grows larger.