How to get URL link on X (Twitter) App

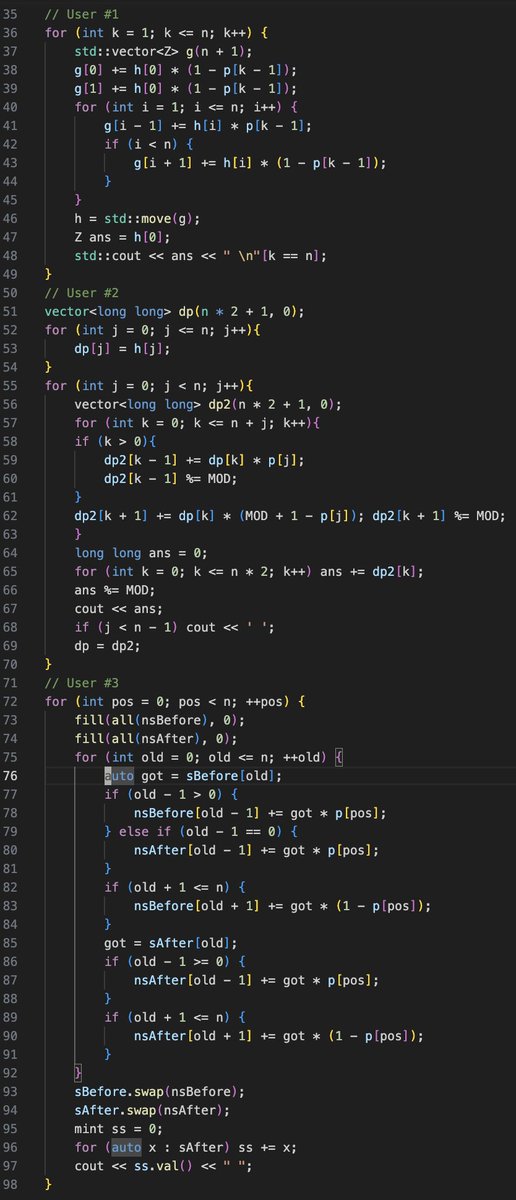

I am somewhat concerned about data leakage, see . This is an AlphaCode2 contributor's response https://twitter.com/cHHillee/status/1732636161204760863

https://twitter.com/RemiLeblond/status/1732677521290789235

It's admittedly a bit difficult to know for sure since DP problems do sometimes tend to have more formulaic solutions.

https://twitter.com/cHHillee/status/1635692008877727745

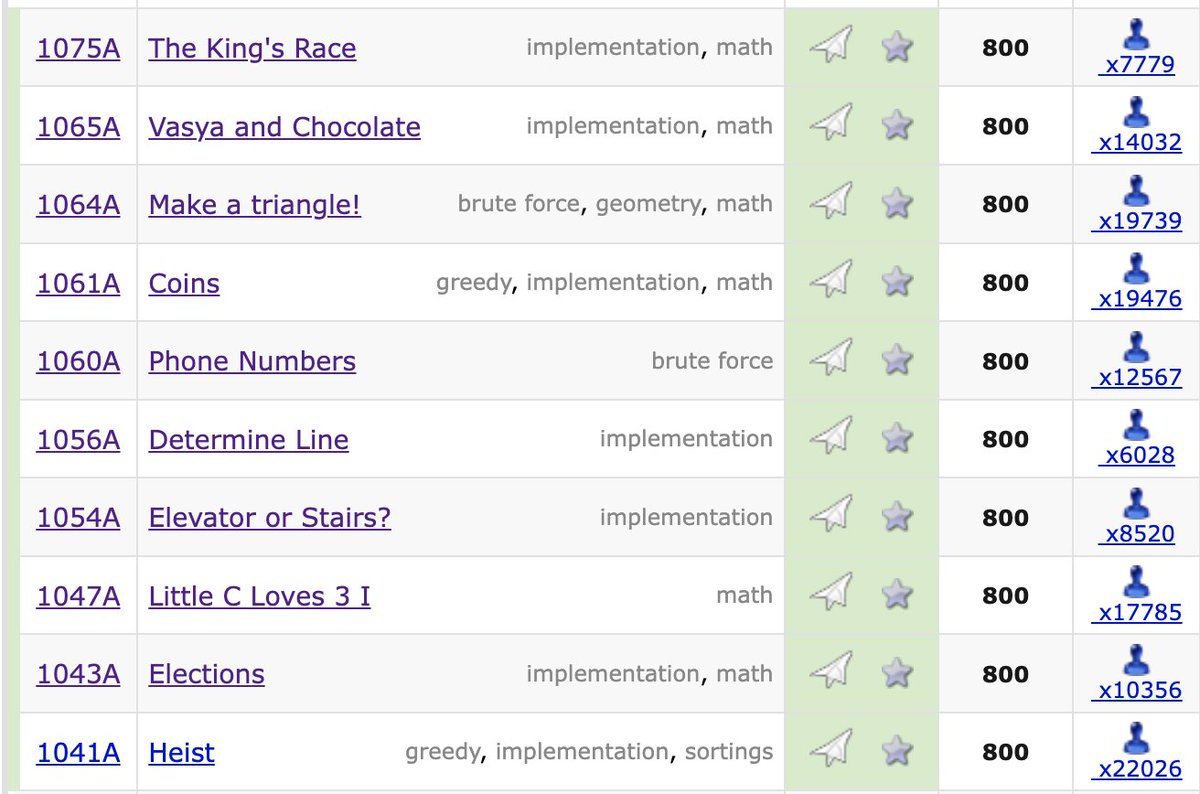

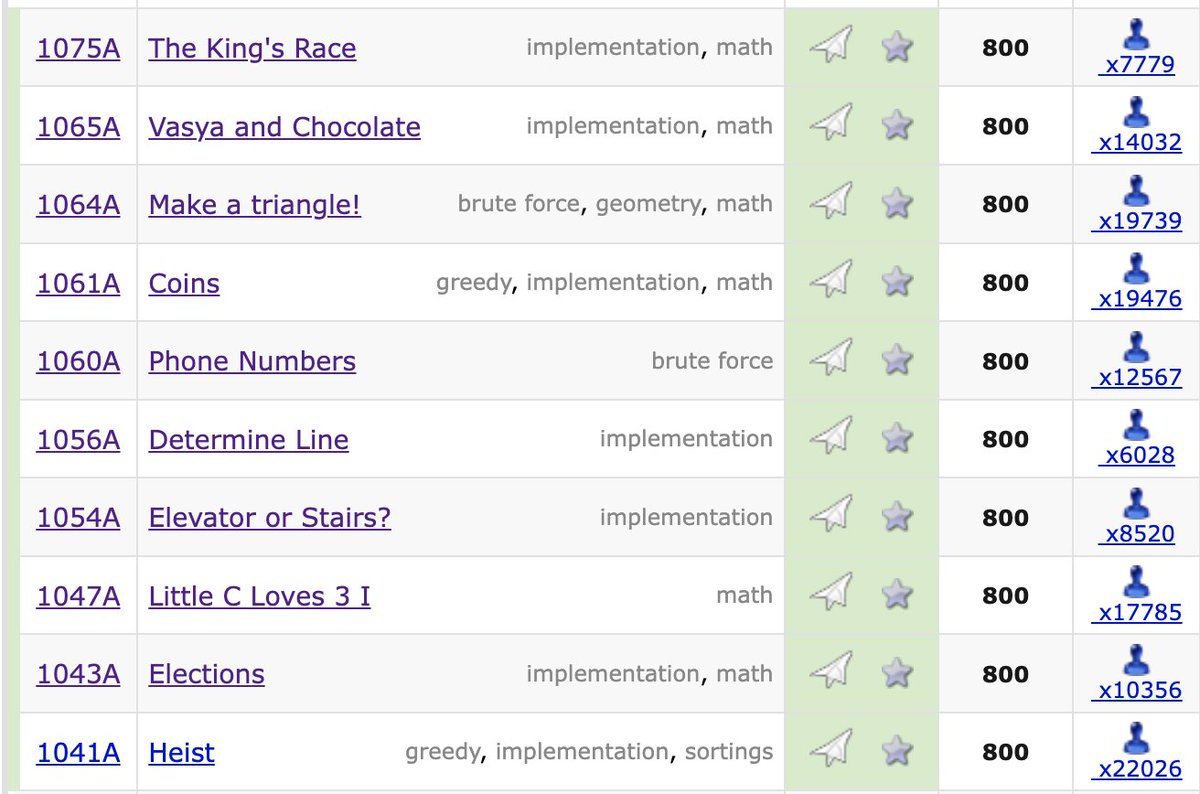

800-rated problems are the easiest problems on Codeforces, and their ratings are determined automatically from the ratings of the people who solve them during the contest. Thus, I would expect these problems to be of roughly "equal" difficulty, and my spot check agrees.

https://twitter.com/karpathy/status/1621578354024677377

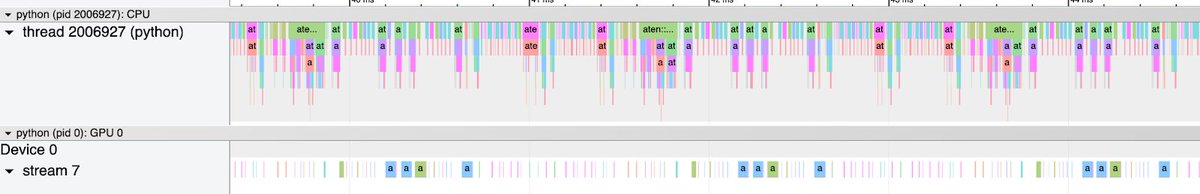

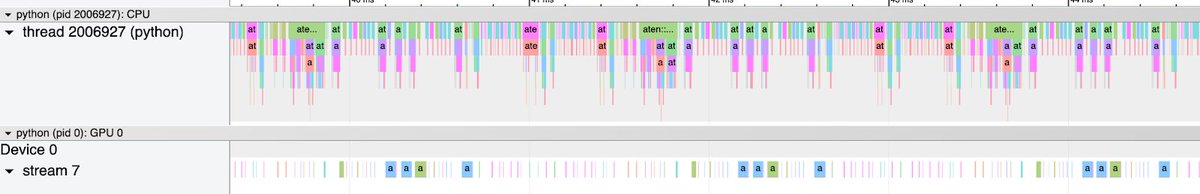

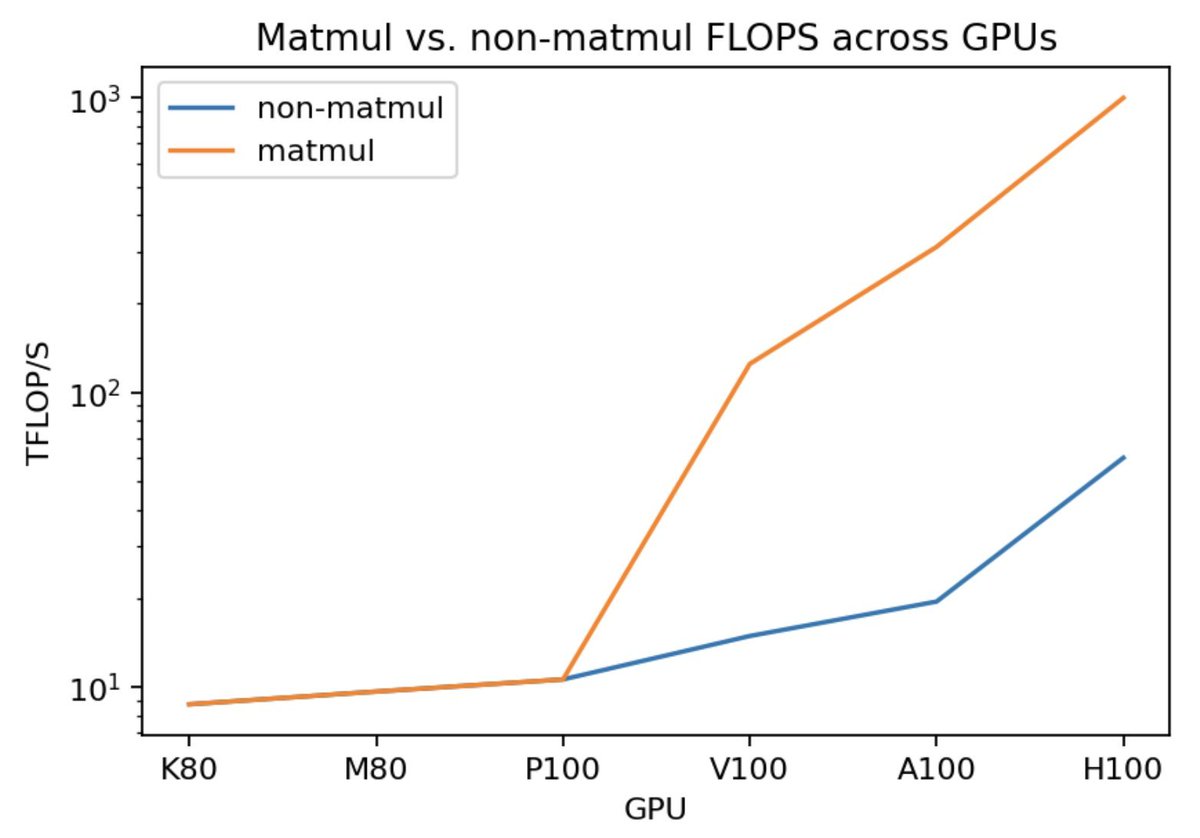

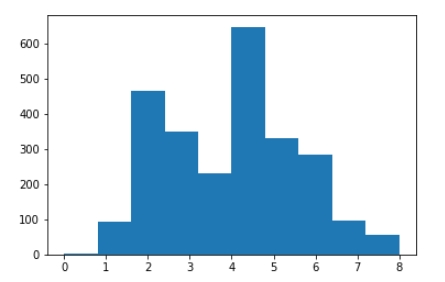

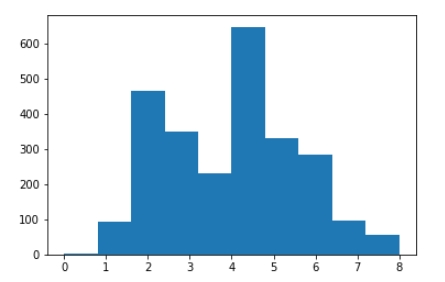

Three concepts are needed to explain the above graph: compute intensity, tiling, and wave quantization.
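As a rough illustration of two of these terms, here is a minimal sketch of compute intensity and wave quantization for a square matmul. The numbers are assumptions, not taken from the graph: a 128x128 output tile, 108 SMs (the count on an A100), one tile per SM at a time, and every matrix crossing the memory bus exactly once. The function names are hypothetical.

```python
import math


def arithmetic_intensity(n: int, bytes_per_elem: int = 2) -> float:
    """FLOPs per byte for an (n x n) @ (n x n) matmul.

    Idealized: assumes A and B are each read once and C is written once.
    """
    flops = 2 * n**3                          # one multiply and one add per term
    bytes_moved = 3 * n * n * bytes_per_elem  # read A, read B, write C
    return flops / bytes_moved


def waves(m: int, n: int, tile: int = 128, num_sms: int = 108):
    """Return (number of waves, fraction of SM slots doing useful work).

    Assumes each SM processes one output tile at a time, so the tiles are
    executed in ceil(tiles / num_sms) rounds ("waves").
    """
    tiles = math.ceil(m / tile) * math.ceil(n / tile)
    num_waves = math.ceil(tiles / num_sms)
    utilization = tiles / (num_waves * num_sms)
    return num_waves, utilization


if __name__ == "__main__":
    print(arithmetic_intensity(1024))  # FLOPs per byte at fp16
    print(waves(1792, 1792))           # 14 * 14 = 196 tiles
    print(waves(1920, 1920))           # 15 * 15 = 225 tiles
```

Under these assumptions, growing the matrix from 1792 to 1920 pushes the tile count from 196 to 225, which no longer fits in two waves of 108, so a third, mostly empty wave is needed. Real kernels pick tile sizes and occupancy differently, so treat this as a sketch of the effect rather than a performance model.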

To explain tiling, we first need to understand hardware memory accesses. Memory doesn't transfer elements one at a time - it transfers large "chunks". That is, even if you only need one element, the GPU will load that element... and the 31 elements next to it.
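To make the "chunk" idea concrete, here is a small sketch that counts how many 32-element chunks a set of element indices touches. It assumes chunks are aligned to multiples of 32 elements, and the helper names are hypothetical; it models only the counting, not real GPU behavior.

```python
def chunks_loaded(indices, chunk_elems=32):
    """Number of distinct aligned chunks touched by the given element indices."""
    return len({i // chunk_elems for i in indices})


def load_efficiency(indices, chunk_elems=32):
    """Fraction of the loaded elements that were actually requested."""
    wanted = set(indices)
    loaded = chunks_loaded(wanted, chunk_elems) * chunk_elems
    return len(wanted) / loaded


if __name__ == "__main__":
    contiguous = range(32)             # 32 neighbouring elements
    strided = range(0, 32 * 32, 32)    # 32 elements, each 32 apart
    print(chunks_loaded(contiguous), load_efficiency(contiguous))
    print(chunks_loaded(strided), load_efficiency(strided))
```

Both access patterns request 32 elements, but the contiguous one touches a single chunk while the strided one touches 32 chunks and uses only one element from each.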

Let's look at a simple example - resnet18 inference with a single image. We see that we achieve about 2ms latency - not great for this model.

https://twitter.com/stephenroller/status/1579993017234382849 (2/7)

Tensor cores, put simply, are "hardware hard-coded for matrix multiplication".

In neural networks, matmuls usually take up >99% of the computational cost. Everything else is a rounding error.
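A back-of-the-envelope FLOP count shows why this tends to hold for wide layers. The sketch below assumes a hypothetical two-layer MLP block (width `d_model`, hidden width `4 * d_model`) and a guessed cost of 8 FLOPs per activation element; neither figure comes from the original thread.

```python
def mlp_block_flops(tokens, d_model, expansion=4, flops_per_activation=8):
    """Return (matmul FLOPs, elementwise FLOPs) for one hypothetical MLP block."""
    d_hidden = expansion * d_model
    # Two linear layers (d_model -> d_hidden -> d_model), 2 FLOPs per multiply-add.
    matmul = 2 * (2 * tokens * d_model * d_hidden)
    # One activation applied to every hidden element (cost per element is assumed).
    elementwise = flops_per_activation * tokens * d_hidden
    return matmul, elementwise


def matmul_fraction(tokens, d_model, **kwargs):
    """Share of the block's FLOPs spent in matmuls."""
    matmul, elementwise = mlp_block_flops(tokens, d_model, **kwargs)
    return matmul / (matmul + elementwise)


if __name__ == "__main__":
    for d_model in (64, 1024, 4096):
        print(d_model, matmul_fraction(tokens=2048, d_model=d_model))
```

With these assumed costs the matmul share works out to `d_model / (d_model + 2)`, so it is independent of the token count and approaches 100% as the layer gets wider. FLOP share is not the same as runtime share, since elementwise ops are often limited by memory bandwidth rather than compute.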

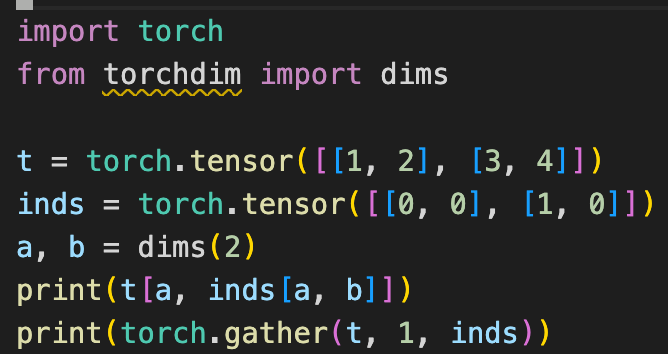

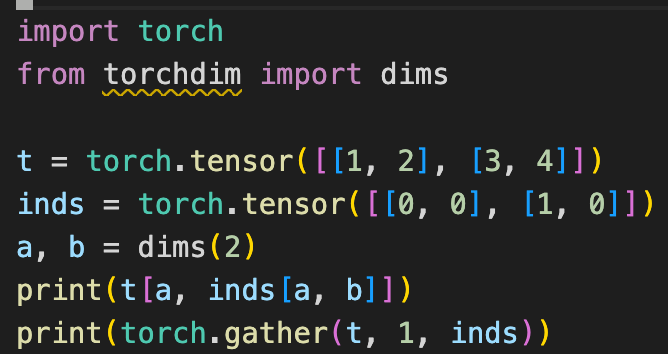

https://twitter.com/Zachary_DeVito/status/1541477000015073280

Here's a bunch of examples I found cool.

https://twitter.com/ch402/status/1539774943214178304

@iclr_conf For experience: