Reverse engineering neural networks at @AnthropicAI. Previously @distillpub, OpenAI Clarity Team, Google Brain. Personal account.

https://twitter.com/AnthropicAI/status/1905303835892990278
(1) After a long side quest, we're getting closer to circuits.

https://twitter.com/kchonyc/status/1870563085796184131
As @kchonyc observes, the deep learning job market was in a very strange place circa 2014. Deep learning went from being very obscure in 2012 (probably <<100 people working on it seriously) to being very in demand.

https://twitter.com/AnthropicAI/status/1792935506587656625
I've been working on interpretability for more than a decade, significantly motivated by concerns about safety. But it's always been this aspirational goal – I could tell a story for how this work might someday help, but it was far off.

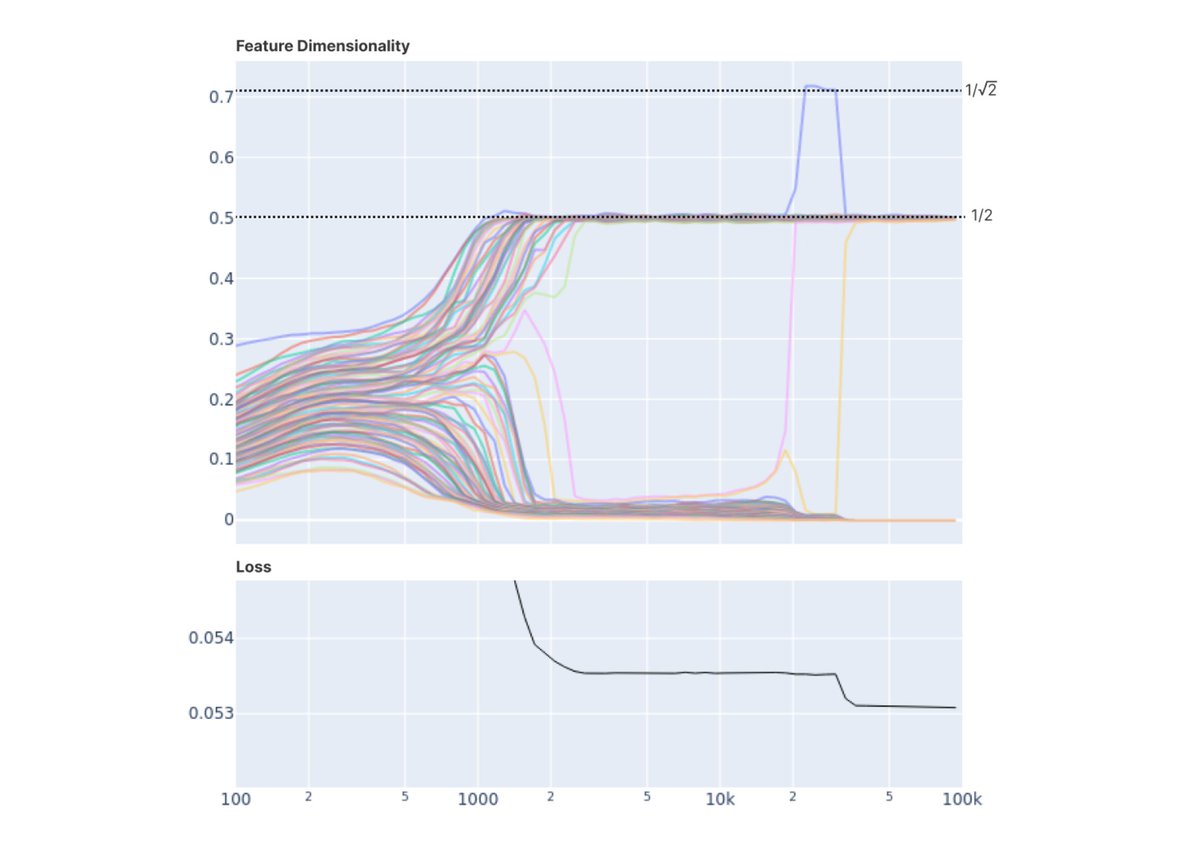

https://twitter.com/AnthropicAI/status/1709986949711200722
Well-trained sparse autoencoders (scale matters!) can decompose a one-layer model into very nice, interpretable features.

A lot of AI safety discourse focuses on very specific models of AI and AI safety. These are interesting, but I don't know how I could be confident in any one.

https://twitter.com/AToliasLab/status/1659236747618680836

When we were working on vision models, we repeatedly found that the features we discovered, such as curve detectors (distill.pub/2020/circuits/…) and multimodal neurons (distill.pub/2021/multimoda…), had existing parallels in neuroscience.

https://twitter.com/zeeweeding/status/1638257954398011394
(Caveat that I'm not super well read in neuroscience. When working on high-low frequency detectors, we asked a number of neuroscientists if they'd seen such neurons before, and they hadn't. But it's very possible something was missed!)

I ultimately lean towards caution, but I empathize with all three of these stances.

https://twitter.com/AnthropicAI/status/1611045993516249088
Historically, I've often been tempted to think of overfit models as pathological things that need to be trained better before we can interpret them.

https://twitter.com/NeelNanda5/status/1609283649119322114
For background, there's a famous series of math books titled "Counterexamples in X" (e.g. "Counterexamples in Topology"), which offer examples of mathematical objects with unusual properties.

This blog has a much more detailed discussion of edible plants in different clades: botanistinthekitchen.blog/the-plant-food…

https://twitter.com/AnthropicAI/status/1570087876053942272
Firstly: Superposition by itself is utterly wild if you take it seriously.

https://twitter.com/ch402/status/1564631228166201345
(More on this below, but please don't let this thread discourage you from reaching out if you think we might be a good fit! I intend to respond to every sincere message I receive, and if we aren't a fit, I'll try to think about whether I know someone who's a better match for you.)

Before diving in – I normally try to tweet about research or things of broad interest, since I know people don’t follow me for my personal life. This thread is really important to me, but apologies for the topic change if it’s unwelcome.

https://twitter.com/AnthropicAI/status/1541468008249364481
Our team's goal is to reverse engineer neural networks into human-understandable computer programs – see our work at transformer-circuits.pub and some previous work we're inspired by at distill.pub/2020/circuits/.

https://twitter.com/banburismus_/status/1532747777280593920
I used to really want ML to be about complex math and clever proofs. But I've gradually come to think this is really the wrong aesthetic to bring.

https://twitter.com/saykay/status/1480022995393413120
From the related work section of Habbema et al. (ncbi.nlm.nih.gov/pmc/articles/P…):