gpu enjoyer at @modal. he/him.

ex @full_stack_dl, @weights_biases (acq. @CoreWeave), phd Berkeley @Redwood_Neuro.

try https://t.co/SYWVMCb7OB

FYI this thread is a summary of a blog post -- head there for a lot more detail!

The heart of the CUDA stack, IMO, is not anything named CUDA: it’s the humble Parallel Thread eXecution instruction set architecture, the compilation target of the CUDA compiler and the only stable interface to GPU hardware.

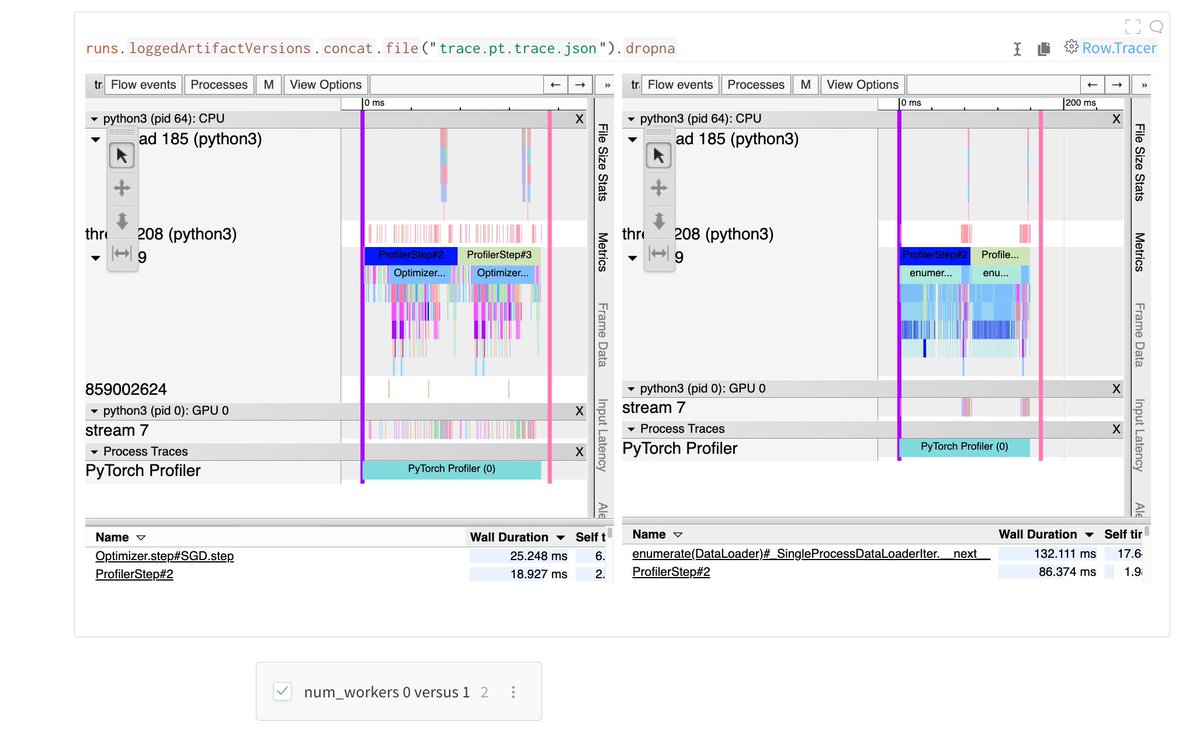

Details of our work and repro code on the Modal blog.

https://twitter.com/npew/status/1598016510588354560

(please do not park your car on a volcano, even if you have an e-brake)

context: A100s are beefy GPUs, and they have enough VRAM to comfortably train models, like Stable Diffusion, that generate images from text

Each report was a post-hoc meta-analysis of post-mortem analyses: which "root causes" come up most often? Which take the most time to resolve?
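The two questions above boil down to a group-by over post-mortem records. Here's a minimal sketch, assuming each post-mortem has been reduced to a (root cause, hours to resolve) pair; the records and cause labels below are made up for illustration, and using the median for "most time to resolve" is my choice, not necessarily what the reports did.

```python
from collections import Counter, defaultdict
from statistics import median

# Hypothetical post-mortem records: (root cause, hours to resolve). Illustrative only.
postmortems = [
    ("config change", 2.0),
    ("dependency failure", 5.5),
    ("config change", 1.0),
    ("capacity", 8.0),
    ("dependency failure", 3.5),
    ("config change", 4.0),
]


def summarize(records):
    """Rank root causes by how often they occur and by median time to resolve."""
    counts = Counter(cause for cause, _ in records)
    durations = defaultdict(list)
    for cause, hours in records:
        durations[cause].append(hours)
    # Sort causes by median resolution time, slowest first.
    by_time = sorted(((median(h), c) for c, h in durations.items()), reverse=True)
    return counts.most_common(), [(c, m) for m, c in by_time]


most_common, slowest = summarize(postmortems)
print("most frequent:", most_common)
print("slowest (median hours):", slowest)
```

Frequency and severity can disagree: in this toy data the most common cause is the quickest to fix, which is exactly why it's worth asking both questions.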

this follows the format of really successful MLC interest groups in e.g. NLP (notion.so/MLC-NLP-Paper-…) and Computer Vision (notion.so/MLC-Computer-V…)

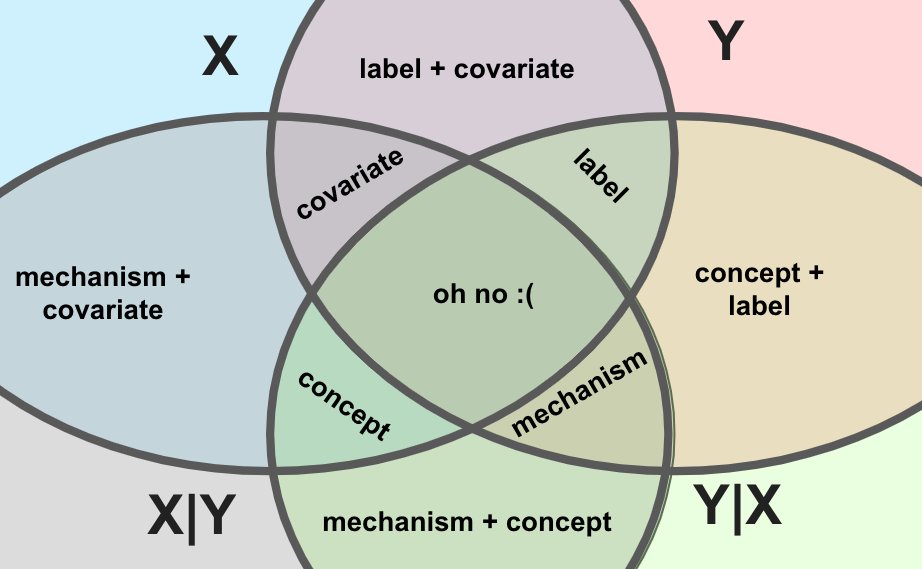

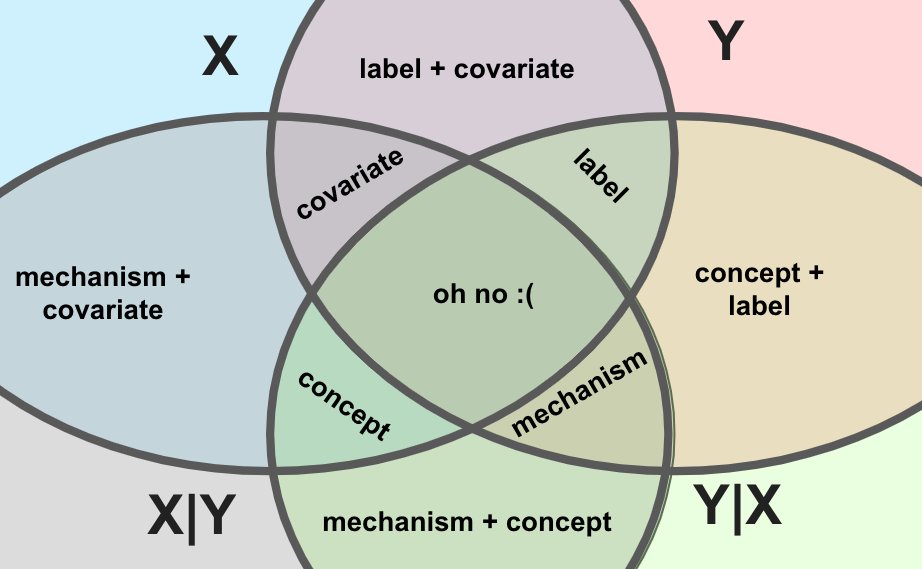

https://twitter.com/james_y_zou/status/1498677901897654280

scene: data in real life is non-stationary, meaning P(X,Y) changes over time.
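A toy simulation makes "P(X,Y) changes over time" concrete. This is a minimal sketch, assuming a made-up distribution where X ~ N(0, 1) stays fixed but the labeling rule Y = 1 if X > 0.5·t drifts with time t (so only P(Y|X) moves, one special case of P(X,Y) changing). A threshold classifier fit at t=0 looks perfect on fresh t=0 data and then degrades at t=2.

```python
import random


def sample(t, n, rng):
    """Draw n (x, y) pairs from a toy P_t(X, Y).

    Hypothetical setup: X ~ N(0, 1) at every time step, but the labeling rule
    drifts, Y = 1 if X > 0.5 * t. So P(Y | X), and hence P(X, Y), depends on t.
    """
    data = []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)
        data.append((x, int(x > 0.5 * t)))
    return data


def fit_threshold(data):
    """Fit a 1-D threshold classifier on separable data: midpoint between classes."""
    max_neg = max(x for x, y in data if y == 0)
    min_pos = min(x for x, y in data if y == 1)
    return (max_neg + min_pos) / 2


def accuracy(threshold, data):
    """Fraction of points where the predicted label (x > threshold) matches y."""
    return sum(int(x > threshold) == y for x, y in data) / len(data)


rng = random.Random(0)
threshold = fit_threshold(sample(t=0, n=1000, rng=rng))  # "train" at t=0
acc_t0 = accuracy(threshold, sample(t=0, n=1000, rng=rng))  # same distribution
acc_t2 = accuracy(threshold, sample(t=2, n=1000, rng=rng))  # after drift
print(f"accuracy at t=0: {acc_t0:.2f}, at t=2: {acc_t2:.2f}")
```

At t=2 the model mislabels every x between 0 and 1, roughly a third of the mass of a standard normal, even though the inputs themselves look exactly like the training inputs.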

https://twitter.com/chipro/status/1490924046350909442

(inb4 pedantry: the above diagram is an Euler diagram, not a Venn diagram, meaning not all possible joins are represented. that is good, actually, for reasons to be revealed!)

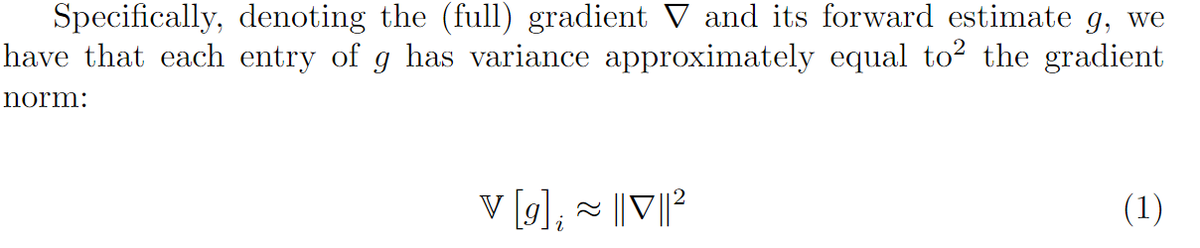

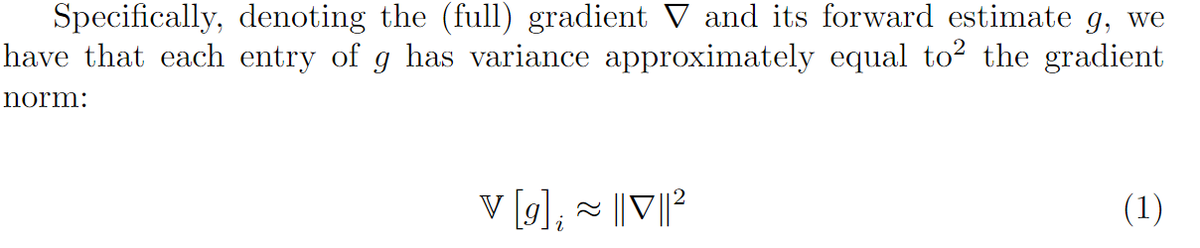

https://twitter.com/arankomatsuzaki/status/1494488254304989228

The main result I claim is an extension of Thm 1 in the paper. They prove that the _expected value_ of the gradient estimate is the true gradient, and I worked out the _variance_ of the estimate.

https://twitter.com/charles_irl/status/1457840021772259332?s=20

https://twitter.com/DrLukeOR/status/1289305330027921408

@realSharonZhou: sees opportunities in medicine with the "democratization" of design of, e.g., web interfaces.

https://twitter.com/WomenInStat/status/1285612667839885312

tl;dr: the basic idea of the SVD works for _any_ function.
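One concrete way to see the idea, assuming a function of two variables f(x, y): sample it on a grid, take the matrix SVD, and each singular triple becomes a separable term, f(x, y) ≈ Σᵢ sᵢ uᵢ(x) vᵢ(y). The choice of f(x, y) = exp(-(x - y)²) on [-1, 1]² below is just an illustrative example, not taken from the thread.

```python
import numpy as np


def low_rank_approx(F, k):
    """Best rank-k approximation of F (in Frobenius norm) via truncated SVD."""
    U, s, Vt = np.linalg.svd(F, full_matrices=False)
    return (U[:, :k] * s[:k]) @ Vt[:k, :]


x = np.linspace(-1, 1, 200)
y = np.linspace(-1, 1, 200)
X, Y = np.meshgrid(x, y, indexing="ij")
F = np.exp(-(X - Y) ** 2)  # F[i, j] = f(x_i, y_j)

# Columns of U sample functions u_i(x); rows of Vt sample functions v_i(y).
# For smooth f the singular values s decay quickly, so a few terms suffice.
errors = {}
for k in (1, 3, 6, 10):
    approx = low_rank_approx(F, k)
    errors[k] = np.linalg.norm(F - approx) / np.linalg.norm(F)
    print(f"rank {k}: relative error {errors[k]:.1e}")
```

A separable function such as sin(πx)·exp(y) has exactly one nonzero singular value, so its rank-1 approximation is exact up to floating-point error.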