AI Engineering: https://t.co/94dv4uTU1H

Designing ML Sys: https://t.co/G81hL2dWmr

Entanglements: https://t.co/W27aXeiySY

@aisysbooks

How to get URL link on X (Twitter) App

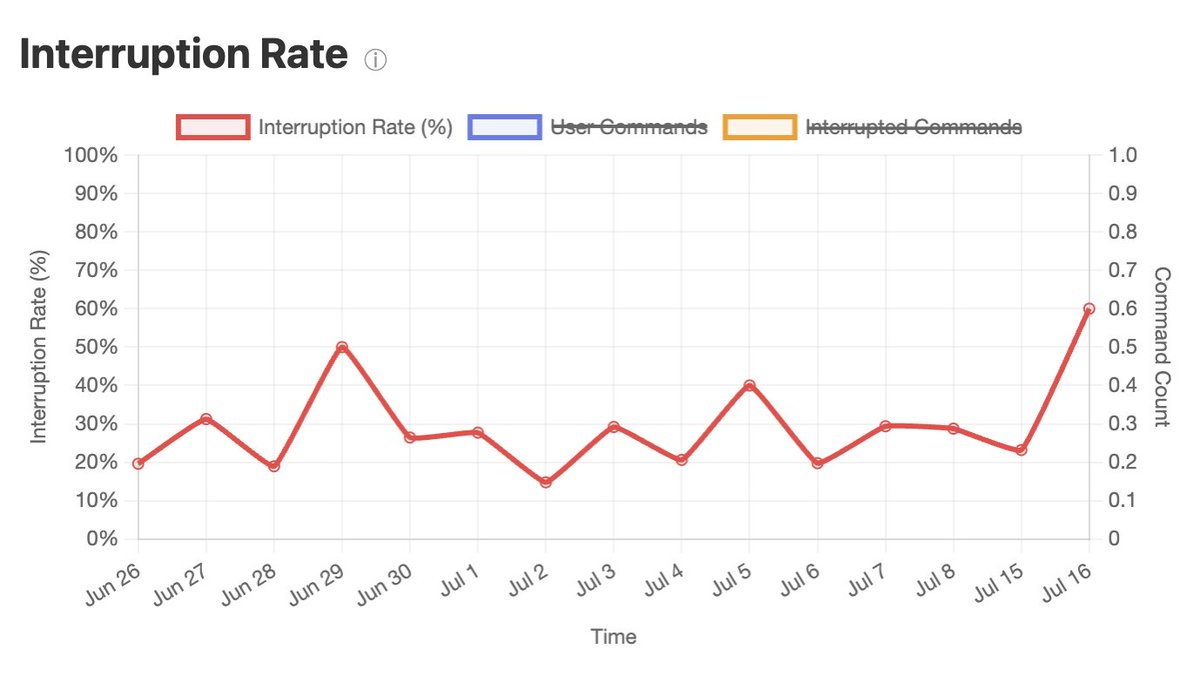

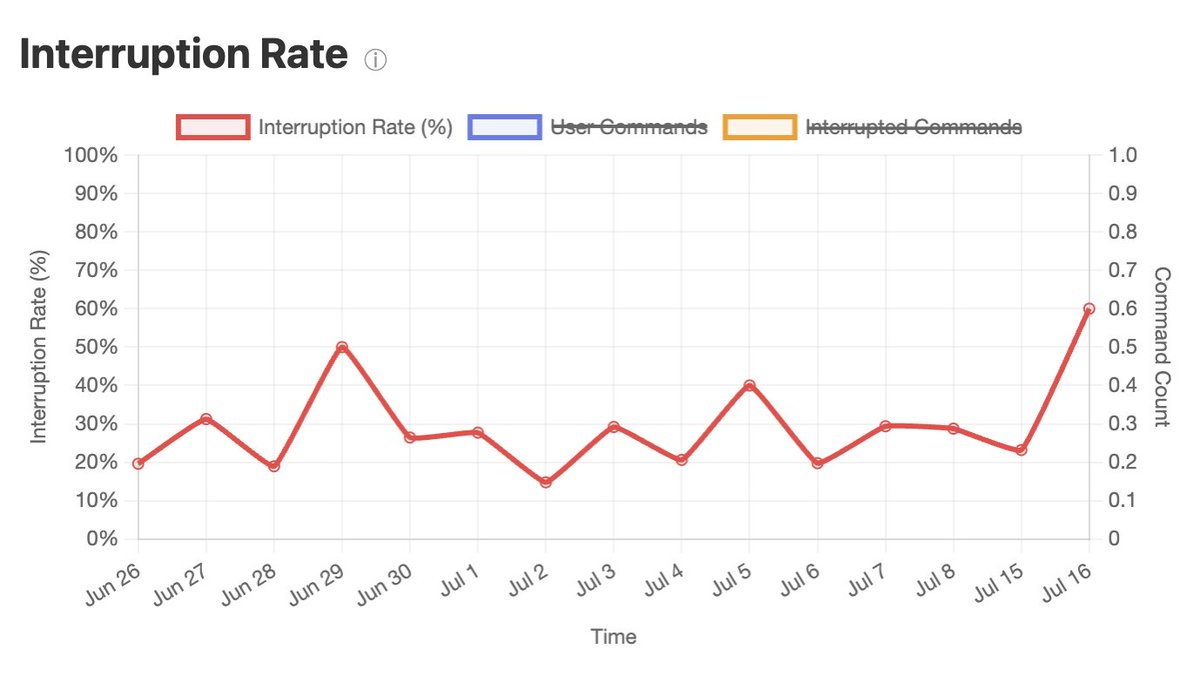

2. Sacrificing throughput for latency