Generative models, musical expression for all. Assistant professor at CMU CSD. Part-time research at Google Magenta (views my own).

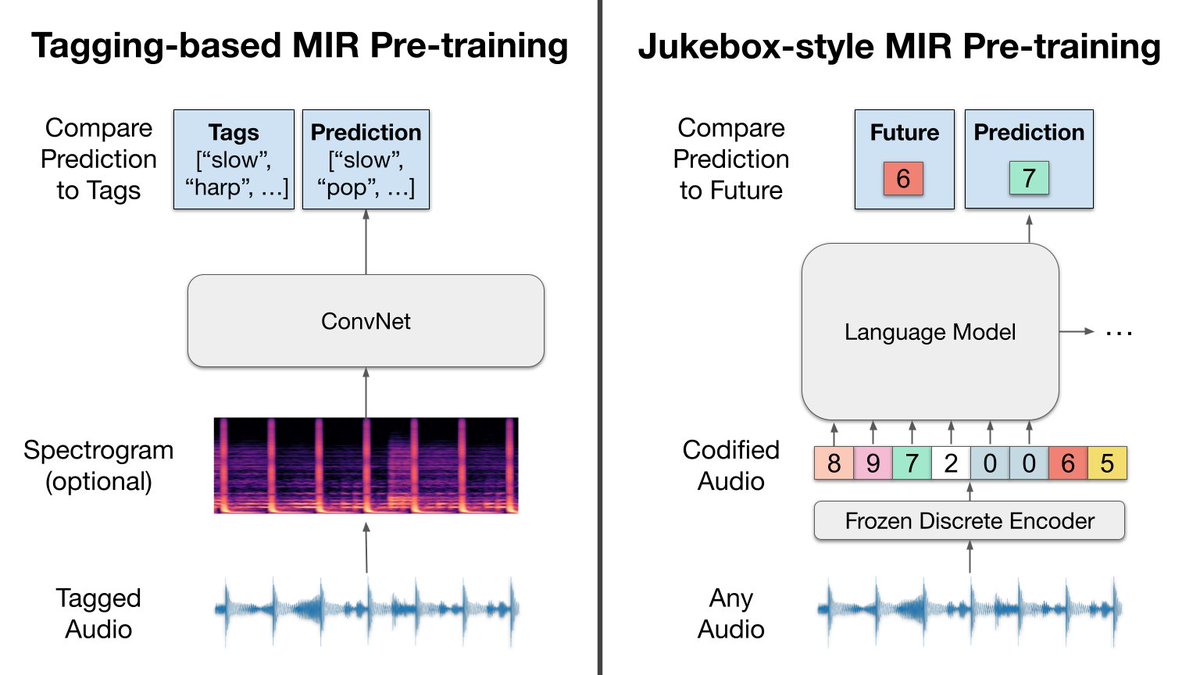

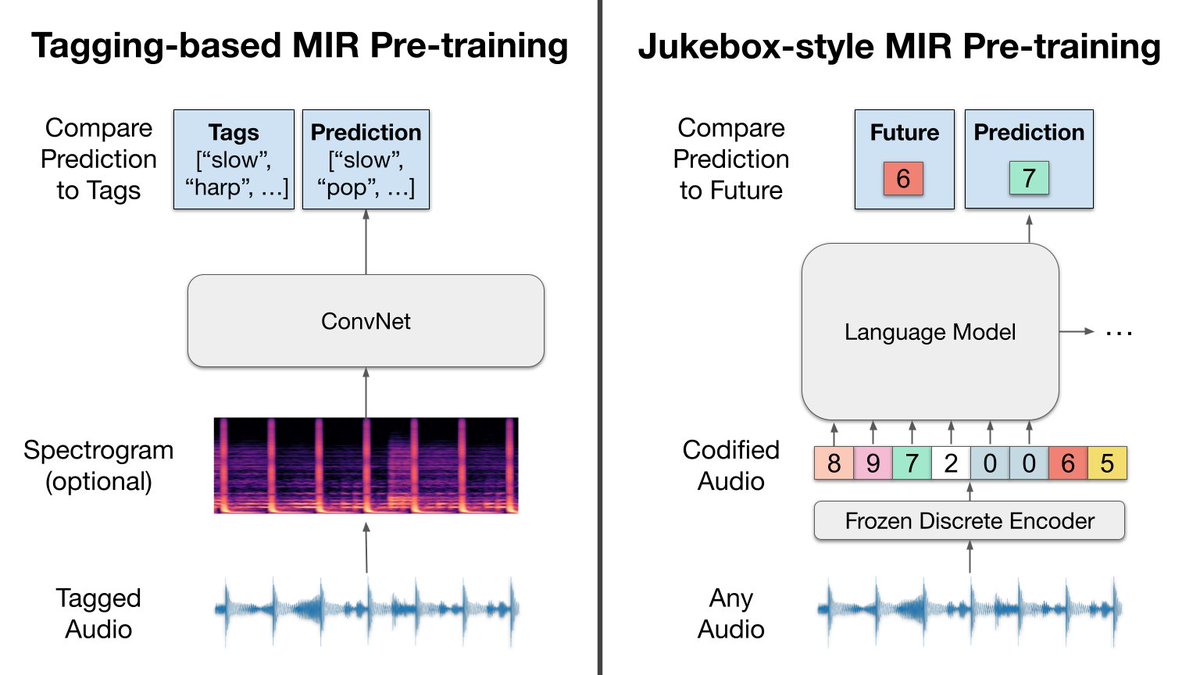

@percyliang @ismir2021 Core result: across four MIR tasks (music tagging, genre classification, key detection, emotion recognition), probing representations from Jukebox yields 30% stronger performance on average relative to probing representations from conventional pre-trained models for MIR. [2/8]
