Ed.D. | Founder @networkdefense @RuralTechFund | Former @Mandiant, DoD | Author: Intrusion Detection Honeypots, Practical Packet Analysis, Applied NSM

In the course, you’ll learn how to use YARA to detect malware, triage compromised systems, and collect threat intelligence. No prior YARA experience is required.

https://twitter.com/chrissanders88/status/1633121837336121345

A lot of great responses this week, so I won't rehash every path, but there's an opportunity to explore the disposition and prevalence of the client IP, the timing of the rule creation versus AD auth, potential outgoing spam activity,

https://twitter.com/chrissanders88/status/1595070232426467328

Something we know from research is that the initial path (“opening move”) matters.

https://twitter.com/DanielOfService/status/1590019965121630208?s=20&t=KshSZ_yYFQ3IhqU5Kmp2hw

Even when a workplace is accessible to someone with a disability (and despite the ADA, many are not), the commute there may not be. Eliminating that commute opens up a lot of possibilities.

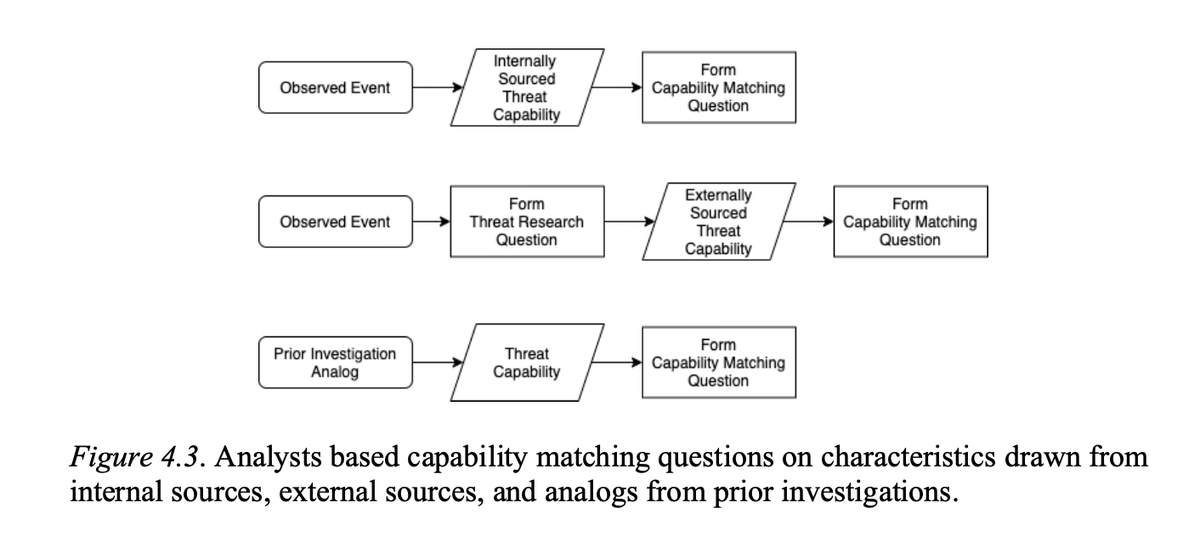

https://twitter.com/RuralTechFund/status/1531646135223427072

One of our goals was to make our impact even clearer and more visible, both on our home page and on a dedicated page: ruraltechfund.org/impact/.

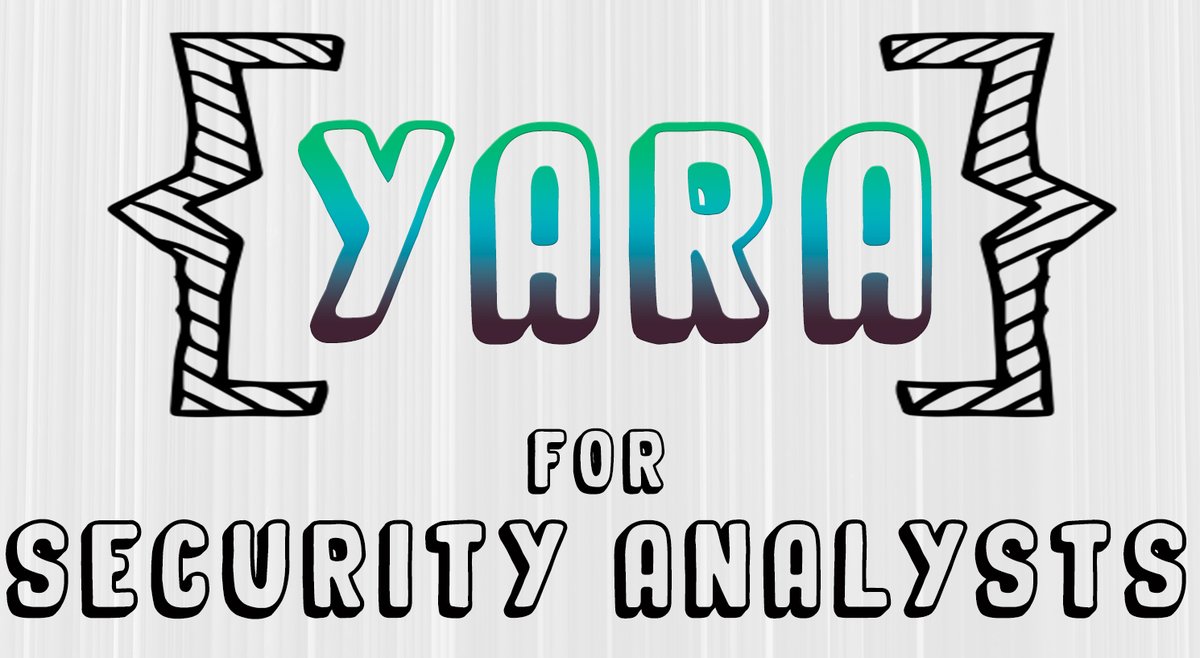

Breaking that down further, you're asking:

My research in security analysis has shown that decisions reached through deliberate thought are typically higher quality than those reached intuitively, even by experts. But why do we rely on intuition so often? 2/

I think almost all perspectives are useful, but few conclusions are. I like qualitative studies that include the voice of participants because you get more of that perspective, and there's a lot of it to be found here. 2/