Professional AI Engineer.

Sharing what I'm currently learning, mostly about AI, LLMs, RAG, building AI-powered software, and AI automation.

@LoganMarkewich @llama_index Passage- and sentence-based retrieval have their limitations.

@llama_index @Redisinc We need to pass the following arguments to the ingestion pipeline:

@llama_index First let’s start with some simple stuff.

@llama_index For adapters, we pull apart every single layer of the transformer and add randomly initialized new weights.

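
A rough sketch of the adapter idea, in plain Python so it runs without any ML library. The bottleneck shape (down-projection, ReLU, up-projection, residual add) is an assumption borrowed from common adapter designs, not something the thread specifies, and `frozen_layer`, `make_adapter`, and `layer_with_adapter` are hypothetical names used only for illustration.

```python
import random

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def frozen_layer(hidden):
    """Stand-in for one pretrained transformer layer; its weights are never updated."""
    return [2.0 * h for h in hidden]

def make_adapter(dim, bottleneck, seed=0):
    """Create the new, randomly initialized adapter weights (assumed bottleneck design)."""
    rng = random.Random(seed)
    down = [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(bottleneck)]
    up = [[rng.gauss(0.0, 0.02) for _ in range(bottleneck)] for _ in range(dim)]
    return {"down": down, "up": up}

def adapter_forward(adapter, hidden):
    """Down-project, apply ReLU, up-project, then add the result back to the input."""
    z = [max(0.0, a) for a in matvec(adapter["down"], hidden)]
    delta = matvec(adapter["up"], z)
    return [h + d for h, d in zip(hidden, delta)]

def layer_with_adapter(adapter, hidden):
    """The frozen layer followed by its adapter; only the adapter would be trained."""
    return adapter_forward(adapter, frozen_layer(hidden))

def count_adapter_params(adapter):
    """Number of new weights the adapter adds to one layer."""
    return sum(len(row) for m in adapter.values() for row in m)
```

With `dim=4` and `bottleneck=2`, the adapter adds 16 new weights per layer while the stand-in layer itself stays untouched, which is the point of the approach: train a small number of new parameters instead of the whole model.
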
@llama_index First we load the documents.

@llama_index Finetuning means updating the model weights themselves over a data corpus to make the model work better for specific use cases.

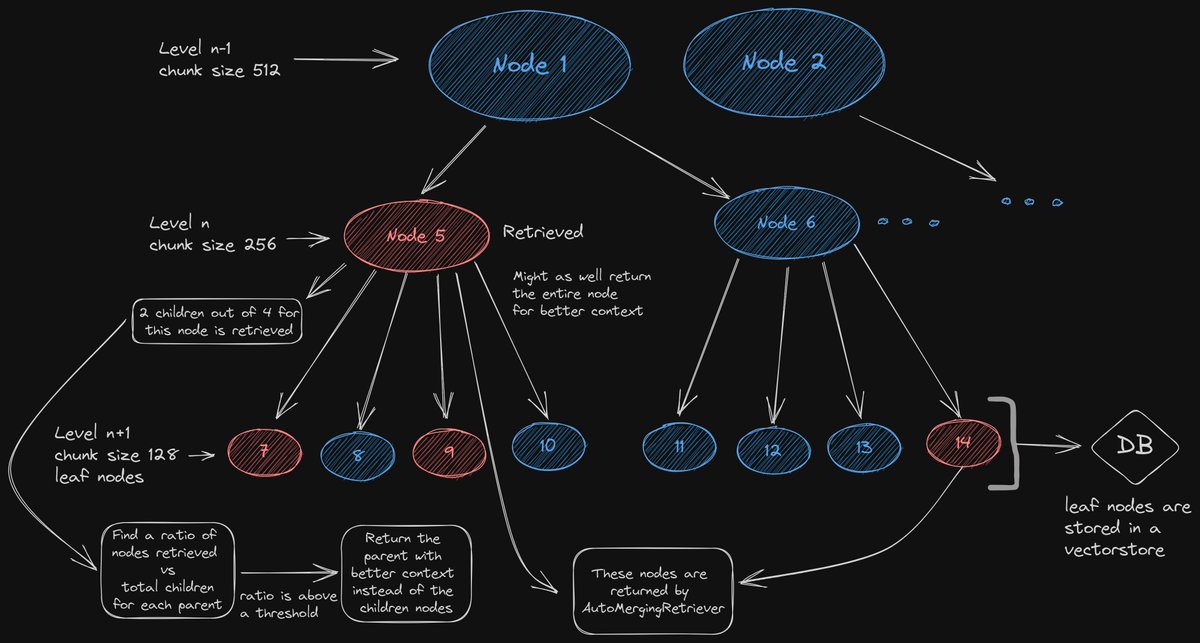

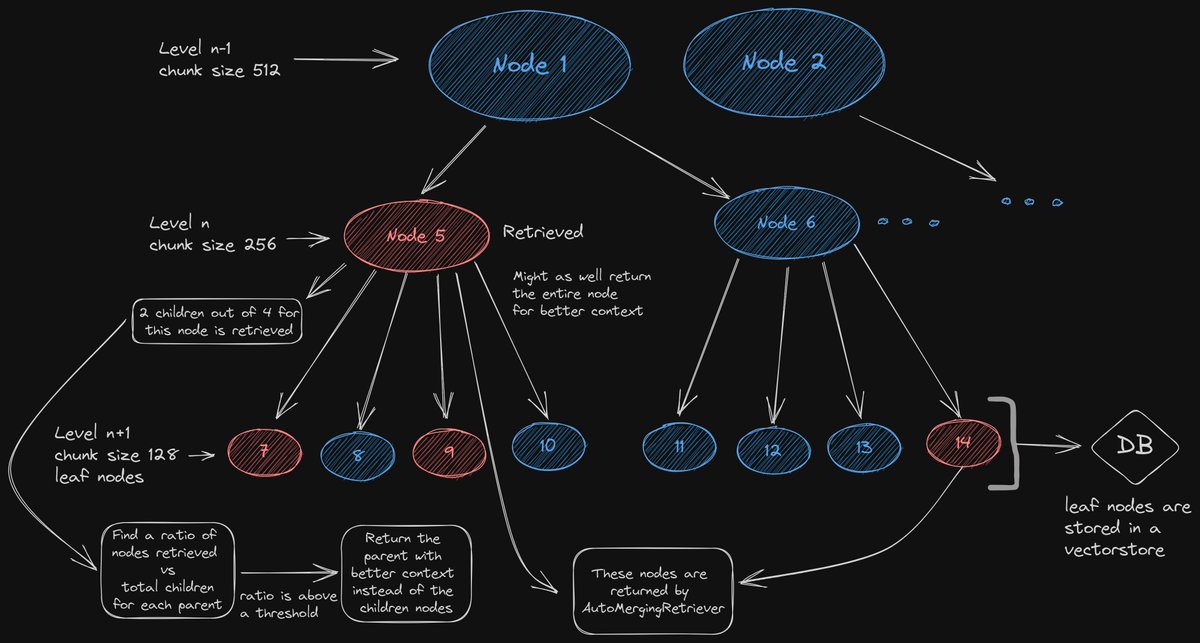

Architecture:

The first step here is parsing via the HierarchicalNodeParser.

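
To make the idea of hierarchical parsing concrete, here is a minimal plain-Python sketch. It does not use the real `HierarchicalNodeParser`; the `Node` class, the `hierarchical_parse` function, and the word-count chunk sizes are all assumptions chosen for illustration. It only shows the general shape: text is split into progressively smaller chunks, and each chunk remembers its parent.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Node:
    node_id: int
    text: str
    level: int                       # 0 = largest chunks, higher = smaller chunks
    parent_id: Optional[int] = None  # None for top-level chunks

def split_words(text: str, size: int) -> List[str]:
    """Split text into consecutive chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def hierarchical_parse(text: str, chunk_sizes=(8, 4, 2)) -> List[Node]:
    """Build a flat list of nodes where each smaller chunk links to its parent."""
    nodes: List[Node] = []

    def recurse(piece: str, level: int, parent_id: Optional[int]) -> None:
        if level == len(chunk_sizes):
            return
        for chunk in split_words(piece, chunk_sizes[level]):
            node = Node(len(nodes), chunk, level, parent_id)
            nodes.append(node)
            recurse(chunk, level + 1, node.node_id)

    recurse(text, 0, None)
    return nodes

def leaf_nodes(nodes: List[Node], chunk_sizes=(8, 4, 2)) -> List[Node]:
    """Return the smallest chunks, which are typically the ones embedded and indexed."""
    return [n for n in nodes if n.level == len(chunk_sizes) - 1]
```

For an eight-word input with the default sizes, this produces one top-level node, two mid-level nodes, and four leaf nodes, with each leaf pointing at a mid-level parent.
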
@LangChainAI ParentDocumentRetriever automatically creates the small chunks and links each one to its parent document ID. https://twitter.com/1355239433432403968/status/1691143792831639556
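
The pattern described here can be sketched in plain Python. This is not LangChain's `ParentDocumentRetriever` API; `build_index`, `score`, and `retrieve_parents` are hypothetical helpers, and simple word overlap stands in for the embedding similarity a real retriever would use.

```python
from typing import Dict, List, Tuple

def build_index(docs: Dict[str, str], chunk_words: int = 5) -> List[Tuple[str, str]]:
    """Split each document into small chunks, storing the parent document ID with each."""
    index = []
    for doc_id, text in docs.items():
        words = text.split()
        for i in range(0, len(words), chunk_words):
            index.append((doc_id, " ".join(words[i:i + chunk_words])))
    return index

def score(query: str, chunk: str) -> int:
    """Toy relevance score: number of shared words (stand-in for vector similarity)."""
    return len(set(query.lower().split()) & set(chunk.lower().split()))

def retrieve_parents(query: str, index: List[Tuple[str, str]],
                     docs: Dict[str, str], k: int = 2) -> List[str]:
    """Match against small chunks, then return the full parent documents."""
    ranked = sorted(index, key=lambda item: score(query, item[1]), reverse=True)
    parent_ids: List[str] = []
    for doc_id, chunk in ranked[:k]:
        if score(query, chunk) > 0 and doc_id not in parent_ids:
            parent_ids.append(doc_id)
    return [docs[doc_id] for doc_id in parent_ids]
```

The design choice is that search runs over small chunks, which tend to match a query more precisely, while the caller receives the larger parent text, which carries more context for the LLM.
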

The issue:
