How to get URL link on X (Twitter) App

Command R+ offers best-in-class advanced Retrieval-Augmented Generation (RAG) capabilities to provide accurate, enterprise-ready solutions with citations to reduce hallucinations.

@hima_lakkaraju 2/ 🎓 Harvard Prof. Lakkaraju demonstrates TalkToModel, an interactive dialogue system that explains ML models through conversations. 🗣️ This system shows a compelling conversational explainable user interface (XAI).

@JayAlammar A natural place to start is an AI tech stack made up of these three layers: Application, Models, and Cloud Platform.

@LangChainAI With Cohere, you get access to large language models (LLMs) via a simple API without needing the machine learning know-how.

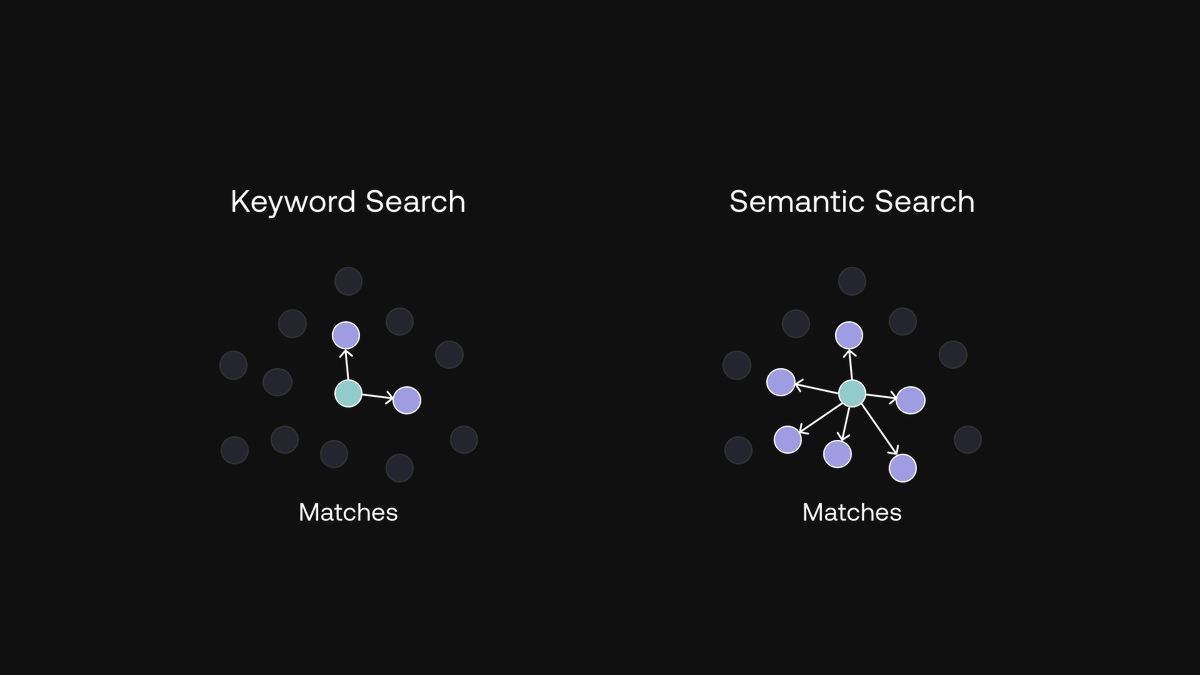

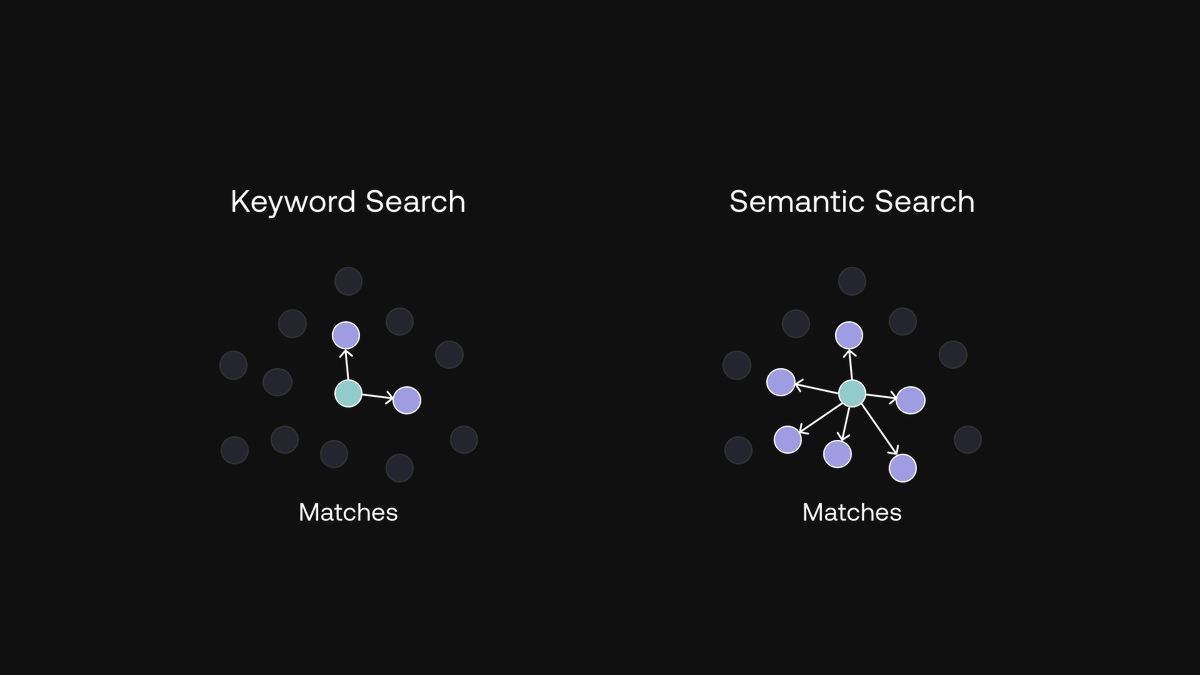

Before semantic search, the most popular way of searching was keyword search.

First, let’s see why and when you might want to create a custom model.

The quintessential task of natural language processing (NLP) is to understand human language.

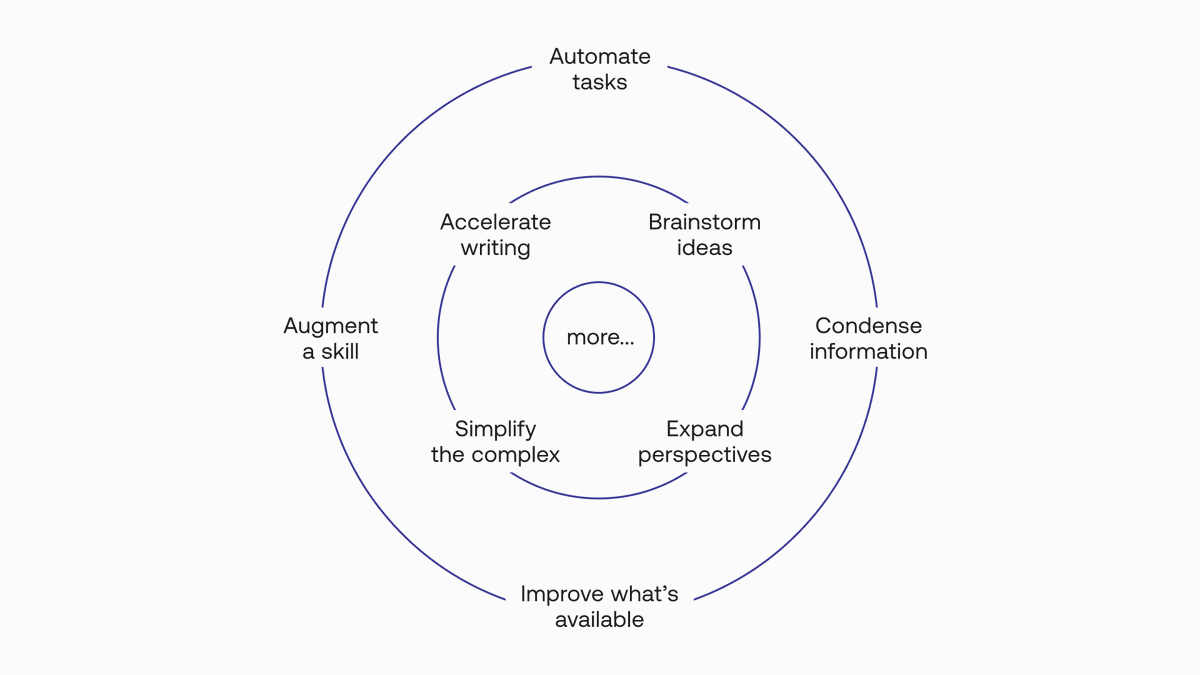

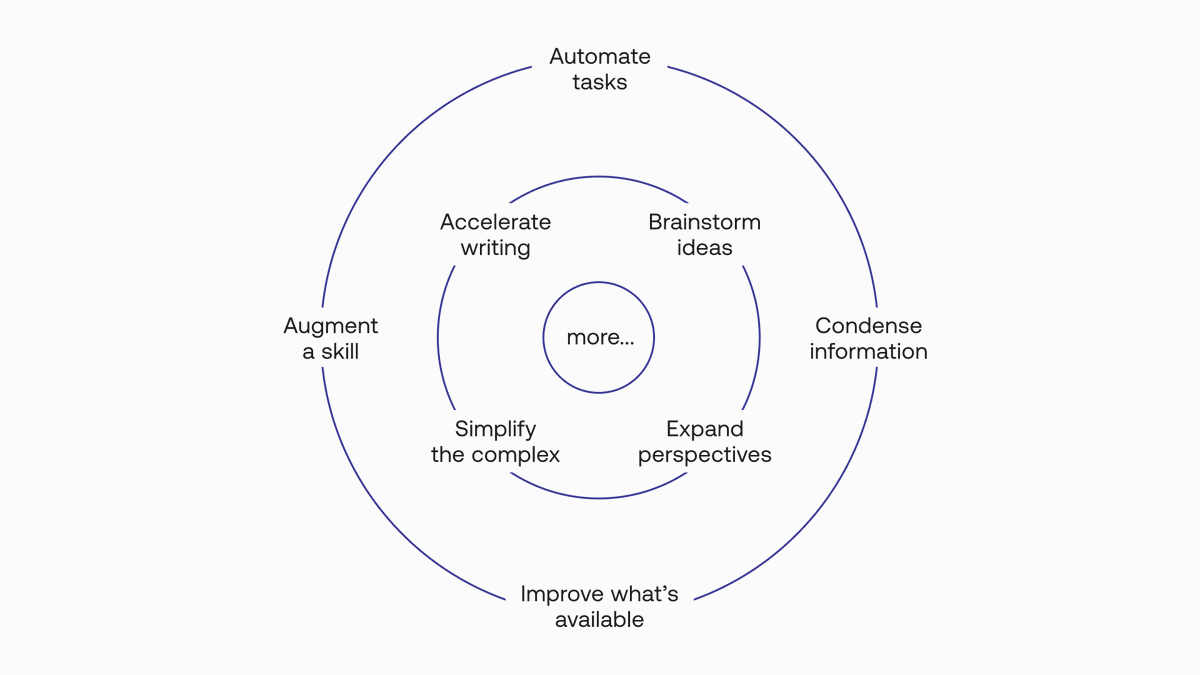

1. Automate repetitive tasks
