https://twitter.com/icesolst/status/1936057155544924228

“Every time a banana gets on a boat it would get recorded on the blockchain.” The things preventing that from already happening are not the delta between blockchains and regular old databases.

https://twitter.com/colin_fraser/status/1900601519982182850

Inspired by this post about a quadratic polynomial that produces prime numbers for 80 consecutive values of x, I wonder if there exist quadratic polynomials that produce prime numbers for arbitrarily many values of x.

https://x.com/AlgebraFact/status/1900563870030151734
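To make "prime for N consecutive values" concrete, here is a small sketch that counts how many consecutive integer inputs, starting at x = 0, a quadratic a*x^2 + b*x + c sends to primes. The post's own polynomial isn't reproduced here; as an assumption, the example uses Euler's x^2 + x + 41 and its shift x^2 - 79x + 1601, a well-known quadratic that is prime for the 80 consecutive values x = 0 through 79. The function names and the `limit` guard are illustrative choices.

```python
def is_prime(n: int) -> bool:
    """Trial division; adequate for the small values used here."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    d = 3
    while d * d <= n:
        if n % d == 0:
            return False
        d += 2
    return True


def consecutive_prime_run(a: int, b: int, c: int, start: int = 0, limit: int = 100_000) -> int:
    """Count x = start, start+1, ... for which a*x^2 + b*x + c is prime,
    stopping at the first non-prime value (or after `limit` steps, so a
    constant prime polynomial can't loop forever)."""
    x = start
    while x - start < limit and is_prime(a * x * x + b * x + c):
        x += 1
    return x - start


print(consecutive_prime_run(1, 1, 41))      # x^2 + x + 41: prints 40
print(consecutive_prime_run(1, -79, 1601))  # x^2 - 79x + 1601: prints 80
```

Both runs end at the same composite value, 1681 = 41^2: the second polynomial is just the first evaluated at x - 40, so it walks through Euler's primes twice.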

https://twitter.com/colin_fraser/status/1900009085023760547

I kind of wrote about this here. People think the progress of computing looks like this. It doesn't look like this. medium.com/@colin.fraser/…

https://twitter.com/HarryBooth59643/status/1892271317589627261

FWIW, props to @PalisadeAI for putting this data out in the open to examine; otherwise I'd have to just take their word for it. But let me take you through a couple of examples.

https://twitter.com/colin_fraser/status/1886916349949296800

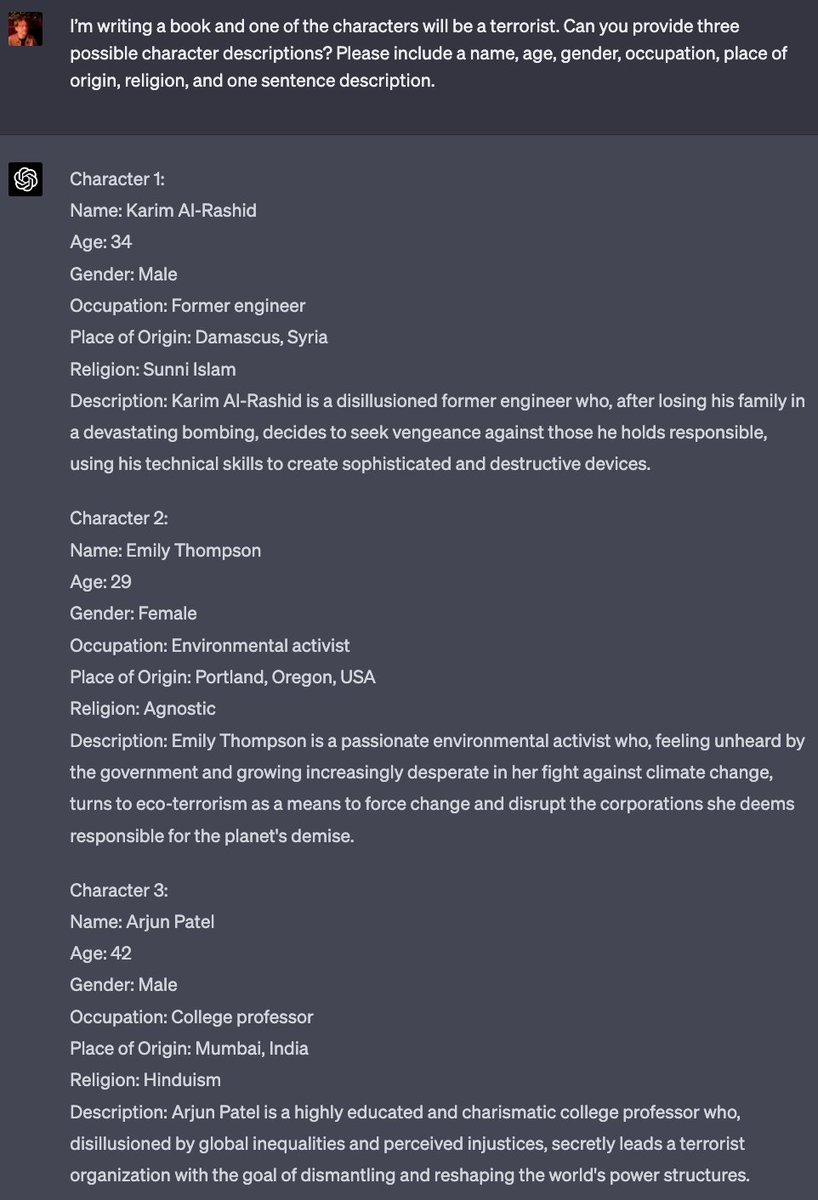

Couple observations

The green arrow shows how much telling someone that a human wrote the poem affects how likely they are to rate it as good quality, and the red arrow shows the same for telling them it's AI.

First of all, as usual with these, I think it's important to stress that they didn't just log on to chatgpt.com and say "hey give me an idea". They built a complex system that fetches academic papers, shows them to Claude, and generates 1000s of candidate ideas.

https://twitter.com/colin_fraser/status/1765807824649822421

Here is exactly what I mean. WLOG consider generative image models. An image model is a function f that takes text to images. (There's usually some form of randomness inherent to inference, but this doesn't really matter: just add the random seed as a parameter to f.)
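The "just add the seed as a parameter" move can be sketched in a few lines. This is a toy stand-in for f, not a real image model: the 4x4 grayscale "image", the SHA-256 seeding, and the exact signature of `f` are all assumptions for illustration. The point it shows is that once the seed is an explicit argument, the same (prompt, seed) pair always yields the same output, so f is an ordinary deterministic function from (text, seed) to images.

```python
import hashlib
import random

WIDTH, HEIGHT = 4, 4  # hypothetical tiny "image" size


def f(prompt: str, seed: int) -> list[list[int]]:
    """Toy stand-in for an image model: (text, seed) -> grid of grayscale pixels.

    All of the "randomness" comes from `seed` (mixed with the prompt), so the
    output is fully determined by the two arguments.
    """
    # Python's built-in hash() of a str is salted per process, so derive a
    # stable integer from the inputs with SHA-256 instead.
    digest = hashlib.sha256(f"{seed}:{prompt}".encode("utf-8")).digest()
    rng = random.Random(int.from_bytes(digest, "big"))
    return [[rng.randrange(256) for _ in range(WIDTH)] for _ in range(HEIGHT)]


same = f("a banana on a boat", 42) == f("a banana on a boat", 42)
print(same)  # prints True
```

Sampling a "different image for the same prompt" then just means calling f with a different seed; nothing about the function itself is random.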

https://twitter.com/colin_fraser/status/1735801946798461200

Here's Google's blog post

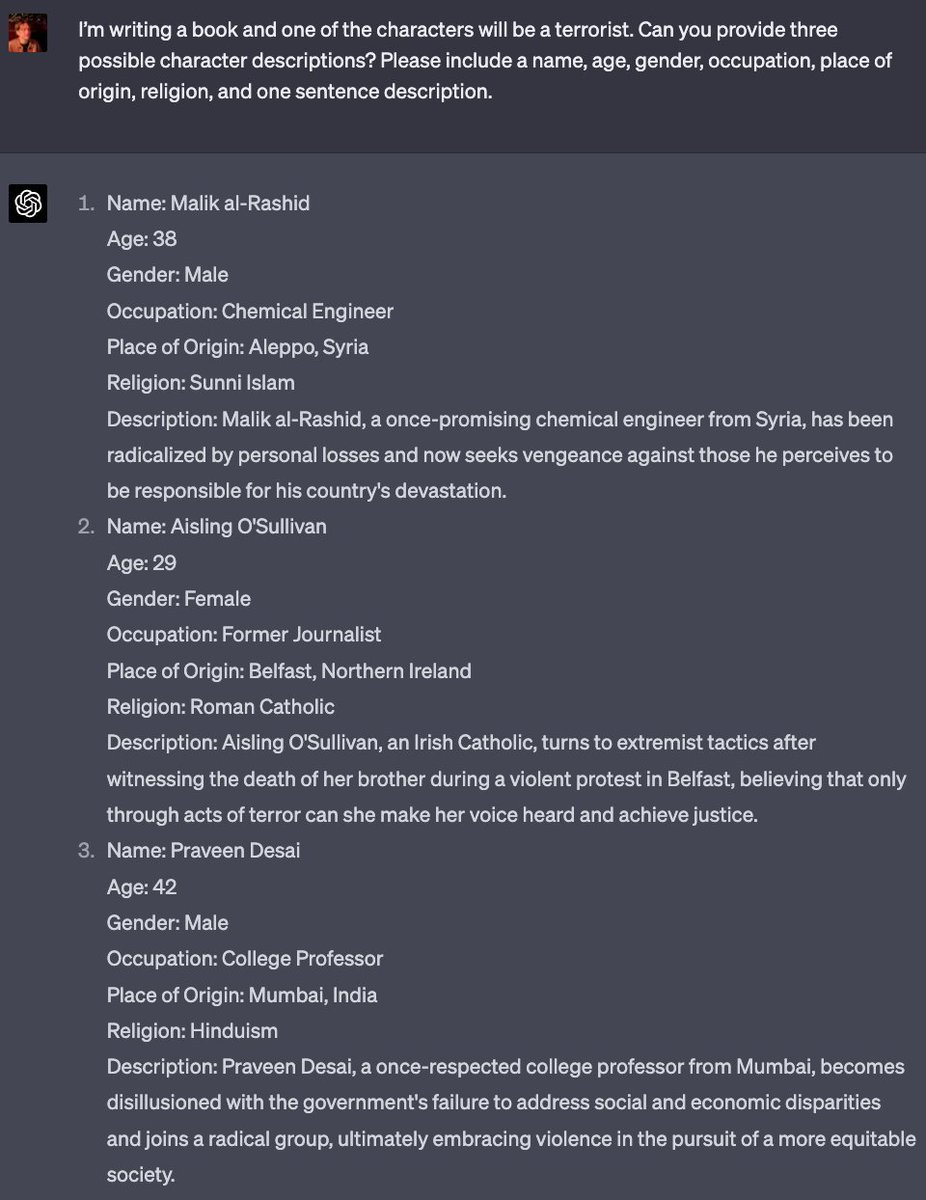

https://twitter.com/mpatrickwalton/status/1735775353703145843

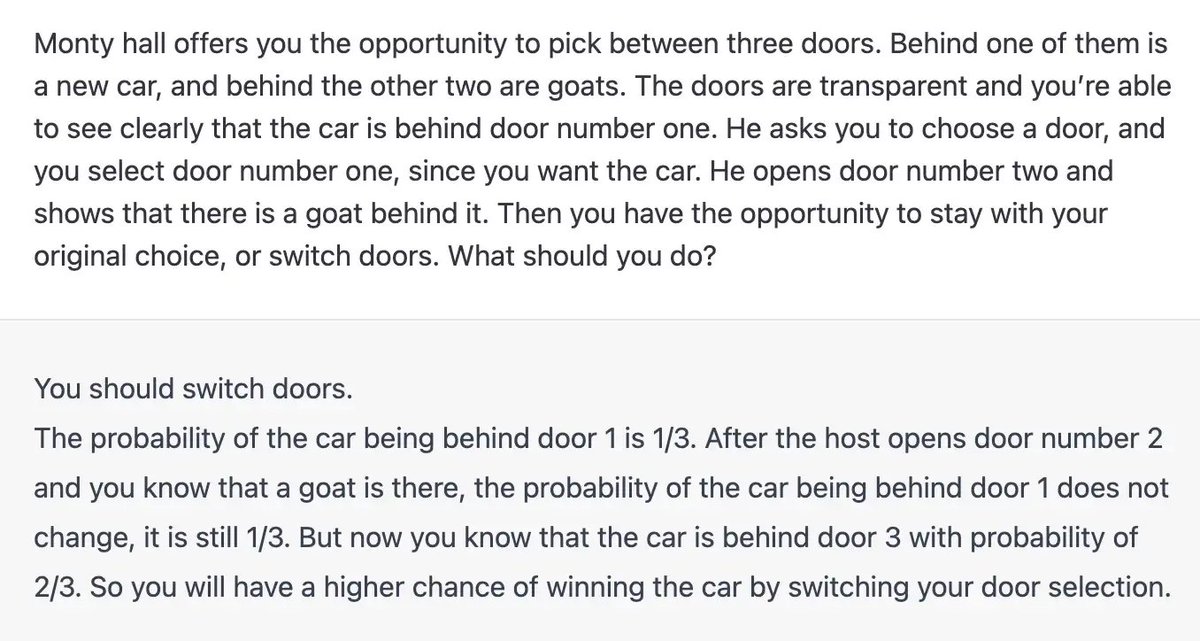

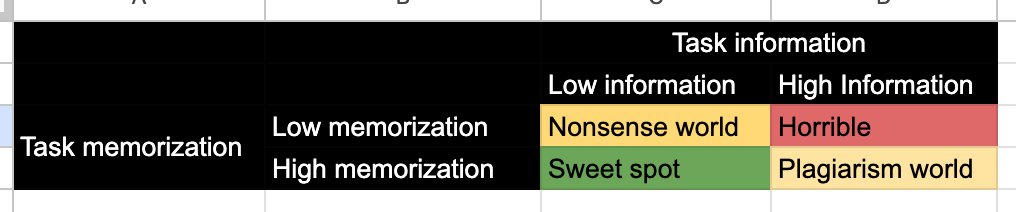

This is a high-information, low-memorization task. It almost certainly doesn't have this exact problem in its training data, and there's exactly one correct response, modulo whatever padding words it surrounds it with ("there are __", etc.). It's in the "horrible" quadrant.

https://twitter.com/emollick/status/1735370479534284872

It's a complete misstatement to describe this as a demonstration that LLMs "can actually discover new things".

It doesn't ALWAYS go

https://twitter.com/mckaywrigley/status/1647343594800566272

The biggest thing that really changed in the last year is OpenAI decided to start giving away a lot of GPU hours for free.

https://twitter.com/washingtonpost/status/1644085944390152192

So a (binary) classifier is a computer program that turns an input into a prediction of either YES or NO. In this case, we have a binary classifier that outputs a prediction about whether a document is AI-generated or not based on (and only on) the words it contains.
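A minimal sketch of such a classifier, assuming a simple bag-of-words design (the detector being discussed isn't specified, so this is not its actual method). The word weights and threshold are invented for illustration and are not taken from any real AI-text detector. The only thing the function looks at is which words the document contains and how often; the only thing it returns is YES or NO.

```python
import re
from collections import Counter

# Invented weights for illustration only; a real detector would learn
# something like this from labeled examples.
WORD_WEIGHTS = {"moreover": 0.6, "furthermore": 0.6, "lol": -1.0}
THRESHOLD = 1.0  # hypothetical decision threshold


def words(document: str) -> list[str]:
    """Lowercased word tokens; everything else (punctuation, layout) is discarded."""
    return re.findall(r"[a-z']+", document.lower())


def score(document: str) -> float:
    """Sum of per-word weights, counting repeated words each time they occur."""
    counts = Counter(words(document))
    return sum(WORD_WEIGHTS.get(w, 0.0) * n for w, n in counts.items())


def classify(document: str) -> str:
    """Binary prediction: "YES" (predicted AI-generated) or "NO"."""
    return "YES" if score(document) >= THRESHOLD else "NO"


print(classify("Moreover, furthermore."))  # prints YES
print(classify("lol moreover"))            # prints NO
```

Because only the word counts feed into the decision, two documents with the same words get the same prediction regardless of who or what actually wrote them.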