📈 I summarise Machine Learning, AI and Time Series concepts in an easy and visual way • 💊 Follow me on https://t.co/oYXkuSnQeD

👉 Inquiries by PM

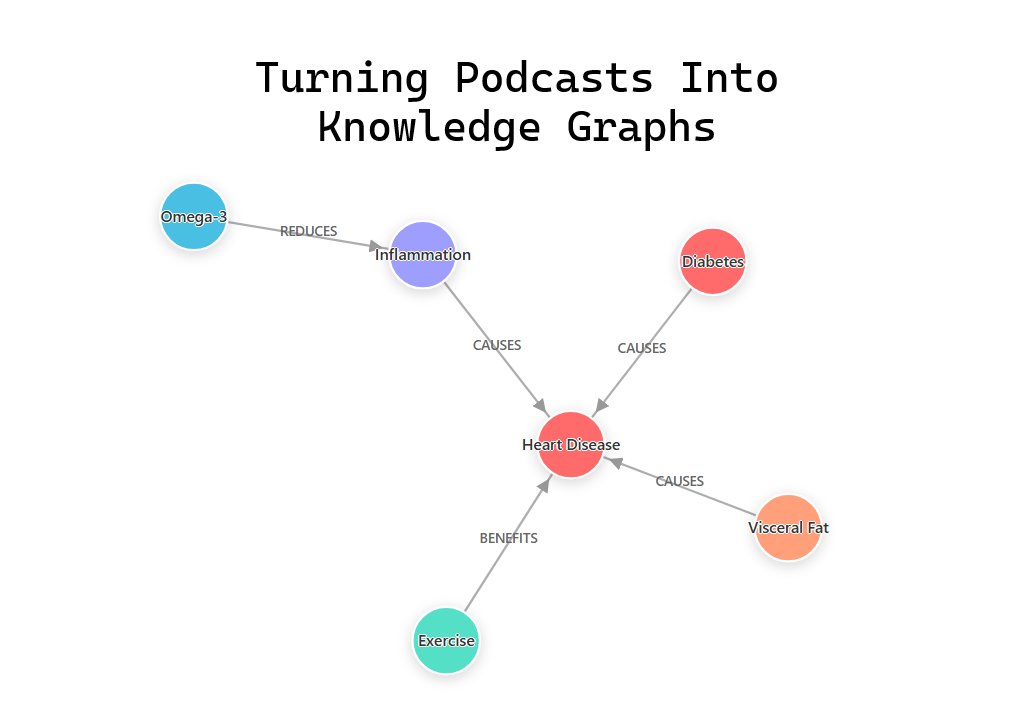

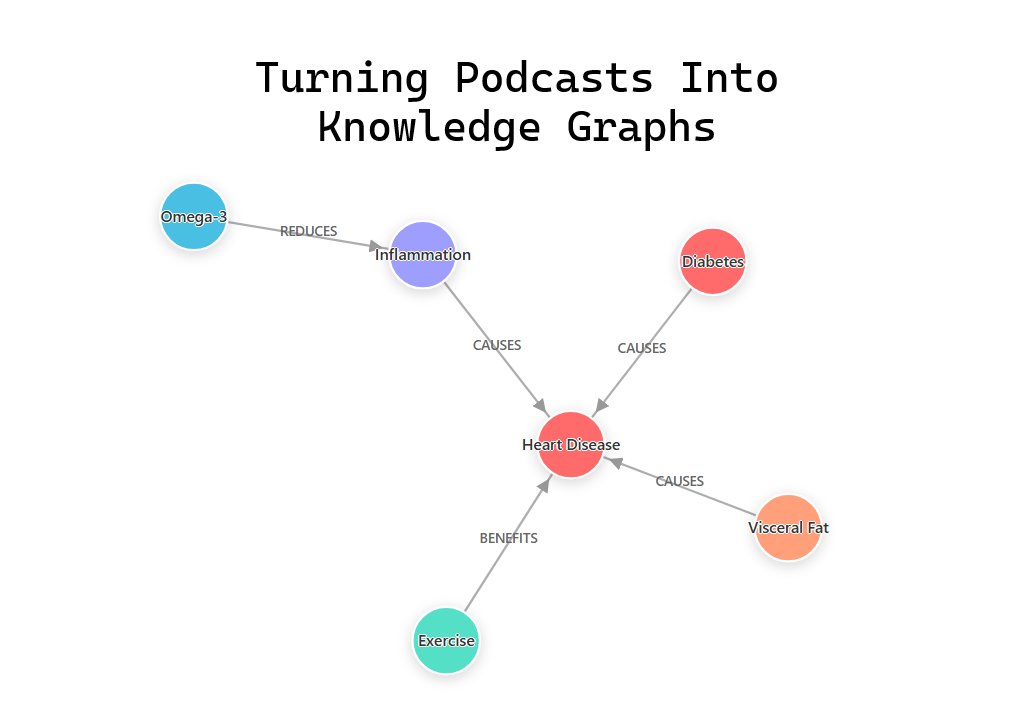

The core is knowledge extraction using LangChain's LLMGraphTransformer with gpt-4o.

🟢 Early layers focus on low-level features extracted directly from pixel intensities.
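
A minimal sketch of what "low-level features from pixel intensities" can look like: a hand-set 3×3 Sobel-style kernel slid over a tiny synthetic grayscale image. The kernel and image are illustrative assumptions; real CNN filters are learned during training, although early-layer filters often end up resembling edge detectors like this one.

```python
def conv2d_valid(image, kernel):
    """2D cross-correlation (what CNN conv layers compute), 'valid' padding, stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out = []
    for i in range(ih - kh + 1):
        row = []
        for j in range(iw - kw + 1):
            s = 0
            for a in range(kh):
                for b in range(kw):
                    s += image[i + a][j + b] * kernel[a][b]
            row.append(s)
        out.append(row)
    return out

# Hand-set Sobel-style kernel that responds to vertical edges (left-to-right intensity change)
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

# Synthetic 5x6 image: dark left half (0), bright right half (10)
image = [[0, 0, 0, 10, 10, 10] for _ in range(5)]

feature_map = conv2d_valid(image, SOBEL_X)
for row in feature_map:
    print(row)
```

The feature map is zero over flat regions and large only where the window straddles the dark-to-bright boundary, i.e. the filter has turned raw intensities into an "edge here" signal.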

RAG helps bridge the gap between large language models and external data sources, allowing AI systems to generate relevant and informed responses by leveraging knowledge from existing documents and databases.
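
To make the retrieve-then-generate idea concrete, here is a minimal sketch of the retrieval and prompt-augmentation half of RAG. It assumes a tiny hypothetical document list and uses plain word-count cosine similarity in place of learned embeddings; the helper names are illustrative, and the actual call to a language model is left out.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in practice these would be chunks of your documents.
DOCUMENTS = [
    "The refund policy allows returns within 30 days of purchase.",
    "Our office is open Monday to Friday from 9am to 5pm.",
    "Premium subscribers get priority email support.",
]

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, docs, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def build_prompt(query, context_docs):
    """Augment the user question with retrieved context before sending it to an LLM."""
    context = "\n".join(f"- {d}" for d in context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

query = "What is the refund policy for returns?"
top = retrieve(query, DOCUMENTS, k=1)
print(build_prompt(query, top))
```

In a real pipeline the returned prompt would be sent to the LLM, and retrieval would typically run over embedding vectors stored in a vector index.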

5️⃣ Exponential Distribution:
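
For a rate λ > 0, the exponential distribution has density f(x) = λe^(-λx) for x ≥ 0, CDF F(x) = 1 - e^(-λx) and mean 1/λ; it is the standard model for waiting times between events of a Poisson process. A small sketch checking these formulas against samples drawn with Python's `random.expovariate` (the rate of 2 and the seed are arbitrary choices):

```python
import math
import random

def exponential_pdf(x, lam):
    """f(x) = lam * exp(-lam * x) for x >= 0, else 0."""
    return lam * math.exp(-lam * x) if x >= 0 else 0.0

def exponential_cdf(x, lam):
    """P(X <= x) = 1 - exp(-lam * x) for x >= 0, else 0."""
    return 1.0 - math.exp(-lam * x) if x >= 0 else 0.0

lam = 2.0  # rate: on average 2 events per unit time
random.seed(0)
samples = [random.expovariate(lam) for _ in range(100_000)]
mean = sum(samples) / len(samples)

print(f"pdf(0)      = {exponential_pdf(0, lam)}")
print(f"P(X <= 1)   = {exponential_cdf(1, lam):.4f}")
print(f"sample mean = {mean:.3f} (theory: 1/lam = {1 / lam})")
```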

1️⃣ Normal Distribution:
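
The normal distribution N(μ, σ²) has density f(x) = exp(-(x - μ)² / (2σ²)) / (σ√(2π)). The sketch below writes that formula out by hand, checks it against Python's `statistics.NormalDist`, and prints the share of probability within 1, 2 and 3 standard deviations of the mean (the familiar 68-95-99.7 rule). The standard normal parameters are an arbitrary choice.

```python
import math
from statistics import NormalDist

def normal_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))

mu, sigma = 0.0, 1.0
dist = NormalDist(mu, sigma)

# The hand-written formula agrees with the standard library
print(normal_pdf(0.0, mu, sigma), dist.pdf(0.0))

# Empirical rule: share of probability within k standard deviations of the mean
for k in (1, 2, 3):
    share = dist.cdf(mu + k * sigma) - dist.cdf(mu - k * sigma)
    print(f"within {k} sigma: {share:.4f}")
```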

✦ They enable the similarity searches that LLM applications rely on, in tasks such as semantic search and recommendation systems.
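
Similarity search usually comes down to ranking stored vectors by their cosine similarity to a query vector. A minimal brute-force sketch, assuming hand-made 3-dimensional vectors in place of real model embeddings (the item names and values are hypothetical):

```python
import math

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

# Hypothetical toy "embeddings"; real ones come from an embedding model
# and have hundreds of dimensions.
index = {
    "cat care guide": [0.9, 0.1, 0.0],
    "dog training tips": [0.8, 0.3, 0.1],
    "stock market basics": [0.0, 0.2, 0.95],
}

def top_k(query_vec, index, k=2):
    """Brute-force nearest neighbours by cosine similarity."""
    ranked = sorted(index.items(), key=lambda kv: cosine_similarity(query_vec, kv[1]), reverse=True)
    return [name for name, _ in ranked[:k]]

query = [0.85, 0.2, 0.05]  # hypothetical embedding of a pet-related question
print(top_k(query, index))
```

Brute-force search is fine for small collections; vector databases typically switch to approximate nearest-neighbour indexes to keep queries fast at scale.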

In SVM, the kernel trick computes inner products in a higher-dimensional feature space directly from the original inputs, so the data never has to be explicitly transformed into that space.
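
A classic worked example: for 2-D inputs, the degree-2 polynomial kernel k(x, y) = (x · y)² equals the ordinary inner product after the explicit map φ(x) = (x1², √2·x1·x2, x2²). The sketch computes both and shows they agree, which is why an SVM can use k in place of the inner product without ever building φ(x). The input vectors are arbitrary.

```python
import math

def phi(x):
    """Explicit degree-2 feature map for a 2-D input: (x1^2, sqrt(2)*x1*x2, x2^2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def poly_kernel(x, y):
    """Homogeneous polynomial kernel of degree 2: k(x, y) = (x . y)^2."""
    return dot(x, y) ** 2

x, y = (1.0, 2.0), (3.0, 4.0)
explicit = dot(phi(x), phi(y))   # map to 3-D first, then take the inner product
via_kernel = poly_kernel(x, y)   # stay in 2-D: one dot product and a square
print(explicit, via_kernel)      # equal up to floating-point rounding
```

The saving grows with degree and dimension: the explicit feature space of higher-degree polynomials gets large quickly while the kernel still costs one dot product, and for the RBF kernel the implicit feature space is infinite-dimensional.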

The trend component represents the overall direction in which the data moves over an extended period.
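
A common way to estimate the trend is a centered moving average whose window spans one full seasonal period, so the seasonal ups and downs cancel out. A minimal sketch on a synthetic series; the data, the linear trend 2t and the seasonal period of 3 are assumptions chosen so the recovered trend is easy to check:

```python
def centered_moving_average(series, window):
    """Centered moving average with an odd window; the first/last window//2 points stay None."""
    if window % 2 == 0:
        raise ValueError("use an odd window for a simple centered average")
    half = window // 2
    out = [None] * len(series)
    for t in range(half, len(series) - half):
        out[t] = sum(series[t - half : t + half + 1]) / window
    return out

# Synthetic series: linear trend 2*t plus a period-3 seasonal pattern that sums to zero
seasonal = [1, -1, 0]
series = [2 * t + seasonal[t % 3] for t in range(12)]

trend = centered_moving_average(series, window=3)
print(trend)
```

Because the window covers exactly one seasonal cycle, the seasonal terms sum to zero inside every window and only the trend 2t remains. Libraries such as statsmodels (`seasonal_decompose`) apply the same moving-average idea, with extra handling for even periods and the series edges.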

Steps:

1️⃣ Model Interpretability