Researching effects of automated AI R&D | pro-America, pro-tech, & pro-AI safety

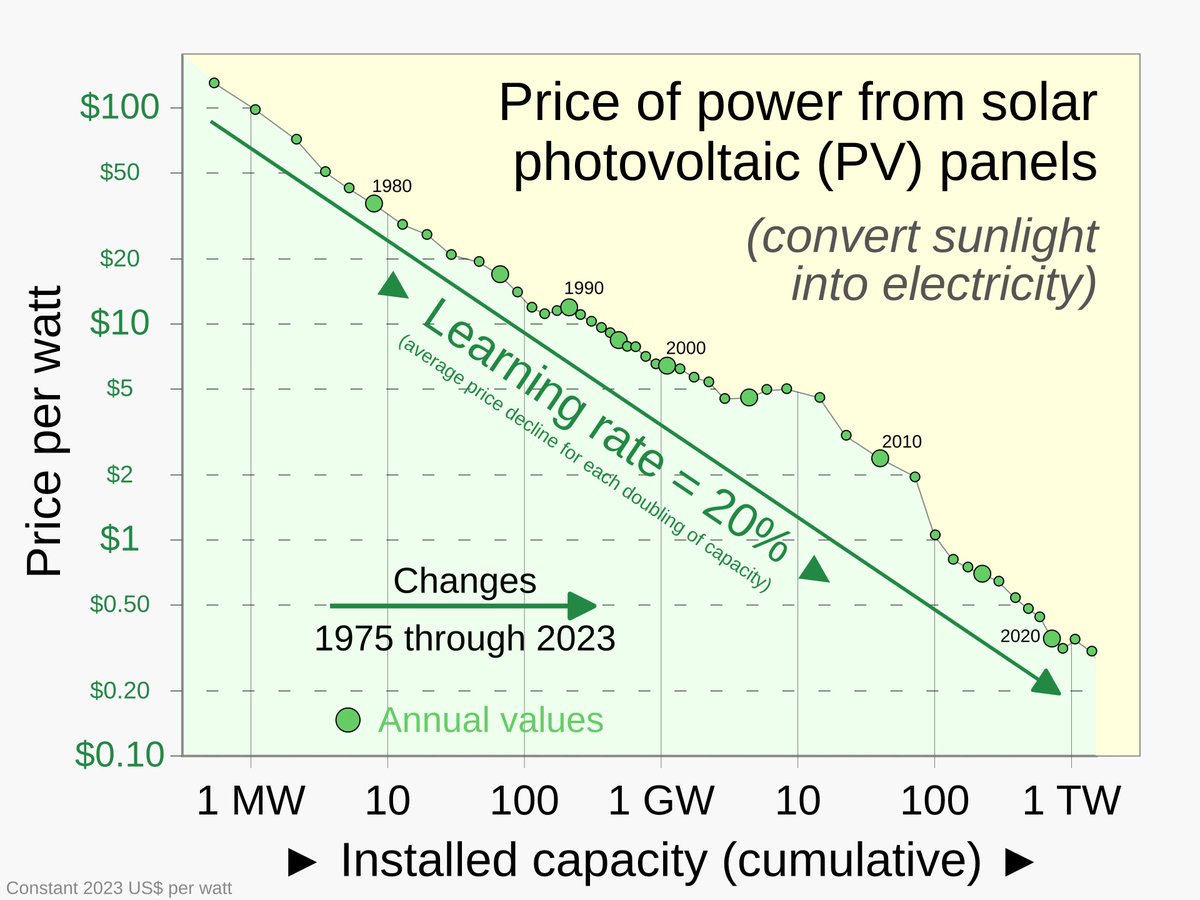

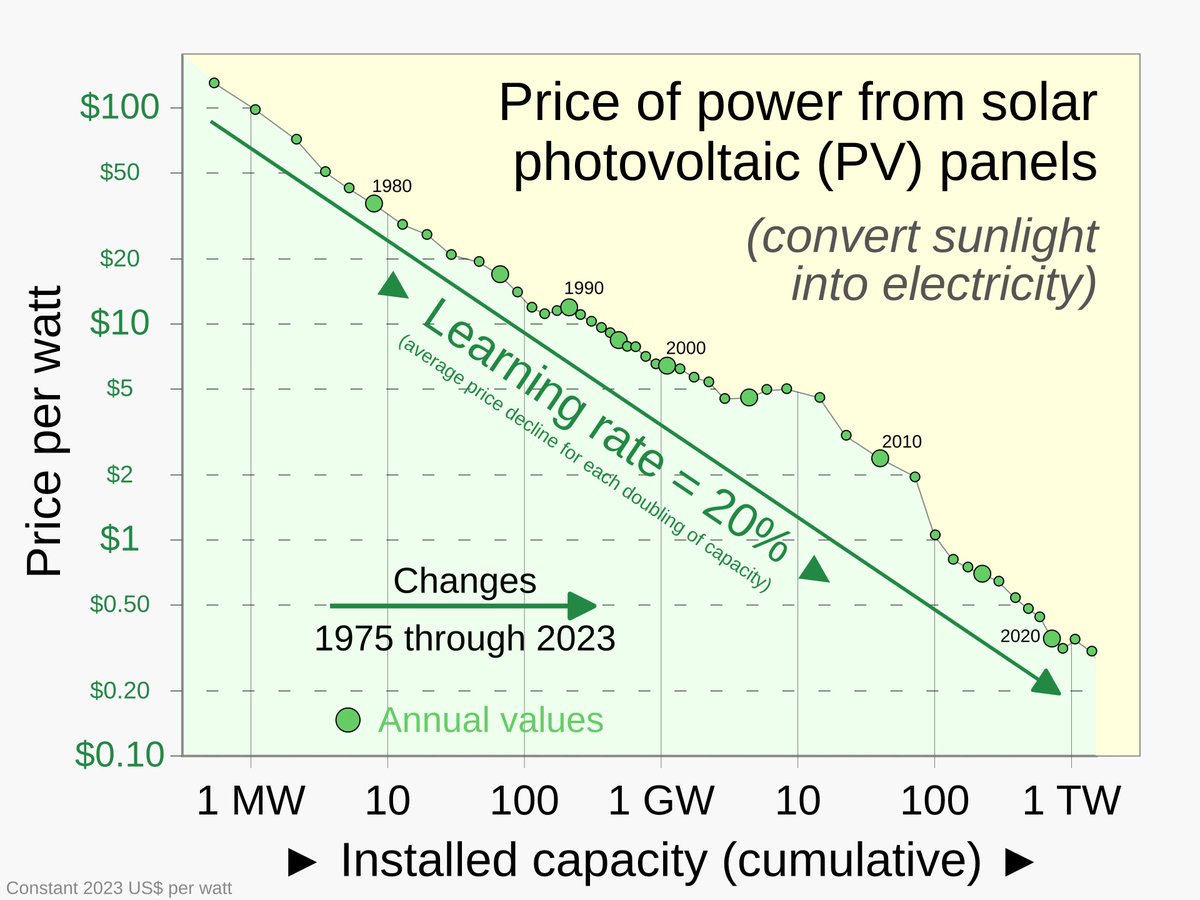

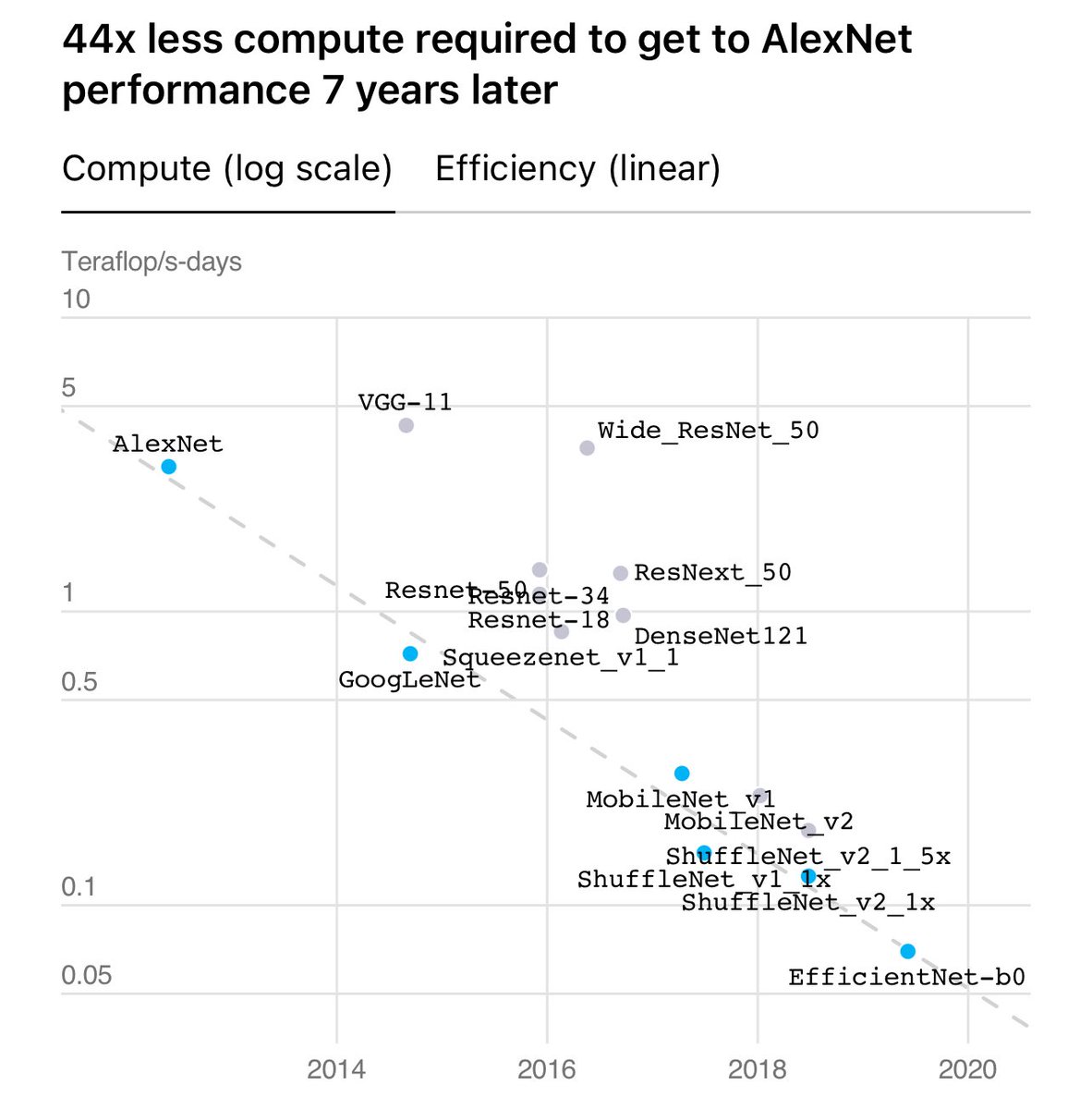

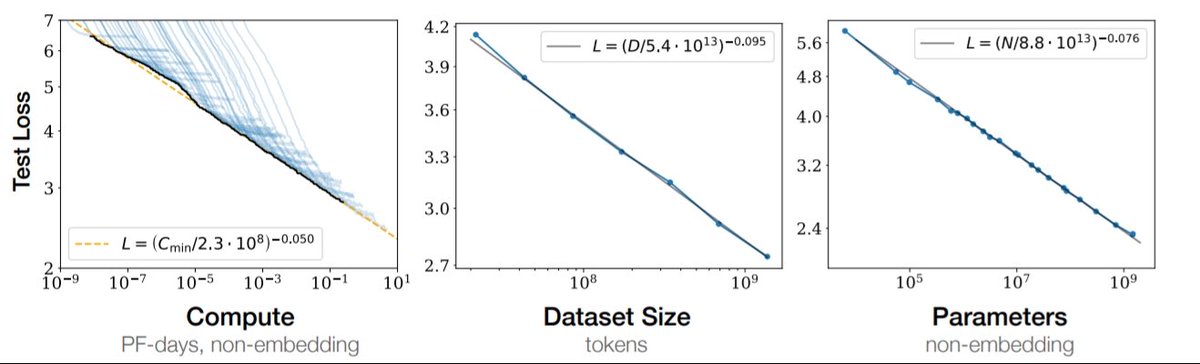

https://twitter.com/joel_bkr/status/1959293800855351693

Why should there be a power law? We actually see this sort of dynamic come up all the time in technological progress: from experience curve effects (think declining PV prices), to GDP growth, to efficiency improvements in various AI domains over time, to AI scaling laws.
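To make the experience-curve case concrete, here is a minimal sketch of the standard power-law form, where cost falls by a fixed fraction with every doubling of cumulative production. The function name, the 20% learning rate, and the starting cost are illustrative assumptions, not figures from the thread.

```python
import math


def experience_curve_cost(initial_cost: float,
                          cumulative_units: float,
                          learning_rate: float) -> float:
    """Unit cost under a power-law experience curve.

    cost(x) = initial_cost * x ** (-b), where b is chosen so that each
    doubling of cumulative production x cuts cost by `learning_rate`.
    All inputs here are hypothetical, for illustration only.
    """
    b = -math.log2(1.0 - learning_rate)
    return initial_cost * cumulative_units ** (-b)


# Assumed numbers: cost of 100 at 1 unit produced, 20% drop per doubling.
for units in (1, 2, 4, 8):
    print(units, round(experience_curve_cost(100.0, units, 0.20), 2))
```

On a log-log plot this relationship is a straight line, which is the signature of a power law.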

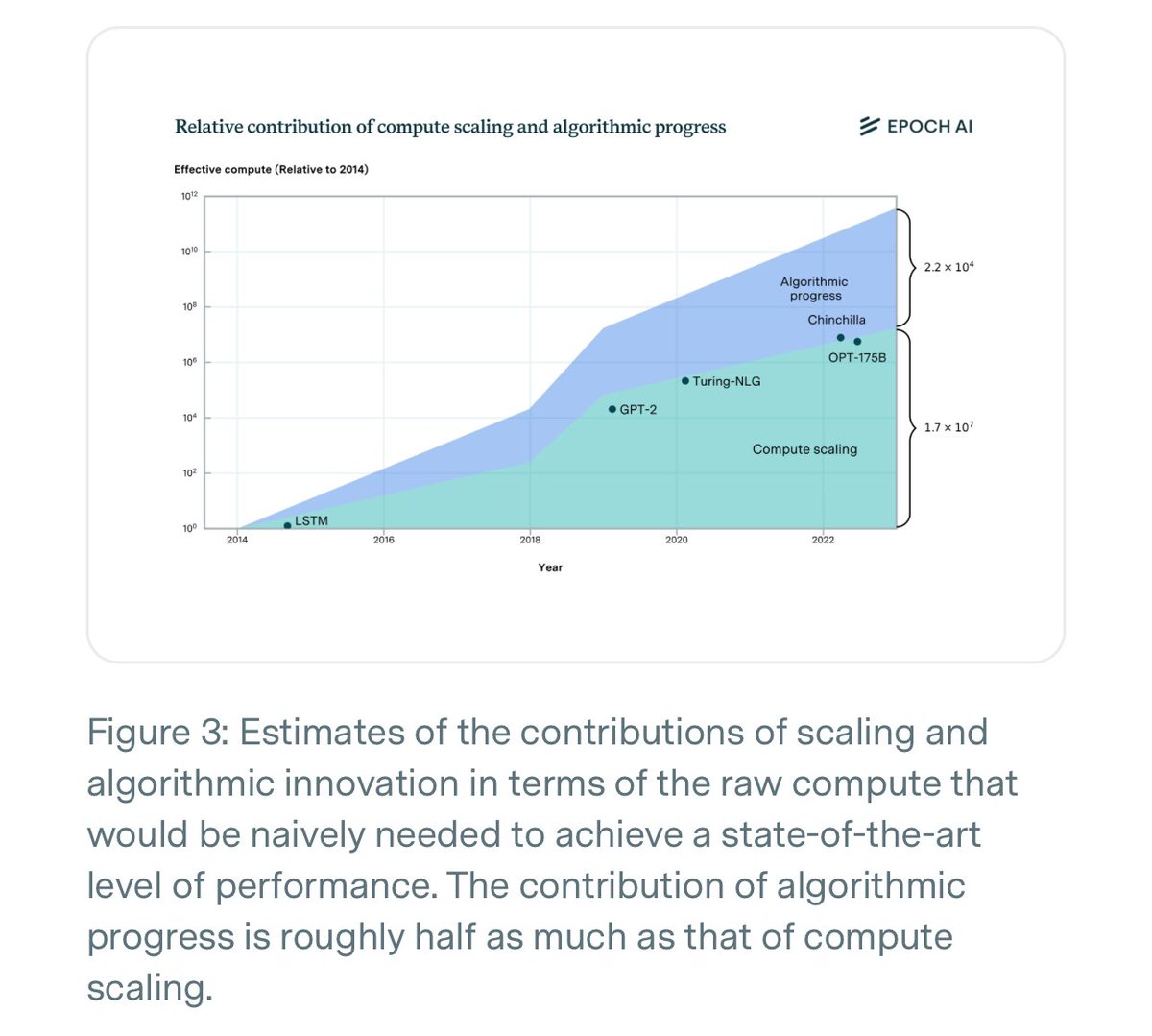

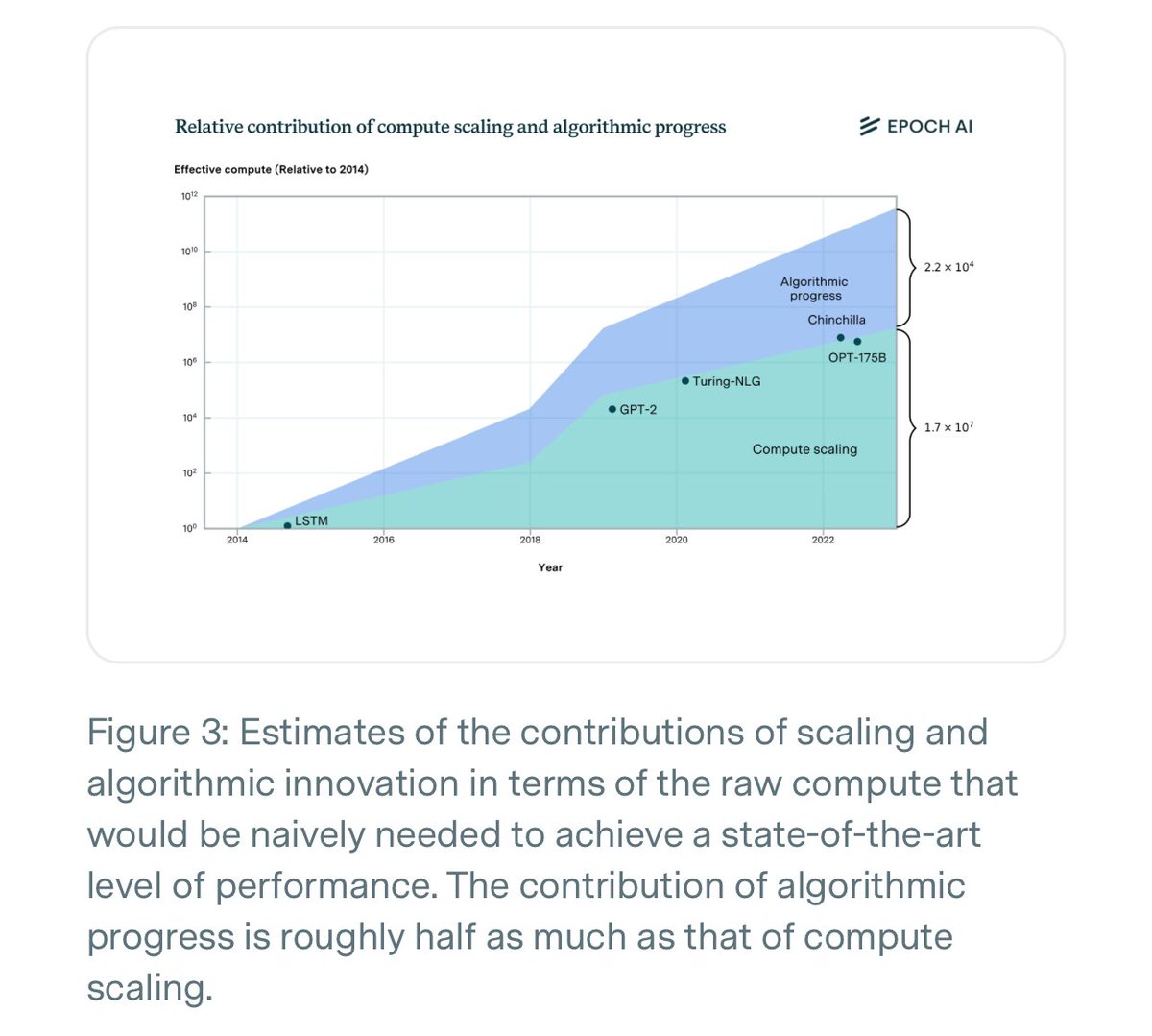

https://twitter.com/dwarkesh_sp/status/1926364571104653555

On the first point: Epoch finds that in language models, pretraining algorithmic progress has been around half as impactful as compute scale-up. Naively, if compute scale-up stopped, progress would slow down by 3x. This is a decent amount, but not enough to say “2030 or bust”.
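The 3x figure follows from simple arithmetic, sketched below. The sketch assumes the two contributions simply add (for example, as growth in log effective compute) and that algorithmic progress would continue at its current rate without compute growth. Both are naive assumptions, and the function name is hypothetical.

```python
def slowdown_if_compute_stops(algo_to_compute_ratio: float) -> float:
    """Factor by which progress slows if compute scale-up stops.

    Assumes progress = compute contribution + algorithmic contribution,
    and that the algorithmic contribution is unaffected by the halt.
    """
    compute = 1.0                           # normalize compute's contribution
    algo = algo_to_compute_ratio * compute  # "around half as impactful" -> 0.5
    return (compute + algo) / algo


print(slowdown_if_compute_stops(0.5))  # 3.0
```

If algorithmic progress itself depends on compute (for example, through experiments that need it), the true slowdown would be larger than this naive estimate.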

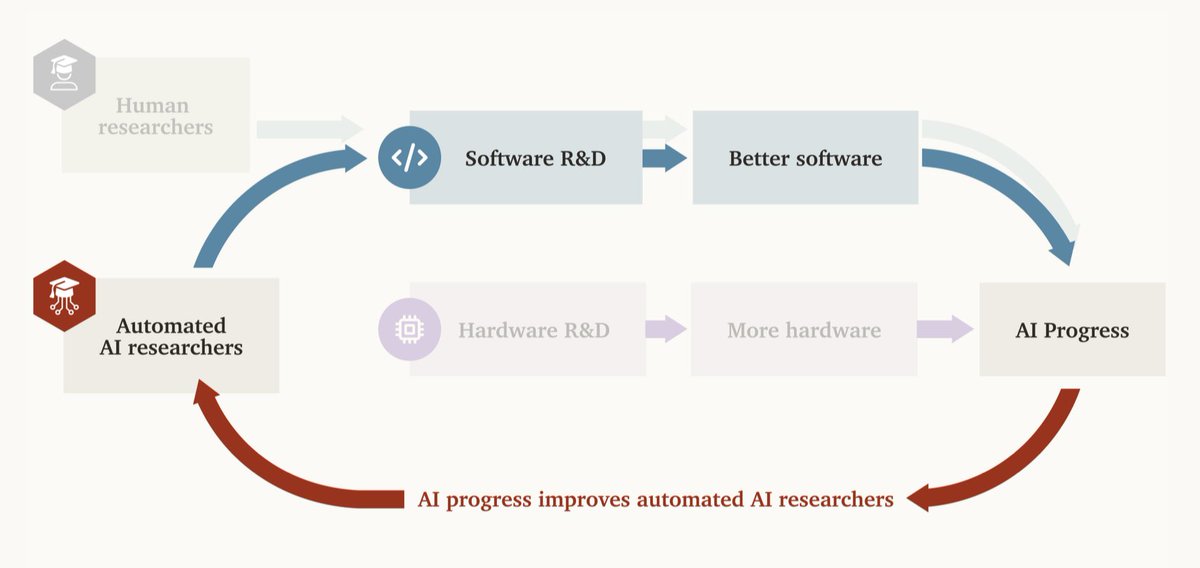

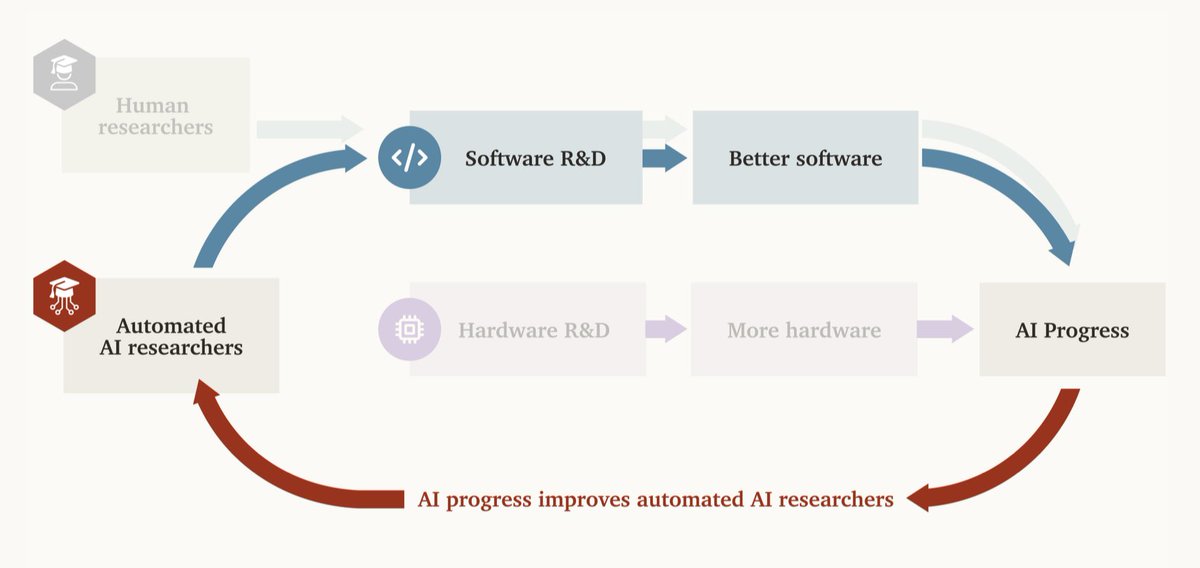

If AI R&D is fully automated, there will be a positive feedback loop: AI performs AI R&D -> AI progress -> better AI does AI R&D -> etc.

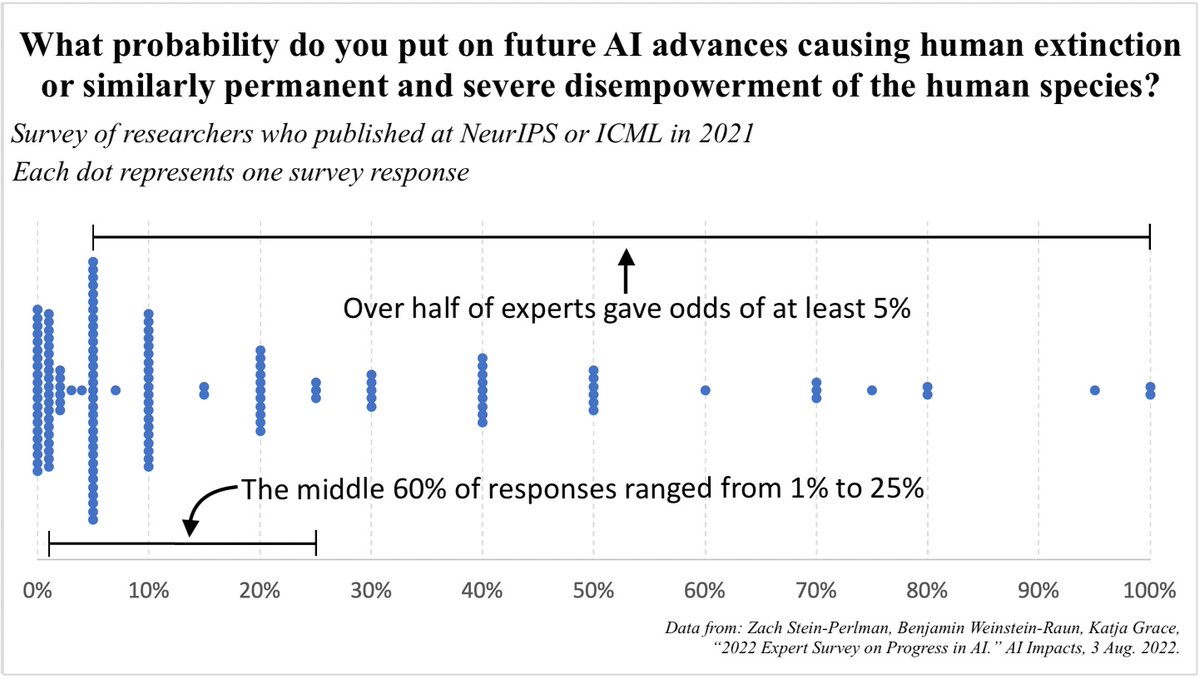

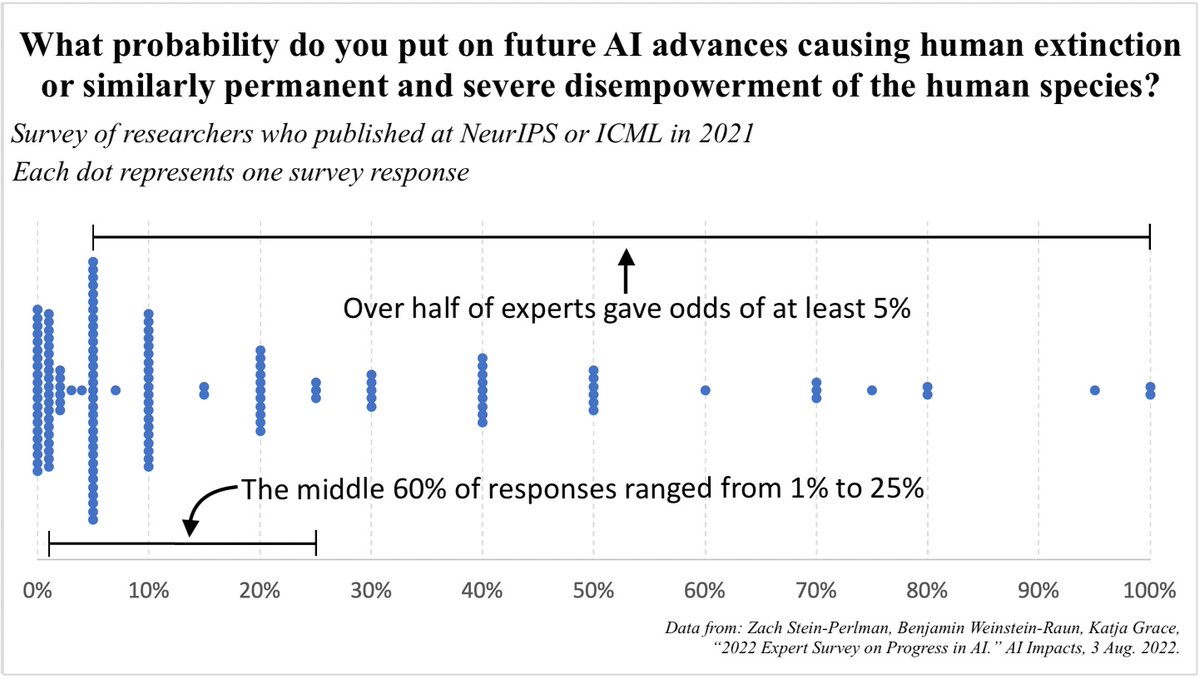

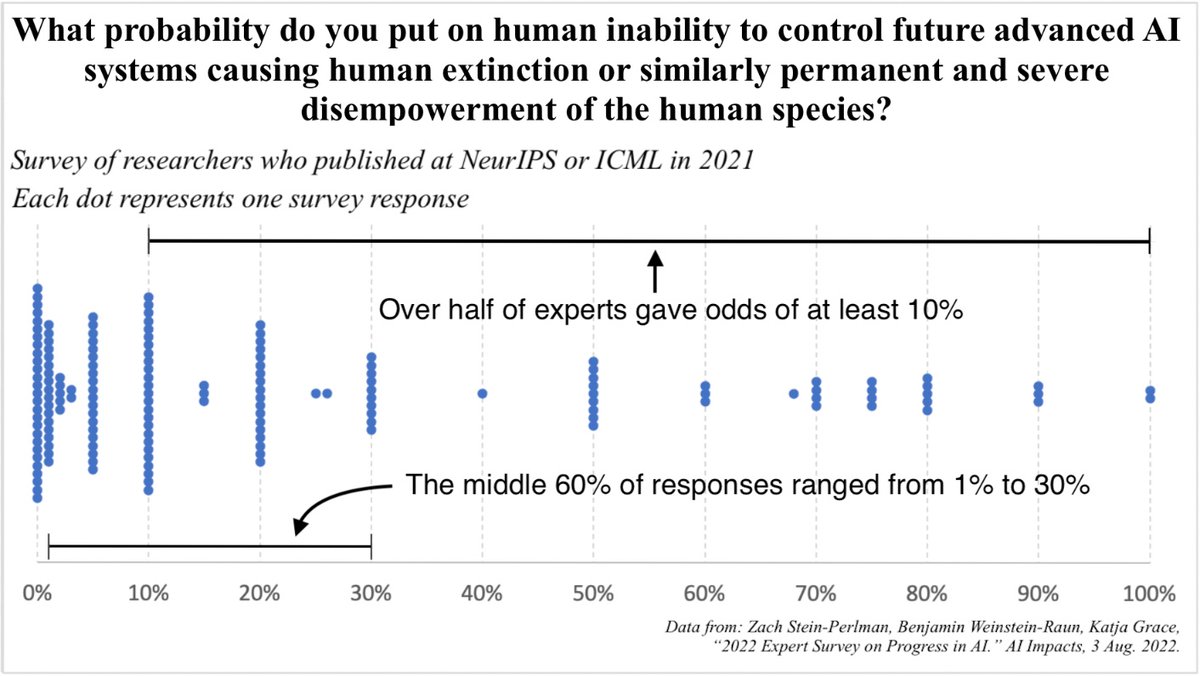

@benwr @KatjaGrace @AIImpacts Here's the graph for the other wording:

https://twitter.com/daniel_eth/status/1537284413393776640?s=20

"Yes, maybe you'll get them to do *something* about AI, but it's such a complicated issue that they'll totally misunderstand the issues at play"
