AGI research @DeepMind. Ex cofounder & CTO @vicariousai (acqd by Alphabet) and @Numenta. Triply EE (BTech IIT-Mumbai, MS&PhD Stanford). #AGIComics


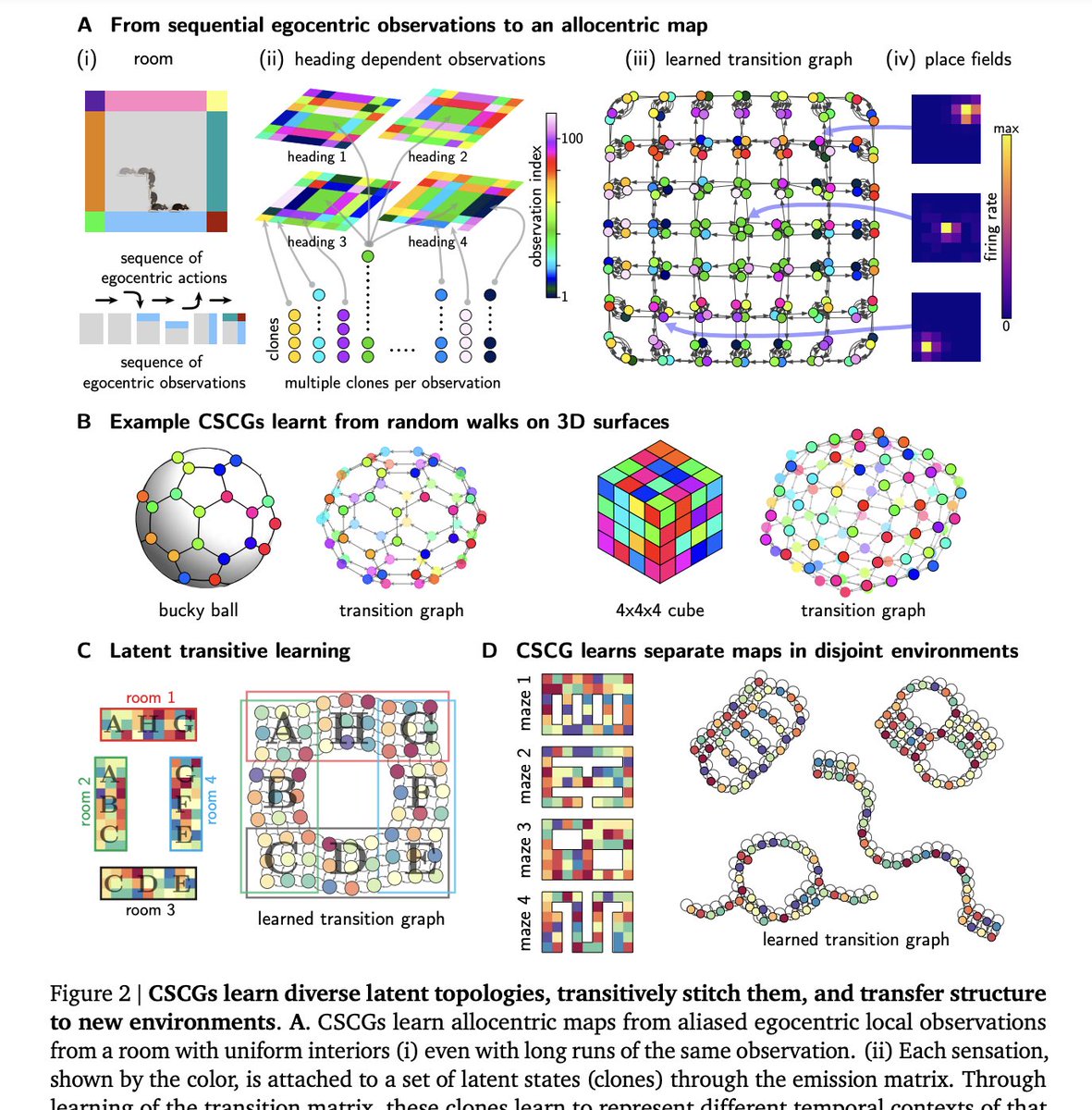

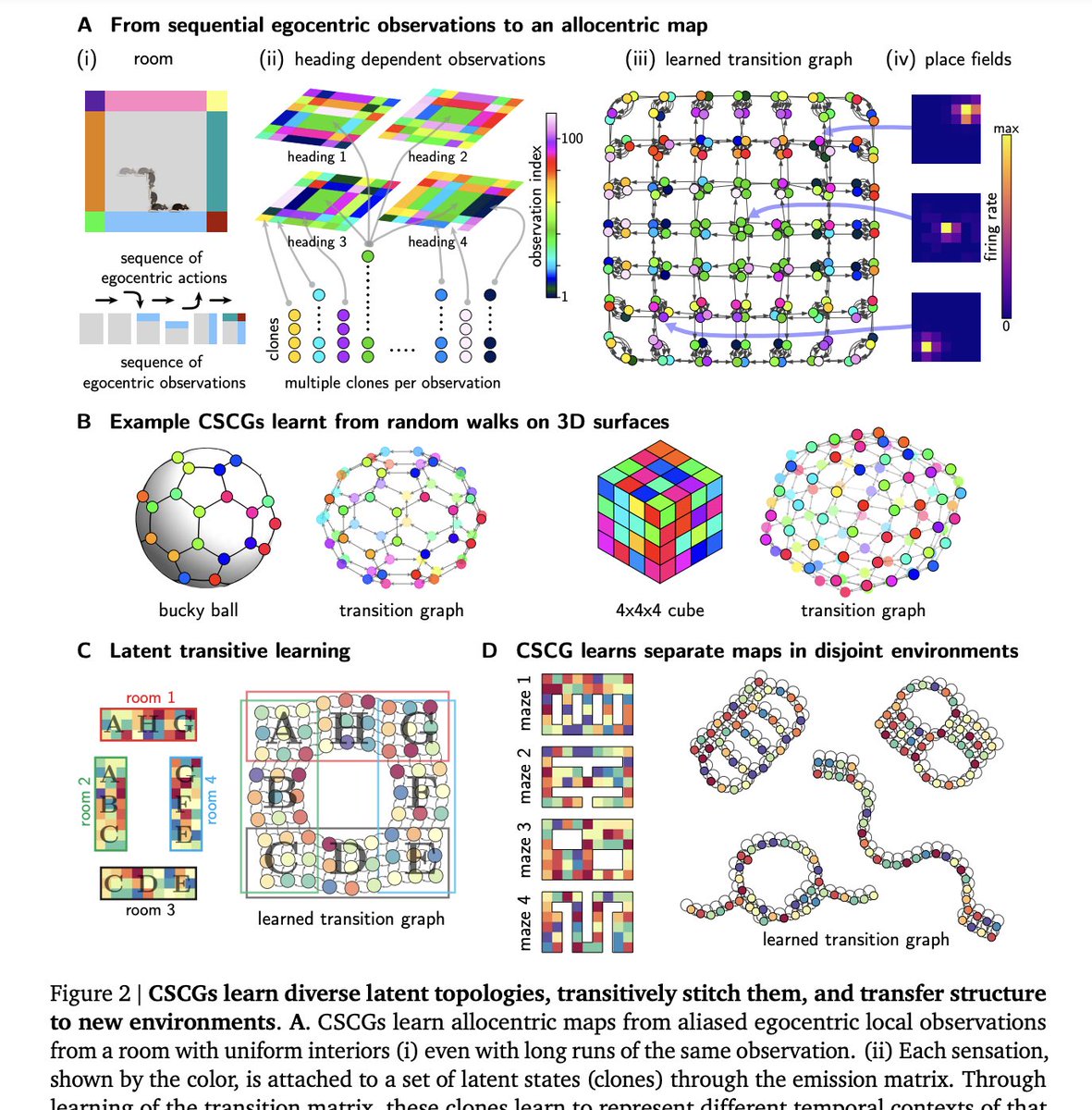

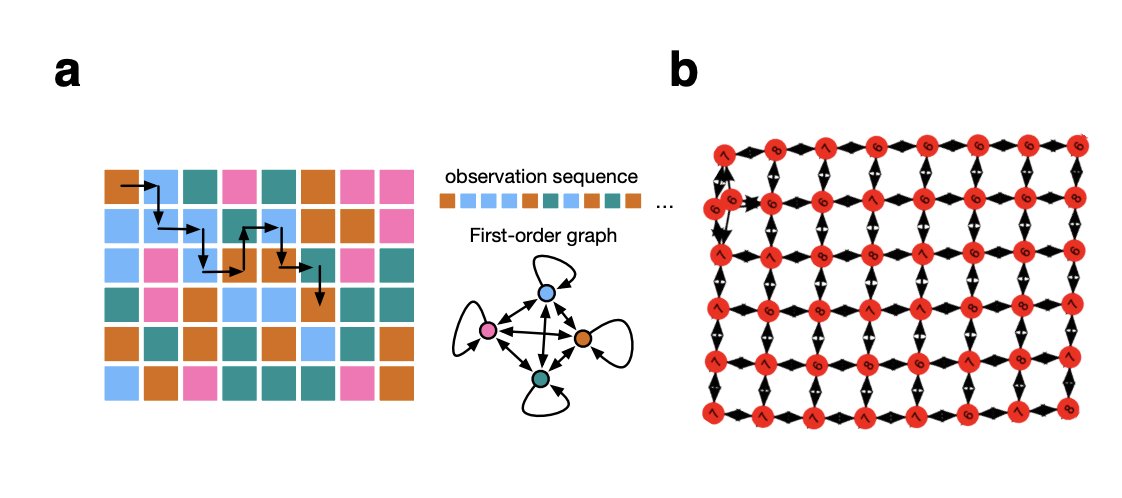

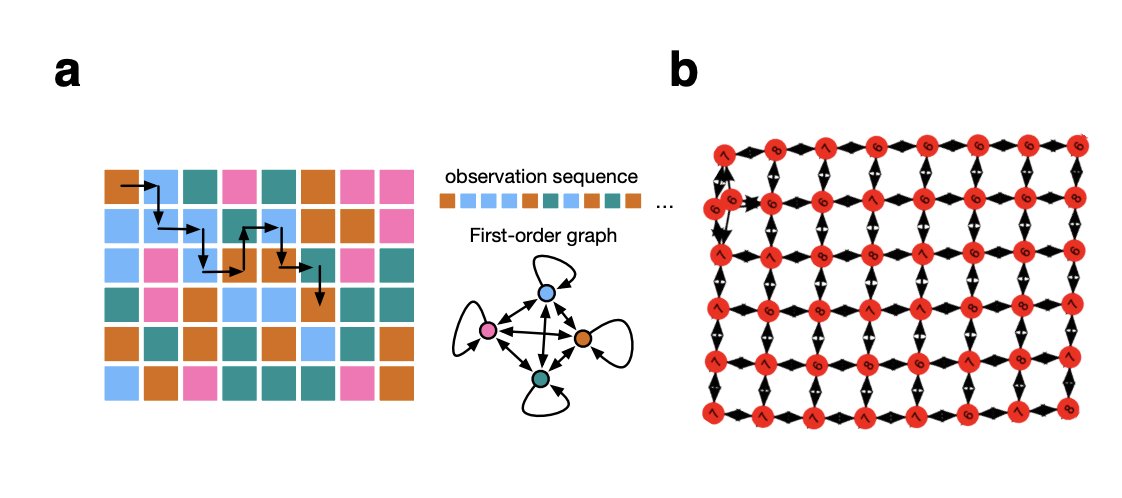

We do this by showing that another interpretable recurrent sequence model, Clone-structured causal graphs (CSCG), exhibits ICL once fast rebinding is introduced. CSCGs were previously used to learn spatial structure from purely sequential observations. 2/ arxiv.org/abs/2212.01508
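
For readers new to the clone structure, here is a minimal sketch, offered only as an illustration and not as the CSCG code from the paper. It assumes the usual clone-structured HMM picture: each observation gets several hidden "clone" states that all emit it deterministically, so one aliased symbol can map to different latent states depending on context. The transition matrix is hand-built rather than learned, the toy symbols A, B, C, D, X and the helpers `forward_filter`, `clones_of`, `state`, and `predict_next_obs` are hypothetical, and neither EM learning nor fast rebinding is shown.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]

def clones_of(obs: int, n_clones: int) -> range:
    # Deterministic emission: hidden state s emits observation s // n_clones.
    return range(obs * n_clones, (obs + 1) * n_clones)

def forward_filter(seq: Sequence[int], T: Matrix, pi: List[float],
                   n_clones: int) -> Tuple[List[List[float]], float]:
    """Forward pass of a clone-structured HMM: normalized belief over
    clones after each step, plus the log-likelihood of the sequence."""
    n = len(pi)
    beliefs: List[List[float]] = []
    log_lik = 0.0
    prior = pi
    for t, obs in enumerate(seq):
        if t > 0:
            prev = beliefs[-1]
            # Predict: push the previous belief through the transitions.
            prior = [sum(prev[i] * T[i][j] for i in range(n)) for j in range(n)]
        # Update: only clones of the observed symbol can have emitted it.
        allowed = set(clones_of(obs, n_clones))
        alpha = [prior[j] if j in allowed else 0.0 for j in range(n)]
        z = sum(alpha)
        if z == 0.0:
            raise ValueError(f"observation {obs} has zero probability at step {t}")
        log_lik += math.log(z)
        beliefs.append([a / z for a in alpha])
    return beliefs, log_lik

# Toy world (hypothetical): X is aliased; it is followed by B after A, by D after C.
A, B, C, D, X = range(5)
N_CLONES = 2
N = 5 * N_CLONES

def state(obs: int, k: int) -> int:
    # Index of clone k of observation obs.
    return obs * N_CLONES + k

T: Matrix = [[0.0] * N for _ in range(N)]
T[state(A, 0)][state(X, 0)] = 1.0  # A -> first clone of X
T[state(C, 0)][state(X, 1)] = 1.0  # C -> second clone of X
T[state(X, 0)][state(B, 0)] = 1.0  # X seen after A -> B
T[state(X, 1)][state(D, 0)] = 1.0  # X seen after C -> D
for src in (state(B, 0), state(D, 0)):
    T[src][state(A, 0)] = T[src][state(C, 0)] = 0.5
for i in range(N):  # unused clones: self-loop so every row sums to 1
    if sum(T[i]) == 0.0:
        T[i][i] = 1.0

pi = [0.0] * N
pi[state(A, 0)] = pi[state(C, 0)] = 0.5

def predict_next_obs(belief: List[float]) -> List[float]:
    # Distribution over the next observation implied by the current belief.
    nxt = [sum(belief[i] * T[i][j] for i in range(N)) for j in range(N)]
    return [sum(nxt[j] for j in clones_of(o, N_CLONES)) for o in range(5)]
```

For example, after `forward_filter([A, X], T, pi, N_CLONES)` the belief sits entirely on the first clone of X and the model predicts B next, while after `[C, X]` it sits on the second clone and predicts D: the same observation, disambiguated by context.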

https://twitter.com/vicariousai/status/1386708676879151109

The core idea, "space is a sequence", is discussed in this talk. Warning: it will change the way you think about the hippocampus :-) 2/

https://twitter.com/dileeplearning/status/1373191886907699203

https://twitter.com/biorxiv_neursci/status/1304287389167300610

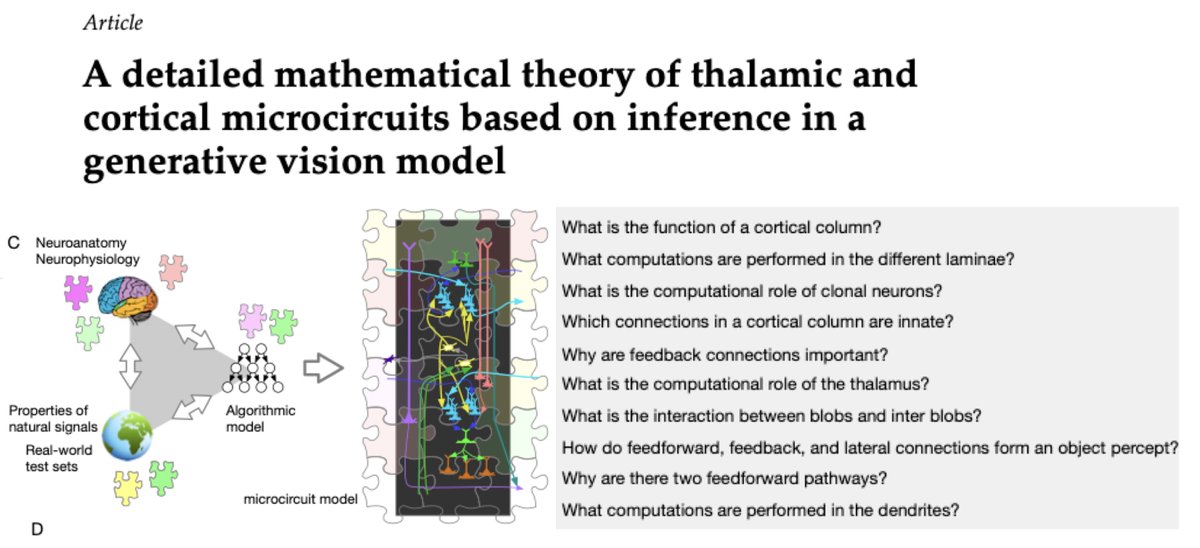

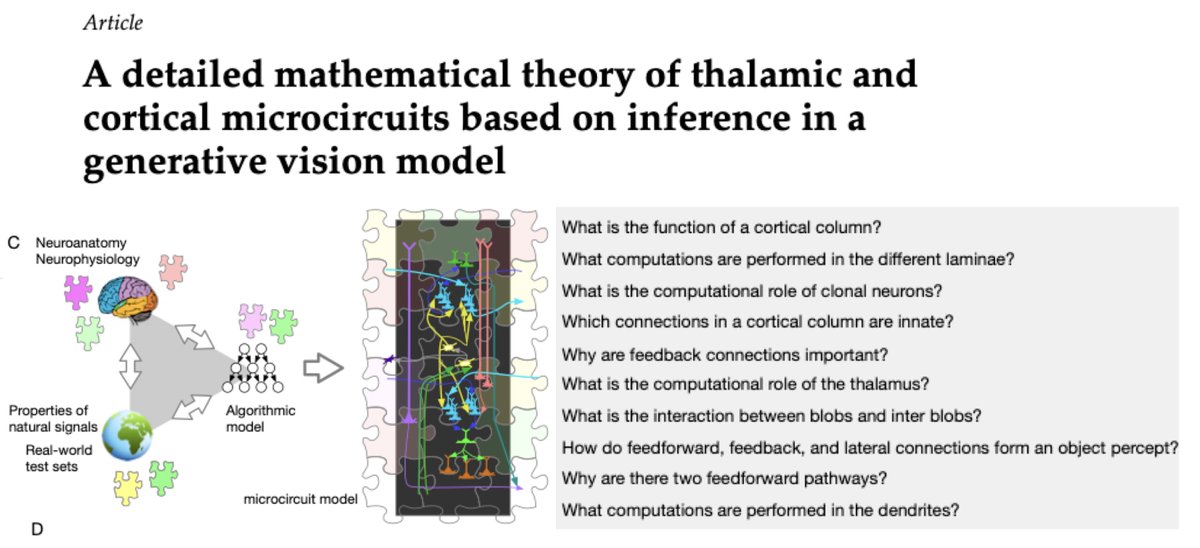

We start with RCN, our previously published neuroscience-inspired generative vision model (science.sciencemag.org/content/358/63…), and triangulate the inference computations in that model with data from neuroanatomy and physiology. The mutual constraints help slot the puzzle pieces into place. 2/

https://twitter.com/vicariousai/status/1203063863123464192

As @yael_niv pointed out in her recent article, learning context-specific representations from aliased observations is a challenge. Our agent can learn the layout of a room from severely aliased random-walk sequences, with only 4 unique observations in the room!
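
To make the aliasing problem concrete, here is a hypothetical toy setup, not the room used in the work: a 4x4 grid whose cells show only 4 distinct observations, and a random walk that records what the agent sees. The layout and the helpers `random_walk` and `aliasing` are illustrative assumptions. The sketch only generates the kind of data described; it does not learn anything. Its point is that every observation is shared by several locations, so the current observation alone cannot tell the agent where it is.

```python
import random
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Pos = Tuple[int, int]

# Hypothetical 4x4 room that shows only 4 distinct observations (0-3).
# It is a Latin square, so each observation appears in exactly 4 cells.
ROOM = [
    [0, 1, 2, 3],
    [2, 3, 0, 1],
    [1, 0, 3, 2],
    [3, 2, 1, 0],
]
MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right

def random_walk(steps: int, seed: int = 0) -> Tuple[List[int], List[Pos]]:
    """Return (observations, true positions) from a random walk in ROOM."""
    rng = random.Random(seed)
    rows, cols = len(ROOM), len(ROOM[0])
    r, c = rng.randrange(rows), rng.randrange(cols)
    obs: List[int] = []
    pos: List[Pos] = []
    for _ in range(steps):
        obs.append(ROOM[r][c])
        pos.append((r, c))
        dr, dc = rng.choice(MOVES)
        # Assumption: a move into a wall leaves the agent where it is.
        if 0 <= r + dr < rows and 0 <= c + dc < cols:
            r, c = r + dr, c + dc
    return obs, pos

def aliasing(obs: List[int], pos: List[Pos]) -> Dict[int, Set[Pos]]:
    """Map each observation to the distinct positions where it was seen."""
    where: Dict[int, Set[Pos]] = defaultdict(set)
    for o, p in zip(obs, pos):
        where[o].add(p)
    return dict(where)
```

The learner only ever sees `obs`; `pos` is kept here just to measure how ambiguous each observation is.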

The paper asks a very important question: given unlimited time (or "compute", as it is called these days) and training data, what sorts of tasks can be learned? Are there limits to such brute-force approaches? (2)

Cognitive programs is inspired by Barsalou's Perceptual Symbol Systems (PSS) theory, which is also relevant to discussions of symbolic-connectionist integration. @GaryMarcus citeseerx.ist.psu.edu/viewdoc/downlo…