Product Marketing @CerebrasSystems

Prev: Nvidia, Ark Invest, 21co

The Ethereum blockchain gives rewards to computers that validate transactions. If you hold ETH, you can validate transactions. The easiest way to do this is to use a service like Lido. The yield is currently ~6%. lido.fi
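
To make the ~6% figure concrete, here is a minimal Python sketch of the rewards it implies. It rests on several assumptions:

- The quoted yield is an annual rate that stays constant and compounds once per year.
- The staking service's fee and ETH price changes are ignored.
- The function name `staking_rewards` is hypothetical. It is not part of any Lido API.

```python
def staking_rewards(eth_staked: float, annual_yield: float = 0.06, years: int = 1) -> float:
    """Return ETH earned after `years`, assuming once-per-year compounding.

    Simplifying assumptions: the yield stays constant, and service fees
    and ETH price movements are ignored.
    """
    balance = eth_staked * (1 + annual_yield) ** years
    return balance - eth_staked


# Example: staking 10 ETH for one year at ~6%.
print(round(staking_rewards(10.0), 4))  # 0.6
```

Real staking rewards accrue continuously and the rate moves with network conditions, so treat this as a back-of-the-envelope estimate.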

There are three ways of paying for software infrastructure:

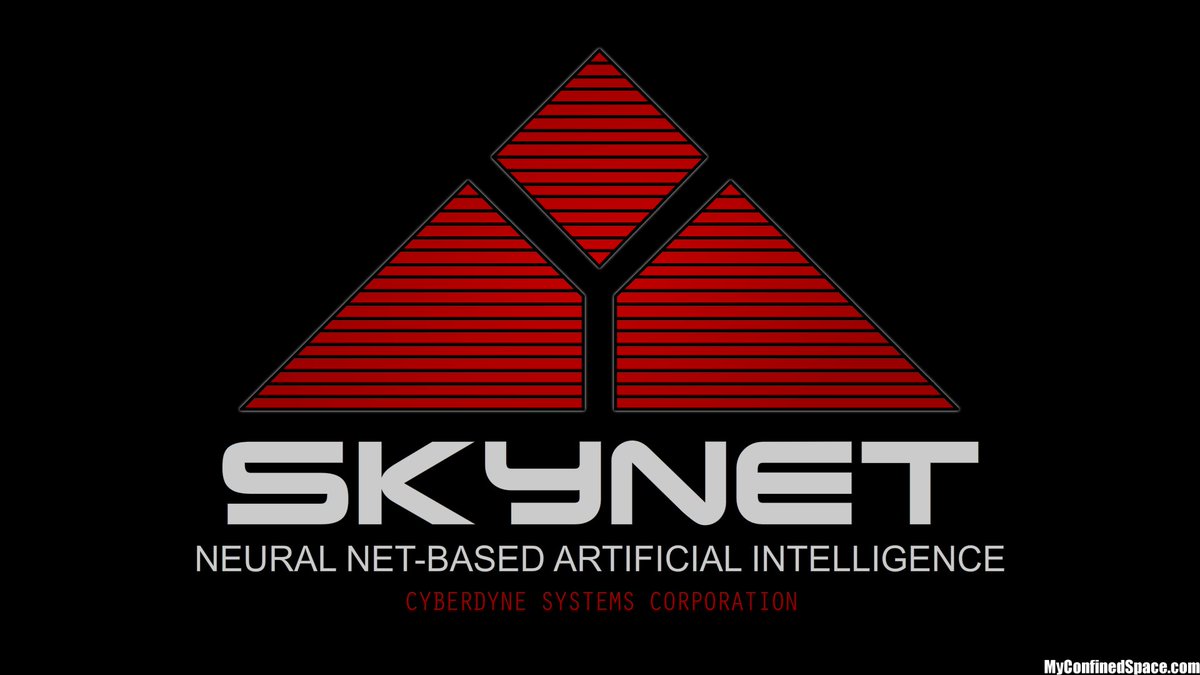

2/ This is the chip that powers the T-800. Based on its appearance and commentary from chief architect Miles Dyson, the movie makes three predictions about future processors: 1) neural net acceleration, 2) multi-core design, and 3) 3D fabrication.

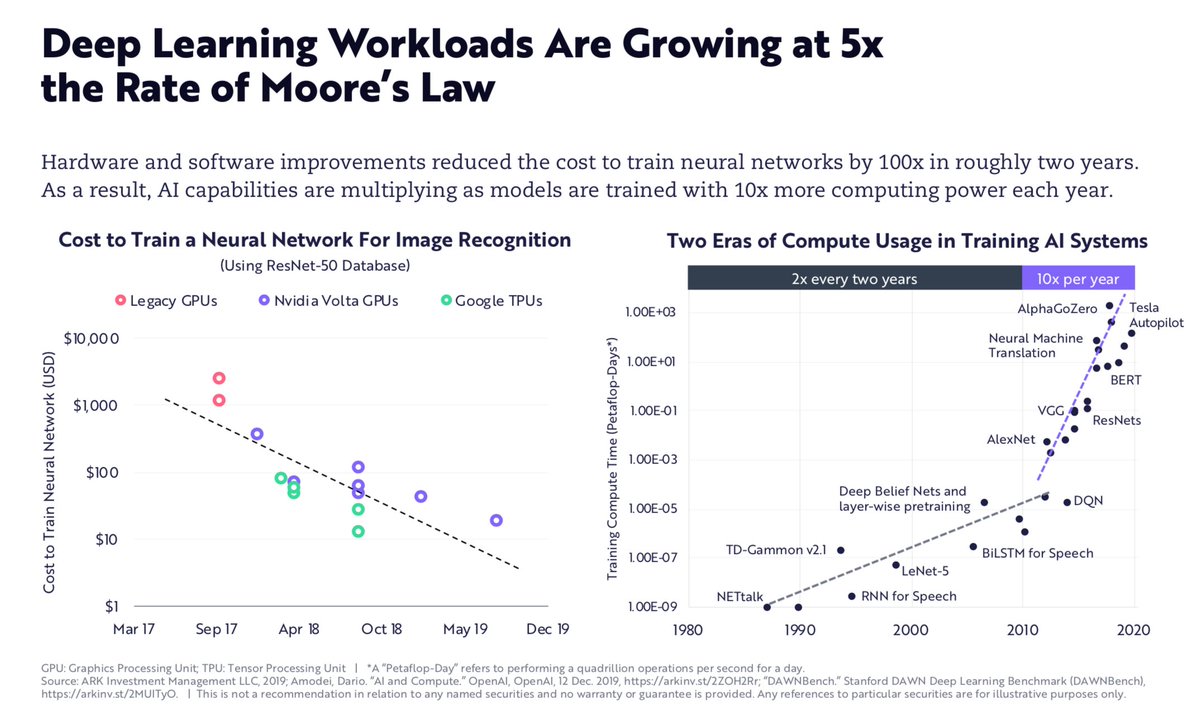

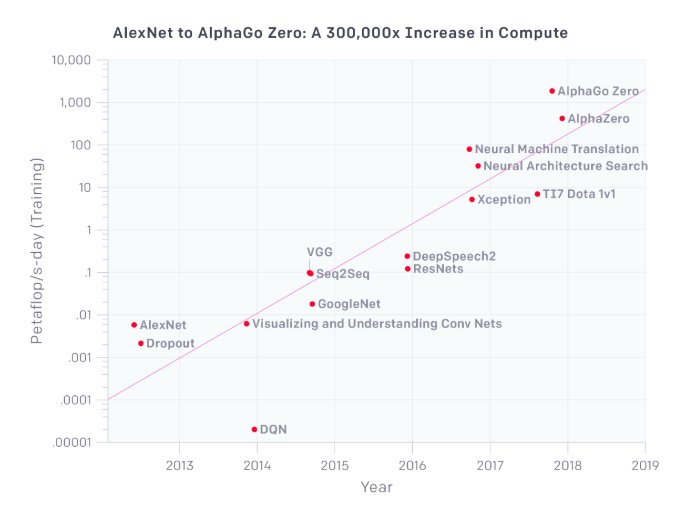

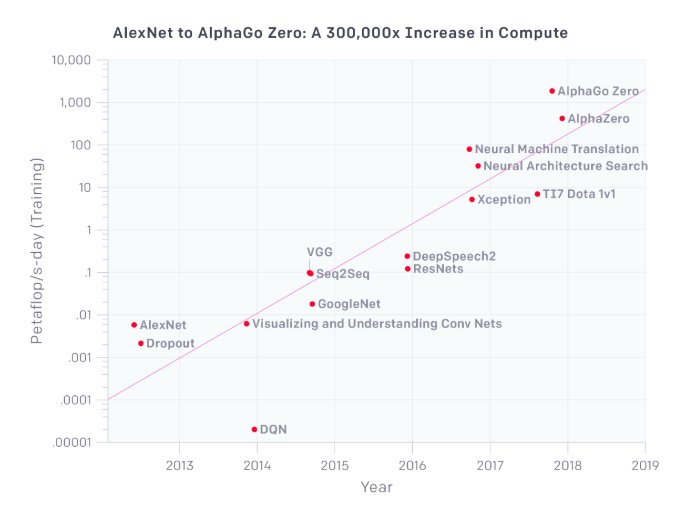

Deep learning continues to improve at astounding rates. In 2017 it cost $1,000 to train ResNet50 on the cloud; now it costs $10.
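
The drop from $1,000 to $10 is a 100x reduction. The sketch below also converts it into an average annual rate. The tweet does not say when "now" is, so the elapsed `years` value is a hypothetical input. `cost_reduction` is an illustrative helper, not an existing library function.

```python
def cost_reduction(start_cost: float, end_cost: float, years: float) -> tuple[float, float]:
    """Return (total reduction factor, average annual reduction factor)."""
    total = start_cost / end_cost
    annual = total ** (1 / years)  # geometric mean of the yearly reductions
    return total, annual


total, annual = cost_reduction(1_000, 10, years=2)  # years=2 is a hypothetical assumption
print(total)             # 100.0
print(round(annual, 2))  # 10.0
```

A geometric mean is used because cost reductions compound: two successive 10x drops give 100x, not 20x.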

10/ Large models like Google’s Neural Machine Translation don’t even fit in one GPU’s external memory. Often they have to be split up across dozens of GPUs/servers. This increases latency by another 10-100x.
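
A rough way to see why large models get split up is to divide the model's weight footprint by per-GPU memory. This minimal sketch makes several simplifications:

- It counts only the weights. Activations and optimizer state make the real number larger.
- The 10B-parameter model and the 16 GB GPU are hypothetical numbers. They are not figures for Google's Neural Machine Translation.

```python
import math


def min_gpus_for_weights(num_params: int, bytes_per_param: int, gpu_memory_gb: float) -> int:
    """Lower bound on GPUs needed just to hold the model weights.

    Ignores activations, optimizer state, and framework overhead,
    all of which push the real number higher.
    """
    model_bytes = num_params * bytes_per_param
    gpu_bytes = gpu_memory_gb * 1e9
    return math.ceil(model_bytes / gpu_bytes)


# Hypothetical example: 10B parameters in fp32 (4 bytes each) on 16 GB GPUs.
print(min_gpus_for_weights(10_000_000_000, 4, 16))  # 3
```

Every extra device in this count adds cross-GPU or cross-server communication, which is where the added latency comes from.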

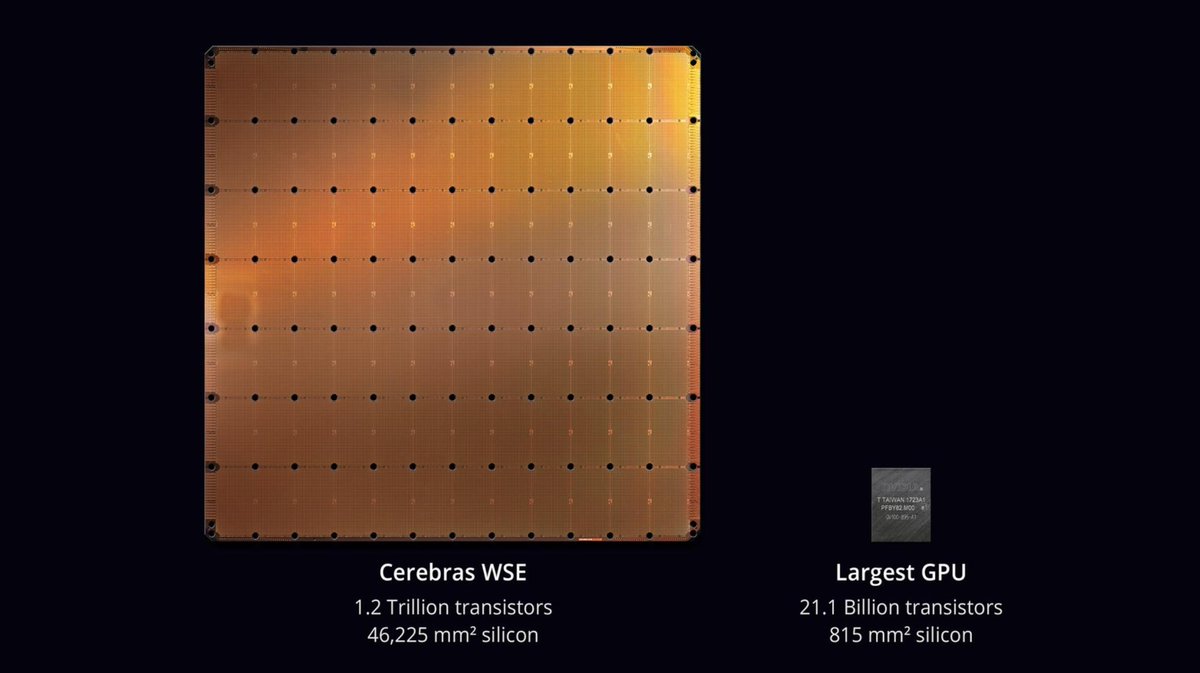

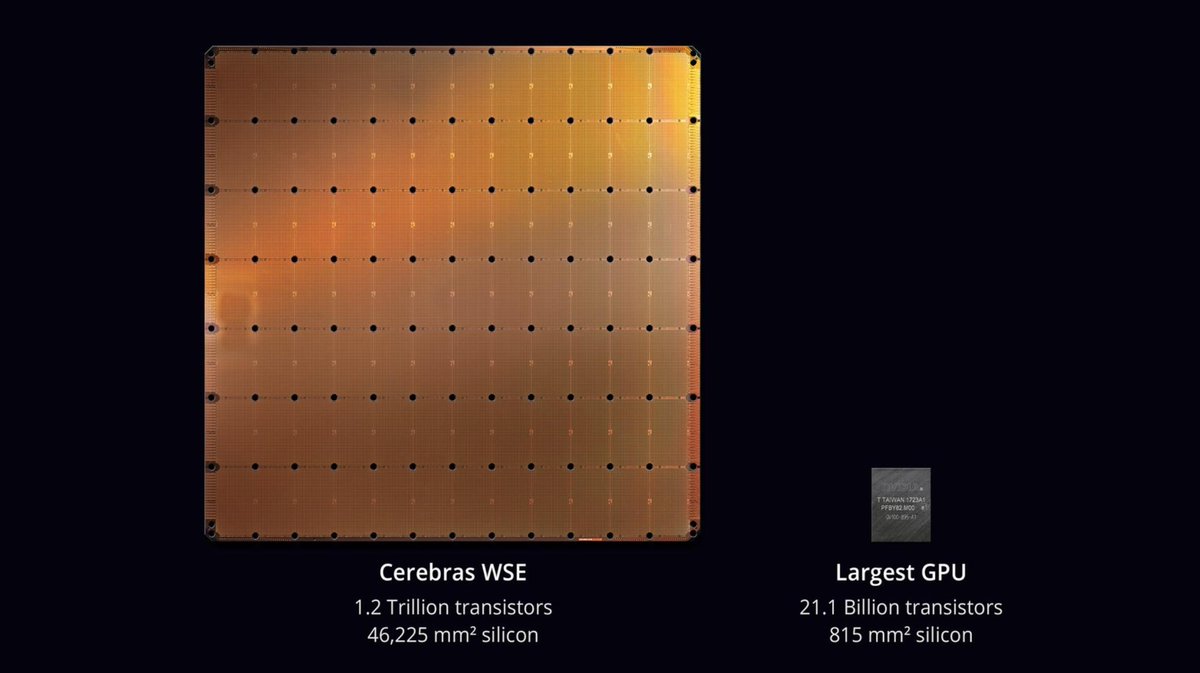

2/ It’s no coincidence that the fastest AI chip today, Nvidia’s V100, is also the largest at 815 mm². More area = more cores and memory.

2/ The pure cloud strategy is clearly not working for Google—you only enable your competitors when you have no other option. Google is trying to win over customers by being the most open, by being "multi-cloud", by being a meta-layer to orchestrate all workloads.

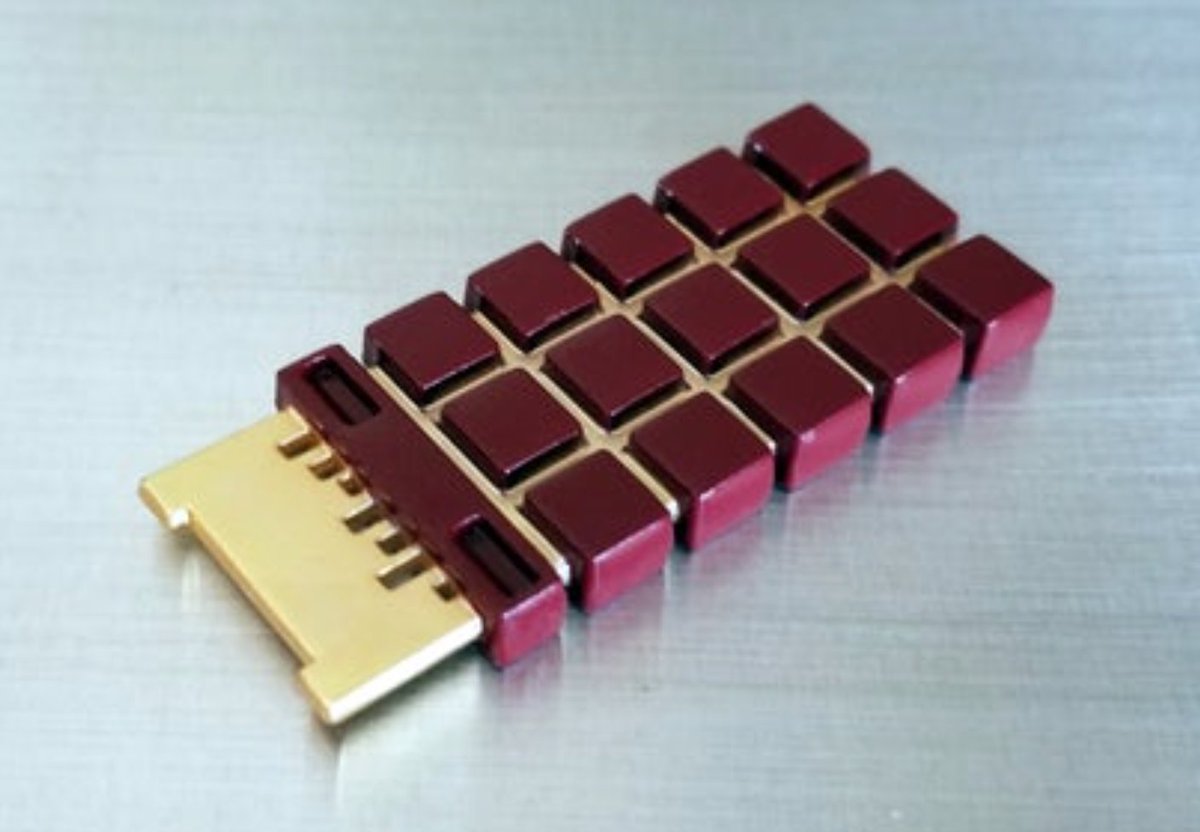

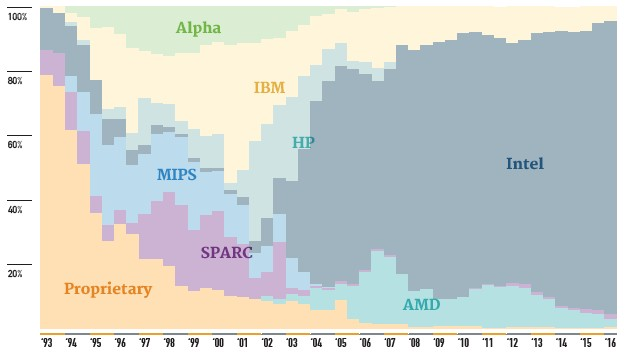

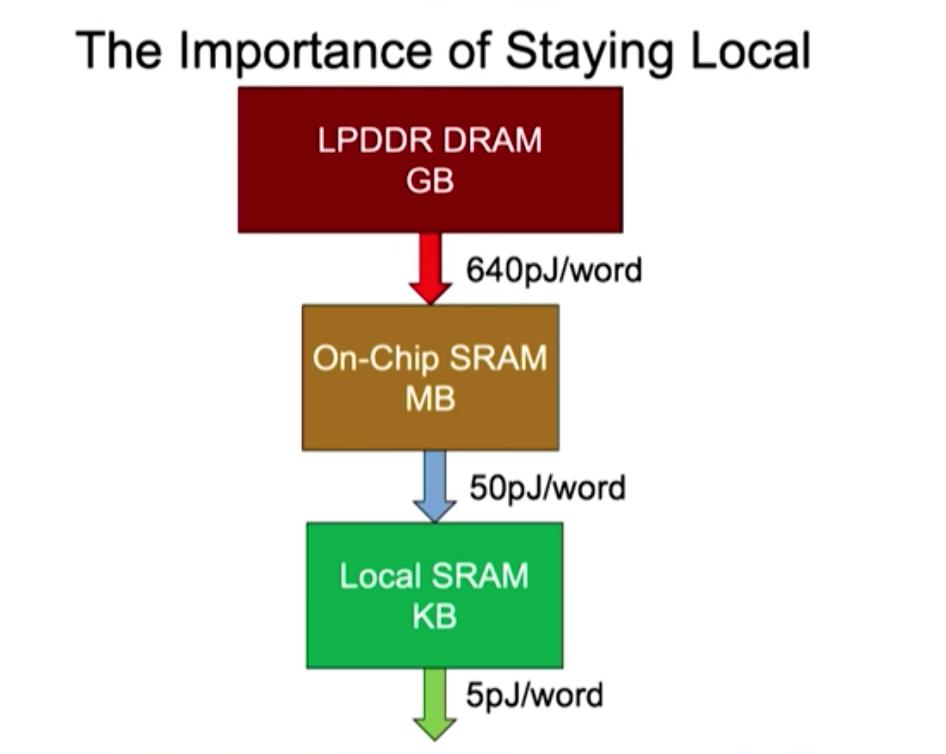

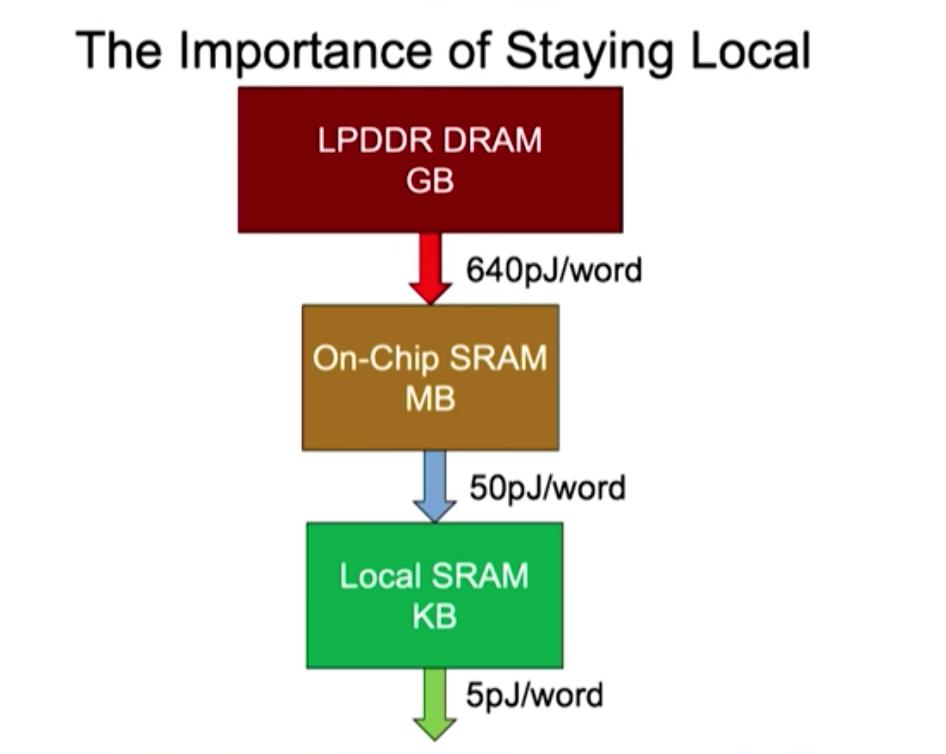

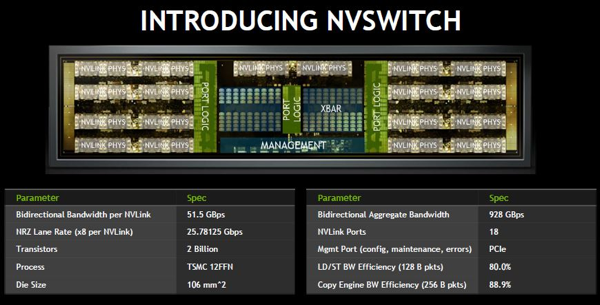

2/ There are three factors that drive system performance: transistor scaling, chip architecture, and chip count. Let’s see how these have changed since 2012.
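
One way to read these three factors is as a product of relative gains since 2012. This assumes they compose multiplicatively, which is a simplification the thread does not state:

$$
G_{\text{system}} \approx G_{\text{transistor}} \times G_{\text{arch}} \times G_{\text{chips}}
$$

Each $G$ is the improvement factor relative to 2012, and $G_{\text{chips}}$ is the growth in the number of chips working together. For instance, hypothetical gains of 2x, 5x and 10x would compound to about 100x.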

2/ Today GPUs have a monopoly position on deep learning training. But that’s set to change: DL ASICs could boost performance by 10-100x.
