Associate Professor of Psychology @UniOslo | Behavioral neuroendocrinology, psychophysiology & meta-science | @hertzpodcast producer/co-host

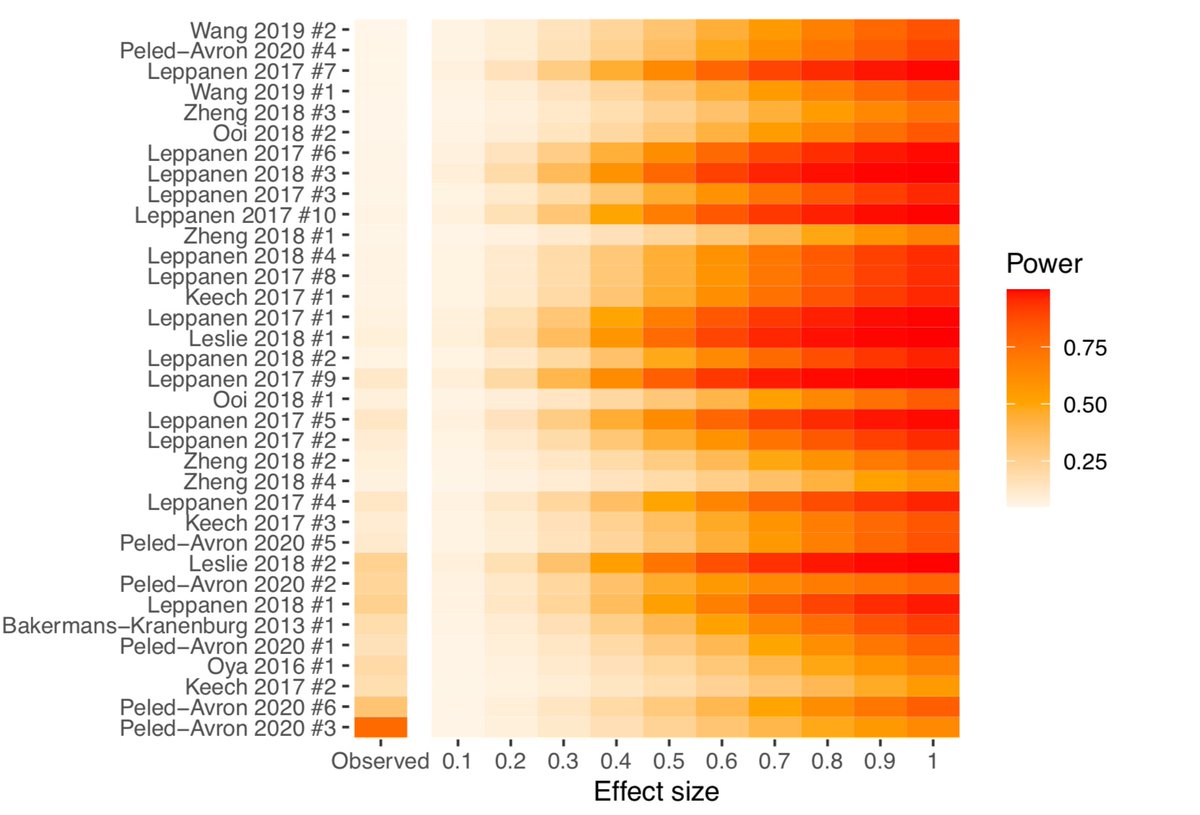

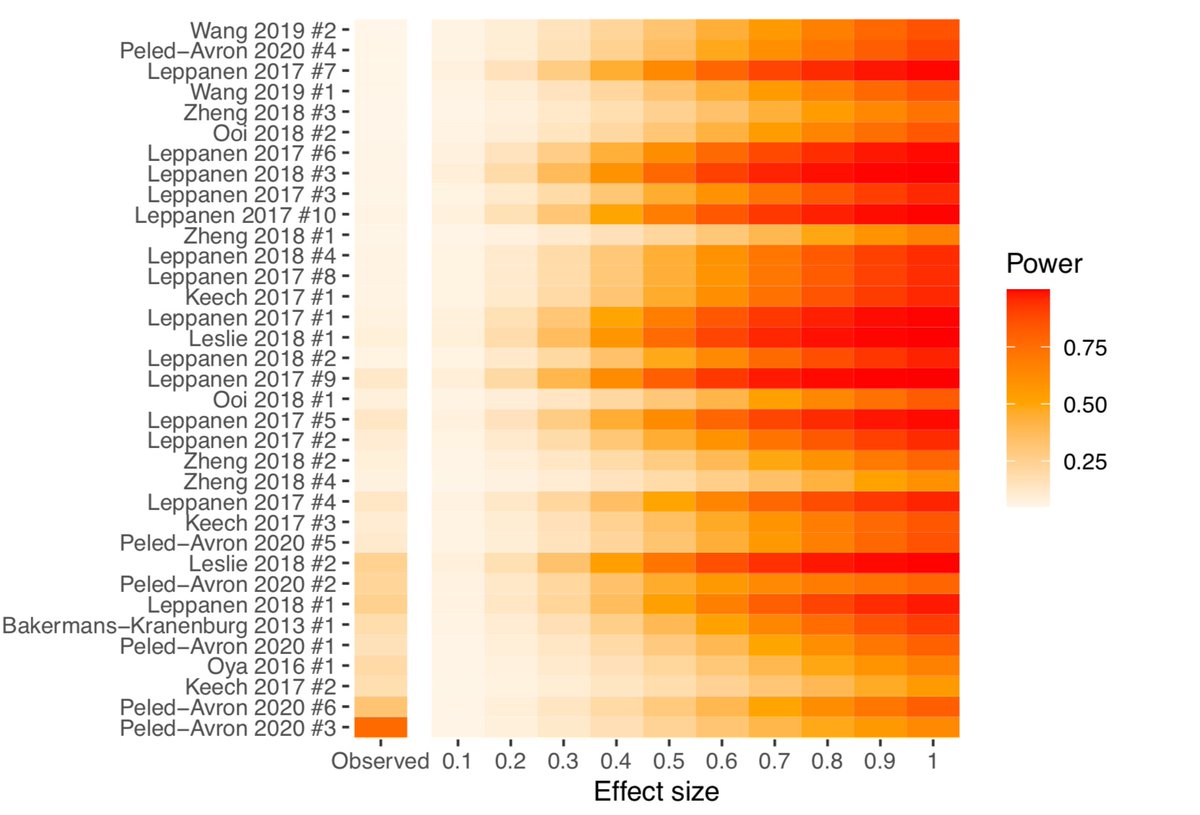

And here's your annual reminder that a "study" cannot be underpowered, but rather, a *design and test combination* can be underpowered for detecting hypothetical effect sizes of interest towardsdatascience.com/why-you-should…
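To make that concrete, here is a minimal sketch (not taken from the linked article) of how power changes with the design, the test, and the hypothetical effect size while the sample size stays fixed. It uses a normal approximation from the Python standard library; the function name `approx_power`, the sample size of 64, and the effect sizes are illustrative assumptions. Note that Cohen's d (between) and d_z (within) are on different scales, so the same number is plugged in for both only for illustration.

```python
from statistics import NormalDist

NORM = NormalDist()  # standard normal, stdlib only


def approx_power(effect_size, n, design="between", alpha=0.05):
    """Approximate two-sided power using a normal approximation.

    effect_size: hypothetical standardized effect
                 (Cohen's d for "between", d_z for "within").
    n: participants per group ("between") or number of pairs ("within").
    """
    if design == "between":
        ncp = effect_size * (n / 2) ** 0.5  # two independent groups of size n
    elif design == "within":
        ncp = effect_size * n ** 0.5        # one sample of paired differences
    else:
        raise ValueError("design must be 'between' or 'within'")
    z_crit = NORM.inv_cdf(1 - alpha / 2)
    # Probability of landing in either rejection region
    return NORM.cdf(ncp - z_crit) + NORM.cdf(-ncp - z_crit)


if __name__ == "__main__":
    # Same n throughout: only the design and the hypothetical effect change
    for design in ("between", "within"):
        for es in (0.2, 0.5, 0.8):
            power = approx_power(es, 64, design)
            print(f"{design:8s} effect={es:.1f} n=64 power={power:.2f}")
```

The same 64 observations can be well powered for one design, test, and effect size and badly underpowered for another, which is why "underpowered" only makes sense relative to all three.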

There's been a lot of talk recently about the quality of studies that are included in meta-analyses—how useful is a meta-analysis if it's just made up of studies with low evidential value? https://twitter.com/GidMK/status/1641291679096512514?s=20

https://twitter.com/dsquintana/status/1540231786634121217

When I was an undergraduate psychology student there was no discussion of this; all the studies we were taught just worked 🪄

One thing I want to add: the psychological sciences need to broadly adopt practices that help us determine when we're wrong. The standard NHST p-value approach cannot be used to provide support for absence of an effect. This paper provides two solutions academic.oup.com/psychsocgeront…
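One widely used way to test for the absence of a meaningful effect is equivalence testing via two one-sided tests (TOST). Whether this matches either of the two solutions in the linked paper is an assumption here, since the link is truncated. Below is a minimal sketch using a normal approximation on summary statistics; the function name `tost_z`, the equivalence bounds, and the example numbers are all hypothetical.

```python
from statistics import NormalDist


def tost_z(mean_diff, se, low, high, alpha=0.05):
    """Two one-sided tests (TOST) with a normal approximation.

    mean_diff: observed effect estimate
    se:        its standard error
    low, high: equivalence bounds (smallest effect sizes of interest)
    Returns (p_tost, equivalent), where p_tost is the larger one-sided p-value.
    """
    norm = NormalDist()
    # H0: true effect <= low   vs   H1: true effect > low
    p_lower = 1 - norm.cdf((mean_diff - low) / se)
    # H0: true effect >= high  vs   H1: true effect < high
    p_upper = norm.cdf((mean_diff - high) / se)
    p_tost = max(p_lower, p_upper)
    return p_tost, p_tost < alpha


if __name__ == "__main__":
    # Hypothetical numbers: a tiny observed effect with a small standard error
    p, equivalent = tost_z(mean_diff=0.02, se=0.10, low=-0.3, high=0.3)
    print(f"TOST p = {p:.4f}, equivalent within bounds: {equivalent}")
```

A non-significant NHST result alone says nothing like this: here the claim "the effect is smaller than the bounds" is itself put to a test, and it can fail when the estimate is too noisy.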

https://twitter.com/dsquintana/status/1451797415632293888

1. The bottomless soup bowl study 🍜🍜🍜🍜

So, let's begin with some background.

I learnt this from reading this super interesting book from @GarethLeng and @RhodriLeng mitpress.mit.edu/books/matter-f…

Was a pleasure working with Alex, @sallyagrace, @DirkScheele85, Yina, and @bn_becker on this paper, which we first proposed over a few beers at a conference last year 🍻

Let's say you designed your study and paired-samples t-test to reliably detect an effect size δ = 0.3.
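As a rough sketch of what "designed to reliably detect δ = 0.3" implies for a paired-samples t-test, the snippet below estimates the number of pairs needed. Several things are assumptions not stated in the thread: that δ is the standardized mean of the paired differences (d_z), that "reliably" means 80% or 90% power, and a two-sided α of 0.05. The helper `required_pairs` is hypothetical and uses a normal approximation, so an exact noncentral-t calculation would ask for slightly more pairs.

```python
from math import ceil
from statistics import NormalDist


def required_pairs(delta, power=0.80, alpha=0.05):
    """Approximate number of pairs for a two-sided paired-samples t-test.

    delta: hypothetical standardized mean difference of the pairs (d_z).
    Uses n = ((z_{1 - alpha/2} + z_{power}) / delta)^2, rounded up.
    """
    norm = NormalDist()
    z_alpha = norm.inv_cdf(1 - alpha / 2)  # critical value for the test
    z_power = norm.inv_cdf(power)          # quantile for the target power
    return ceil(((z_alpha + z_power) / delta) ** 2)


if __name__ == "__main__":
    for target in (0.80, 0.90):
        n = required_pairs(0.3, power=target)
        print(f"delta=0.3, power={target:.2f}: about {n} pairs")
```

Under these assumptions the approximation gives about 88 pairs for 80% power and about 117 for 90% power.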

https://twitter.com/statsepi/status/958650955158913024

This thread has some absolutely BONKERS evidence pyramids, so have a scroll through if you're in need of a laugh

First we'll install the package via GitHub and then load it.

Most folks are aware that oxytocin administration studies are underpowered, but the paper that's usually cited for this was published in 2015, which analysed studies from 3 meta-analyses published in 2012 & 2013 ncbi.nlm.nih.gov/pmc/articles/P…

I'm hesitant about this plot because it makes the top set of studies look "worse" just because it happens to have more studies. A ratio would be more accurate, but I think it's important to also show the raw numbers.

This poll is an example of a reverse Keynesian beauty contest en.wikipedia.org/wiki/Keynesian…
