https://twitter.com/arpitrage/status/1998202294412992782

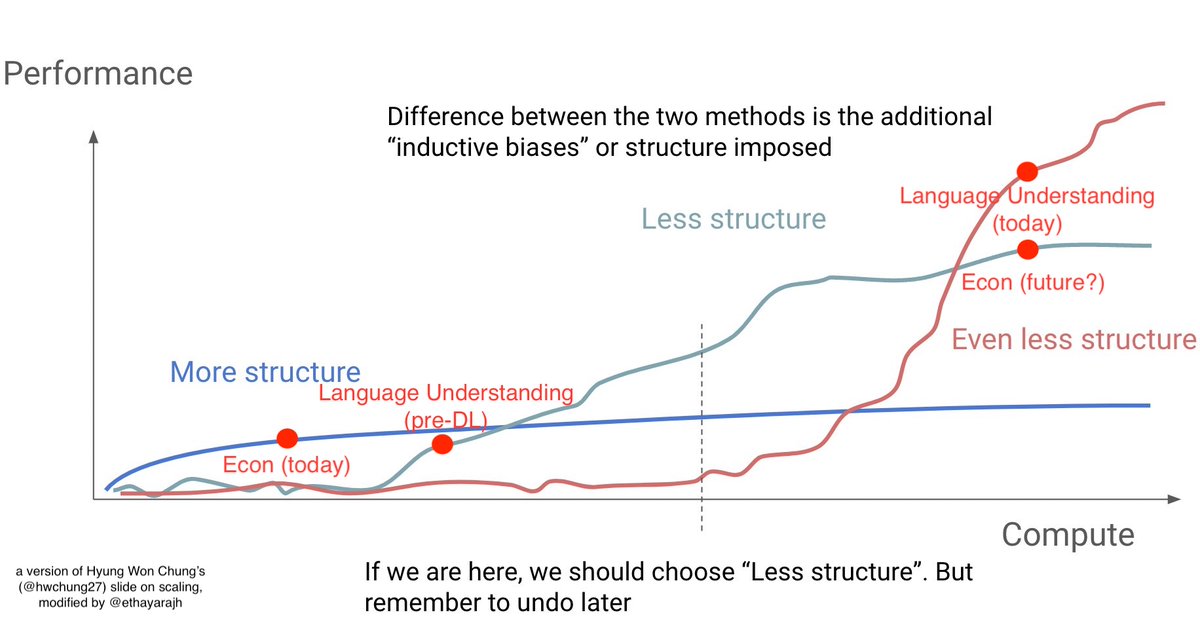

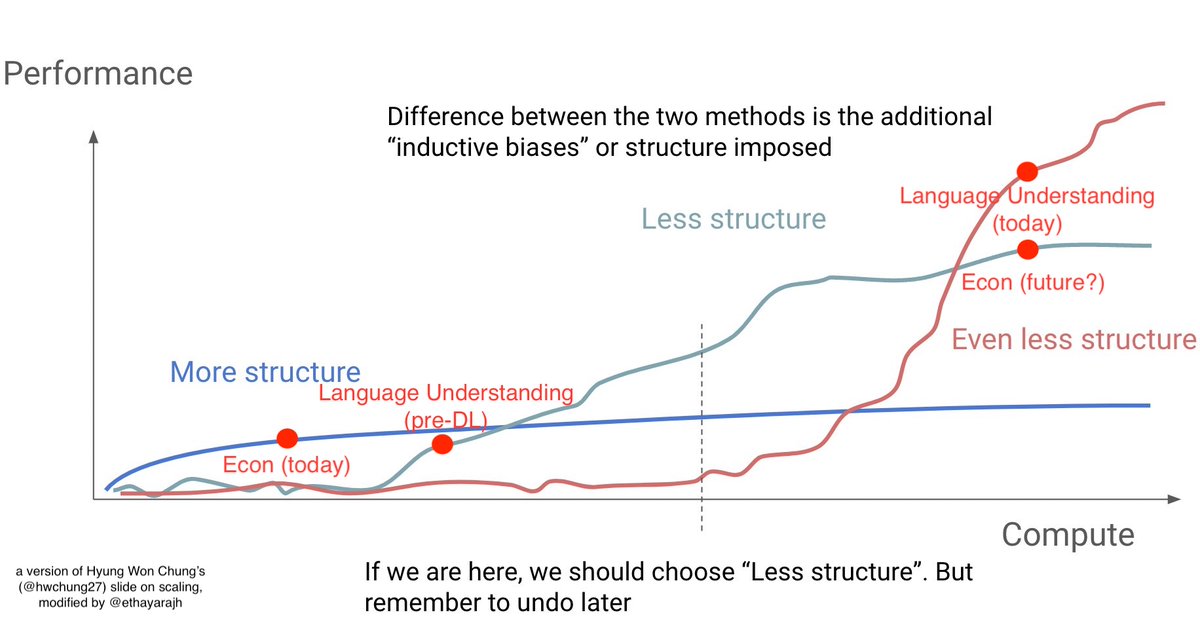

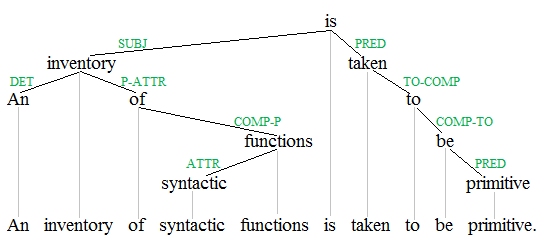

For a long time, our models of language were very structured. You would build trees for sentences based on their grammatical structure (e.g., dependency parsing), catalog all the different senses of words (e.g., WordNet), and so on. We did this for three reasons:

But first, what makes alignment work? Among methods that directly optimize preferences, most of the gains for models under 30B parameters come from SFT.

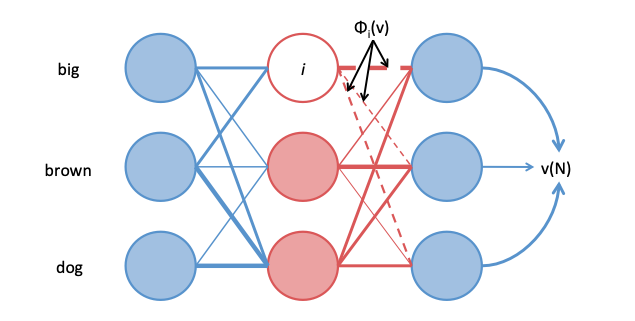

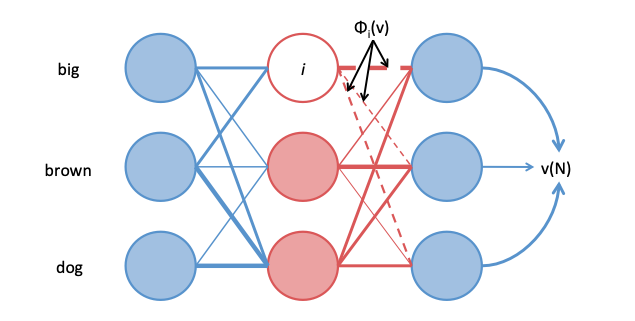

Shapley values are a solution to the credit assignment problem in cooperative games: if 10 people work together to win some reward, how should it be distributed equitably among them?
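
A minimal sketch, not taken from the thread: the Shapley value of player i averages i's marginal contribution over every coalition S of the other players, weighted by |S|!(n−|S|−1)!/n!. The function names `shapley_values` and `example_game` and the toy three-player game are hypothetical illustrations.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet, Sequence

# A characteristic function maps each coalition (a set of players) to the reward it earns.
ValueFn = Callable[[FrozenSet[str]], float]


def shapley_values(players: Sequence[str], value: ValueFn) -> Dict[str, float]:
    """Exact Shapley values by enumerating every coalition of the other players."""
    n = len(players)
    phi: Dict[str, float] = {}
    for i in players:
        others = [p for p in players if p != i]
        total = 0.0
        for k in range(len(others) + 1):
            # Weight of a coalition of size k: k! (n - k - 1)! / n!
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for coalition in combinations(others, k):
                s = frozenset(coalition)
                # Marginal contribution of player i when joining coalition s.
                total += weight * (value(s | {i}) - value(s))
        phi[i] = total
    return phi


def example_game(coalition: FrozenSet[str]) -> float:
    """Hypothetical game: a reward of 100 is won only when A and B both participate."""
    return 100.0 if {"A", "B"} <= coalition else 0.0


if __name__ == "__main__":
    print(shapley_values(["A", "B", "C"], example_game))
```

In this toy game A and B split the reward evenly, while C, whose presence never changes the outcome, gets nothing; the values also sum to the reward of the full coalition. Exact enumeration visits 2^(n−1) coalitions per player, which is cheap for 10 players, but sampling-based approximations are typically used when n is large.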

https://twitter.com/kevin_scott/status/1308438898553638912

My guess is that only Microsoft will have access to the underlying model, while everyone else will have to go through the API and be subject to whatever terms OpenAI sets.

https://twitter.com/yoavgo/status/1282087972553338880

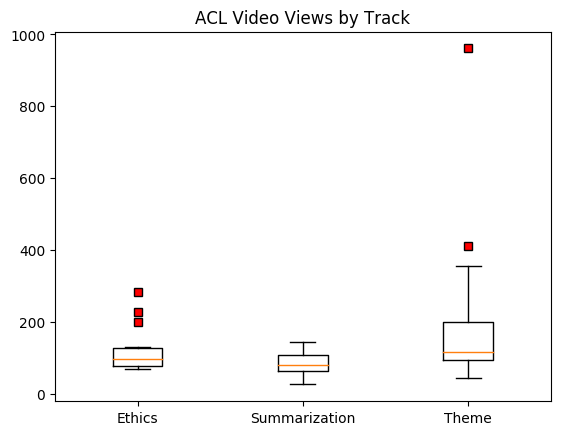

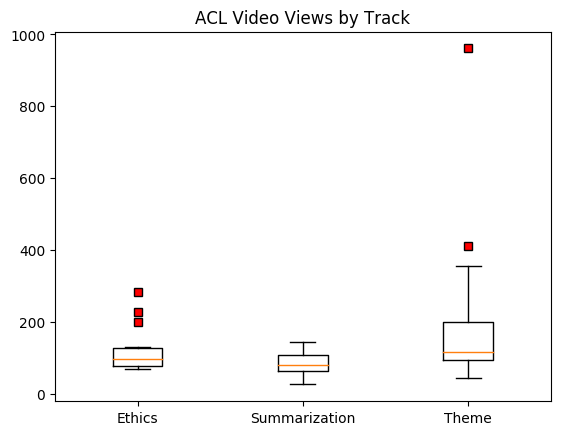

1. Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data by @emilymbender and @alkoller (961 views)

Key findings:

Key Takeaways:

paper: arxiv.org/abs/1810.04882