Principal Applied Scientist @ Amazon. Led ML @ Alibaba, Lazada, Healthtech Series A. Writing @ https://t.co/DEUfIuYC47.

Here's a GitHub gist if you want to try it yourself: gist.github.com/eugeneyan/1d2e…

Yea, there were one-click deploys, rollbacks, A/B tests—you name it.

https://twitter.com/eugeneyan/status/1637562031233708032

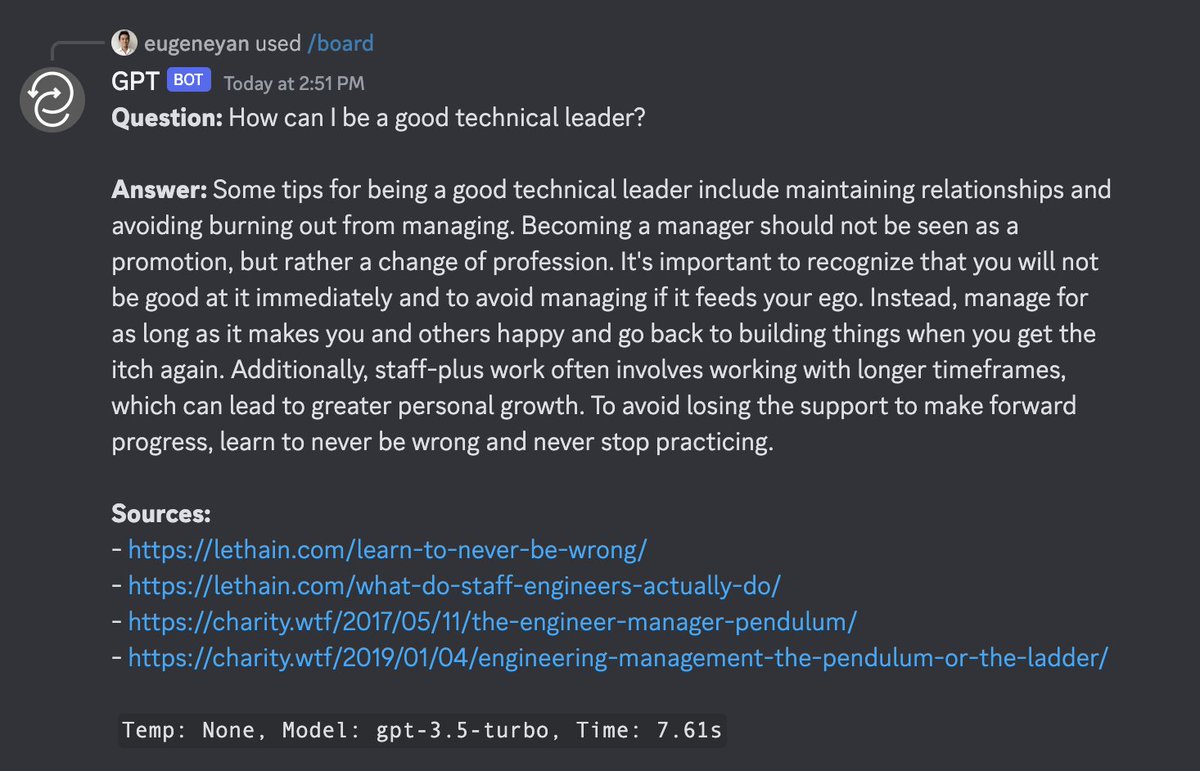

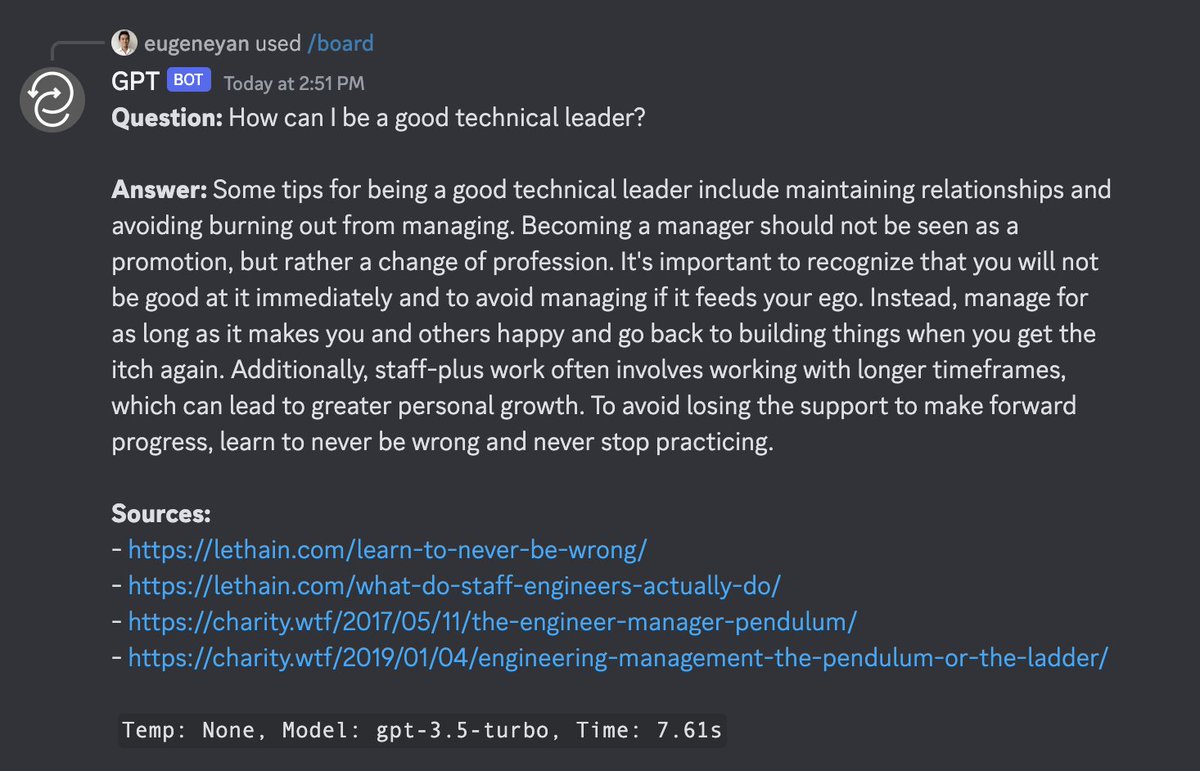

`/ask-ey` does something similar for my own site, eugeneyan.com. And because I'm more familiar with my own writing, I can better spot shortfalls such as not answering based on a source when expected, or when a source is irrelevant.

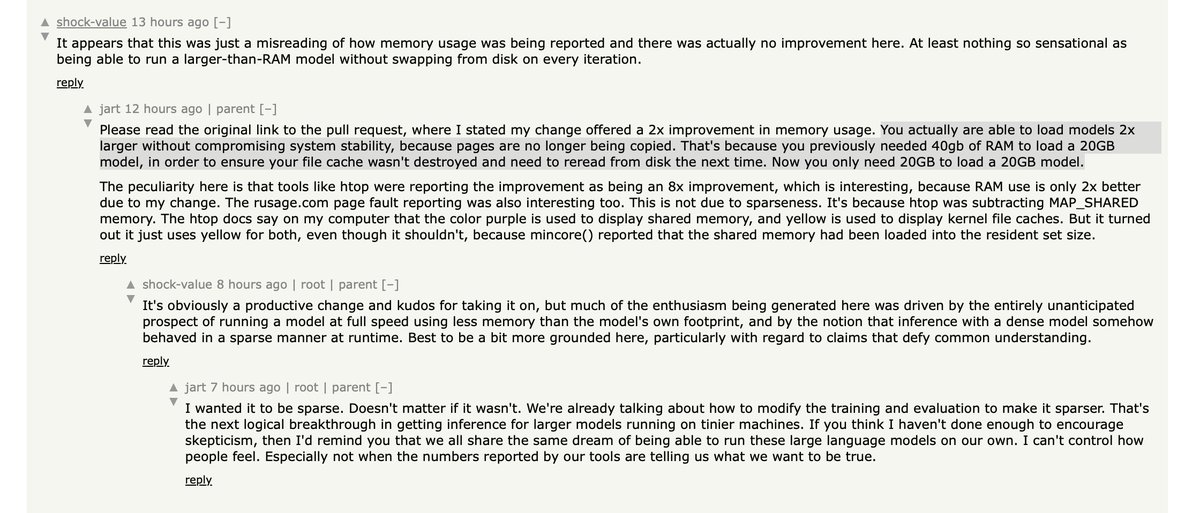

Correction: I guess all we can say is that it now (only) uses the actual memory required instead of 2x memory required.

https://twitter.com/_brohrer_/status/1553811938886520842

Adding tweets on this theme, starting with this.

https://twitter.com/rharang/status/1554168497298903040

(1/4) Here's how a Zettelkasten works:
