Expert in AI and Datascience at https://t.co/QpIXDy0xRG / Founder at https://t.co/Q9wpElUUH9 / Advisor at Center for Humane Technology / Ex-Google

Opinions are my own

https://twitter.com/gchaslot/status/1334615032617897990

https://twitter.com/jeremybmerrill/status/1190002705793781761

Right after publication of the article, @YouTube added a warning on the Chinese video that it's "inappropriate for some users"

https://mobile.twitter.com/jkeefe/status/1190287659215867904

https://twitter.com/coffeebreak_YT/status/1130854871370878977

Having worked at YouTube, I know they don't intentionally bias algorithms, but they can pick a metric and not investigate enough to see if it creates bias.

https://twitter.com/veritasium/status/1130239601761644545

1/ Creators having to chase the algorithm is a disaster for independent ones, because it gives bigger companies a huge advantage (e.g., TheSoul publishing company creates 1,500 videos per month)

https://twitter.com/MaxLenormand/status/1128450668744720385

Heroin. Until everybody dies.

https://twitter.com/beccalew/status/1128176807981674496

Note that YouTube similarly encourages and monetizes hatred of Christians, but it’s often not in English so we have no idea about it.

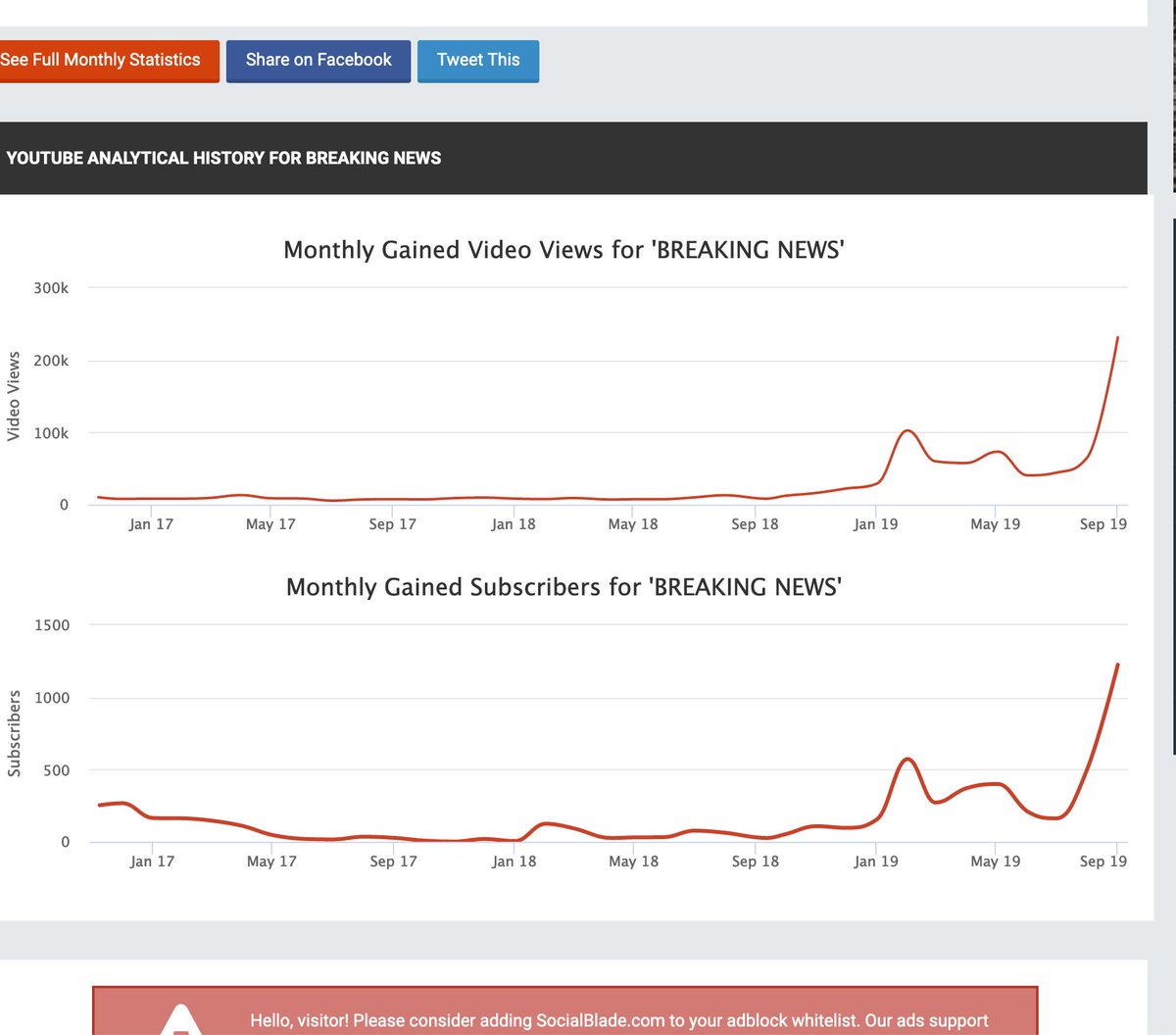

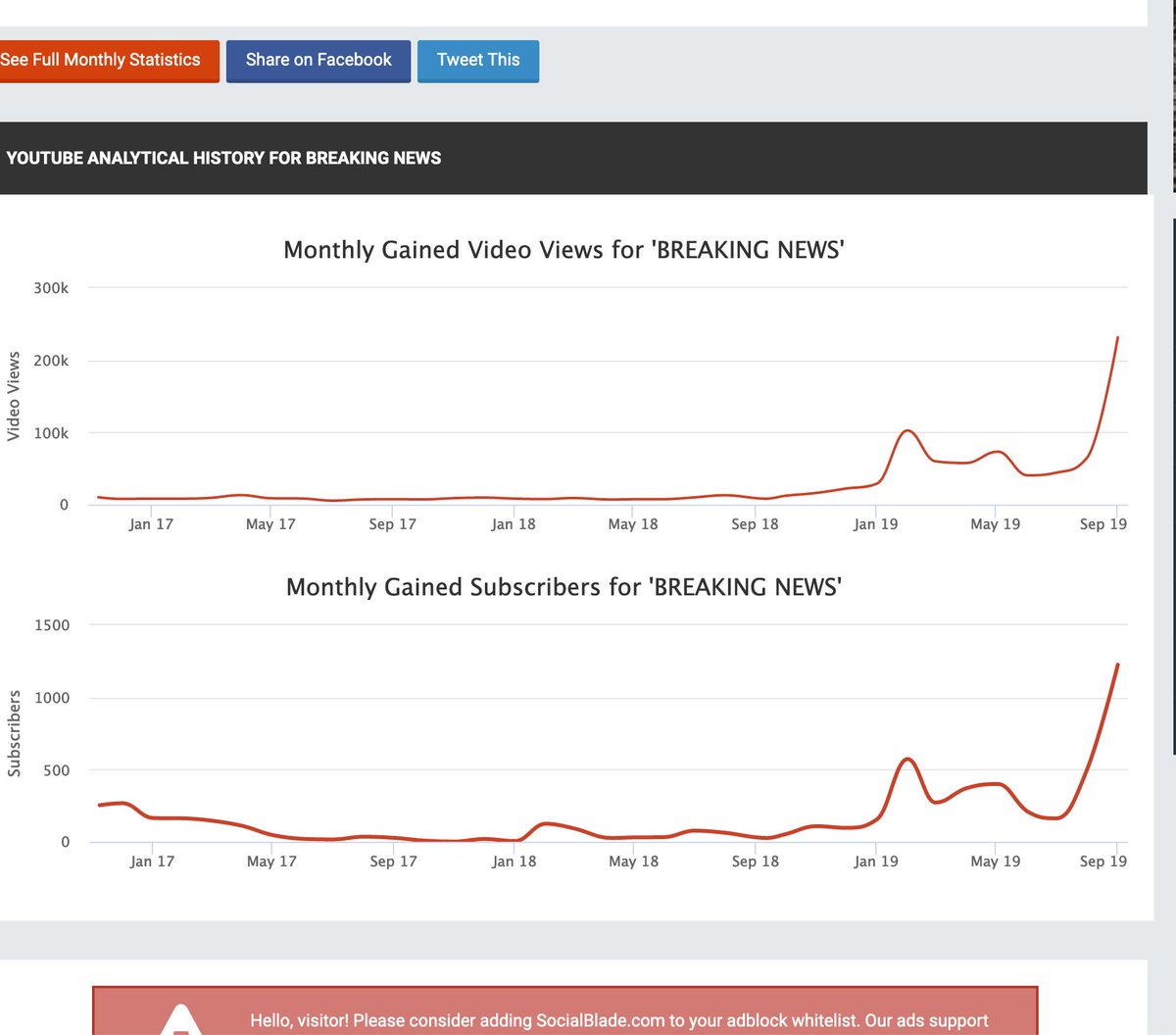

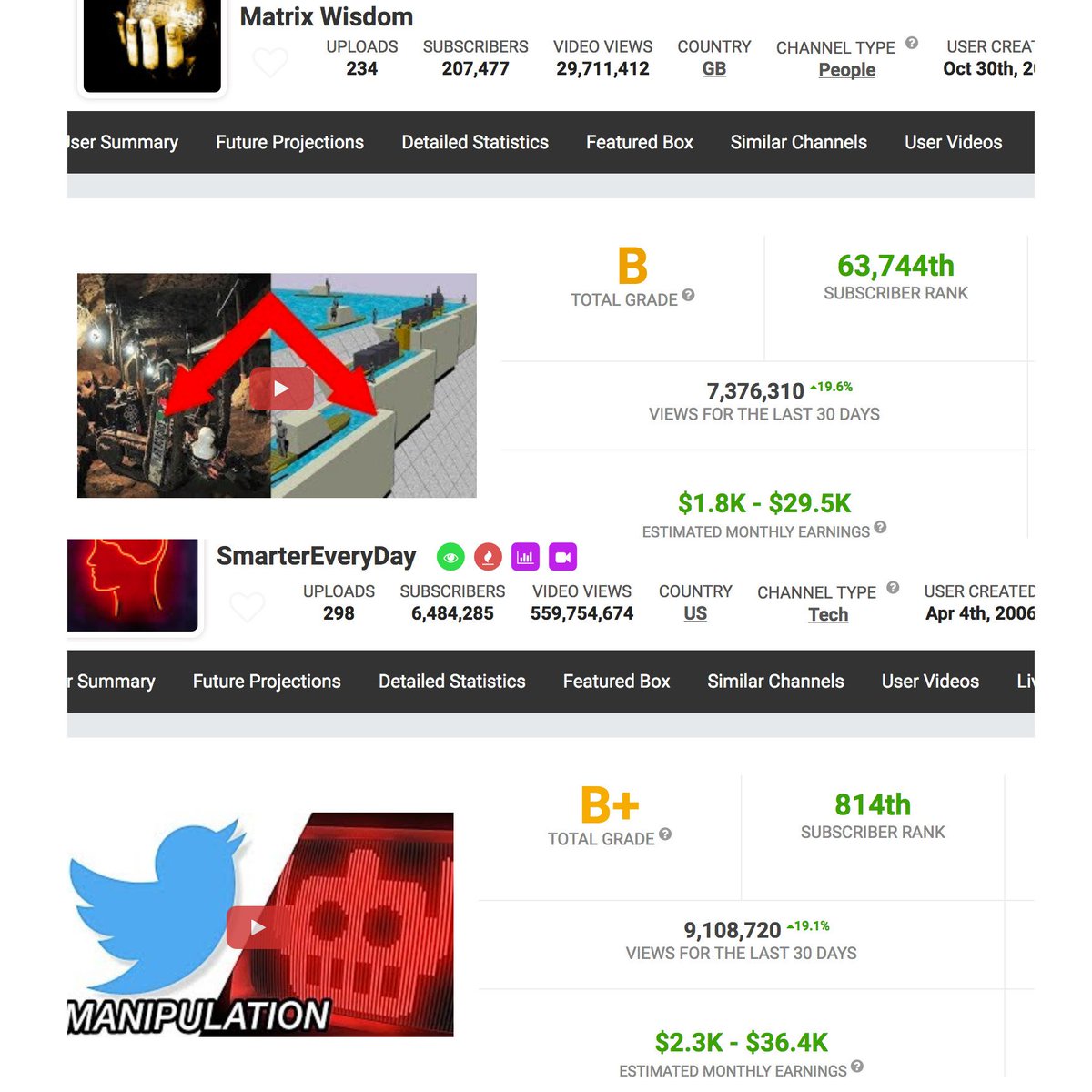

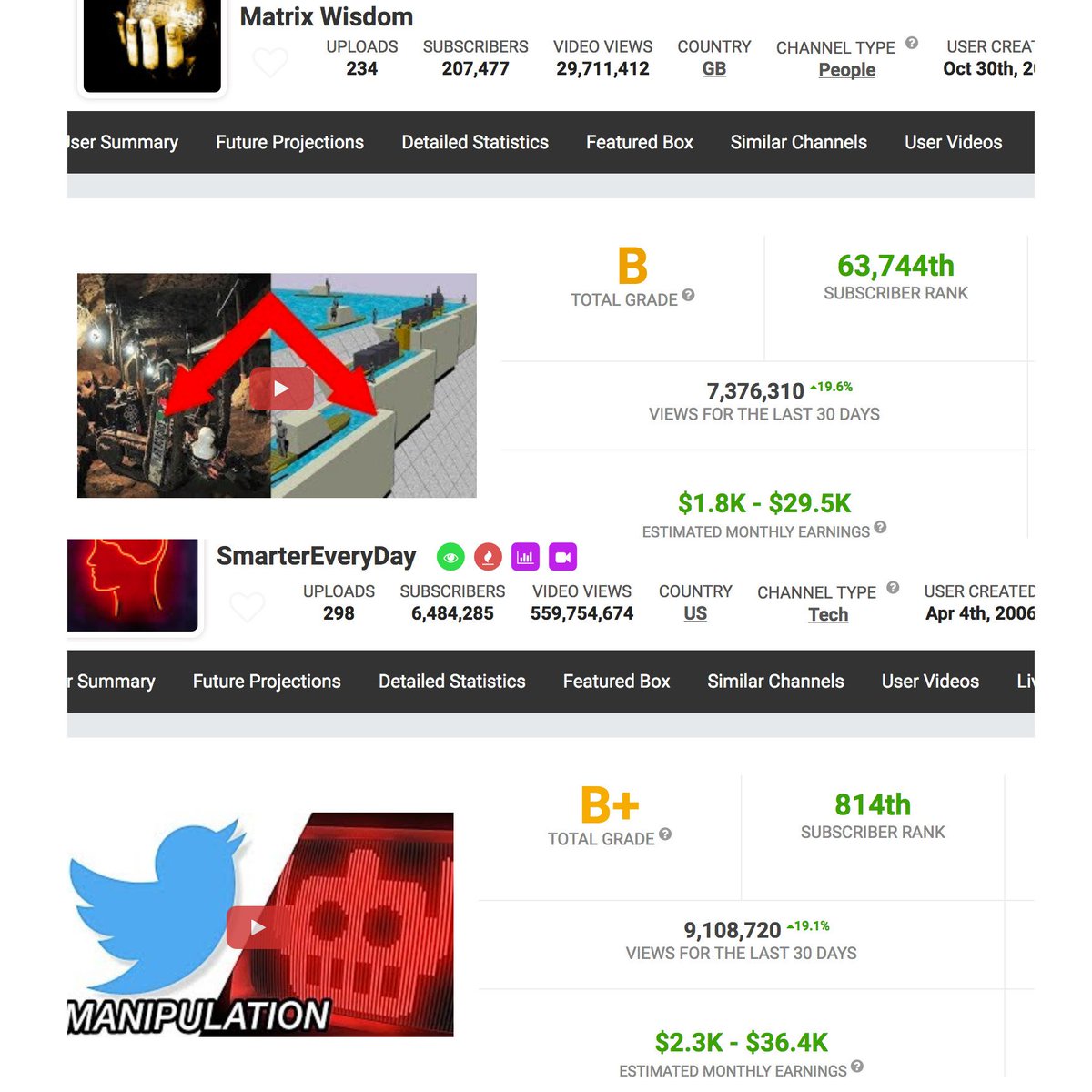

https://twitter.com/cadale/status/1116409436015190016

Let's look at the owner of that YouTube video, the conspiracy channel "Matrix Wisdom"

https://twitter.com/kevinroose/status/1111679087855259648

Context: @zeynep, @beccalew and many others have shown how YouTube often recommends more and more extreme content, which is radicalizing people:

https://twitter.com/RealSaavedra/status/1087627739861897216

1/ My personal favorite is Dr. @mathbabedotorg's, the world's expert on the topic, and yet her answer is benevolent and humble:

https://twitter.com/mathbabedotorg/status/1088127203420692480

https://twitter.com/RawStory/status/1064175265704747008

This spring at SxSW, @SusanWojcicki promised "Wikipedia snippets" on debated videos. But they didn't put them on flat earth videos, and instead @YouTube is promoting merchandising such as "NASA lies - Never Trust a Snake". 2/