Why can't we use regular LoRA for pre-training? Because it optimizes only within a small low-rank subspace of the model parameters. That is enough for fine-tuning, but you don't want rank restrictions during pre-training.
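
To make the rank restriction concrete, here is a minimal sketch in plain Python (dependency-free, so the rank is computed exactly with fractions). It assumes the usual LoRA parameterization of the weight update as a product `B @ A` with inner dimension `r`; the sizes `d = 8` and `r = 2` are hypothetical, and `B` and `A` are filled with random integers to stand in for trained values rather than a real initialization.

```python
from fractions import Fraction
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def matrix_rank(m):
    """Exact rank via Gaussian elimination over rationals."""
    rows = [[Fraction(x) for x in row] for row in m]
    rank = 0
    for col in range(len(rows[0])):
        # Find a row at or below `rank` with a nonzero entry in this column.
        pivot = next((r for r in range(rank, len(rows)) if rows[r][col] != 0), None)
        if pivot is None:
            continue
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        # Eliminate this column from all rows below the pivot row.
        for r in range(rank + 1, len(rows)):
            factor = rows[r][col] / rows[rank][col]
            rows[r] = [x - factor * y for x, y in zip(rows[r], rows[rank])]
        rank += 1
    return rank


random.seed(0)
d, r = 8, 2  # hypothetical layer width and LoRA rank

# LoRA-style update: delta_W = B @ A, with B of shape d x r and A of shape r x d.
B = [[random.randint(-3, 3) for _ in range(r)] for _ in range(d)]
A = [[random.randint(-3, 3) for _ in range(d)] for _ in range(r)]
delta_w = matmul(B, A)  # a d x d matrix whose rank can never exceed r

# An unrestricted update can be any d x d matrix, for example a full-rank one.
full_update = [[1 if i == j else 0 for j in range(d)] for i in range(d)]

print("rank of LoRA-style update:", matrix_rank(delta_w))
print("rank of unrestricted update:", matrix_rank(full_update))
```

However the entries of `B` and `A` are chosen, the product stays at rank `r` or below, while full training is free to move the weights along all `d` directions.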

https://twitter.com/guitaricet/status/1641121969696391187

We found that (IA)³ by @liu_haokun, Derek Tam, @Muqeeth10, and @colinraffel is one of the hidden gems of PEFT. It is simple, trains very few parameters, and outperforms strong methods like LoRA and Compacter. Let's quickly go over how it works.

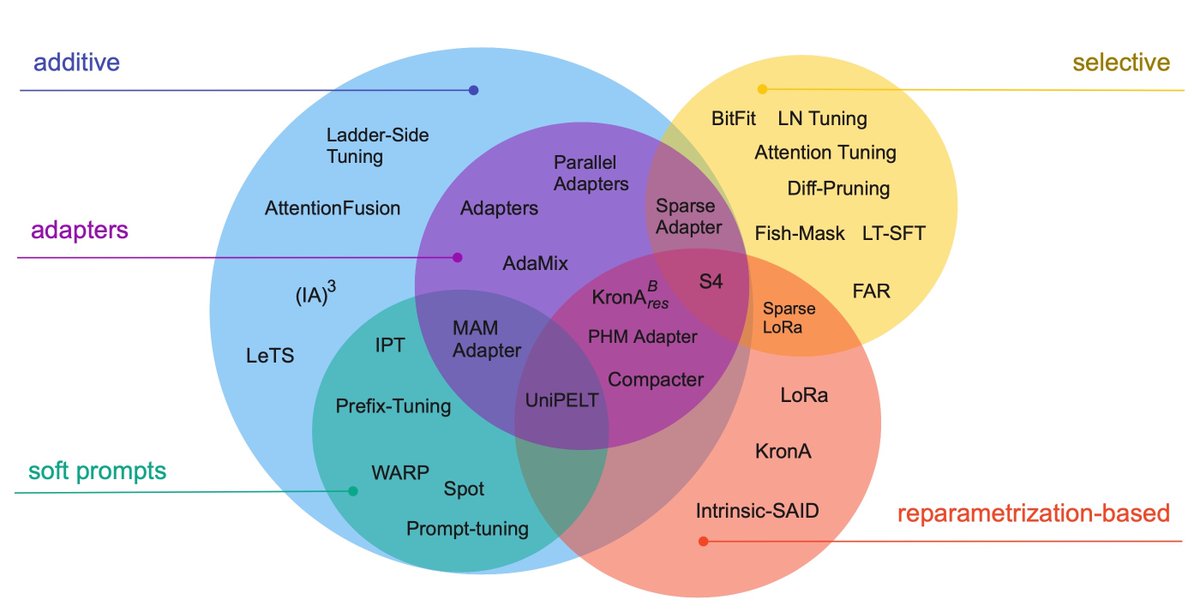

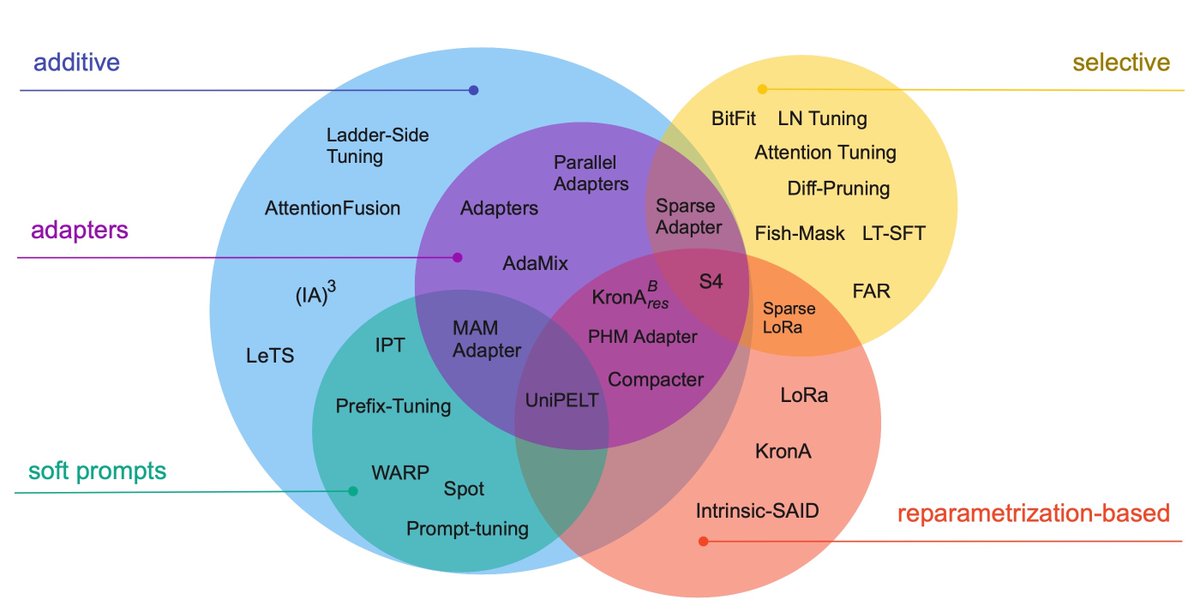
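
As a rough sketch of the idea, based on the (IA)³ paper rather than on the images above: the pre-trained weights stay frozen, and small learned vectors rescale intermediate activations element-wise (the attention keys and values, and the hidden activation of the feed-forward block). The toy feed-forward block below shows only the feed-forward case; the names `ffn`, `l_ff`, the ReLU nonlinearity, and all sizes and weights are illustrative assumptions, not the authors' code.

```python
def matvec(w, x):
    """Multiply matrix w (list of rows) by vector x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def relu(v):
    return [max(0.0, a) for a in v]


def ffn(x, w1, w2, l_ff):
    """Feed-forward block with an (IA)^3-style scaling vector l_ff.

    w1 and w2 are frozen; only l_ff would be trained.
    """
    hidden = relu(matvec(w1, x))
    scaled = [s * h for s, h in zip(l_ff, hidden)]  # element-wise rescaling
    return matvec(w2, scaled)


d_model, d_ff = 2, 3  # hypothetical toy sizes
w1 = [[1.0, -1.0], [0.5, 2.0], [-1.0, 0.0]]  # d_ff x d_model, frozen
w2 = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]     # d_model x d_ff, frozen
x = [1.0, 2.0]

# Initializing the scaling vector to ones leaves the frozen model unchanged.
l_ff_init = [1.0] * d_ff
baseline = ffn(x, w1, w2, l_ff_init)

# Made-up "trained" values: each hidden unit is amplified or damped.
l_ff_trained = [1.0, 0.5, 2.0]
adapted = ffn(x, w1, w2, l_ff_trained)

trainable = d_ff               # one scalar per hidden unit
frozen = 2 * d_model * d_ff    # both weight matrices
print(baseline, adapted, trainable, frozen)
```

The trainable parameter count grows with the hidden width only, not with the size of the weight matrices, which is why the method trains so few parameters.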

PEFT methods can target several things: storage efficiency, multitask inference efficiency, and memory efficiency are among them. We are interested in the case of fine-tuning large models, so memory efficiency is a must.
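
A back-of-the-envelope estimate shows why memory efficiency matters at this scale. The sketch below assumes fp32 storage (4 bytes per value) and an Adam-style optimizer that keeps two extra values per trainable parameter; it ignores activation memory entirely, which PEFT does not necessarily reduce. The function name and the model sizes are hypothetical.

```python
def training_memory_gib(total_params, trainable_params, bytes_per_value=4):
    """Rough weight + gradient + Adam-state memory in GiB (activations ignored)."""
    weights = total_params * bytes_per_value             # every weight is stored
    grads = trainable_params * bytes_per_value           # gradients only for trained weights
    adam_states = 2 * trainable_params * bytes_per_value  # first and second moments
    return (weights + grads + adam_states) / 2**30


total = 7_000_000_000          # hypothetical 7B-parameter model
peft_trainable = total // 1000  # hypothetical: 0.1% of parameters are trained

full_ft = training_memory_gib(total, total)
peft = training_memory_gib(total, peft_trainable)
print(f"full fine-tuning: {full_ft:.1f} GiB, PEFT: {peft:.1f} GiB")
```

Under these assumptions, gradients and optimizer states dominate full fine-tuning, and they nearly vanish once only a small fraction of the parameters is trained.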
