I am a human being, I am your equal ✊🏿✊🏾✊🏻 Research Scientist @AIatMeta Identity ∈ ℂ ; Borders ∈ 𝕀 , mytweets ∉ anybody else .

The presentation will focus on conditional language generation.

"Task-oriented dialogue" is the setup under discussion now because it gives us a notion of success for analysing dialogue.

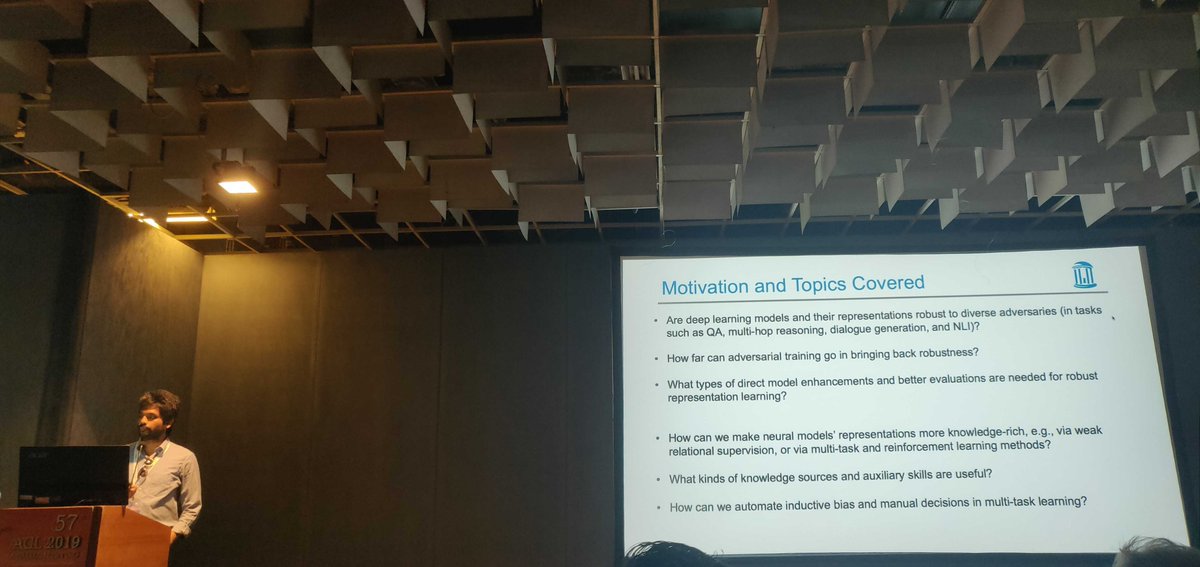

@mohitban47 Adversarial examples can break reading comprehension systems.

@sigrep_acl Marco is talking about his previous work on the emergence of language communication between agents.

MT methods for text simplification suffer from conservatism, meaning they simplify less and copy more. This is mainly because of the high overlap between source and target data, since the task is monolingual.

Automatic single-reference evaluation of summarisation is biased for several reasons. Human evaluation is not feasible.

Current solutions in industry translate sentence by sentence, which introduces some latency. Work in academia includes methods that anticipate the "German verb" on the source side.

@anirbanlaha Motivations for #Data2Text:

@meloncholist @vnfrombucharest Andre is starting with a motivational introduction to some structured prediction tasks (POS tagging, dependency parsing, word alignment).
