https://twitter.com/hausman_k/status/1511152160695730181

Let's re-visit the original bitter lesson first:

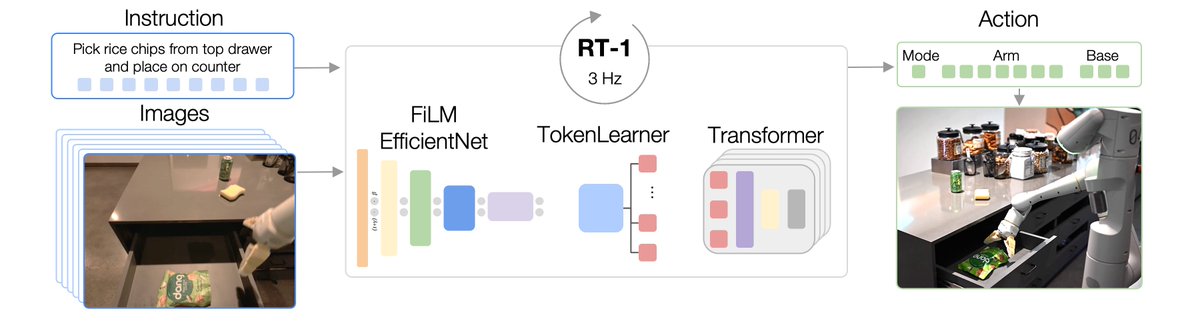

https://twitter.com/GoogleAI/status/1511795739952832521

The idea is very simple: