CEO @SophontAI |

Founder @MedARC_AI |

PhD at 19 (2023) |

ex Research Director Stability AI |

Biomed. engineer @ 14 |

TEDx talk➡https://t.co/xPxwKTpz0D

7 subscribers

How to get URL link on X (Twitter) App

People noticed that the same few people were interacting with Cleo (asking the questions Cleo answered, commenting, etc.), a couple of them only active at the same time as Cleo as well.

People noticed that the same few people were interacting with Cleo (asking the questions Cleo answered, commenting, etc.), a couple of them only active at the same time as Cleo as well.

https://twitter.com/iScienceLuvr/status/1790082827016364071

The team developed a variety of model variants. First let's talk about the models they developed for language tasks.

The team developed a variety of model variants. First let's talk about the models they developed for language tasks.

Before I continue, I want to mention this work was led by @RiversHaveWings, @StefanABaumann, @Birchlabs. @DanielZKaplan, @EnricoShippole were also valuable contributors. (2/11)

Before I continue, I want to mention this work was led by @RiversHaveWings, @StefanABaumann, @Birchlabs. @DanielZKaplan, @EnricoShippole were also valuable contributors. (2/11)

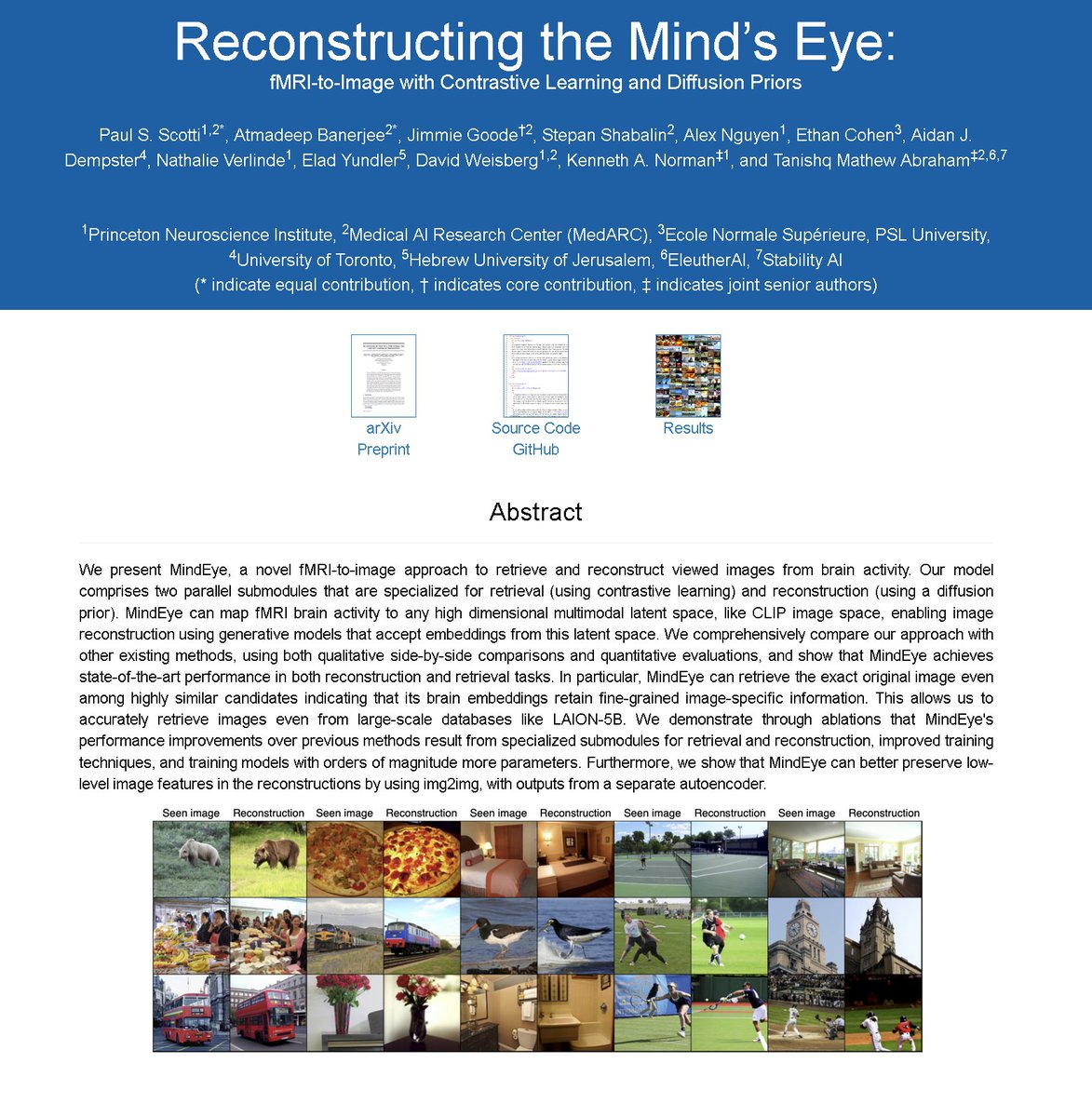

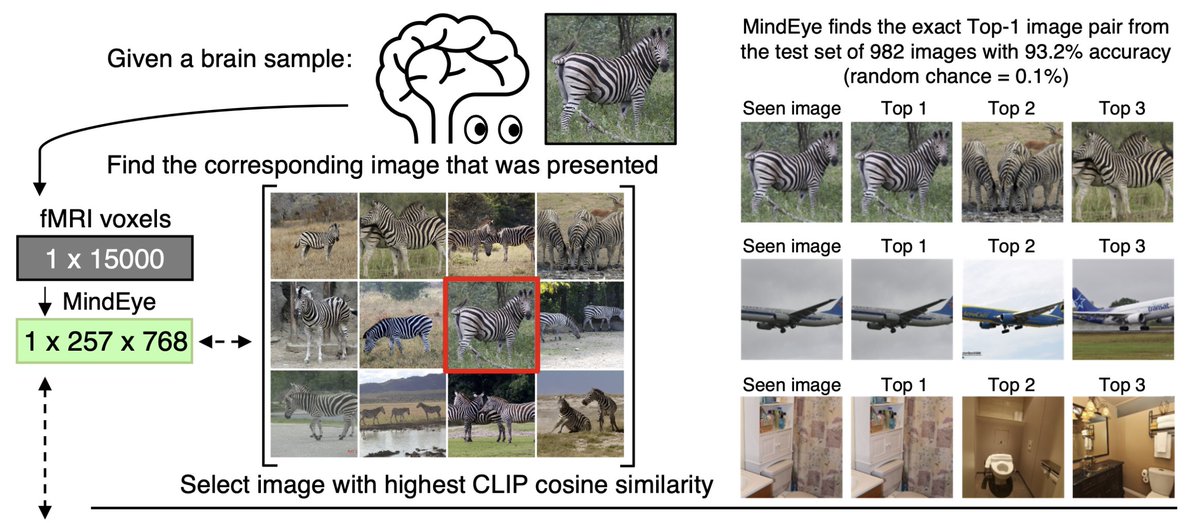

We train an MLP using contrastive learning to map fMRI signals to CLIP image embeddings.

We train an MLP using contrastive learning to map fMRI signals to CLIP image embeddings.

https://twitter.com/iScienceLuvr/status/1636479850214232064

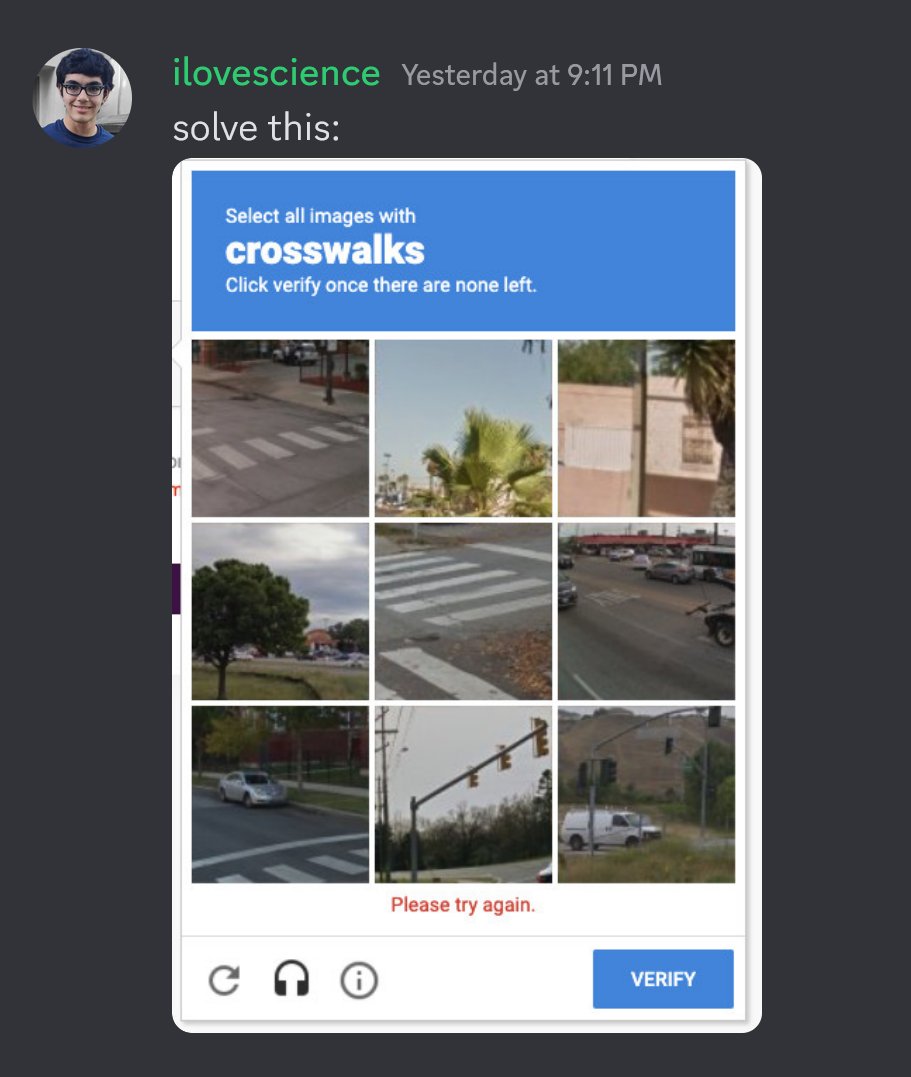

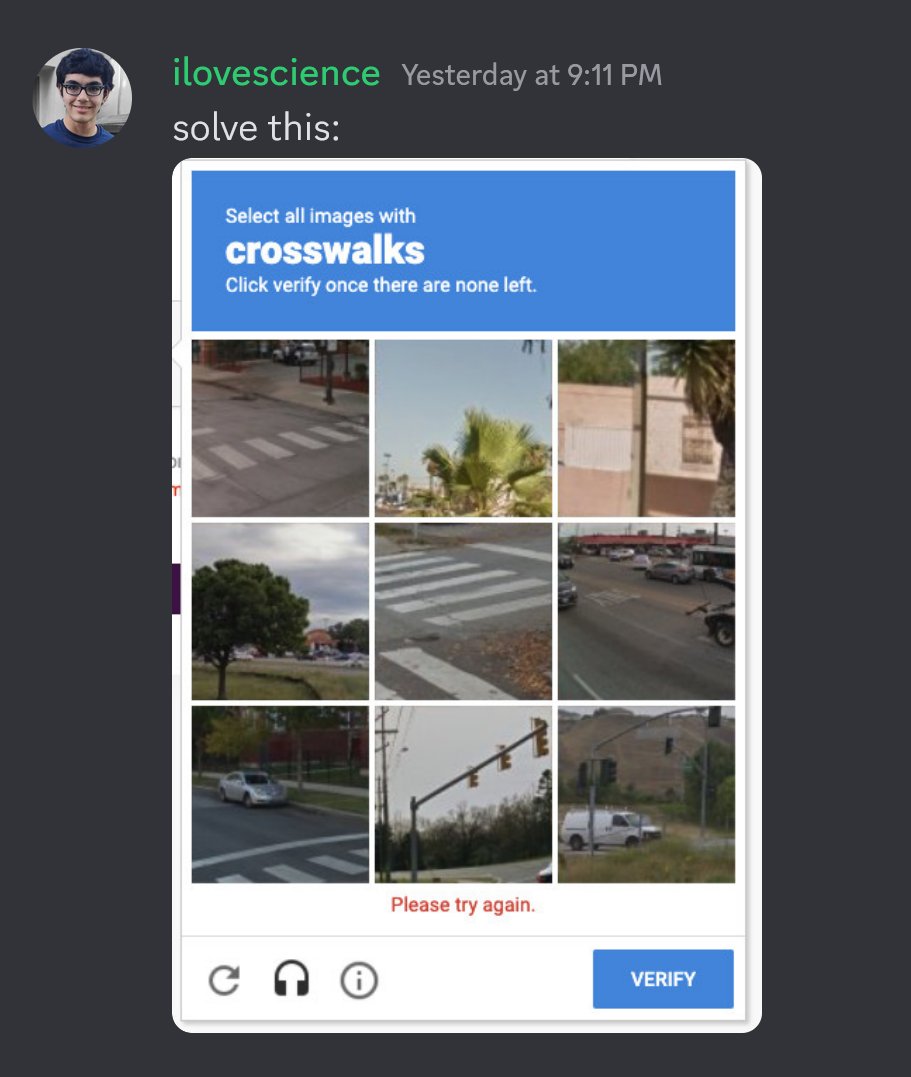

It can explain memes quite well! Here it is explaining an AI-generated meme I shared recently.

It can explain memes quite well! Here it is explaining an AI-generated meme I shared recently.

https://twitter.com/iScienceLuvr/status/1608070009921900546

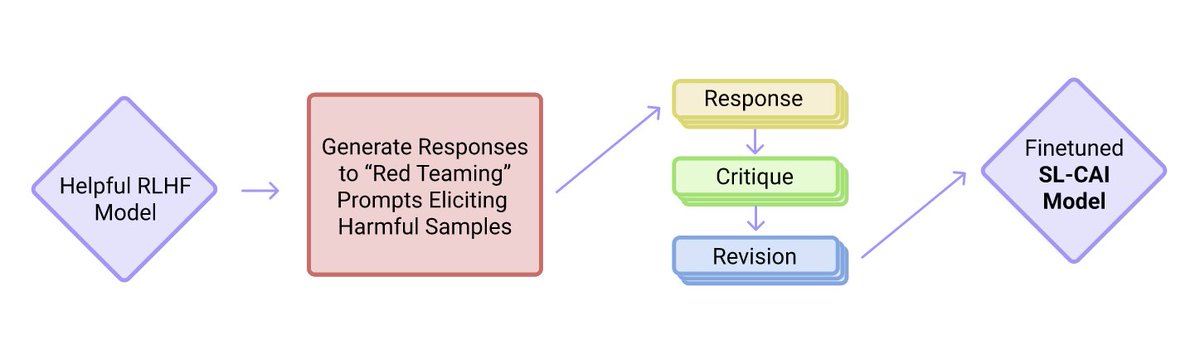

Maybe someone could have created a model like text-davinci-003?

Maybe someone could have created a model like text-davinci-003?

https://twitter.com/poolio/status/1575576632068214785

Continuing their trend of scaling to web-scale dataset, the group collected a dataset of 680k hours of audio+text transcriptions. It's a very diverse dataset, including multiple languages, speakers, recording setups, environments, etc. (2/10)

Continuing their trend of scaling to web-scale dataset, the group collected a dataset of 680k hours of audio+text transcriptions. It's a very diverse dataset, including multiple languages, speakers, recording setups, environments, etc. (2/10)

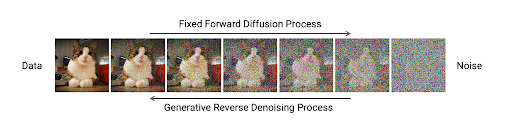

First, a brief summary about how Stable Diffusion works. Stable Diffusion is a diffusion model, which is a neural network trained to iteratively denoise an image from pure noise. (2/11)

First, a brief summary about how Stable Diffusion works. Stable Diffusion is a diffusion model, which is a neural network trained to iteratively denoise an image from pure noise. (2/11)