How to get URL link on X (Twitter) App

The paper is here: Our weight decomposition lets us suppress memorized data and find regularities in weights that connect to tasks like logical reasoning, fact retrieval, and math.arxiv.org/abs/2510.24256

The paper is here: Our weight decomposition lets us suppress memorized data and find regularities in weights that connect to tasks like logical reasoning, fact retrieval, and math.arxiv.org/abs/2510.24256

The curvature of the loss wrt an input embedding tells you how fast the loss would move as you changed the input. “Sharp” areas where the loss changes quickly tell us that maybe that input is memorized

The curvature of the loss wrt an input embedding tells you how fast the loss would move as you changed the input. “Sharp” areas where the loss changes quickly tell us that maybe that input is memorized

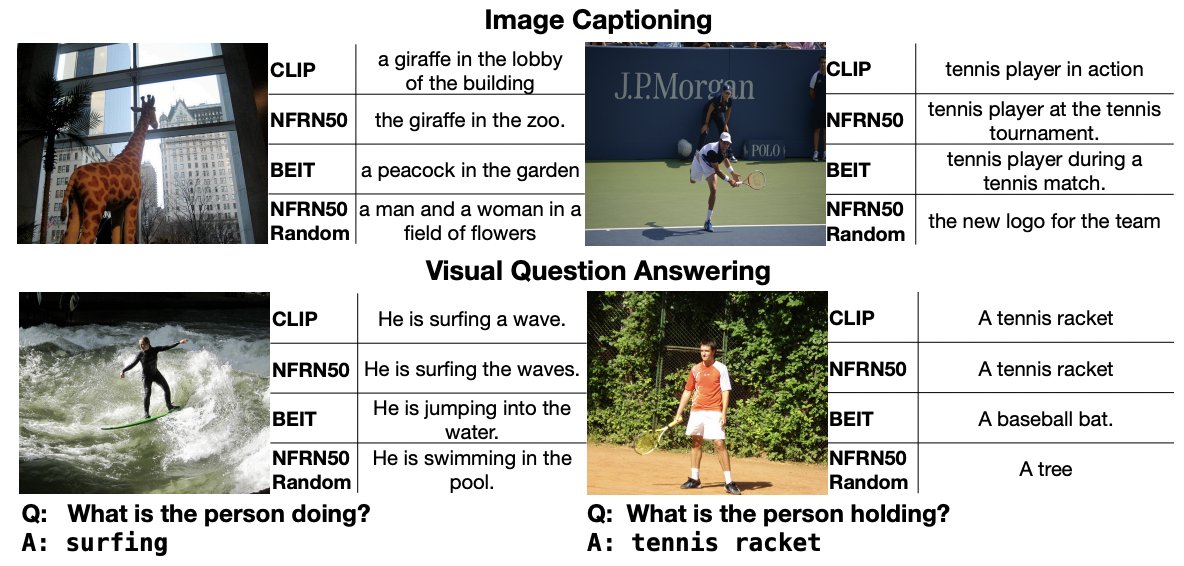

But, we also find that performance on VL tasks depends on how much linguistic supervision pretraining the image encoder has. Eg CLIP is pretrained with full language descriptions, NF-ResNET is trained with lexical category information (imagenet labels), and BEIT is vision-only

But, we also find that performance on VL tasks depends on how much linguistic supervision pretraining the image encoder has. Eg CLIP is pretrained with full language descriptions, NF-ResNET is trained with lexical category information (imagenet labels), and BEIT is vision-only