@AnthropicAI, ONEAI OECD, co-chair @indexingai, writer @ https://t.co/3vmtHYkIJ2 Past: @openai, @business @theregister. Neural nets, distributed systems, weird futures

I think if people who are true LLM skeptics spent 10 hours trying to get modern AI systems to do tasks that the skeptics are experts in, they'd be genuinely shocked by how capable these things are.

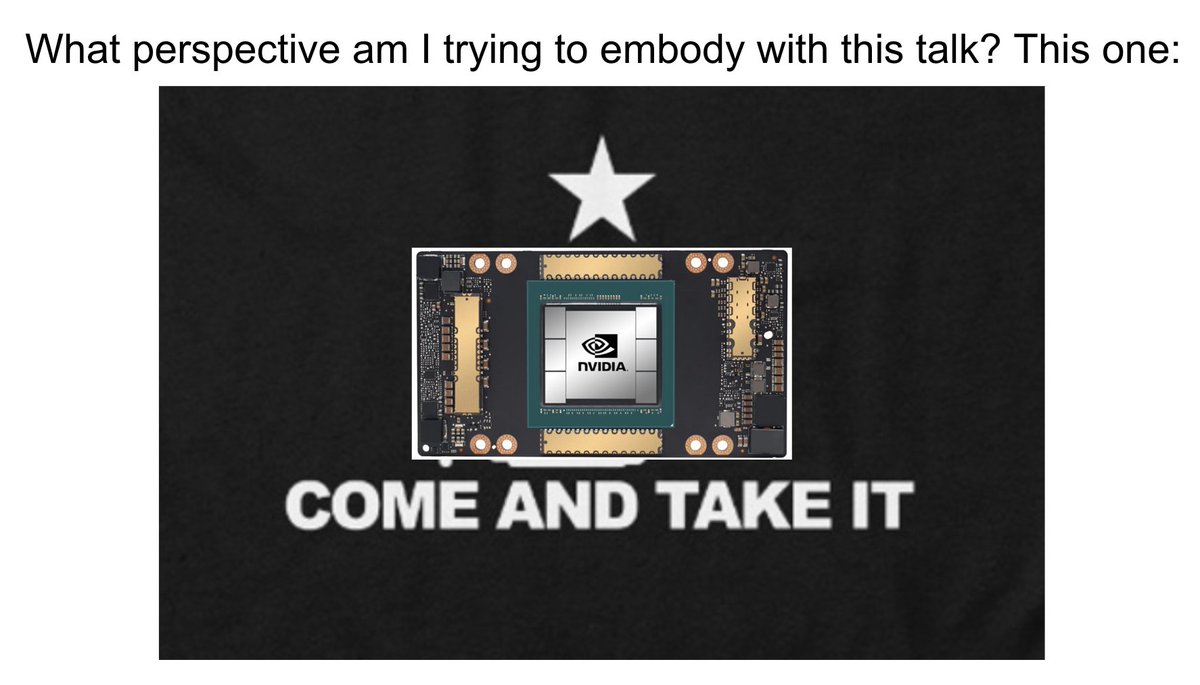

https://twitter.com/Simeon_Cps/status/1673022967163285506

I gave a slide preso back in fall of 2022 along these lines. Including some slides here. The gist of it is if you basically go after compute in the wrong ways you annoy a huge amount of people and you guarantee pushback and differential tech development.

There will of course be exceptions and some companies will release stuff. But this isn't going to get us many of the benefits of the magic of contemporary AI. We're surrendering our own culture and our identity to the logic of markets. I am aghast at this. And you should be too.

https://twitter.com/GoogleAI/status/1542558541487058946?t=EOz969AnE2QPNJIhxyy1mA&s=19

Geopolitics gets changed by chipwars which get driven by AI. Culture becomes a tool of capital via arbitraging models against human artists. Everyone gets 'roll your own' surveillance. Technological supremacy becomes a corporate theistic belief system. Things are gonna get crazy!
