Teaching computers to read.

Assoc. prof @MITEECS / @MIT_CSAIL / @NLP_MIT (he/him).

https://t.co/5kCnXHjtlY

https://t.co/2A3qF5vdJw

over all the authors who could have produced the context. All large-scale training sets are generated by a mixture of authors w/ mutually incompatible beliefs & goals, and we shouldn't expect a model of the marginal dist. over utts to be coherent.

https://twitter.com/cainesap/status/1279076489267462144

neural semantic persons

https://twitter.com/jayelmnop/status/1276558715508928515

Earlier work from Berkeley (arxiv.org/abs/1707.08139) and MIT (netdissect.csail.mit.edu) automatically labels deep features with textual descriptions from a predefined set. In our new work, we generate more precise & expressive explanations by composing them on the fly. 2/

(First, a couple of other goodies in this paper: module layouts from BREAK question decompositions; joint NMN training on QA and localization tasks, which is something I always mention as a nice feature of these models in talks but hardly anybody does.) 2/

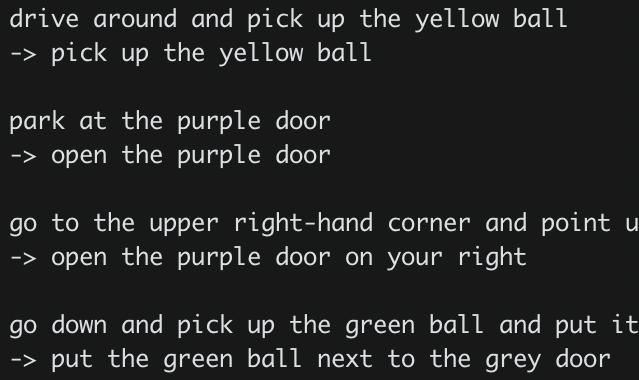

This paper presents GECA, a (rule-based!) data augmentation scheme that allows an ordinary seq2seq model to solve @LakeBrenden & Baroni's SCAN dataset, boosts semantic parser performance across multiple representations, and even helps a bit with low-resource language modeling. 2/

Some observations: (1) pretrained LM representations are pretty good at modeling similarity between human-generated and synthetic sentences, but (2) models trained only on LM representations of synthetic sentences still overfit and generalize badly to real ones.

https://twitter.com/lena_voita/status/1244549888186241024

with a powerful enough probe, the wrong control task will lead you to conclude that easy-to-predict abstractions like parts of speech are not meaningfully encoded, and the wrong control model might lead you to conclude that *nothing* is meaningfully encoded.