document OCR + workflows @llama_index. cofounder/CEO

Careers: https://t.co/EUnMNmb4DZ

Enterprise: https://t.co/Ht5jwxRU13



Our default way of using @OpenAI function calling is through our pydantic programs: simply specify the pydantic schema, and we use the endpoint to extract a structured output with that schema.

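To illustrate the round trip (schema out, JSON arguments back, typed object out), here is a minimal sketch. It assumes a stdlib dataclass stands in for the pydantic model, and it makes no API call. `Album`, `schema_for`, and `parse_arguments` are hypothetical names, not LlamaIndex or OpenAI APIs.

```python
import json
from dataclasses import dataclass, fields
from typing import get_type_hints

# Map simple Python types to JSON Schema type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


@dataclass
class Album:
    """Hypothetical output schema (a stand-in for a pydantic model)."""

    name: str
    artist: str
    year: int


def schema_for(cls) -> dict:
    """Build an OpenAI-style function definition from a dataclass."""
    hints = get_type_hints(cls)
    props = {f.name: {"type": _JSON_TYPES[hints[f.name]]} for f in fields(cls)}
    return {
        "name": cls.__name__,
        "description": (cls.__doc__ or "").strip(),
        "parameters": {"type": "object", "properties": props, "required": list(props)},
    }


def parse_arguments(cls, arguments: str):
    """Turn the JSON `arguments` string from a function call into a typed object."""
    data = json.loads(arguments)
    for name, typ in get_type_hints(cls).items():
        if not isinstance(data.get(name), typ):
            raise TypeError(f"field {name!r} should be {typ.__name__}")
    return cls(**data)
```

With pydantic, the model class handles both schema generation and validation itself; the sketch only spells out what those two steps do.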

For instance, we can fine-tune a 2-layer neural net that takes in the query embedding as input and outputs a transformed embedding.

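To make the shape of such an adapter concrete, here is a minimal forward pass. It assumes a ReLU hidden layer and a residual connection back to the input embedding. Both choices are assumptions, and the training loop on (query, relevant chunk) pairs is not shown. Document embeddings stay untouched; only the query embedding is transformed before similarity search. `TwoLayerAdapter` is a hypothetical name.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector, b: Vector) -> Vector:
    """Compute W @ x + b."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


class TwoLayerAdapter:
    """Hypothetical 2-layer MLP mapping a query embedding to a new embedding."""

    def __init__(self, dim: int, hidden: int, seed: int = 0):
        rng = random.Random(seed)
        scale = 1.0 / math.sqrt(dim)
        self.w1 = [[rng.gauss(0, scale) for _ in range(dim)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        # Zero-initialised output layer: combined with the residual connection,
        # the untrained adapter is the identity map.
        self.w2 = [[0.0] * hidden for _ in range(dim)]
        self.b2 = [0.0] * dim

    def __call__(self, query_emb: Vector) -> Vector:
        h = [max(0.0, v) for v in matvec(self.w1, query_emb, self.b1)]  # ReLU
        delta = matvec(self.w2, h, self.b2)
        return [q + d for q, d in zip(query_emb, delta)]  # residual
```

Starting from the identity means fine-tuning can only move retrieval away from the base model's behaviour as far as the training signal pushes it.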

The core intuition is related to the idea of decoupling embeddings from the raw text chunks (we’ve tweeted about this).

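One way to read "decoupling" is to embed something small and return something big. Each stored embedding points to a parent chunk instead of being the chunk itself. The minimal sketch below assumes a toy bag-of-words embedding and sentence-level keys. These stand in for a real embedding model and whatever representation you pick, such as summaries. `build_index` and `retrieve` are hypothetical helpers.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(chunks: List[str]) -> List[Tuple[Counter, int]]:
    """Embed each sentence separately, but remember which chunk it came from."""
    index = []
    for chunk_id, chunk in enumerate(chunks):
        for sentence in re.split(r"(?<=[.!?])\s+", chunk):
            if sentence:
                index.append((embed(sentence), chunk_id))
    return index


def retrieve(query: str, index, chunks: List[str], top_k: int = 1) -> List[str]:
    """Match the query against sentence embeddings, return the parent chunks."""
    q = embed(query)
    scored = sorted(index, key=lambda item: cosine(q, item[0]), reverse=True)
    result: List[str] = []
    for _, chunk_id in scored:
        if chunks[chunk_id] not in result:
            result.append(chunks[chunk_id])
        if len(result) == top_k:
            break
    return result
```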

The fine-tuned model does better than base gpt-3.5 at CoT reasoning.


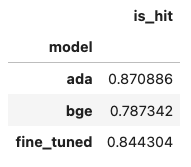

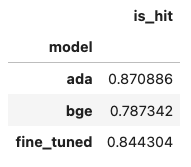

This is motivated by the insight that pre-trained embedding models may not be suited to your specific retrieval task.


The key intuition: gpt-3.5-turbo (even after fine-tuning) is much cheaper than GPT-4 on a marginal token basis. 💡


Here’s another example: given the question “what percentage of gov revenue came from taxes”, top-k retrieval over raw text chunks doesn’t return the right answer.


The key intuition here is that different retrieval parameters (chunk size, top-k, etc.) work better in different situations.

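A minimal sketch of per-query parameter selection follows. It assumes a hypothetical keyword rule that picks between two configurations. In practice the choice could come from an LLM router or from evaluation data, and the specific chunk sizes and top-k values here are placeholders. `RetrievalConfig`, `CONFIGS`, and `choose_config` are illustrative names, not LlamaIndex APIs, and the overlap scorer is a toy.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class RetrievalConfig:
    chunk_size: int  # words per chunk
    top_k: int


def chunk_words(text: str, chunk_size: int) -> List[str]:
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def retrieve(text: str, query: str, cfg: RetrievalConfig) -> List[str]:
    """Rank chunks by word overlap with the query (toy scorer) and keep top_k."""
    q = set(query.lower().split())
    chunks = chunk_words(text, cfg.chunk_size)
    ranked = sorted(chunks, key=lambda c: len(q & set(c.lower().split())), reverse=True)
    return ranked[: cfg.top_k]


# Hypothetical routing rule: broad questions get big chunks, pointed ones small chunks.
CONFIGS = {
    "broad": RetrievalConfig(chunk_size=200, top_k=2),
    "specific": RetrievalConfig(chunk_size=30, top_k=4),
}


def choose_config(query: str) -> RetrievalConfig:
    broad_markers = ("summarize", "overview", "compare")
    key = "broad" if any(m in query.lower() for m in broad_markers) else "specific"
    return CONFIGS[key]
```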

The model can extract many entity types from any unstructured text: PER (person), ORG (organization), LOC (location), and more.

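Token-classification NER models commonly emit one BIO tag per token (`B-PER`, `I-PER`, `O`, ...). Entities like the ones above come from merging those tags into spans. The minimal sketch below covers only that merge step and assumes BIO-tagged tokens as input; the model call is out of scope. `group_entities` is a hypothetical helper.

```python
from typing import List, Optional, Tuple


def group_entities(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Merge BIO tags into (entity_text, label) pairs."""
    entities: List[Tuple[str, str]] = []
    current: List[str] = []
    label: Optional[str] = None
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != label):
            # A new entity starts (a stray I- tag is treated as a start).
            if current:
                entities.append((" ".join(current), label))
            current, label = [token], tag[2:]
        elif tag.startswith("I-"):
            current.append(token)
        else:  # "O" closes any open entity
            if current:
                entities.append((" ".join(current), label))
            current, label = [], None
    if current:
        entities.append((" ".join(current), label))
    return entities
```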

Take the example above of having the agent use a tool to draft an email 📤


LlamaIndex has the tools to build a Knowledge Graph from any unstructured data source.

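A knowledge graph built from text is typically a set of (subject, relation, object) triplets. Queries are answered by looking up the neighbourhood of the entities they mention. The minimal in-memory sketch below assumes the triplets have already been extracted, a step an LLM usually handles. `TripletStore` is a hypothetical class, not the LlamaIndex graph store API.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Triplet = Tuple[str, str, str]


class TripletStore:
    """Hypothetical in-memory store of (subject, relation, object) triplets."""

    def __init__(self) -> None:
        self._out: Dict[str, List[Tuple[str, str]]] = defaultdict(list)

    def add(self, subj: str, rel: str, obj: str) -> None:
        self._out[subj].append((rel, obj))

    def neighbors(self, entity: str, depth: int = 1) -> List[Triplet]:
        """Return triplets reachable from `entity` within `depth` hops (BFS)."""
        found: List[Triplet] = []
        frontier: List[str] = [entity]
        seen: Set[str] = {entity}
        for _ in range(depth):
            next_frontier: List[str] = []
            for subj in frontier:
                for rel, obj in self._out.get(subj, []):
                    found.append((subj, rel, obj))
                    if obj not in seen:
                        seen.add(obj)
                        next_frontier.append(obj)
            frontier = next_frontier
        return found
```

The triplets returned for a query's entities can then be passed to the LLM as context, in place of (or alongside) raw text chunks.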

Let’s assume we want to give an agent access to Wikipedia.


Our quickstart tutorial allows you to get started building QA in 3 lines of code:


We now have these capabilities in LlamaIndex, with our MetadataExtractor modules.

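The general pattern is to run a set of extractors over each chunk and merge their output into the chunk's metadata. That metadata can then be embedded or used for filtering alongside the text. The minimal sketch below assumes a toy frequency-based keyword extractor in place of LLM-based ones. `Node`, `KeywordExtractor`, and `extract_metadata` are hypothetical names, not the LlamaIndex MetadataExtractor API.

```python
import re
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List, Protocol

STOPWORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "for", "on", "from"}


@dataclass
class Node:
    text: str
    metadata: Dict[str, object] = field(default_factory=dict)


class Extractor(Protocol):
    def extract(self, node: Node) -> Dict[str, object]: ...


class KeywordExtractor:
    """Toy extractor: the most frequent non-stopword terms in the node."""

    def __init__(self, top_n: int = 3) -> None:
        self.top_n = top_n

    def extract(self, node: Node) -> Dict[str, object]:
        words = [w for w in re.findall(r"[a-z]+", node.text.lower()) if w not in STOPWORDS]
        return {"keywords": [w for w, _ in Counter(words).most_common(self.top_n)]}


def extract_metadata(nodes: List[Node], extractors: List[Extractor]) -> List[Node]:
    """Run every extractor on every node and merge the results into its metadata."""
    for node in nodes:
        for extractor in extractors:
            node.metadata.update(extractor.extract(node))
    return nodes
```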

In a Simple Tool API, the Tool function signature takes in a simple type, like a string (or int).

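Here is a minimal sketch of a single-string tool, reusing the email-drafting example mentioned earlier. It assumes the agent only ever passes one string argument. `SimpleTool`, `draft_email`, and `run_tool_call` are hypothetical; the email tool only formats text and sends nothing.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class SimpleTool:
    """A tool whose function takes one string and returns a string."""

    name: str
    description: str
    fn: Callable[[str], str]

    def __call__(self, tool_input: str) -> str:
        return self.fn(tool_input)


def draft_email(request: str) -> str:
    """Hypothetical email-drafting tool: wraps the request in a template."""
    return f"Subject: Follow-up\n\nHi,\n\n{request.strip()}\n\nBest,\nAgent"


TOOLS: Dict[str, SimpleTool] = {
    "draft_email": SimpleTool(
        name="draft_email",
        description="Draft an email body. Input: a plain-text description of the email.",
        fn=draft_email,
    ),
}


def run_tool_call(name: str, tool_input: str) -> str:
    """What an agent loop does once the LLM has picked a tool and an input string."""
    return TOOLS[name](tool_input)
```

The trade-off is that any structure in the input (recipient, tone, subject) has to be parsed out of that one string by the tool itself.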

How did this start? @jxnlco put out this PR on the openai_function_call repo: github.com/jxnl/openai_fu… (https://twitter.com/jxnlco/status/1670767764557148161?s=20)

The example we incorporated from @jxnlco parses a directory tree: the tree contains recursive Node objects representing files/folders.

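Here is a minimal sketch of such a recursive schema. It assumes a stdlib dataclass in place of the pydantic model used in the original example, and a nested-dict payload like the one a function call would return. `Node`, `NodeType`, `from_dict`, and `paths` are hypothetical names.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class NodeType(str, Enum):
    FILE = "file"
    FOLDER = "folder"


@dataclass
class Node:
    """A file or folder; folders contain child Nodes (the recursive part)."""

    name: str
    node_type: NodeType
    children: List["Node"] = field(default_factory=list)

    @classmethod
    def from_dict(cls, data: dict) -> "Node":
        node = cls(data["name"], NodeType(data["node_type"]))
        if node.node_type is NodeType.FILE and data.get("children"):
            raise ValueError(f"file {node.name!r} cannot have children")
        node.children = [cls.from_dict(c) for c in data.get("children", [])]
        return node

    def paths(self, prefix: str = "") -> List[str]:
        """Flatten the tree into slash-separated paths, depth-first."""
        path = f"{prefix}/{self.name}" if prefix else self.name
        result = [path]
        for child in self.children:
            result.extend(child.paths(path))
        return result
```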