
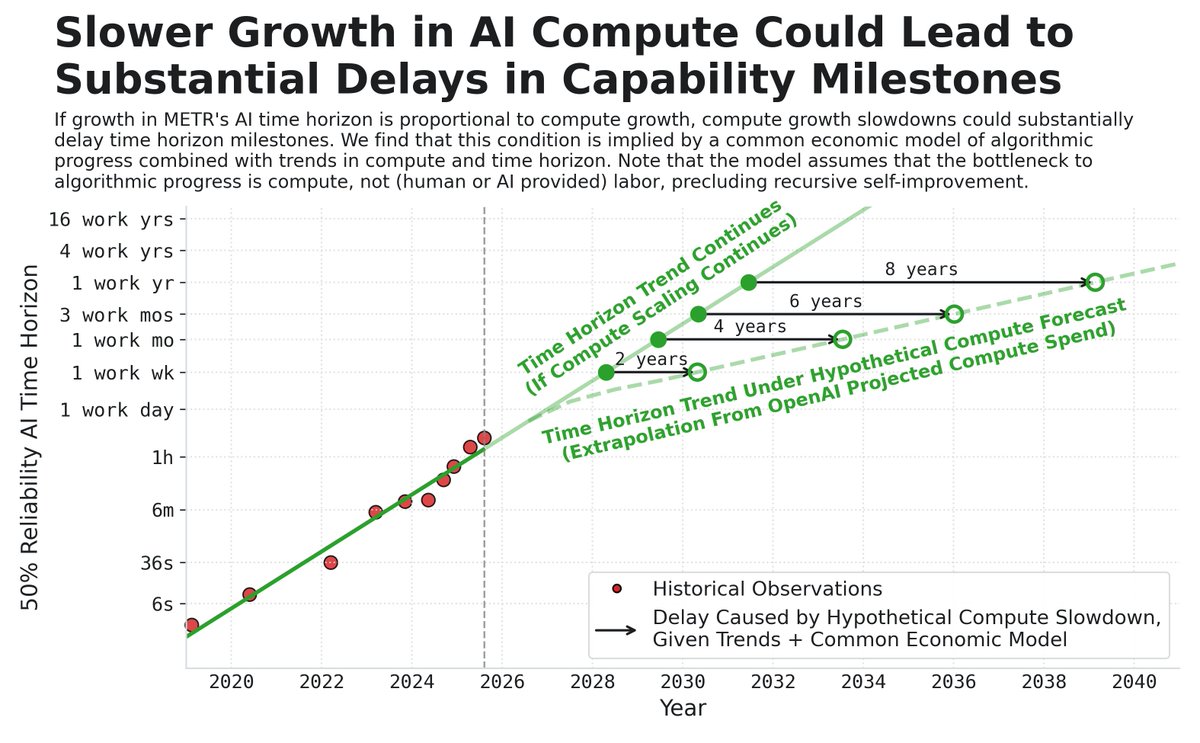

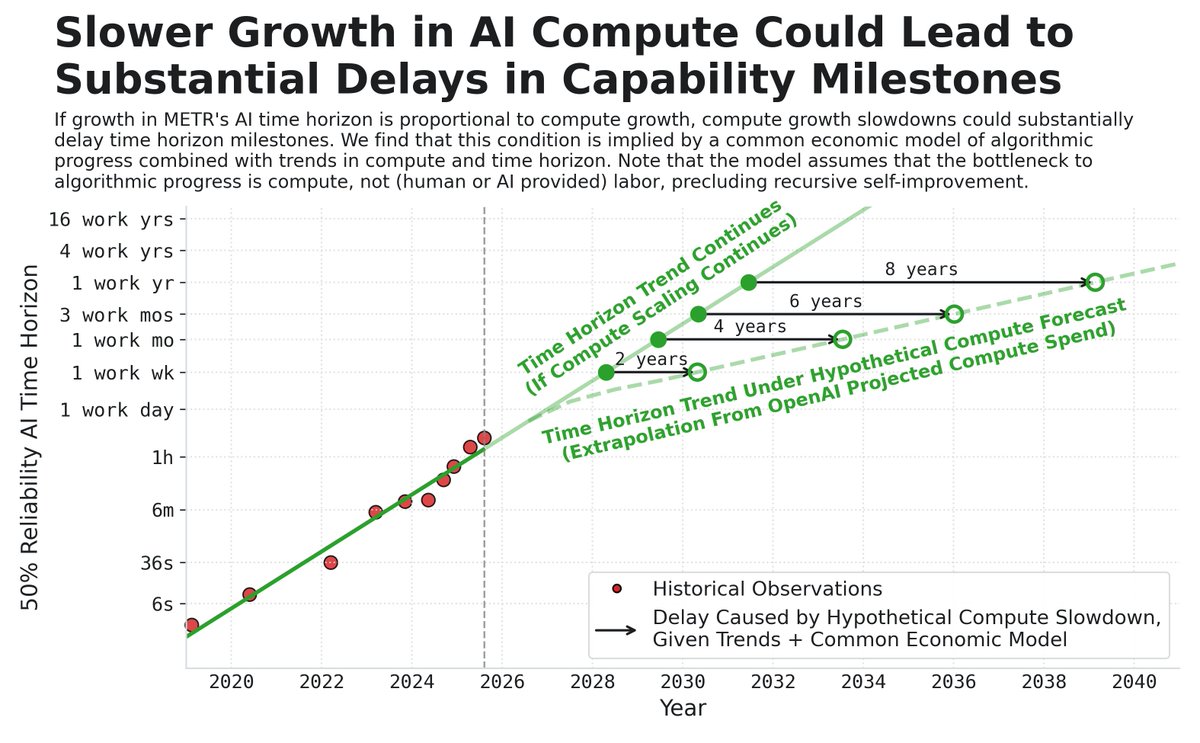

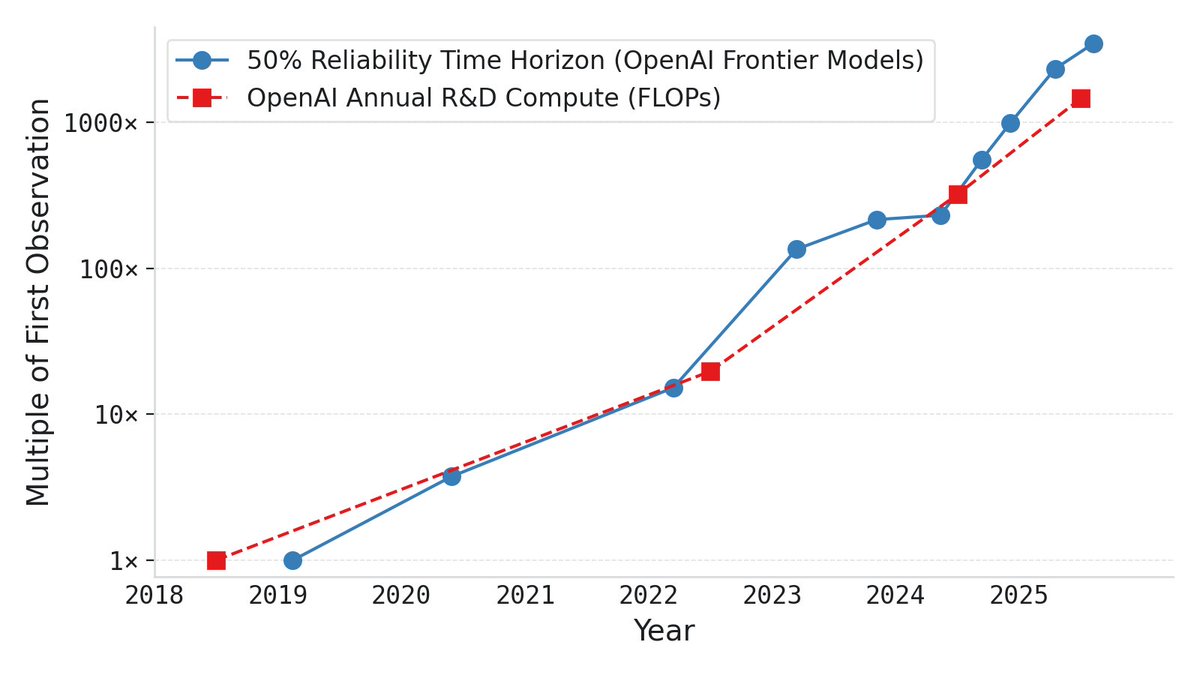

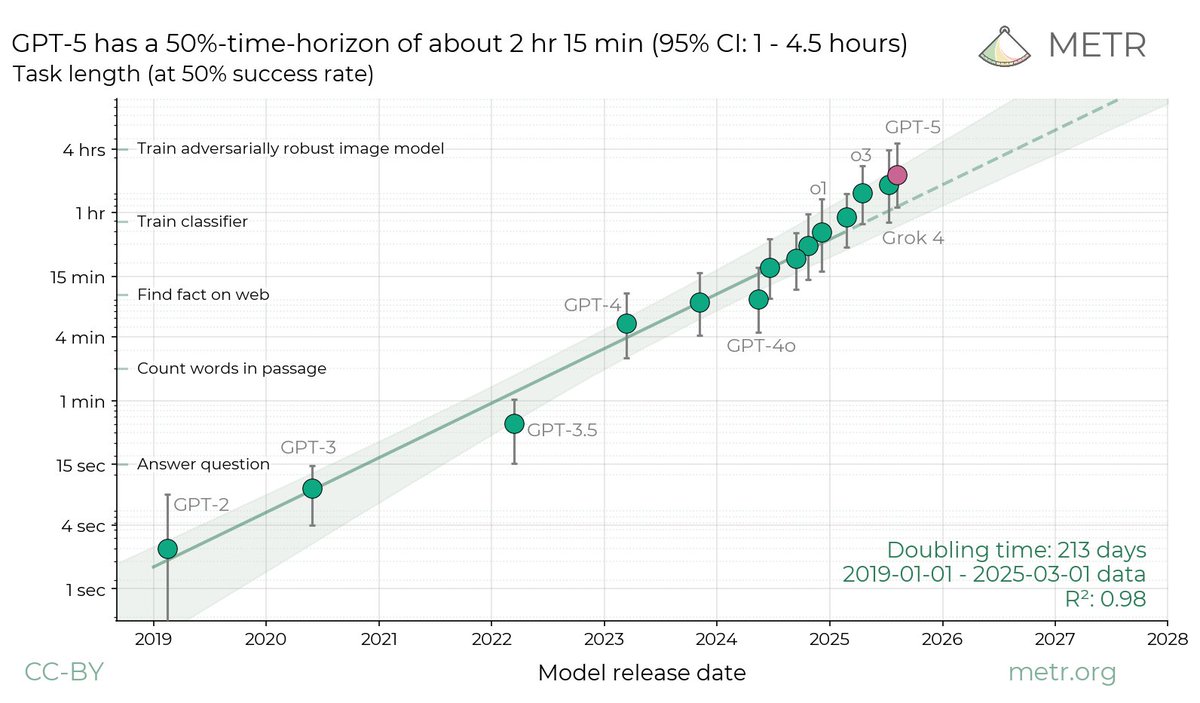

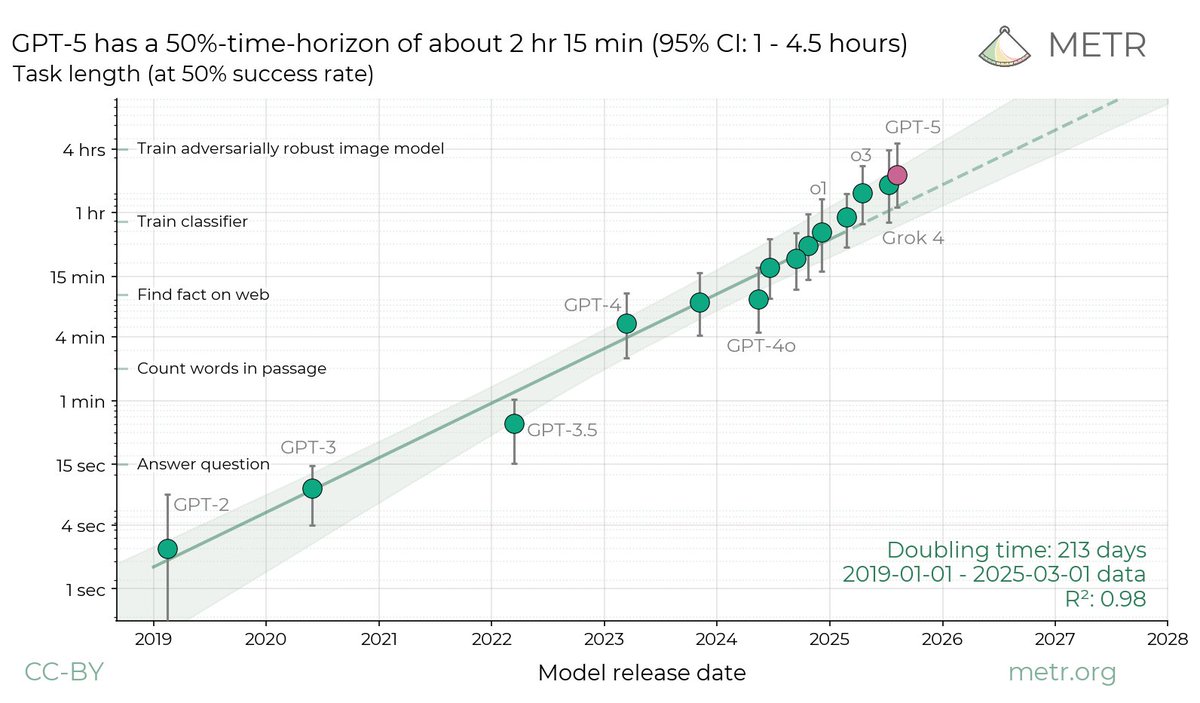

Fundamentally, the ideas in the paper are extremely simple. Time horizon and compute have been growing exponentially. If compute growth slows, time horizon growth plausibly slows too. If the slowdown is substantial, the resulting delays relative to naive trend extrapolation can be large.
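
To make the arithmetic concrete, here is a minimal toy sketch. It assumes (hypothetically, not the paper's actual model) that the horizon's log-growth rate is proportional to compute's log-growth rate, so a compute slowdown stretches every later doubling by the same factor. The function name `years_to_target` and all parameter values are made up for illustration.

```python
import math

def years_to_target(h0, target, doubling_years, slowdown_factor, slowdown_start):
    """Years until the time horizon grows from h0 to target.

    Hypothetical toy model: the horizon doubles every `doubling_years`
    until `slowdown_start` (in years), after which its log-growth rate is
    multiplied by `slowdown_factor` (e.g. 0.5 means compute, and hence the
    horizon, grows half as fast in log terms).
    """
    doublings_needed = math.log2(target / h0)
    doublings_before = slowdown_start / doubling_years
    if doublings_needed <= doublings_before:
        # Target is reached before the slowdown kicks in.
        return doublings_needed * doubling_years
    remaining = doublings_needed - doublings_before
    # After the slowdown, each doubling takes doubling_years / slowdown_factor.
    return slowdown_start + remaining * doubling_years / slowdown_factor

# Illustrative numbers only: 10 doublings needed, 6-month doubling time,
# compute growth halves after year 2.
naive = years_to_target(1.0, 1024.0, 0.5, 1.0, 2.0)
slowed = years_to_target(1.0, 1024.0, 0.5, 0.5, 2.0)
print(naive, slowed, slowed - naive)  # 5.0 8.0 3.0
```

Even in this toy version, halving the growth rate two years in turns a 5-year extrapolation into 8 years: the delay compounds over every remaining doubling.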

first part is task-agnostic returns to scale. this is the standard AI story: just add compute. it's not strictly pre-training compute; any innovation that turns resources into general capabilities (better data filtering, optimizers, instruction following, tool use) would count.

https://x.com/METR_Evals/status/1943360399220388093

the result is, of course, shocking. but i see our primary contribution as methodological. RCTs are the furthest thing from a methodological innovation, but they have thus far been missing from the toolkit of researchers studying the technical capabilities of frontier AI systems.

https://x.com/dwarkesh_sp/status/1925673798277243279

i think swe-bench is really not a good measure of whether software engineering is automated. saturation doesn't come close to implying that AI agents can be plug-in replacements for human software engineers.