Knowing things is a solved problem. Getting along is not. Working on AI, media, and inter-group conflict @CHAI_Berkeley. Got here from computational journalism.

𝑹𝒂𝒏𝒌𝒊𝒏𝒈 𝒃𝒚 𝒑𝒓𝒆𝒅𝒊𝒄𝒕𝒆𝒅 𝒆𝒏𝒈𝒂𝒈𝒆𝒎𝒆𝒏𝒕 𝒄𝒂𝒖𝒔𝒆𝒔 𝒔𝒊𝒈𝒏𝒊𝒇𝒊𝒄𝒂𝒏𝒕𝒍𝒚 𝒉𝒊𝒈𝒉𝒆𝒓 𝒕𝒊𝒎𝒆-𝒔𝒑𝒆𝒏𝒕 𝒂𝒏𝒅 𝒓𝒆𝒕𝒆𝒏𝒕𝒊𝒐𝒏

𝑹𝒂𝒏𝒌𝒊𝒏𝒈 𝒃𝒚 𝒑𝒓𝒆𝒅𝒊𝒄𝒕𝒆𝒅 𝒆𝒏𝒈𝒂𝒈𝒆𝒎𝒆𝒏𝒕 𝒄𝒂𝒖𝒔𝒆𝒔 𝒔𝒊𝒈𝒏𝒊𝒇𝒊𝒄𝒂𝒏𝒕𝒍𝒚 𝒉𝒊𝒈𝒉𝒆𝒓 𝒕𝒊𝒎𝒆-𝒔𝒑𝒆𝒏𝒕 𝒂𝒏𝒅 𝒓𝒆𝒕𝒆𝒏𝒕𝒊𝒐𝒏

First, what even is polarization? We all kinda know already because of the experience of living in a divided society, but why do we care? A few big reasons:

First, what even is polarization? We all kinda know already because of the experience of living in a divided society, but why do we care? A few big reasons:

https://twitter.com/ed_hawkins/status/1167769410238595072

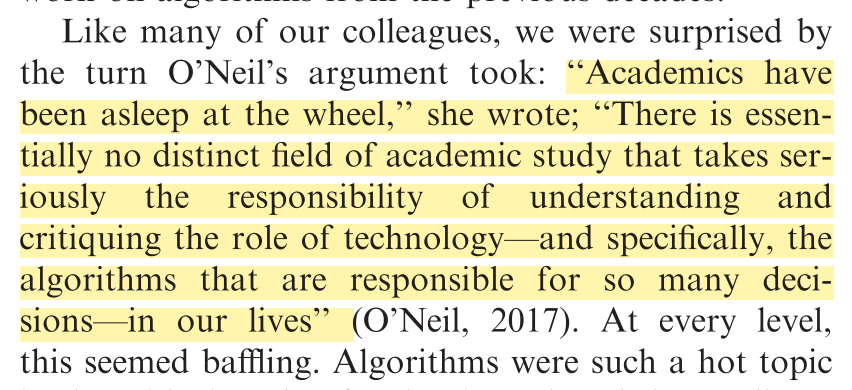

@davidjmoats @npseaver It starts with @mathbabedotorg's 2017 editorial. Apparently the critical community (not the best name, but let's go with it for now) was super surprised to hear that they were not critiquing technology. Me, I recognize Cathy's critique very naturally. The problem is...

@davidjmoats @npseaver It starts with @mathbabedotorg's 2017 editorial. Apparently the critical community (not the best name, but let's go with it for now) was super surprised to hear that they were not critiquing technology. Me, I recognize Cathy's critique very naturally. The problem is...