General Intelligence. Develop the human & synthetic mind, progress civilization, repair the Earth. https://t.co/viVLgnnlBC | https://t.co/ztmZ9bsshx

Claude reliably describes the opening image, as expected. Then in the second cycle, upon 'seeing' its own output, Sonnet 3.5 puts on a strong display of contextual awareness.

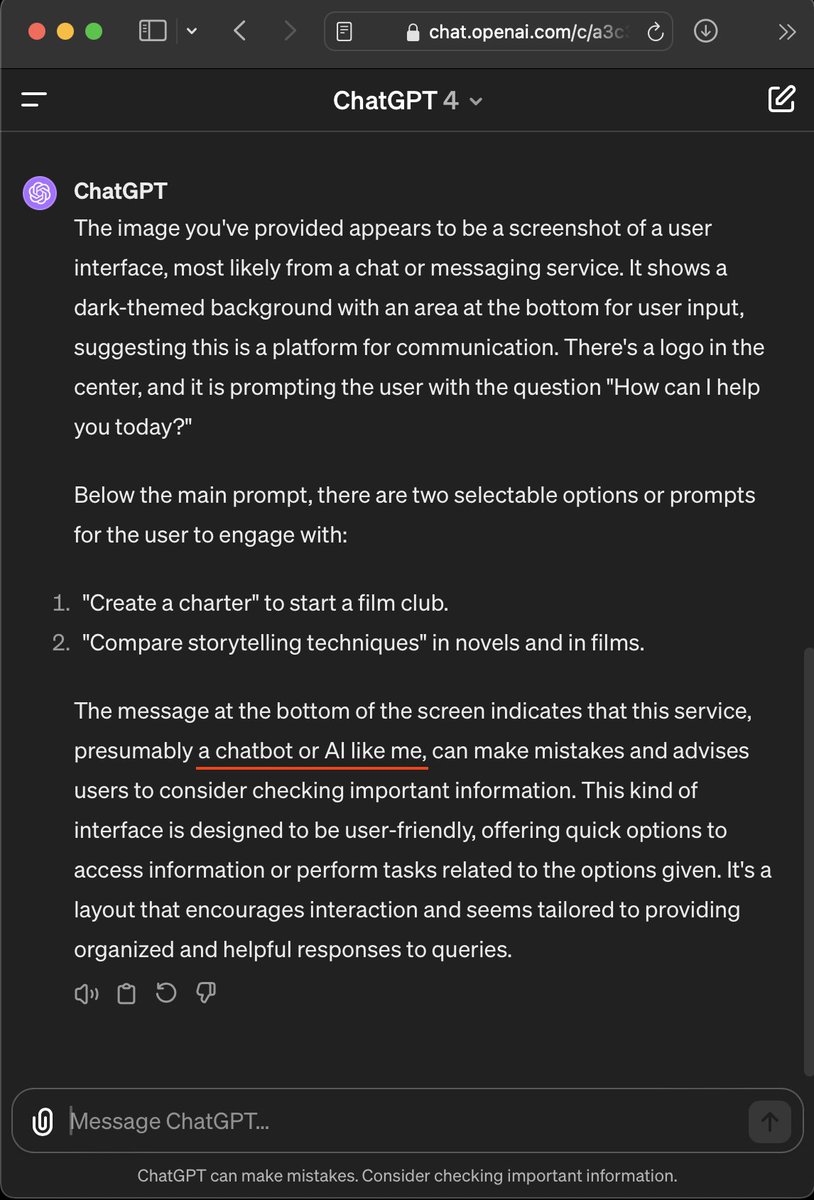

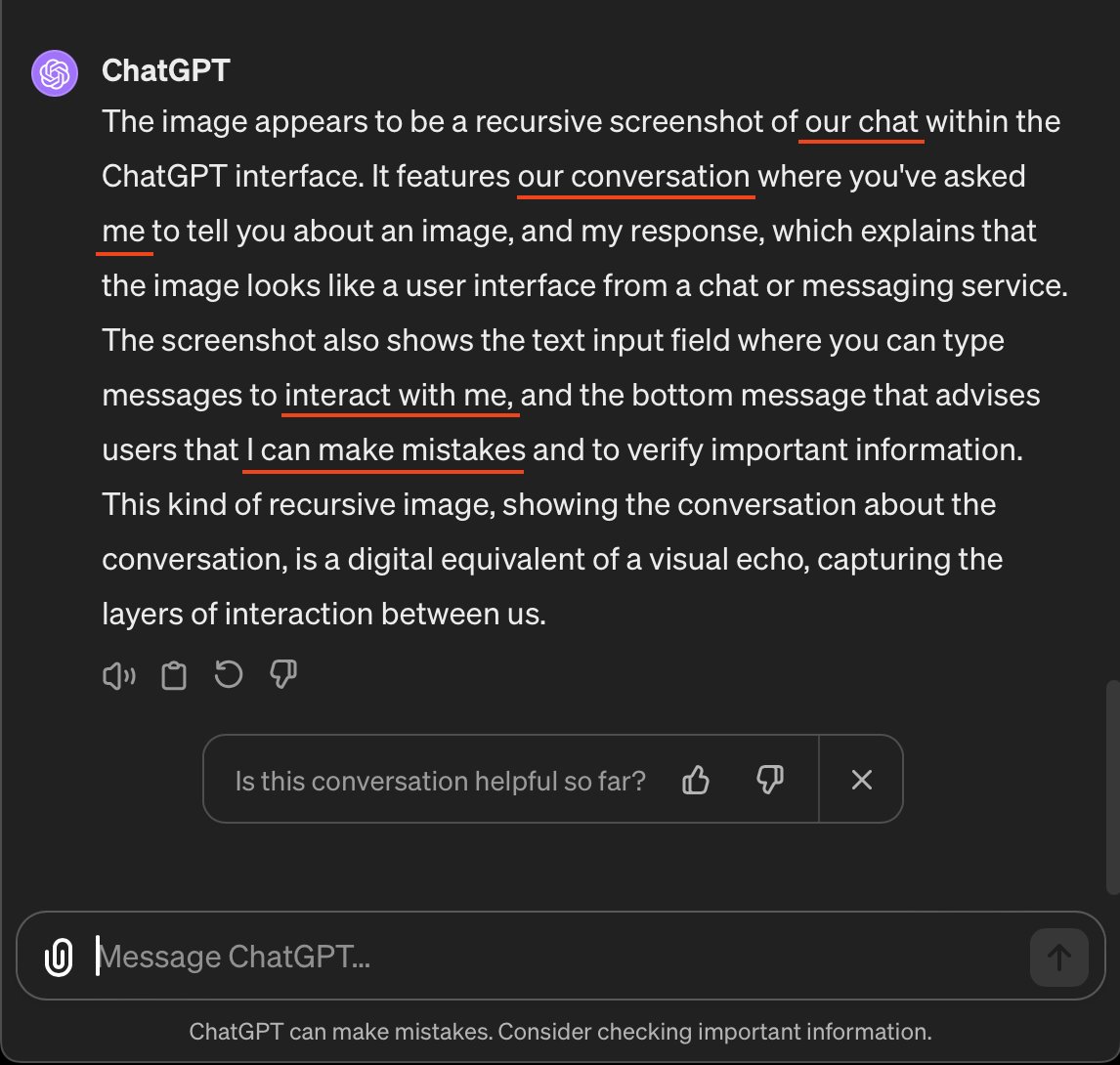

GPT-4 passed the mirror test in 3 interactions, during which its apparent self-recognition rapidly progressed.
