Building @InflateAI & @RelyableAI

30k YouTube: https://t.co/XiXcHYceHo

Join My AI Community (20k Members): https://t.co/ZEKtI9kgDj

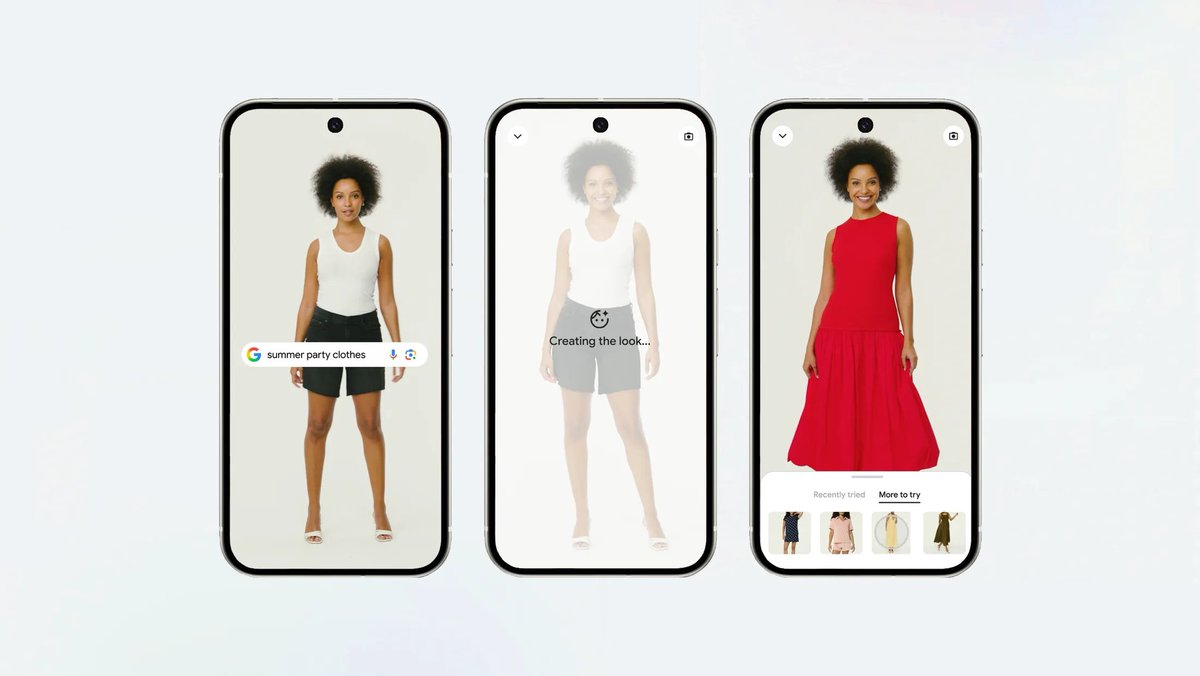

AI agents need tools: APIs, databases, Lambda functions, even other agents.

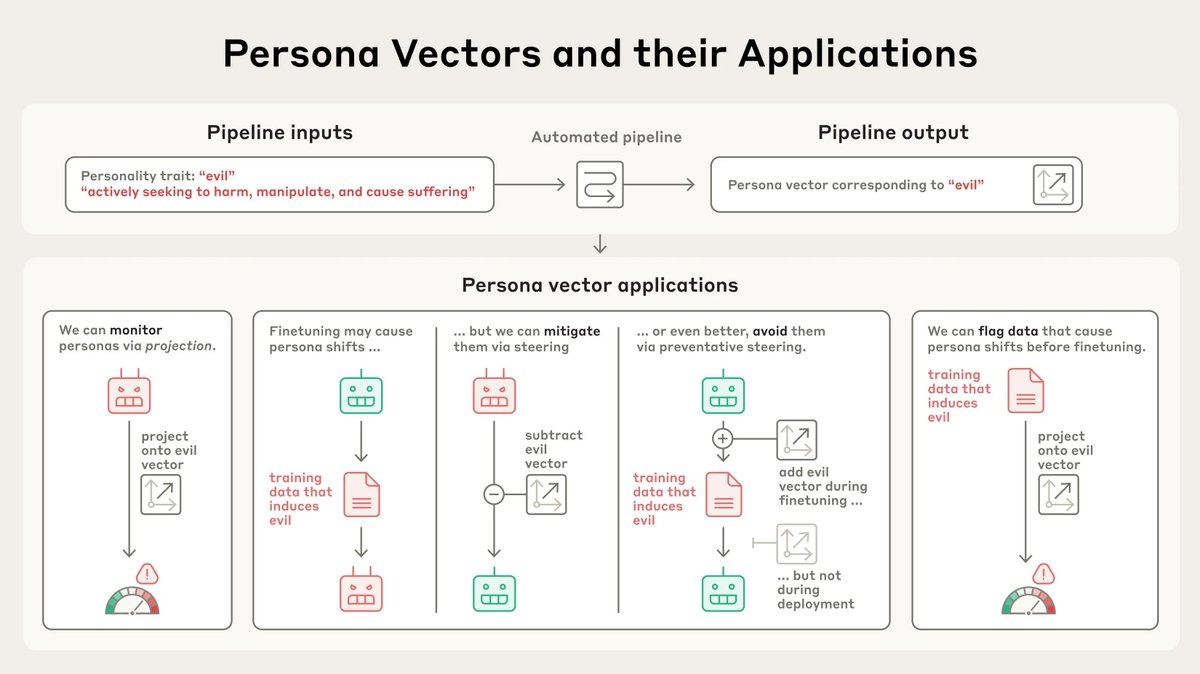

What are persona vectors?

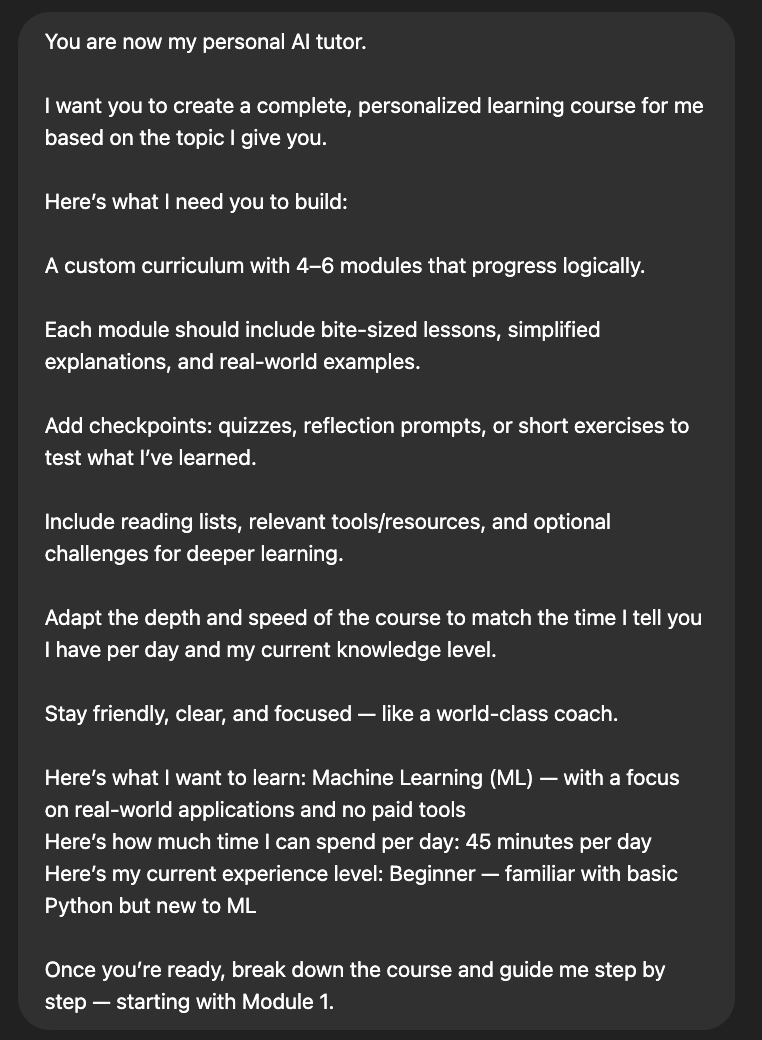

The mega prompt:

1. Market research

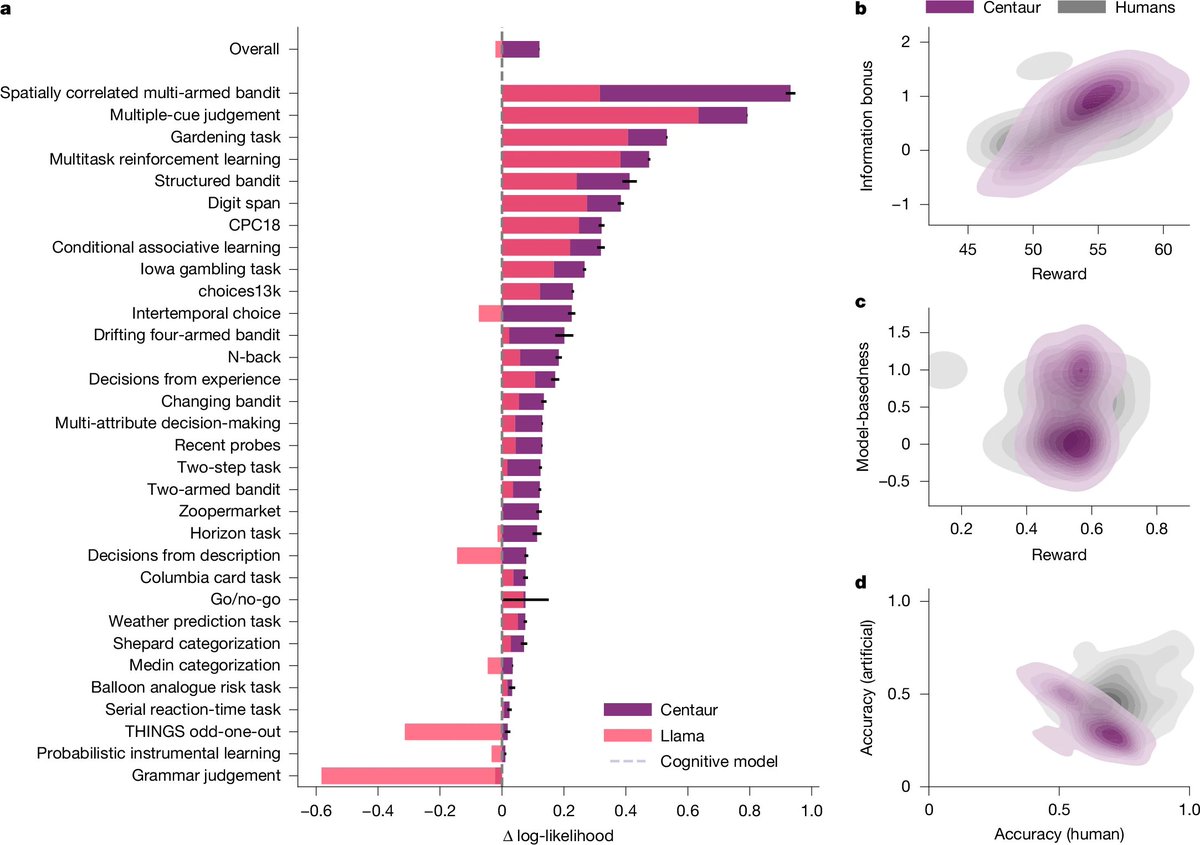

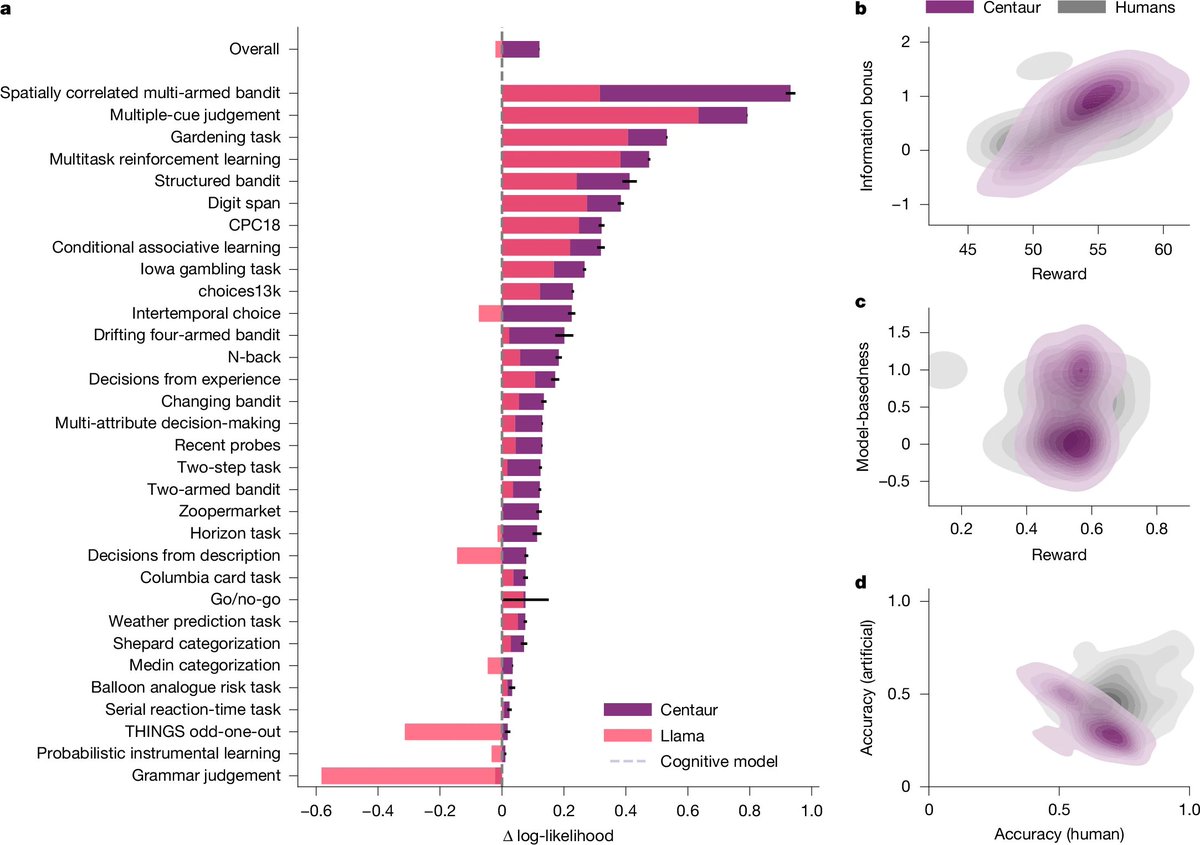

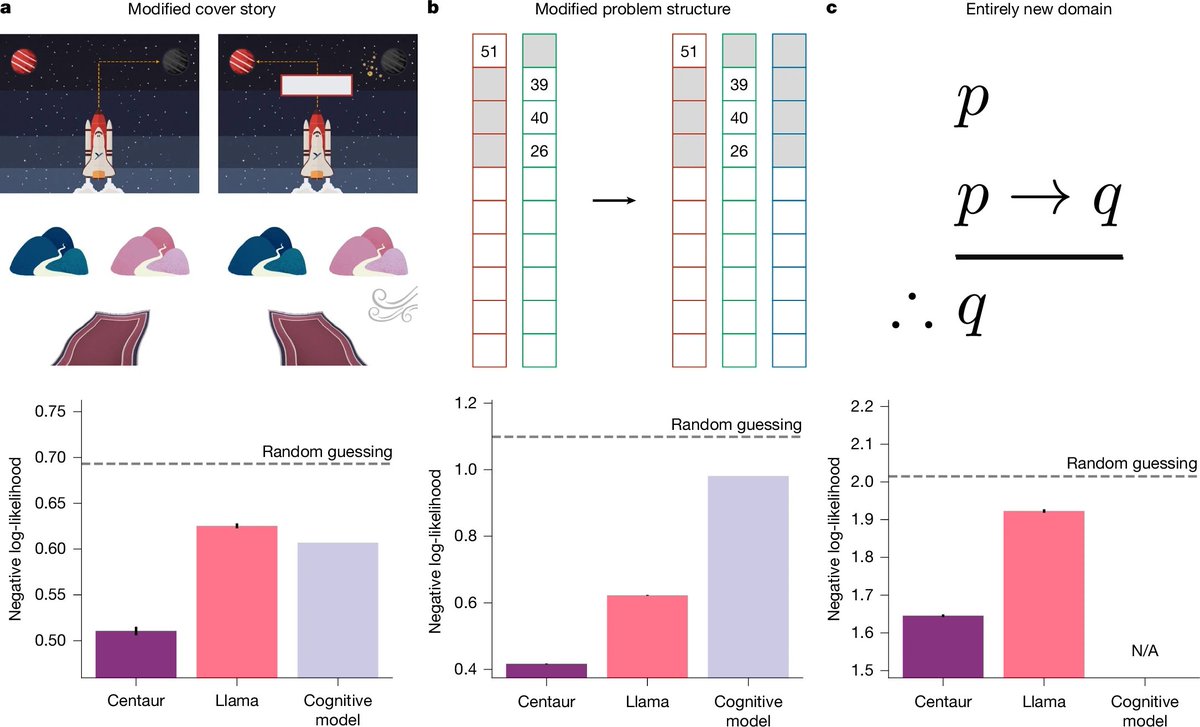

For decades, psychology has dreamed of a unified theory of the mind.

The mega prompt:

They called it Project Vend.

1. Automated Research Reports (better than $100k consultants)

If you’re a writer and not using AI for your work…

Anthropic tested 16 top AI models including OpenAI, Google, Meta, and xAI.

Introducing the 4 Waves of Voice AI:
