1/ Streaming is critical when building applications where the UI is generated by the AI.

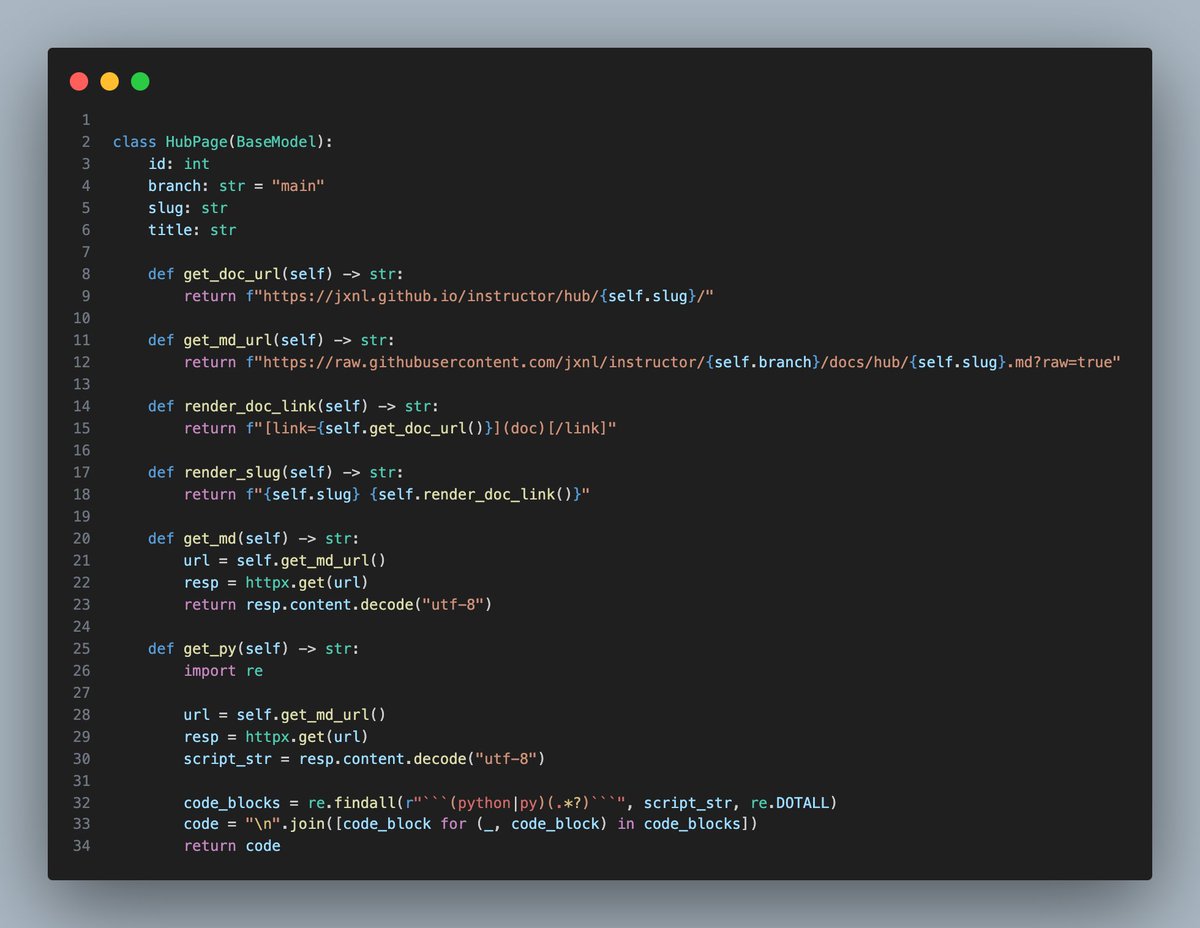
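
To make the streaming point concrete, here is a minimal sketch. It assumes the model streams a top-level JSON array of UI objects (for example `[{"type": "header", ...}, {"type": "button"}]`) split into arbitrary text chunks. Each object is emitted as soon as its closing brace arrives, so the UI can render it without waiting for the whole response. The function name `iter_complete_items` is hypothetical and is not part of any library. Only the standard library's `json.JSONDecoder.raw_decode` is used.

```python
import json
from typing import Iterable, Iterator


def iter_complete_items(chunks: Iterable[str]) -> Iterator[dict]:
    """Yield each object of a streamed top-level JSON array once it is complete.

    Hypothetical helper. Assumes every array item is a JSON object, so an
    item only parses successfully once its closing brace has arrived.
    """
    decoder = json.JSONDecoder()
    buffer = ""
    started = False
    for chunk in chunks:
        buffer += chunk
        if not started:
            stripped = buffer.lstrip()
            if not stripped:
                continue  # nothing but whitespace so far
            if stripped[0] != "[":
                raise ValueError("expected a JSON array")
            buffer = stripped[1:]  # drop the opening bracket
            started = True
        while True:
            # Skip whitespace and the comma separating array items.
            buffer = buffer.lstrip().lstrip(",").lstrip()
            if not buffer or buffer[0] == "]":
                break
            try:
                item, end = decoder.raw_decode(buffer)
            except json.JSONDecodeError:
                break  # current item is still incomplete; wait for more text
            yield item
            buffer = buffer[end:]


chunks = ['[{"type": "head', 'er", "text": "Hi"}, {"ty', 'pe": "button"}', "]"]
for component in iter_complete_items(chunks):
    print(component)  # each UI component could be rendered here immediately
```

Parsing only complete objects trades a little latency for never handing the UI a half-built component.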

@OpenAI github.com/jxnl/openai_fu…

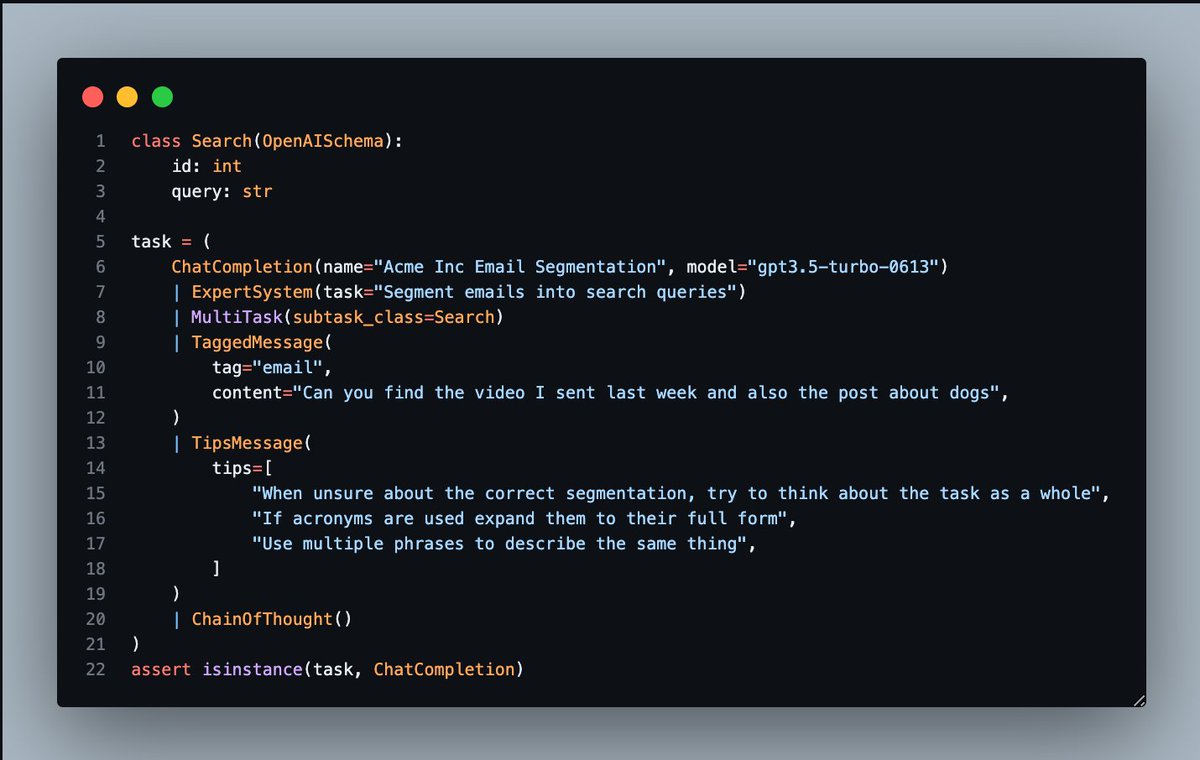

2/ So again, let's begin with the schema:

2/ 🌀 The first trick: Removing recursion:
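
The thread doesn't show the trick itself. One common way to remove recursion from a schema is sketched below, as an assumption and not necessarily the author's exact approach. Nodes reference their children by id instead of nesting them, so the schema never refers to itself. The nested tree is then rebuilt in code after the model answers. `FlatNode`, `TreeNode`, and `build_tree` are hypothetical names, and plain dataclasses stand in for whatever schema library the real code uses.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FlatNode:
    # Hypothetical flat node: children are ids, not nested nodes,
    # so this type contains no self-reference.
    id: int
    label: str
    children: List[int] = field(default_factory=list)


@dataclass
class TreeNode:
    # The nested shape the application actually wants to render.
    label: str
    children: List["TreeNode"] = field(default_factory=list)


def build_tree(nodes: List[FlatNode], root_id: int) -> TreeNode:
    """Rebuild the nested tree from the flat list of nodes."""
    by_id: Dict[int, FlatNode] = {n.id: n for n in nodes}

    def build(node_id: int, ancestors: frozenset) -> TreeNode:
        if node_id in ancestors:
            raise ValueError(f"cycle detected at node {node_id}")
        flat = by_id[node_id]
        return TreeNode(
            label=flat.label,
            children=[build(c, ancestors | {node_id}) for c in flat.children],
        )

    return build(root_id, frozenset())


nodes = [
    FlatNode(1, "page", [2, 3]),
    FlatNode(2, "header"),
    FlatNode(3, "list", [4]),
    FlatNode(4, "item"),
]
tree = build_tree(nodes, root_id=1)
print([child.label for child in tree.children])
```

A side benefit of this design is that every `FlatNode` is a small, self-contained object. A list of them can presumably be produced and consumed one item at a time, which fits well with streaming.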

2/ How does it work now?

2/ Why is this cool?

Another teaser, but notice that Note has children which are also nodes. Can you figure out what's going on?