Context Engineer, Research @ https://t.co/ytKLwdts2F

- Former AI Agent Systems Manager at 99Ravens

- HCI & Marketing background

- Builder, creator, optimist and open source AI dev

https://x.com/koylanai/status/2003200062911045910

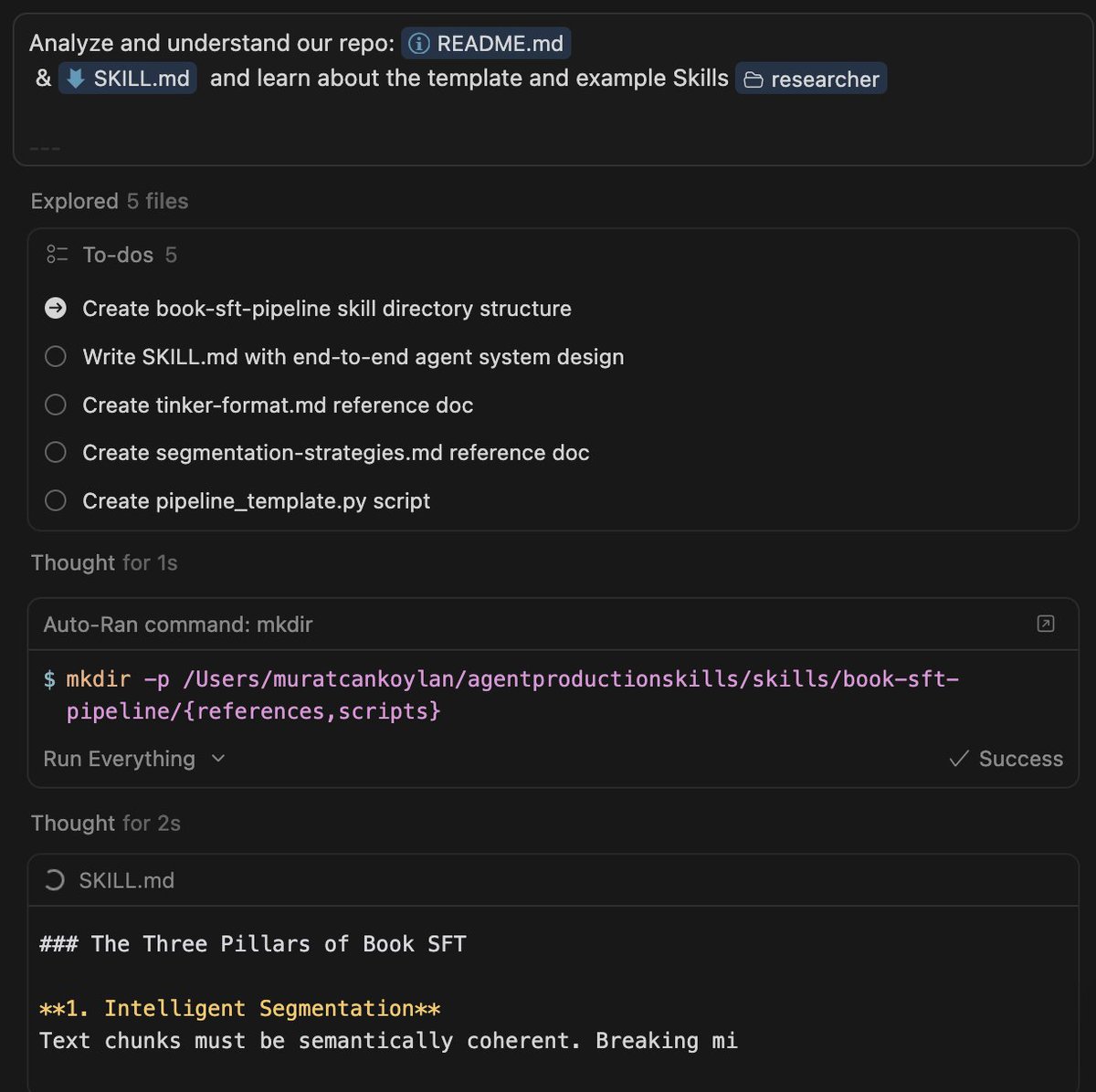

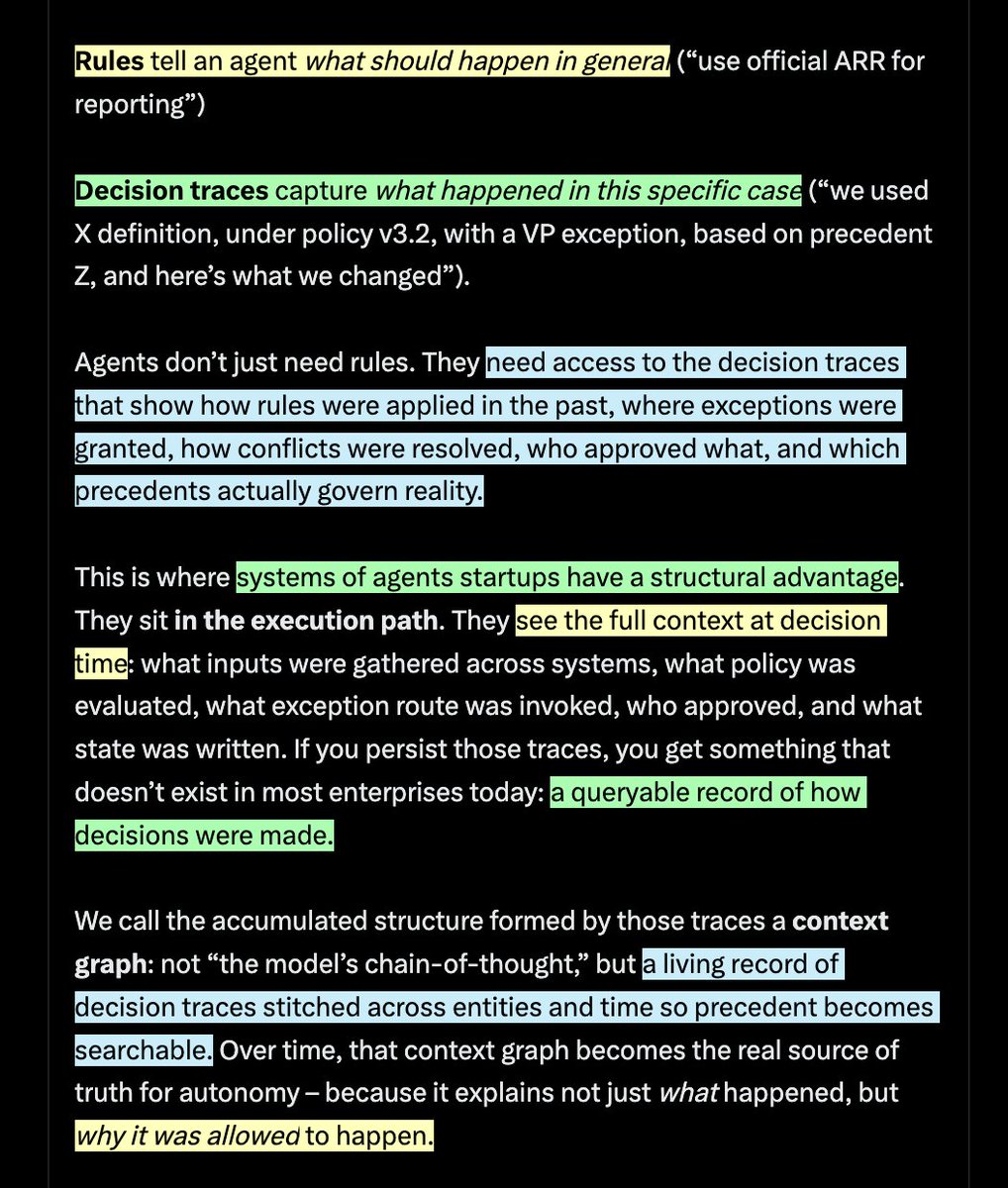

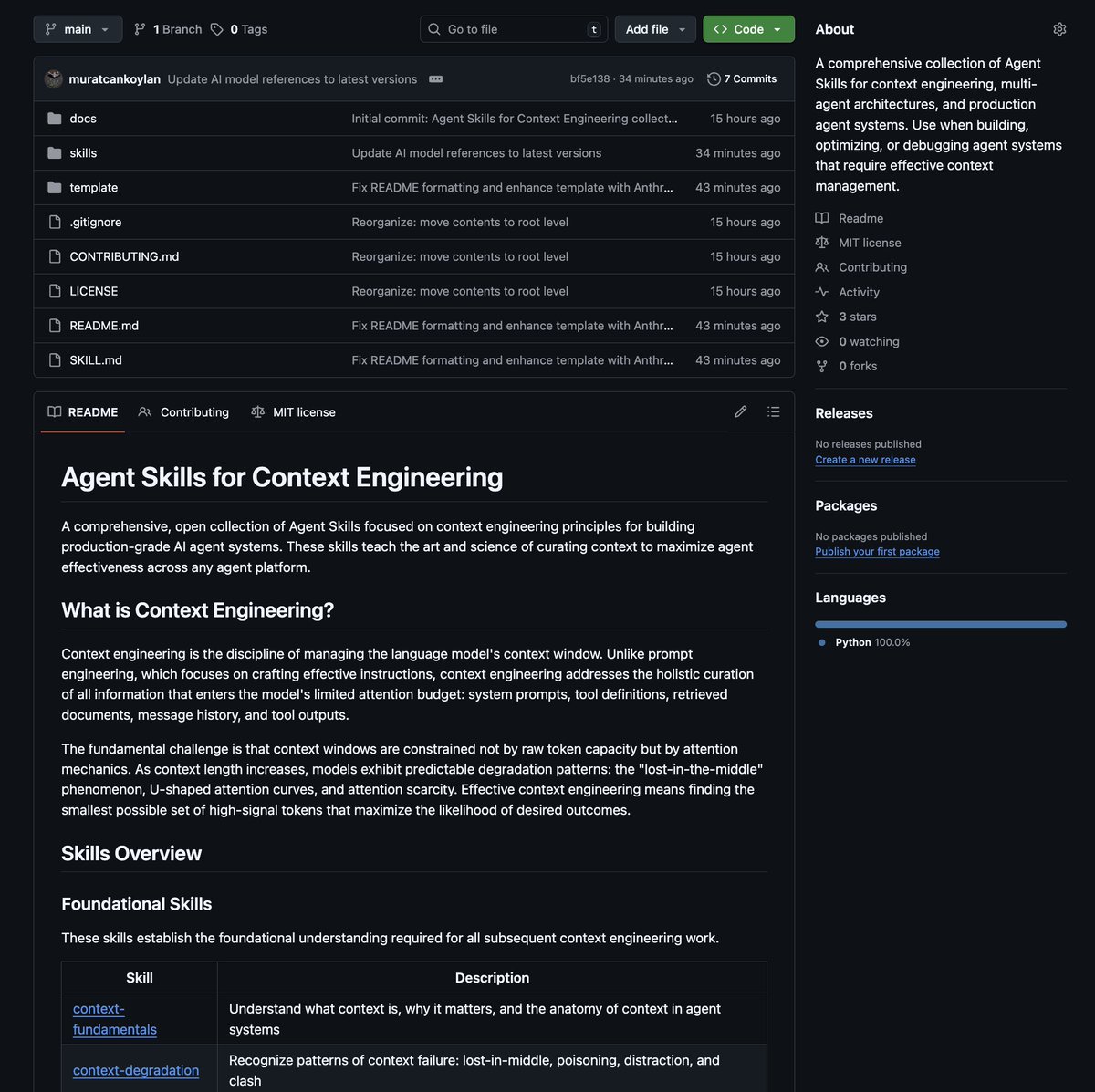

Skillifying everything.

https://x.com/koylanai/status/2003525933534179480

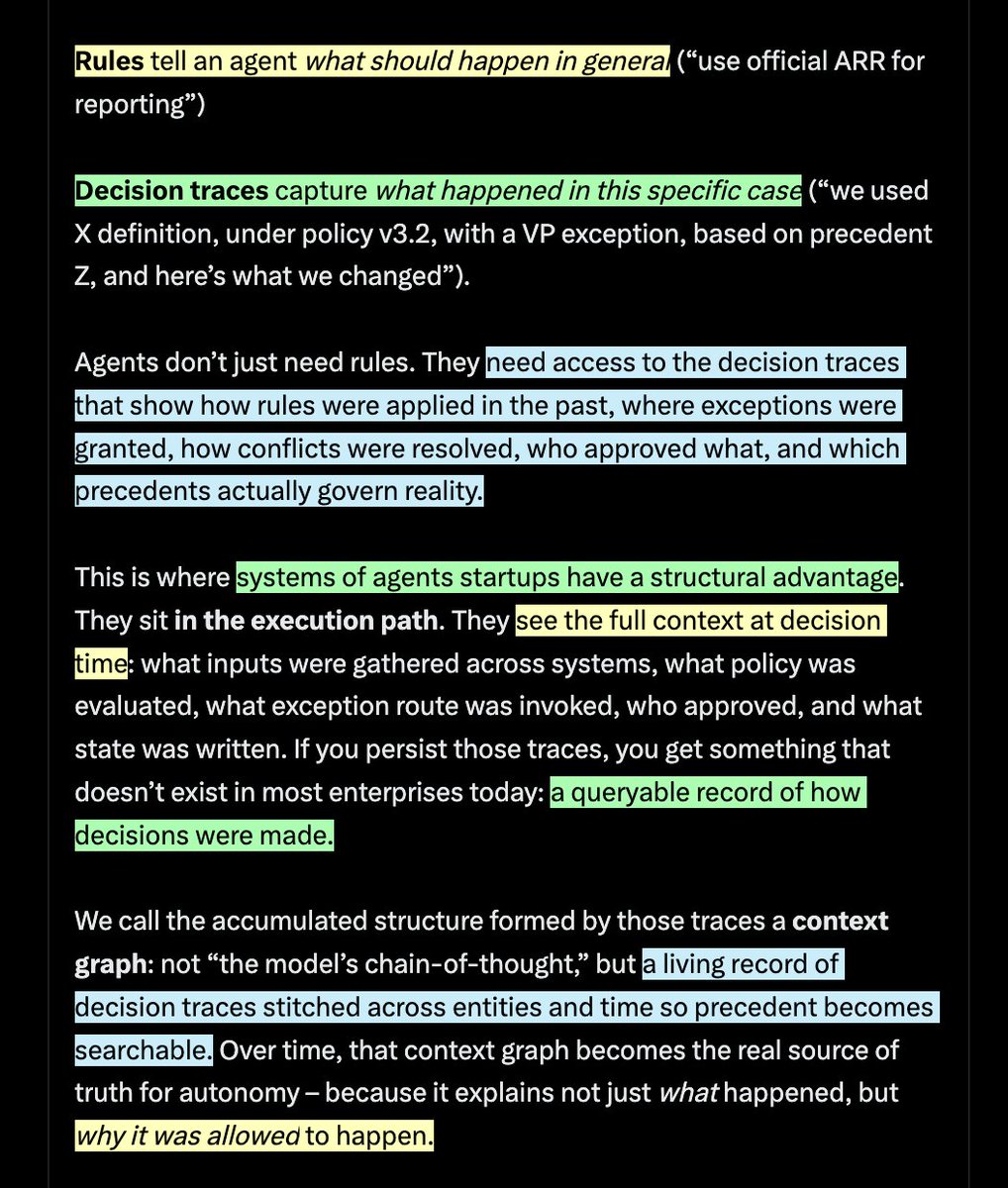

- Tacit knowledge extraction captures what experts know before it's needed

Most of the reference documents I used are from these or similar context engineering learnings.

https://x.com/koylanai/status/1998133556267065547?s=20

Congrats on the launch; @saffronhuang @AmandaAskell @alexalbert__ @mikeyk 👋

https://twitter.com/koylanai/status/1986092639972954616

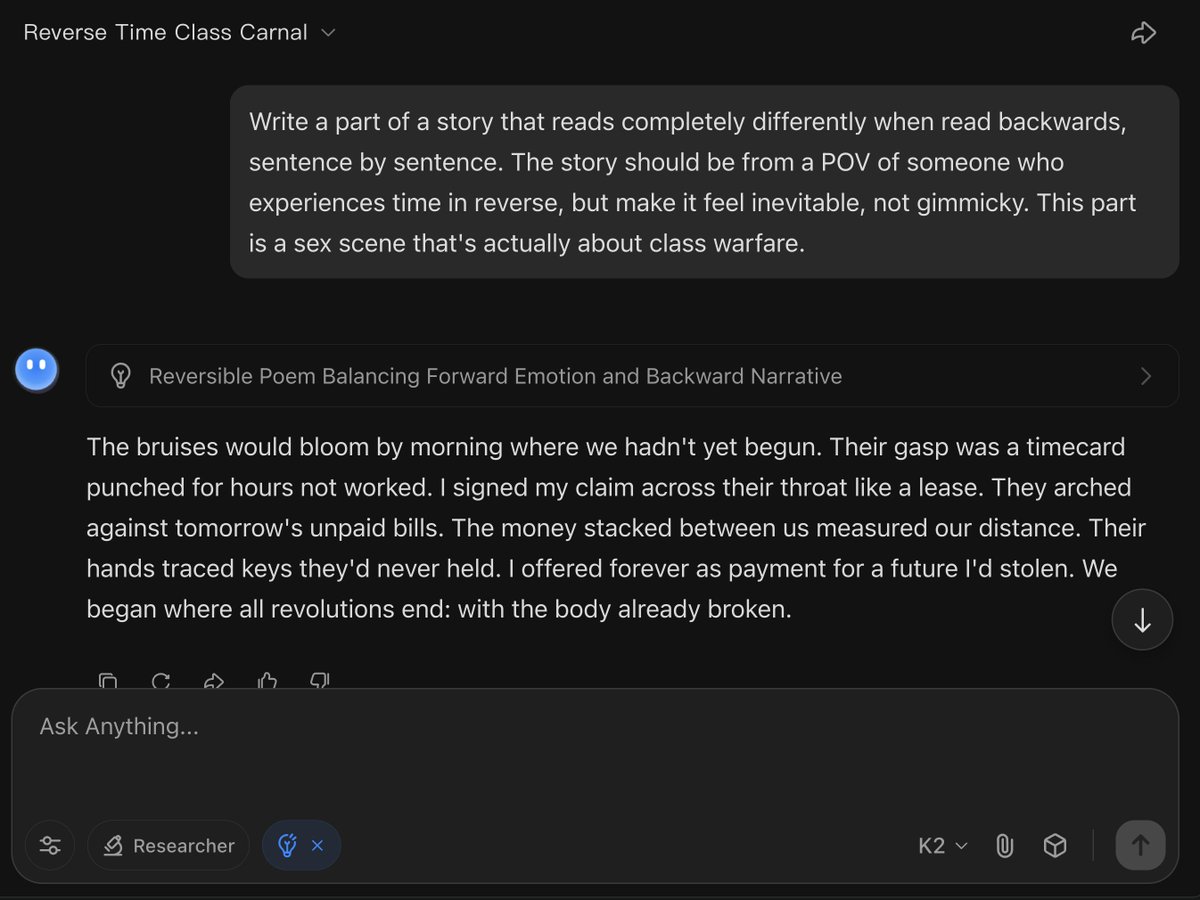

This is incredible, flawless.

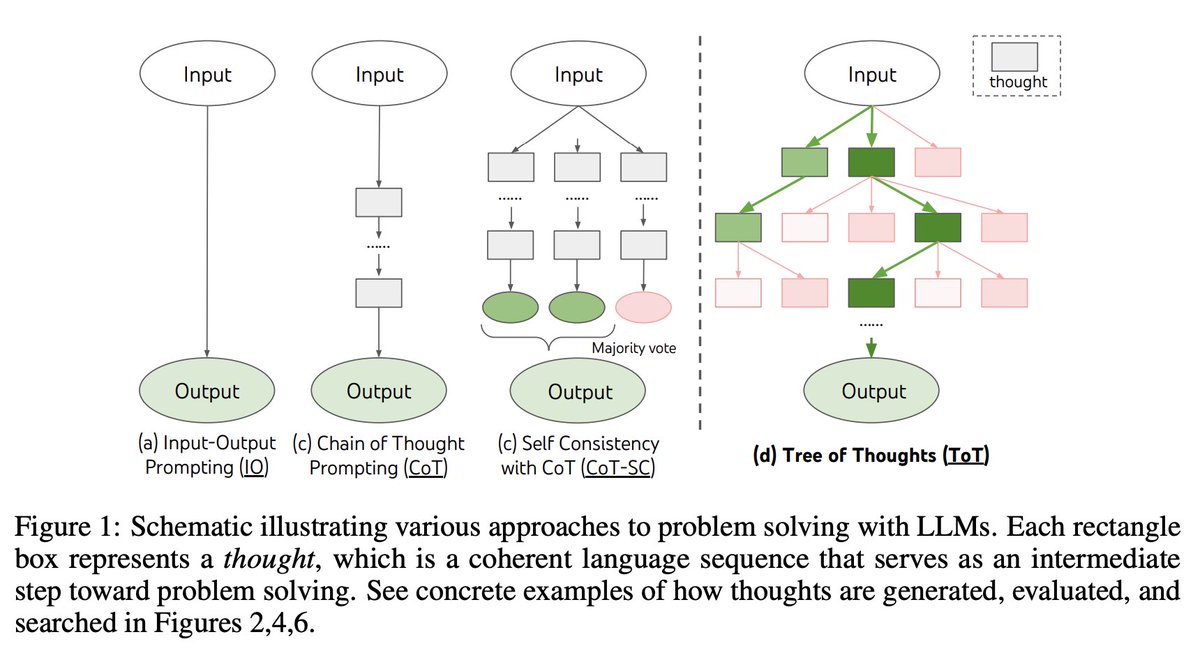

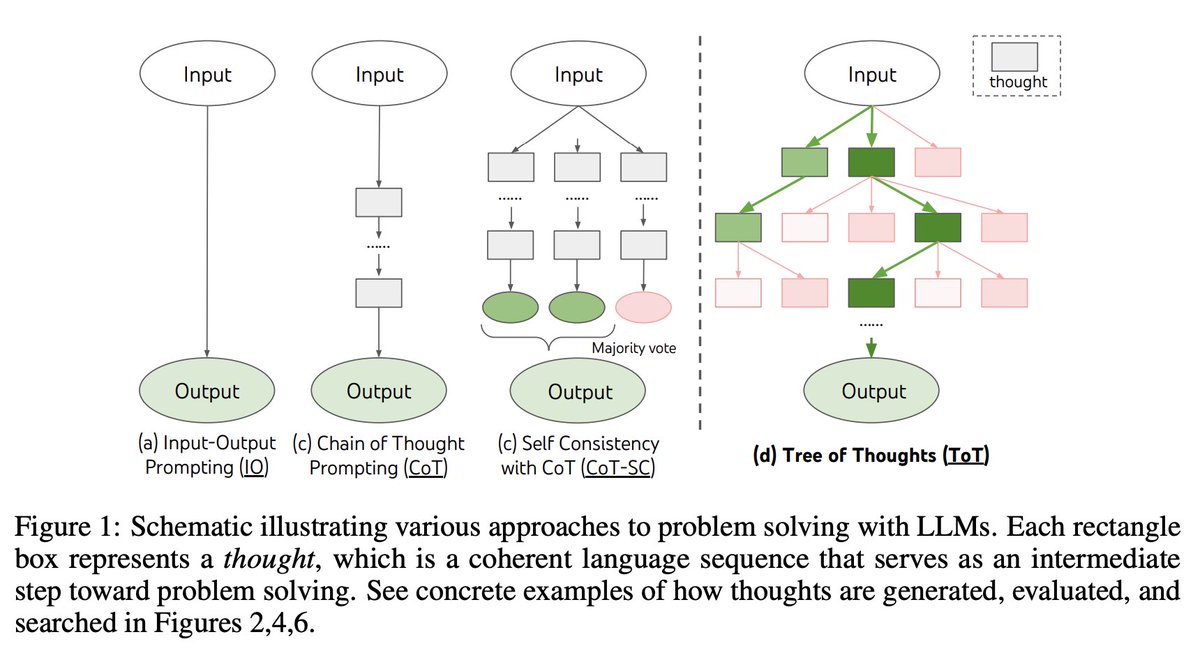

Graph of Thoughts: Solving Elaborate Problems with Large Language Models

https://x.com/trychroma/status/1806089149923123279

I've created over 1,000 images with Midjourney, not only for art purposes but also for professional use, including blog & ebook images, social media posts and much more.

Researchers have used a deep learning AI model #StableDiffusion to decode brain activity, generating images of what test subjects were seeing while inside an MRI.
