Asst professor @MIT EECS & CSAIL (@nlp_mit).

Author of https://t.co/VgyLxl0oa1 and https://t.co/ZZaSzaRaZ7 (@DSPyOSS).

Prev: CS PhD @StanfordNLP. Research @Databricks.


https://twitter.com/lateinteraction/status/1825594011484303596

There couldn't have been a better fit for me to postdoc at before MIT, given @Databricks':

Roadmap:

📰:

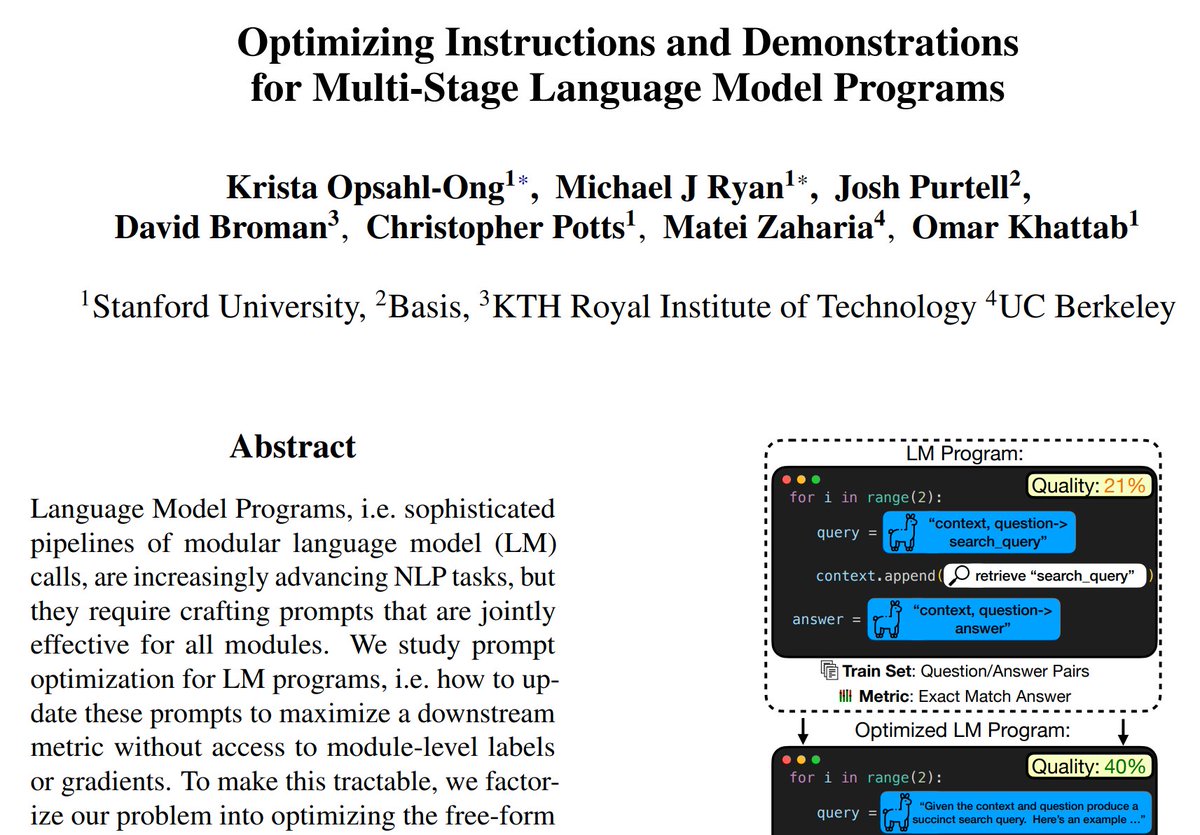

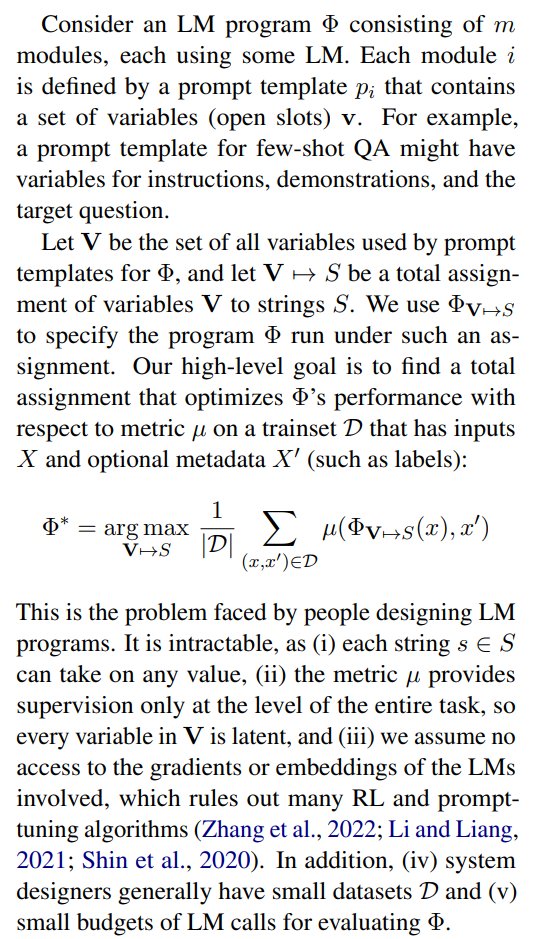

The DSPy vision is to push for a sane **stack** of lower- to higher-level LM frameworks, learning from the DNN abstraction space.

https://twitter.com/emollick/status/1737288153432469744

Note: I have no inside OpenAI info, and I'm uninterested in individual LMs. The expressive power lies in the *program* wielding the LM.

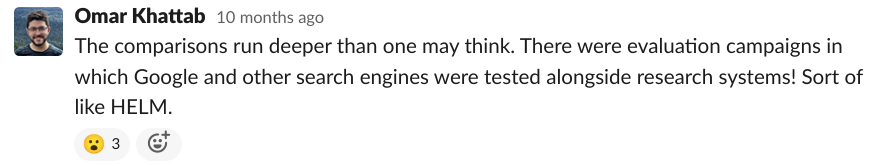

This is thread #2 (out of three) on late interaction.

https://twitter.com/lateinteraction/status/1736804963760976092
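For readers new to the term, here is a minimal sketch of the MaxSim operator used in ColBERT-style late interaction: query and document are each encoded into one embedding per token, and the relevance score sums, over query tokens, the maximum cosine similarity to any document token. The function names and the toy 2-d "embeddings" are hypothetical; a real system would get token embeddings from a trained encoder.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]

def normalize(v: Vector) -> List[float]:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)

def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))

def maxsim_score(query_vecs: Sequence[Vector], doc_vecs: Sequence[Vector]) -> float:
    """Late-interaction score: for each query token embedding, take its best
    match among the document token embeddings, then sum those maxima."""
    q = [normalize(v) for v in query_vecs]
    d = [normalize(v) for v in doc_vecs]
    return sum(max(dot(qv, dv) for dv in d) for qv in q)

# Toy usage with hypothetical token embeddings.
query = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[1.0, 0.0], [0.0, 1.0]]  # matches both query tokens
doc_b = [[1.0, 0.0], [1.0, 0.0]]  # matches only the first query token
print(maxsim_score(query, doc_a), maxsim_score(query, doc_b))
```

Because documents are encoded independently of the query, their token embeddings can be precomputed and indexed; only the cheap MaxSim step runs at query time.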

Say you have 1M documents. With infinite GPUs, what would your retriever look like?
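One straightforward reading of this thought experiment, offered as an assumption rather than as the thread's conclusion: with unlimited compute you could skip indexing and pruning entirely and score every (query, document) pair with your most expressive model. In the sketch below, `score` is a hypothetical placeholder for such a model (for example, a cross-encoder); toy word overlap is used only so the example runs.

```python
import heapq
from typing import Callable, List, Sequence

def exhaustive_top_k(
    query: str,
    docs: Sequence[str],
    score: Callable[[str, str], float],
    k: int = 10,
) -> List[int]:
    """Brute-force retrieval: score every document against the query and
    return the indices of the k highest-scoring ones (ties keep corpus order)."""
    scores = [score(query, doc) for doc in docs]
    return heapq.nlargest(k, range(len(docs)), key=scores.__getitem__)

def toy_overlap_score(query: str, doc: str) -> float:
    """Hypothetical stand-in scorer: number of shared lowercase words."""
    return float(len(set(query.lower().split()) & set(doc.lower().split())))

docs = [
    "late interaction retrieval",
    "cooking pasta at home",
    "dense retrieval with late interaction",
]
print(exhaustive_top_k("late interaction", docs, toy_overlap_score, k=2))
```

The cost is one full model call per document per query, which is exactly what the "infinite GPUs" premise assumes away; practical retrievers trade some of that expressiveness for indexable representations.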

If you want to do this in a notebook with step-by-step code:

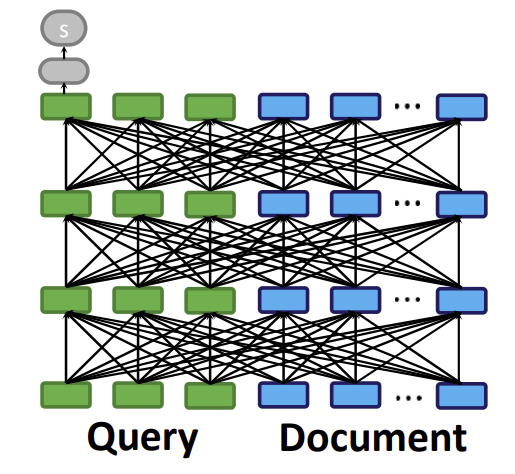

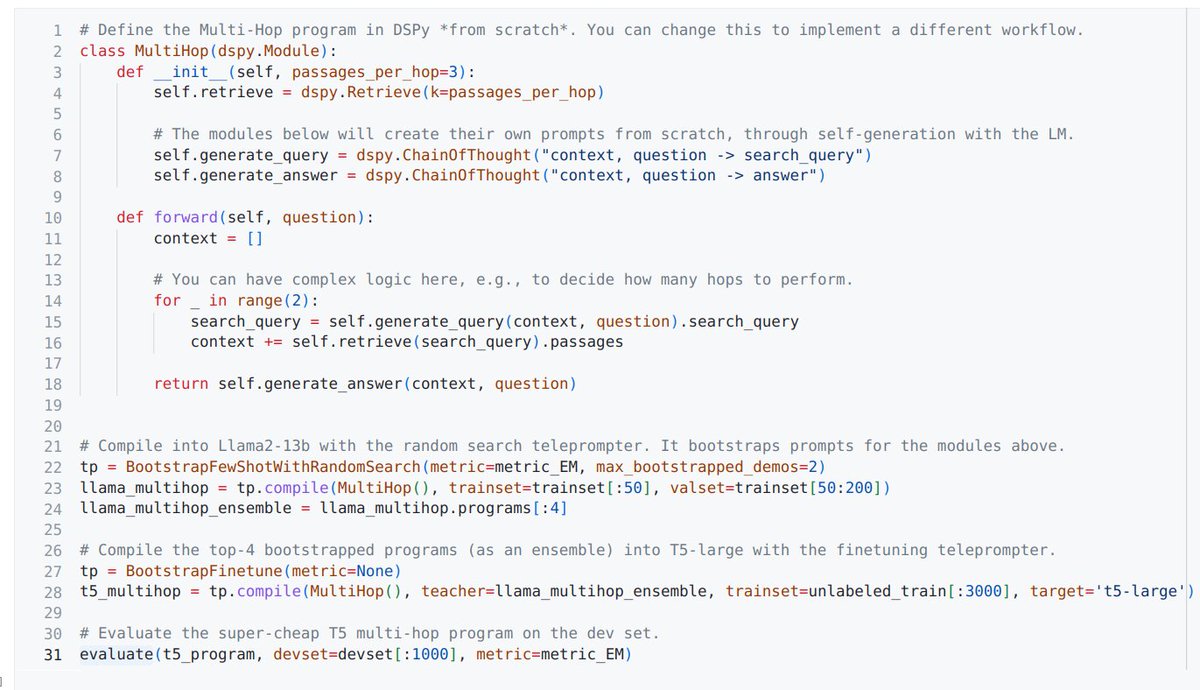

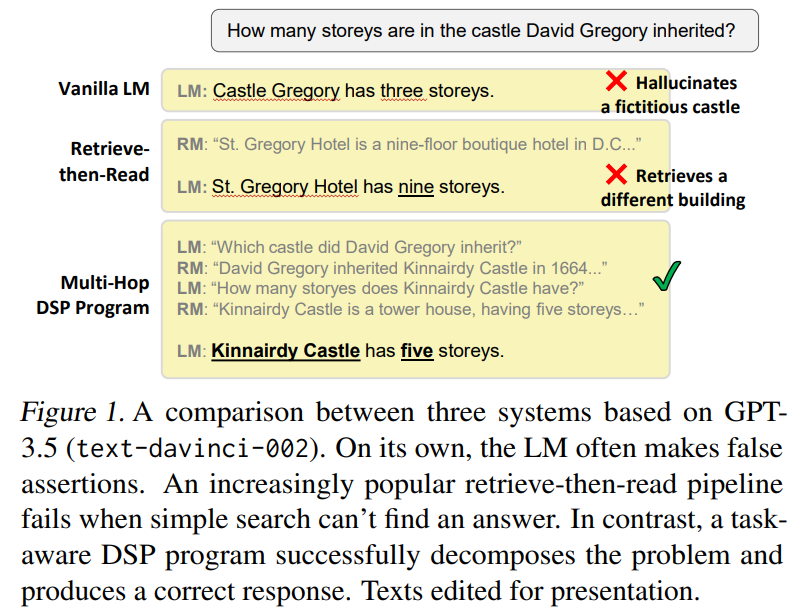

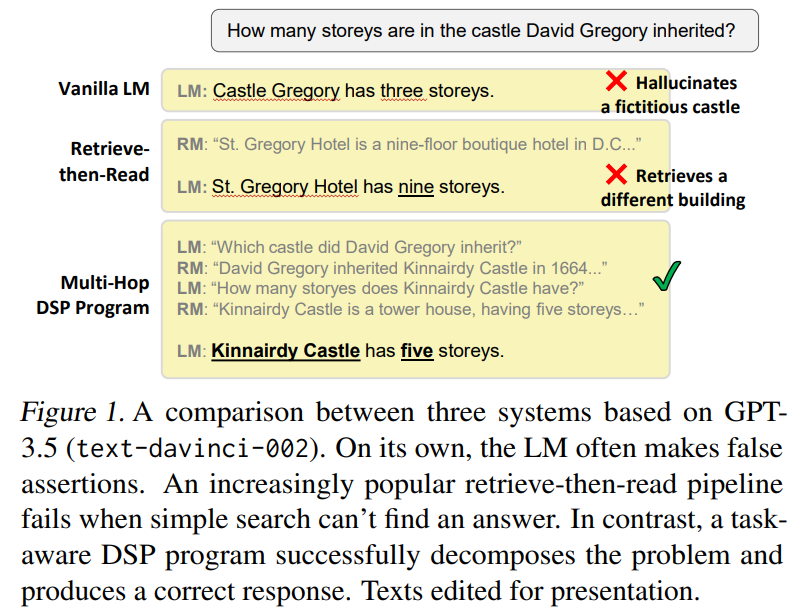

Instead of crafting a prompt for the LM, you write a short **DSP** program that assigns small tasks to the LM and a retrieval model (RM) in deliberate, powerful pipelines.
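To make the idea concrete, here is a minimal plain-Python sketch of such a program: a multi-hop pipeline in which the LM handles small steps (write a search query, then answer) and the RM handles retrieval. This is not the DSP library's API; `multihop_qa`, the `lm`/`rm` callable signatures, and the prompt strings are hypothetical, and the stub LM and RM exist only so the sketch runs.

```python
from typing import Callable, List

LM = Callable[[str], str]             # hypothetical: prompt -> completion
RM = Callable[[str, int], List[str]]  # hypothetical: (query, k) -> passages

def multihop_qa(question: str, lm: LM, rm: RM, hops: int = 2, k: int = 3) -> str:
    context: List[str] = []
    for _ in range(hops):
        # Small LM task: write a search query given the context gathered so far.
        query = lm(f"Context: {' | '.join(context)}\nQuestion: {question}\nSearch query:")
        # Small RM task: fetch top-k passages for that query, skipping repeats.
        for passage in rm(query, k):
            if passage not in context:
                context.append(passage)
    # Final small LM task: answer grounded in the retrieved context.
    return lm(f"Context: {' | '.join(context)}\nQuestion: {question}\nAnswer:")

# Stub components so the pipeline can run end to end (hypothetical behavior).
def fake_lm(prompt: str) -> str:
    return "Paris" if prompt.endswith("Answer:") else "capital of France"

rm_calls: List[str] = []

def fake_rm(query: str, k: int) -> List[str]:
    rm_calls.append(query)
    return ["Paris is the capital of France."][:k]

print(multihop_qa("What is the capital of France?", fake_lm, fake_rm))
```

Each step is a narrow, inspectable call, so swapping in a different LM or RM changes a component, not the whole prompt.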